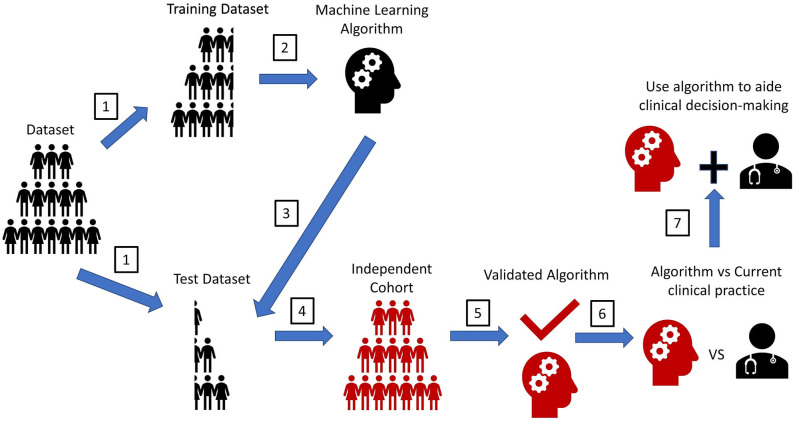

Figure 3.

Experimental workflow for machine learning algorithms. A pipeline diagram for the ideal machine learning (ML) experiment, simplified into seven steps. (1) A retrospectively collected dataset with labeled outcomes is randomly split into a training set and a test set. How the split is made varies with the type of cross-validation used. (2) The ML is trained on the training set to predict the labeled outcome. (3) The ML's predictions are compared with the actual outcomes in the test set to determine prediction accuracy. (4) The gold standard for assessing ML performance is to test its prediction accuracy on another dataset that is completely independent of the original training dataset. (5) This step validates the strength of the ML's performance. (6) To assess the ML's clinical utility, a prospective trial should be conducted in which the ML's prediction accuracy for an outcome is compared with that of the current clinical standard of care (e.g., current scoring systems, physician prediction or diagnosis). If the ML's prediction accuracy is non-inferior to or better than that of the current standard of care, the ML should be used to assist in clinical decision-making. Ideally, the ML would be implemented in the electronic health record (EHR) to automatically extract pertinent data for each patient to aid decision-making, similar to how certain risk-stratification scores are automatically calculated in some EHR systems. This would help reduce the workload burden on clinical teams while possibly also improving the accuracy of clinical decision-making. Furthermore, because MLs interpret patient data through an automated process, they could help reduce potentially biased decisions made by different rounding ICU teams.
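As a rough illustration of steps (1) through (5), the following is a minimal sketch using only the Python standard library. Everything in it is hypothetical and for illustration only: the synthetic cohorts produced by `make_cohort`, the nearest-centroid classifier standing in for "the ML", and the single random holdout split. That split is only one of several schemes; k-fold cross-validation, for example, partitions the data differently. The second cohort, generated with a small feature shift, plays the role of the independent external dataset in step (4).

```python
import random

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Step 1: randomly split labeled rows into a training set and a test set (simple holdout)."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def fit_nearest_centroid(train):
    """Step 2: 'train' a minimal classifier by averaging the features of each outcome class."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(model, features):
    # Assign the outcome whose class centroid is closest (squared Euclidean distance).
    return min(model, key=lambda label: sum((a - b) ** 2 for a, b in zip(features, model[label])))

def accuracy(model, rows):
    """Steps 3 and 4: fraction of predictions that match the actual labeled outcomes."""
    correct = sum(predict(model, features) == label for features, label in rows)
    return correct / len(rows)

def make_cohort(n, shift, seed):
    """Hypothetical synthetic cohort: two numeric features per patient and a binary outcome."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        outcome = int(rng.random() < 0.5)
        center = 2.0 if outcome else 0.0
        rows.append(([rng.gauss(center + shift, 1.0), rng.gauss(center, 1.0)], outcome))
    return rows

# Steps 1-3: split the development cohort, train, and evaluate on the held-out test set.
development = make_cohort(500, shift=0.0, seed=1)
train, test = train_test_split(development, test_fraction=0.2, seed=42)
model = fit_nearest_centroid(train)
internal_acc = accuracy(model, test)

# Steps 4-5: evaluate the same, unchanged model on an independent (hypothetical) external cohort.
external = make_cohort(300, shift=0.3, seed=2)
external_acc = accuracy(model, external)

print(f"internal test accuracy: {internal_acc:.2f}")
print(f"external validation accuracy: {external_acc:.2f}")
```

The model is never refit on the external cohort; only its predictions are scored there. The sketch stops at step (5), since step (6) requires prospective clinical data rather than retrospective cohorts.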