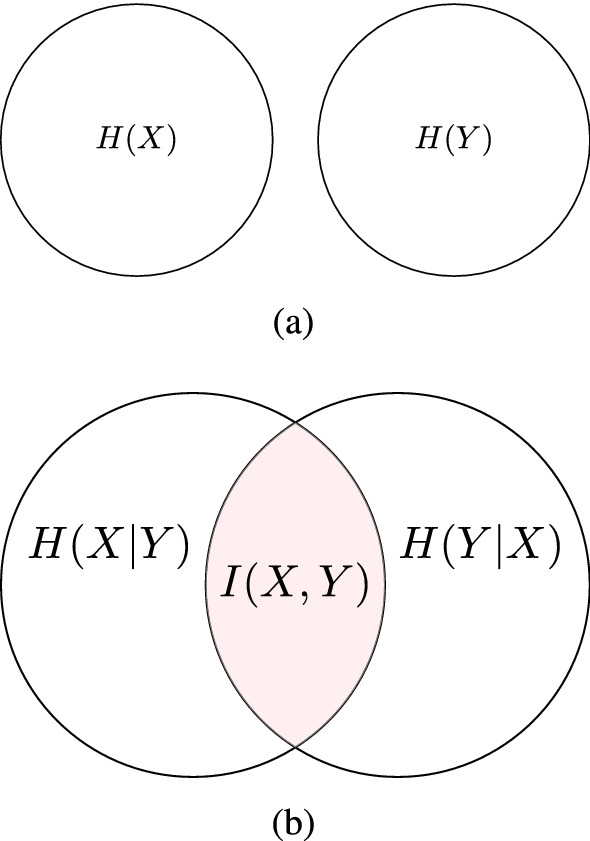

Figure 15.

This figure depicts the relationship between entropy and mutual information for two variables $X$ and $Y$. $I(X, Y)$ measures the amount of information that one variable contains about the other. (a) $I(X, Y) = 0$ if and only if $X$ and $Y$ are statistically independent; and (b) $I(X, Y) = H(X) - H(X \mid Y) = H(Y) - H(Y \mid X)$, which corresponds to the reduction in the entropy of one variable due to knowledge of the other. Hence, $I(X, Y)$ takes values in the interval $[0, \min\{H(X), H(Y)\}]$; the larger the value of $I(X, Y)$, the more strongly the two variables are related. This figure was created using the TikZ package (version 0.9f, 2018-11-19) in LaTeX, available at https://www.ctan.org/pkg/tikz-cd.
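The identities in the caption can be checked numerically. The sketch below assumes discrete variables with a known joint probability mass function and measures entropy in bits (base-2 logarithm). The function names `entropy`, `marginals` and `information_summary` are hypothetical helpers for illustration, not taken from the source. It computes $H(X)$, $H(Y)$, the conditional entropies, and $I(X, Y) = H(X) + H(Y) - H(X, Y)$, which is algebraically equal to $H(X) - H(X \mid Y)$.

```python
import math
from typing import Dict, Hashable, Iterable, Tuple

# A joint pmf maps (x, y) outcome pairs to probabilities summing to 1.
Joint = Dict[Tuple[Hashable, Hashable], float]


def entropy(probs: Iterable[float]) -> float:
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def marginals(joint: Joint) -> Tuple[Dict[Hashable, float], Dict[Hashable, float]]:
    """Sum the joint pmf over y to get p(x), and over x to get p(y)."""
    px: Dict[Hashable, float] = {}
    py: Dict[Hashable, float] = {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0.0) + p
        py[y] = py.get(y, 0.0) + p
    return px, py


def information_summary(joint: Joint) -> Dict[str, float]:
    """Return H(X), H(Y), H(X,Y), H(X|Y), H(Y|X) and I(X,Y) in bits."""
    px, py = marginals(joint)
    h_x = entropy(px.values())
    h_y = entropy(py.values())
    h_xy = entropy(joint.values())
    return {
        "H(X)": h_x,
        "H(Y)": h_y,
        "H(X,Y)": h_xy,
        "H(X|Y)": h_xy - h_y,  # chain rule: H(X,Y) = H(Y) + H(X|Y)
        "H(Y|X)": h_xy - h_x,  # chain rule: H(X,Y) = H(X) + H(Y|X)
        "I(X,Y)": h_x + h_y - h_xy,  # equals H(X) - H(X|Y) = H(Y) - H(Y|X)
    }


if __name__ == "__main__":
    # Case (a): independent fair bits, so I(X,Y) should be 0.
    independent = {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.25, (1, 1): 0.25}
    # Fully dependent case: Y = X, so I(X,Y) reaches its upper bound H(X).
    identical = {(0, 0): 0.5, (1, 1): 0.5}
    # A partially dependent case lies strictly between the two extremes.
    noisy = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
    for name, joint in [("independent", independent), ("identical", identical), ("noisy", noisy)]:
        print(name, information_summary(joint))
```

The `noisy` example illustrates the caption's interval: its mutual information is positive but smaller than $\min\{H(X), H(Y)\} = 1$ bit. Computing $I(X, Y)$ through $H(X) + H(Y) - H(X, Y)$ avoids building conditional distributions explicitly; the conditional entropies then follow from the chain rule.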