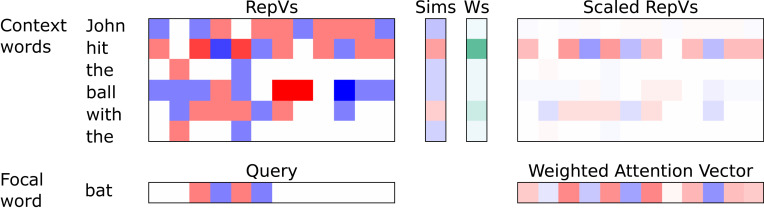

Fig. 3.

Query-based attention (QBA). To constrain the interpretation of the word “bat” in the context “John hit the ball with the __,” a query generated from “bat” can be used to construct a weighted attention vector, which shapes the word’s interpretation. The query is compared with each of the learned representation vectors (RepVs) of the context words; this creates a set of similarity scores (Sims), which, in turn, produce a set of weightings (Ws; a set of positive numbers summing to one). The Ws are used to scale the RepVs of the context words, creating Scaled RepVs. The weighted attention vector is the element-wise sum of the Scaled RepVs. The Query, RepVs, Sims, Scaled RepVs, and weighted attention vector use red color intensity for positive magnitudes and blue for negative magnitudes. Ws are shown as green color intensity. White = 0 throughout. The Query and RepVs were made up for illustration, inspired by ref. 24. Mathematical details: for query $\mathbf{q}$ and representation vector $\mathbf{v}_j$ for context word $j$, the similarity score is $s_j = \mathbf{q} \cdot \mathbf{v}_j$. The $s_j$ are converted into weightings by the softmax function, $w_j = e^{g s_j} / \sum_{j'} e^{g s_{j'}}$, where the sum in the denominator runs over all words $j'$ in the context span, and $g$ is a scale factor.
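The computation in the caption can be sketched in a few lines of plain Python: dot-product similarities, a softmax with scale factor $g$, and an element-wise sum of the scaled representation vectors. This is an illustrative sketch, not the authors' code. The function name `query_based_attention`, the default `g=1.0`, and the three-dimensional vectors for “John,” “hit,” and “ball” are hypothetical values chosen for this example; they are not the (also made-up) vectors shown in the figure.

```python
import math


def query_based_attention(query, rep_vectors, g=1.0):
    """Return (sims, weights, attention_vector) for one query over context RepVs.

    query: list of floats (the query q); rep_vectors: list of equal-length
    lists of floats (the RepVs v_j); g: softmax scale factor (assumed default).
    """
    # Similarity score s_j = q . v_j for each context word j
    sims = [sum(qi * vi for qi, vi in zip(query, v)) for v in rep_vectors]
    # Softmax with scale factor g; subtracting the max is a standard
    # numerical-stability trick that leaves the resulting weights unchanged
    m = max(g * s for s in sims)
    exps = [math.exp(g * s - m) for s in sims]
    total = sum(exps)
    weights = [e / total for e in exps]  # positive, summing to one
    # Scale each RepV by its weight, then sum the Scaled RepVs element-wise
    scaled = [[w * x for x in v] for w, v in zip(weights, rep_vectors)]
    attention = [sum(col) for col in zip(*scaled)]
    return sims, weights, attention


# Hypothetical query for "bat" and hypothetical RepVs for three context words
query = [1.0, 0.0, -1.0]
context = {
    "John": [0.2, 0.5, 0.1],
    "hit": [1.0, -0.3, -0.5],
    "ball": [0.8, 0.1, -0.9],
}
sims, weights, attention = query_based_attention(query, list(context.values()))
for word, s, w in zip(context, sims, weights):
    print(f"{word}: sim={s:.2f} weight={w:.3f}")
print("attention vector:", [round(x, 3) for x in attention])
```

With these made-up vectors, “ball” and “hit” receive most of the weight because their RepVs align best with the query. Raising `g` sharpens the weighting toward the single best match, while `g=0` gives every context word the same weight.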