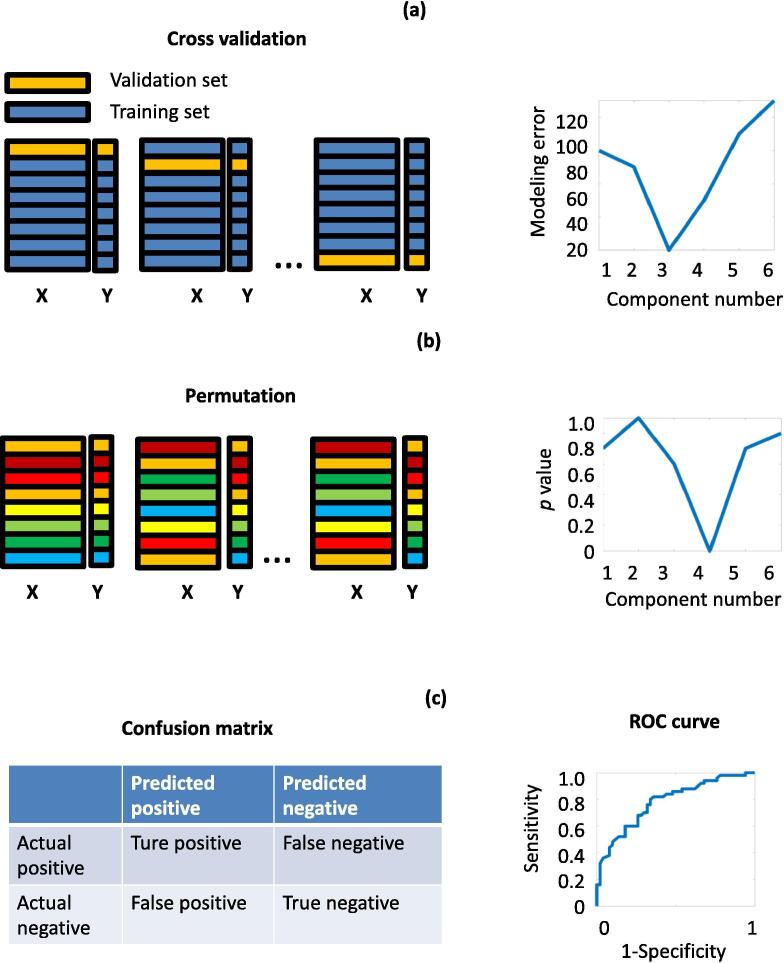

Fig. 3.

Validation methods for machine learning results, including cross-validation, the permutation test, the confusion matrix and the receiver operating characteristic (ROC) curve. Matrix X represents the spectral dataset and Y represents the classification/concentration information. (a) Cross-validation, illustrated with the Leave-One-Out (LOO) strategy. Each time, a single sample is left out and the remaining samples are used as a training set to build the multivariate model. This step is repeated until every sample has been left out once. The modelling error is calculated for multivariate models with different numbers of components (the plot on the right side). (b) The permutation test shuffles the samples in the X block, while the samples in the Y block remain in the same order as in the original data. Pseudo machine learning models are built on the shuffled data, and statistical tests are applied to compare the original model with these pseudo models. The permutation test can be applied with different parameters (e.g. the number of components), and only the models with p-values lower than 0.05 are considered statistically reliable (the plot on the right side). (c) Confusion matrix indicating the number of true positive, false positive, false negative and true negative samples predicted by the machine learning method. Specificity and sensitivity are calculated from the number of samples that fall into each category (Equations (1) and (2)). The ROC curve shows the relationship between specificity and sensitivity of the models as the threshold of the classifier is varied.
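As an illustration of panel (c), the following minimal Python sketch counts the four confusion matrix entries at a given classifier threshold, derives sensitivity and specificity, and sweeps the threshold to trace ROC points. It assumes the standard definitions, sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP), which Equations (1) and (2) are presumed to follow. The function names, labels and scores are hypothetical and are not taken from the figure.

```python
def confusion_counts(y_true, scores, threshold):
    """Count TP, FP, FN, TN when samples with score >= threshold are called positive."""
    tp = fp = fn = tn = 0
    for label, score in zip(y_true, scores):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 1:
            tp += 1
        elif predicted == 1 and label == 0:
            fp += 1
        elif predicted == 0 and label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def sensitivity_specificity(tp, fp, fn, tn):
    """Assumed standard definitions; both classes must be present in the data."""
    sensitivity = tp / (tp + fn)  # fraction of true positives recovered
    specificity = tn / (tn + fp)  # fraction of true negatives recovered
    return sensitivity, specificity


def roc_points(y_true, scores):
    """Sweep the threshold over every observed score, highest first.

    Returns (1 - specificity, sensitivity) pairs, starting at (0, 0).
    """
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        counts = confusion_counts(y_true, scores, threshold)
        sens, spec = sensitivity_specificity(*counts)
        points.append((1.0 - spec, sens))
    return points


# Hypothetical data: 1 = positive class, 0 = negative class.
y_true = [1, 1, 1, 0, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.1]

tp, fp, fn, tn = confusion_counts(y_true, scores, threshold=0.5)
sens, spec = sensitivity_specificity(tp, fp, fn, tn)
curve = roc_points(y_true, scores)
```

Plotting the pairs in `curve` with 1 − specificity on the horizontal axis and sensitivity on the vertical axis gives the usual ROC representation; lowering the threshold moves along the curve from (0, 0) towards (1, 1).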