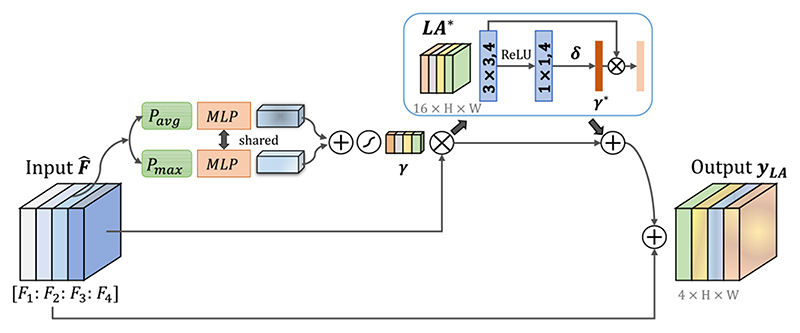

Fig. 4.

Structure of the proposed scale attention module with a residual connection. Its input is the concatenation of the interpolated feature maps obtained at different scales in the decoder. γ denotes the scale-wise attention coefficients. An additional spatial attention block LA* produces the pixel-wise scale attention coefficients γ*.
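The data flow in this figure can be sketched roughly as follows. This is a minimal NumPy illustration, not the authors' implementation. The function name `scale_attention`, the weight arguments `w1`, `w2`, and `w_spatial`, the use of global average pooling followed by a two-layer MLP to compute γ, the 1×1 channel projection standing in for LA*, and the exact form of the residual sum are all assumptions made for the example; the caption only fixes the input (concatenated multi-scale features), the two coefficients γ and γ*, and the presence of a residual connection.

```python
import numpy as np


def sigmoid(x):
    """Logistic function, maps real values into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def scale_attention(feats, w1, w2, w_spatial):
    """Hypothetical sketch of a scale attention module with a residual path.

    feats     : list of arrays, each (C, H, W), already interpolated to a
                common spatial size (one array per decoder scale).
    w1, w2    : MLP weights of shape (hidden, S*C) and (S*C, hidden)
                (assumed; used to compute gamma).
    w_spatial : vector of shape (S*C,), a 1x1 projection standing in
                for the spatial attention block LA* (assumed).
    """
    # Concatenate the multi-scale feature maps along the channel axis.
    x = np.concatenate(feats, axis=0)                     # (S*C, H, W)

    # Scale-wise coefficient gamma: global pooling -> MLP -> sigmoid.
    pooled = x.mean(axis=(1, 2))                          # (S*C,)
    gamma = sigmoid(w2 @ np.maximum(w1 @ pooled, 0.0))    # (S*C,)
    x_scaled = x * gamma[:, None, None]

    # Pixel-wise coefficient gamma*: channel projection -> sigmoid.
    gamma_star = sigmoid(np.einsum("c,chw->hw", w_spatial, x_scaled))

    # Residual connection: attended features plus the original input.
    out = x_scaled * gamma_star[None, :, :] + x
    return out, gamma, gamma_star


# Usage: four scales, four channels each, on a 8x8 grid.
rng = np.random.default_rng(0)
feats = [rng.standard_normal((4, 8, 8)) for _ in range(4)]
w1 = rng.standard_normal((8, 16)) * 0.1
w2 = rng.standard_normal((16, 8)) * 0.1
w_spatial = rng.standard_normal(16) * 0.1
out, gamma, gamma_star = scale_attention(feats, w1, w2, w_spatial)
```

In this sketch γ has one entry per concatenated channel and γ* has one entry per pixel, so the output keeps the shape of the concatenated input.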