Abstract

Objective

To evaluate the impact of a referral manager tool on primary care practices.

Materials and Methods

We evaluated a referral manager module in a locally developed electronic health record (EHR) that was enhanced to improve the referral management process in primary care practices. Baseline (n = 61) and follow-up (n = 35) provider and staff surveys focused on the ease of performing various steps in the referral process, confidence in completing those steps, and user satisfaction. Additional metrics were calculated that focused on completed specialist visits, acknowledged notes, and patient communication.

Results

Of 1341 referrals that were initiated during the course of the study, 76.8% were completed. All the steps of the referral process were easier to accomplish following implementation of the enhanced referral manager module in the EHR. Specifically, tracking the status of an in-network referral became much easier (+1.43 [3.91–2.48] on a 5-point scale, P < .0001). Although we found improvement in the ease of performing out-of-network referrals, there was a greater impact on in-network referrals.

Discussion

Implementation of an electronic tool developed using user-centered design principles along with adequate staff to monitor and intervene when necessary made it easier for primary care practices to track referrals and to identify if a breakdown in the process occurred. This is especially important for high-priority referrals. Out-of-network referrals continue to present challenges, which may eventually be helped by improving interoperability among EHRs and scheduling systems.

Conclusion

An enhanced referral manager system can improve referral workflows, leading to enhanced efficiency and patient safety and reduced malpractice risk.

Keywords: referral and consultation, quality of care, risk management, electronic health records, medical malpractice

BACKGROUND AND SIGNIFICANCE

Outpatient referrals represent an integral part of ambulatory care and involve multiple steps that require provider-to-provider and provider-to-patient communication, and the process is often frustrating to both providers and patients. Excellent communication around referrals is essential for physicians to provide high-quality care. However, the referral management process is especially prone to incomplete follow-up and communication breakdown. A large percentage of primary care physicians (PCPs) and specialists report that they do not receive necessary patient information in a timely fashion.1–3 Over a quarter of patients claim that they do not receive enough information prior to visiting a specialist or do not receive any follow-up after the appointment.4 One analysis of a national malpractice claims database involving outpatients (from 2006 to 2010) showed that errors in referral management made up almost a third of all diagnostic errors and incurred over $168 million in costs.5

Electronic referral management systems have the potential to improve the referral management process and, in particular, to improve its reliability. Users of these modules have reported improvements in access to specialty care,6–8 in communication between PCPs and specialists,8–14 in overall clinical care,7,12,14,15 and in physician satisfaction.9,11,13–15 The referral module at our large integrated delivery system was initially developed in 2003, and it allowed PCPs and staff to initiate referrals online and to deliver pertinent material to specialists.16 Physicians who used the module within this system were 3 times more likely to communicate the necessary information to specialists than physicians who did not use the module.17 There was also a 19-percentage-point increase (from 50% to 69%) in communication from specialists back to intervention PCPs.3 Although these findings showed a positive trend, multiple failure points continued to exist. There continues to be a need to close the communication loop between PCPs and specialists, since any failure to follow through with an important referral can be catastrophic. Closed-loop control would allow all practices involved in the referral to access each other’s information and provide timely and appropriate feedback.18

CRICO, a national medical malpractice insurer, through evaluations of malpractice data and medical office practices, identified best practice steps for the referral management process5: (1) a referral is ordered by the provider; (2) the practice/patient schedules the referral appointment; (3) the referring provider’s office reconciles the referral against the consult report to identify missed appointments; (4) missed appointments are reviewed with the ordering provider for appropriate follow-up; (5) the office contacts the patient to reschedule if necessary; (6) a note is placed in the medical record about missed/canceled/not rescheduled appointments; (7) the consult note is transmitted to the responsible provider in electronic or paper format; (8) the consult note is reviewed by the responsible provider and acknowledged; (9) the consult note is filed in the medical record and includes the provider’s acknowledgment; (10) the patient is notified of the consult report and any new treatment recommendations and knows who is responsible for coordination of care; and (11) system compliance and success with the prior 10 steps are audited and reported.

We sought to enhance a referral management module in a locally developed electronic health record (EHR) to address the above best practice steps and to evaluate the effect of the enhanced referral manager module on the referral process in primary care practices. We had the following hypotheses: (1) PCPs and their staff would find it easier to track referrals and to identify if a breakdown in the process occurred (eg, a patient did not make an appointment, or missed or cancelled a scheduled appointment); (2) users would have greater confidence that individual steps in the referral process would be completed; and (3) users would have greater satisfaction with the referral process. We also evaluated the usefulness of specific functions within the enhanced referral manager module and the performance of practices on 3 referral metrics: the percentage of referrals that resulted in a completed specialist encounter, the percentage of completed specialist encounters for which the specialist’s note was received and acknowledged by the referring clinician, and the percentage of completed encounters for which there was documentation of communication to the patient of the follow-up plan and the responsible clinician.

MATERIALS AND METHODS

We enhanced the Referral Manager module in the Longitudinal Medical Record, Partners HealthCare’s in-house developed EHR, to meet CRICO’s best practice steps as well as the requirements for both the Centers for Medicare and Medicaid Services EHR Incentive Program (Meaningful Use stage 2) and the National Committee for Quality Assurance Patient-Centered Medical Home program.

We followed a user-centered design process19 that included user feedback from the initial design version, focus groups, prototype development, iterative feedback and prototype modifications, and post-release tunings and additional enhancements. We followed many of the principles described by Sittig and Singh20 in an 8-dimensional sociotechnical model for the evaluation of health information technology solutions applied to complex health care systems. The model focuses on the challenges involved in the design, development, implementation, and monitoring of health information technology. This model was applied to the outpatient referral process, and 10 best practice recommendations were published that focus on the dimensions of hardware and software, human-computer interface, clinical content, people involved in the referral process (PCPs, specialists, and patients), workflow and communication, organization policies and procedures, and measurement and monitoring.18

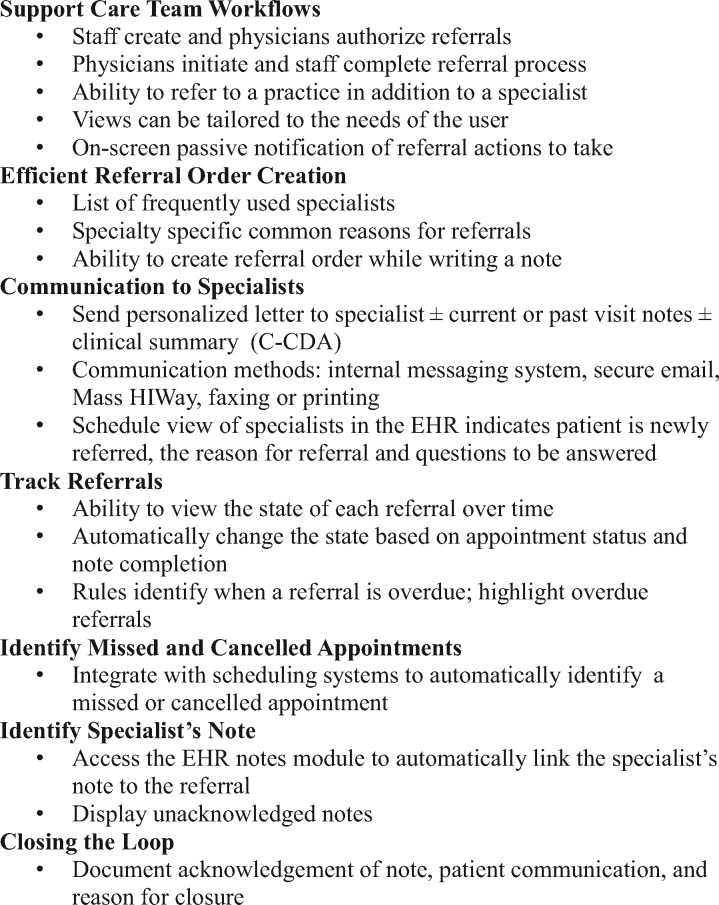

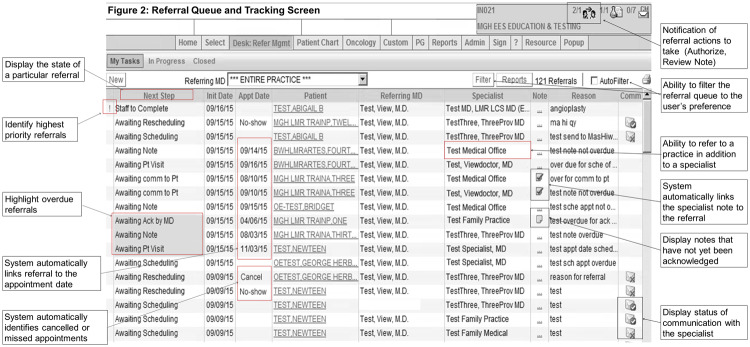

Figure 1 lists the key design considerations and associated functions used in the development of the enhanced referral management module. Figure 2 presents the main referral queue and tracking screen with examples of specific functionalities developed to meet the key design considerations.

Figure 1. Key design considerations

Figure 2. Referral queue and tracking screen

The enhanced Referral Manager module was piloted at 7 primary care clinics for a period of 6 months (September 2014 to February 2015). One practice switched to a new EHR system partway through the project and dropped out, leaving 6 practices that were included in the analysis. One practice was a solo practice, 4 were community-based group practices, and 1 was a hospital-based ambulatory practice. All were located in suburbs around the Boston, Massachusetts, area.

We administered a baseline survey to identify the characteristics of participants, including role (whether the user was a referring clinician, such as physician, physician assistant, or nurse practitioner or supporting staff, such as registered nurse, medical assistant, practice manager, or administrator), years in health care, average number of referrals involved with each month, and percentage of referrals that the respondent would consider high priority. The ease of performing various steps in the referral process for in-network referrals (ie, referrals to specialists within the integrated delivery network) and the confidence that those steps would be completed were evaluated on a 5-point scale, from easy to difficult. A subset of questions also focused on external referrals, ie, referrals outside of the network. Overall satisfaction was measured on a 7-point scale, from very satisfied to very dissatisfied. After 6 months of use, a follow-up survey was administered that included the baseline questions as well as the usefulness of functions on a 5-point scale, from very useful to not at all useful. To compare the characteristics of participants between the pre- and post-surveys, chi-square or Fisher’s exact test (for variables with low numbers) was used. The Wilcoxon exact test (2-sided) was used to compare the averages of baseline and follow-up survey results and to compare the in-network and out-of-network changes from baseline to follow-up. For this latter analysis, the variable “provide info to specialist” was calculated by taking the average of the responses to “provide reason for referral” and “provide clinical information.” Similarly, identifying missed or cancelled appointments also used the average of responses to the 2 questions of identifying missed and cancelled appointments. All analyses were done with SAS v. 9.4.20,21
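The analyses in this study were run in SAS. Purely as an illustration of the two calculations described above, the following Python sketch shows how a composite item can be formed by averaging two responses and how an exact 2-sided Wilcoxon rank-sum P-value can be obtained by enumerating every possible group assignment. The function names and the tiny example data are assumptions made for this sketch, not the study's code or data, and full enumeration is only practical for very small samples.

```python
from itertools import combinations


def composite(item_a, item_b):
    """Average two 5-point responses into one composite score (illustrative helper)."""
    return (item_a + item_b) / 2


def midranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def exact_rank_sum_p(group_a, group_b):
    """Exact 2-sided Wilcoxon rank-sum P-value by full enumeration.

    Counts the share of all ways to pick len(group_a) observations from the
    pooled sample whose rank sum is at least as far from its expected value
    as the observed rank sum.
    """
    pooled = list(group_a) + list(group_b)
    ranks = midranks(pooled)
    n, m = len(pooled), len(group_a)
    expected = m * (n + 1) / 2
    observed_deviation = abs(sum(ranks[:m]) - expected)
    total = extreme = 0
    for indices in combinations(range(n), m):
        total += 1
        deviation = abs(sum(ranks[i] for i in indices) - expected)
        if deviation >= observed_deviation - 1e-9:
            extreme += 1
    return extreme / total


# Hypothetical ratings (1 = easy, 5 = difficult), not study data.
baseline = [composite(4, 5), composite(4, 4), composite(5, 5)]
follow_up = [composite(2, 3), composite(1, 2), composite(3, 3)]
p_value = exact_rank_sum_p(baseline, follow_up)
```

With samples the size of the actual surveys, an exact test would rely on a statistical package rather than brute-force enumeration.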

Additionally, metrics were recorded to assess how thoroughly providers were using the module. The system tracked the percentage of referrals in which the specialist note was completed and attached to the referral, the percentage of PCPs who acknowledged the note when it was completed, and the percentage of physicians who communicated the results of the referral to the patient when the note was completed. This communication occurred external to the system. Documentation of note acknowledgement and patient communication occurred via checkboxes. Furthermore, the total number of referrals, the top specialties referred to, and the number of closed referrals were recorded for each practice. The final report on the metrics was run 6 months following the conclusion of the pilot period in order to allow closure of the referrals.
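As a minimal sketch of how such metrics could be derived from referral records, the Python example below computes the share of referrals that were closed and, among closed referrals (the denominator used in Table 4), the percentages with an attached specialist note, an acknowledged note, and documented patient communication. The record fields and function name are hypothetical and do not reflect the module's actual data model.

```python
from dataclasses import dataclass


@dataclass
class ReferralRecord:
    # Hypothetical fields; the real module's schema is not described in the text.
    closed: bool
    note_attached: bool = False
    note_acknowledged: bool = False
    patient_informed: bool = False


def referral_metrics(records):
    """Summarize usage metrics, with percentages rounded to one decimal place."""
    closed = [r for r in records if r.closed]

    def pct_of_closed(field_name):
        if not closed:
            return None  # Undefined when no referral has been closed.
        hits = sum(1 for r in closed if getattr(r, field_name))
        return round(100 * hits / len(closed), 1)

    return {
        "n_total": len(records),
        "n_closed": len(closed),
        "pct_closed": round(100 * len(closed) / len(records), 1) if records else None,
        "pct_note": pct_of_closed("note_attached"),
        "pct_ack": pct_of_closed("note_acknowledged"),
        "pct_pt_comm": pct_of_closed("patient_informed"),
    }


# Invented example records, not study data.
example = [
    ReferralRecord(closed=True, note_attached=True, note_acknowledged=True, patient_informed=True),
    ReferralRecord(closed=True, note_attached=True, note_acknowledged=True),
    ReferralRecord(closed=True),
    ReferralRecord(closed=False),
]
summary = referral_metrics(example)
```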

Institutional Review Board approval was obtained for this project.

RESULTS

Among the 6 primary care practices, we received a total of 61 pre-surveys (response rate: 67.8%) and 35 post-surveys (response rate: 45.5%). Each practice filled out between 5 and 18 pre-surveys and between 1 and 11 post-surveys. Nineteen participants filled out both surveys (Table 1). There were no significant differences between the groups regarding role, years in health care, referrals per month, and percentage of high-priority referrals.

Table 1. Characteristics of participants

| Characteristic | Pre-survey, n (%) | Post-survey, n (%) | P-value |
|---|---|---|---|
| Role | | | .14 |
| Referring clinician | 20 (32.8) | 17 (48.6) | |
| Staff | 41 (67.2) | 18 (51.4) | |
| Years in health care | | | .76 |
| 0–10 | 38 (65.5) | 19 (57.6) | |
| 11–20 | 10 (17.2) | 6 (18.2) | |
| 21–30 | 5 (8.6) | 5 (15.2) | |
| 31–40 | 5 (8.6) | 3 (9.1) | |
| Referrals per month | | | .62 |
| 0–25 | 28 (51.9) | 18 (51.4) | |
| 26–50 | 11 (20.4) | 10 (28.6) | |
| 51–75 | 4 (7.4) | 4 (11.4) | |
| 76–100 | 5 (9.3) | 1 (2.9) | |
| >100 | 6 (11.1) | 2 (5.7) | |
| Percent high priority | | | .11 |
| 0–25 | 37 (74.0) | 29 (85.3) | |
| 26–50 | 4 (8.0) | 0 (0.0) | |
| 51–75 | 5 (10.0) | 5 (14.7) | |
| 76–100 | 4 (8.0) | 0 (0.0) | |

After using the Enhanced Referral Manager module, respondents reported that virtually all the steps in the referral process were easier to accomplish (Table 2). Those that were statistically significant included in-network referral tasks such as identifying when an appointment was not made with the specialist (Δ = 0.90, P = .006), identifying when a scheduled appointment was missed (Δ = 0.71, P = .012) or cancelled (Δ = 0.75, P = .003), documenting a missed or cancelled appointment (Δ = 0.78, P = .003), documenting that a consult note was acknowledged (Δ = 1.06, P = .0004), and notifying the patient of the responsible provider for follow-up (Δ = 0.53, P = .036). Tracking the status of the referral (Δ = 1.43, P < .0001) was shown to have the greatest change in ease of use. While initially only 9% of respondents rated tracking the status of an in-network referral as “easy” or “fairly easy,” 45% rated it this way after using the module. Although all the steps of an out-of-network referral were rated as being easier following implementation of the Enhanced Referral module, none reached statistical significance.

Table 2. Difficulty of steps in the referral process

| Step in the Referral Process | Baseline^a | Follow-up^a | P-value |
|---|---|---|---|
| In-network referrals | | | |
| Create referral | 1.94 | 1.85 | .586 |
| Schedule appointment | 2.76 | 2.63 | .439 |
| Provide reason for referral | 1.94 | 1.71 | .598 |
| Provide clinical information | 1.82 | 1.63 | .465 |
| Identify when appointment not made | 3.37 | 2.47 | .006 |
| Identify missed appointment | 3.62 | 2.91 | .012 |
| Identify cancelled appointment | 3.96 | 3.21 | .003 |
| Document missed/cancelled appointment | 3.57 | 2.79 | .003 |
| Reschedule appointment | 3.15 | 2.74 | .095 |
| Find and review consult note | 1.88 | 1.88 | .834 |
| Document acknowledgment of consult note | 3.00 | 1.94 | .0004 |
| Notify patient of recommendations | 2.70 | 2.29 | .087 |
| Notify patient of responsible provider | 2.80 | 2.27 | .036 |
| Track status of referral | 3.91 | 2.48 | <.0001 |
| Out-of-network referrals | | | |
| Track status of a referral | 4.11 | 3.91 | .359 |
| Schedule an appointment | 3.30 | 3.11 | .450 |
| Provide reason for a referral and clinical information | 3.12 | 2.91 | .504 |
| Identify missed or cancelled appointment | 4.29 | 4.14 | .325 |
| Obtain a consult note | 3.76 | 3.46 | .173 |

^a Values are average scores between 1 and 5, where 1 = easy and 5 = difficult.
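The Δ values quoted in the text for the statistically significant in-network steps correspond to the baseline mean minus the follow-up mean, so a positive Δ means the step was rated easier. The short Python sketch below reproduces that arithmetic from the means transcribed from Table 2; the variable and function names are illustrative only.

```python
# (baseline mean, follow-up mean) for in-network steps, transcribed from Table 2.
# Scores run from 1 (easy) to 5 (difficult).
significant_in_network_steps = {
    "Identify when appointment not made": (3.37, 2.47),
    "Identify missed appointment": (3.62, 2.91),
    "Identify cancelled appointment": (3.96, 3.21),
    "Document missed/cancelled appointment": (3.57, 2.79),
    "Document acknowledgment of consult note": (3.00, 1.94),
    "Notify patient of responsible provider": (2.80, 2.27),
    "Track status of referral": (3.91, 2.48),
}


def ease_change(baseline, follow_up):
    """Return baseline minus follow-up, rounded to 2 decimals (positive = easier)."""
    return round(baseline - follow_up, 2)


changes = {
    step: ease_change(baseline, follow_up)
    for step, (baseline, follow_up) in significant_in_network_steps.items()
}
largest_step = max(changes, key=changes.get)

for step, delta in changes.items():
    print(f"{step}: {delta:.2f}")
```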

Performing steps in the referral process (Table 3) was easier for in-network referrals than out-of-network referrals at both baseline and follow-up (with a statistically significant difference for all steps other than tracking referrals at baseline). The module improved the identification of missed or cancelled appointments significantly more for in-network than for out-of-network referrals (Δ = 0.79 vs 0.21, P = .0324), and likewise the tracking of referrals (Δ = 1.44 vs 0.29, P < .0001).

Table 3. Comparison of changes between in-network and out-of-network referrals

| Step in the Referral Process | Baseline^a: in network | Baseline^a: out of network | Baseline P-value | Follow-up^a: in network | Follow-up^a: out of network | Follow-up P-value | Difference: in network | Difference: out of network | Difference P-value |
|---|---|---|---|---|---|---|---|---|---|
| Schedule appointment | 2.74 | 3.27 | .0003 | 2.63 | 3.08 | .0023 | 0.11 | 0.19 | .7196 |
| Provide info to specialist | 1.95 | 3.18 | <.0001 | 1.65 | 2.80 | <.0001 | 0.30 | 0.39 | .6770 |
| Identify missed or cancelled appointment | 3.46 | 4.31 | <.0001 | 2.67 | 4.10 | <.0001 | 0.79 | 0.21 | .0324 |
| Receive consult note | 1.87 | 3.76 | <.0001 | 1.88 | 3.48 | <.0001 | −0.005 | 0.29 | .3123 |
| Track referrals | 3.96 | 4.20 | .0857 | 2.52 | 3.91 | <.0001 | 1.44 | 0.29 | <.0001 |

^a Values are average scores between 1 and 5, where 1 = easy and 5 = difficult.

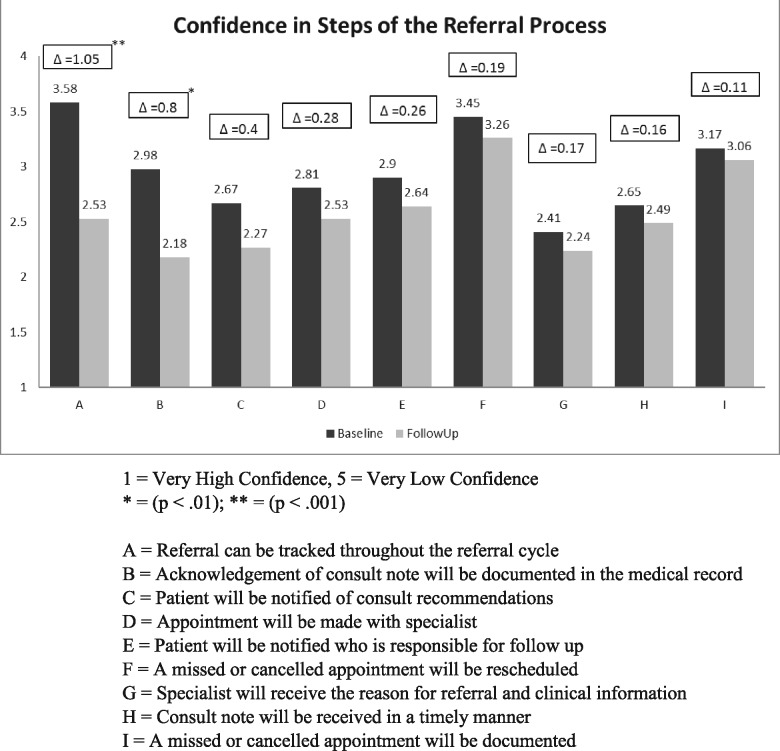

Respondents also stated that they had greater confidence in tracking referrals throughout the referral process after using the module (Figure 3). Before implementation of the module, 56% of respondents stated that they had “low” or “very low” confidence in tracking referrals, while only 18% had “high” or “very high” confidence (average score = 3.58). These results changed to 13% and 47%, respectively, after using the module, with the average score improving to 2.53 (P < .001).

Figure 3. Change in confidence in completing the referral process

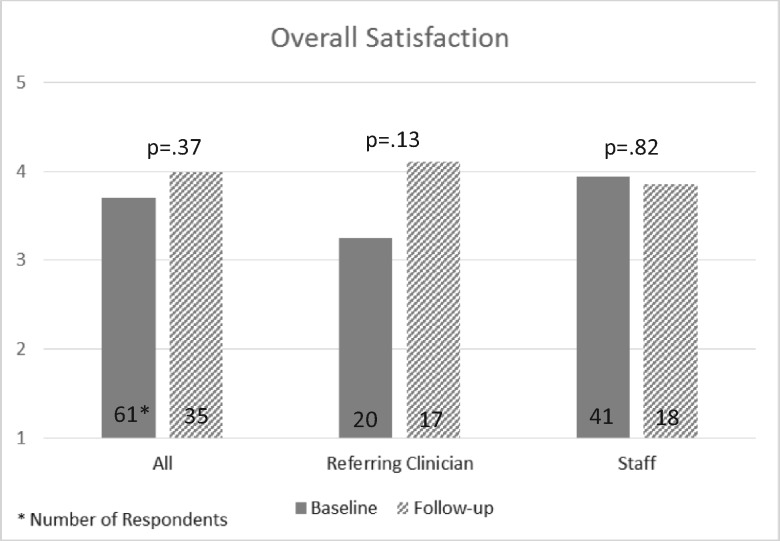

Figure 4 illustrates the change in satisfaction among all users, stratified by role type: referring clinicians and staff. Overall, satisfaction scores improved by 0.29 points, but the change was not statistically significant. When analyzed by role type, the satisfaction change among referring clinicians was 0.87 (a large effect size), compared to essentially no change among staff.

Figure 4. Overall satisfaction

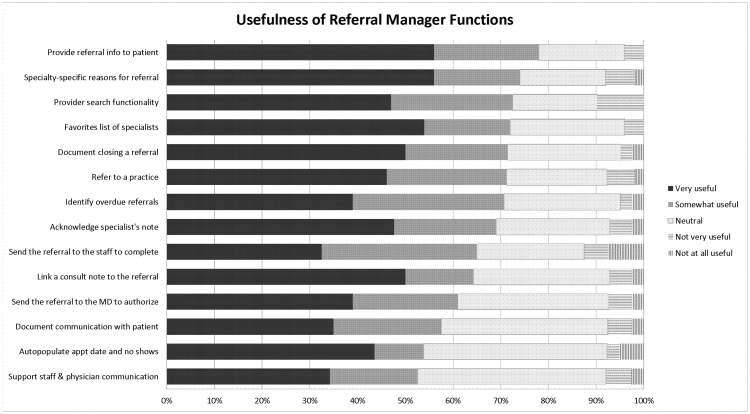

As summarized in Figure 5, 14 main functions were found by >50% of users to be very useful or somewhat useful. None of the functions were rated “not very” or “not at all” useful by >16% of respondents. The top functions found to be very or somewhat useful were having the ability to: provide referral information to the patient (78%), select reasons for referral from a list that was specific to the specialty selected (74%), search through a directory of specialists (73%), create a favorite list of specialists who are commonly referred to (72%), document the closing of a referral (71%), refer to a practice in addition to a particular specialist (71%), and identify referrals that were overdue (71%).

Figure 5. Usefulness of referral manager functions

Usage and referral metrics of the 6 practices are summarized in Table 4. The metrics (last 3 columns in the table) are reported as percentages of closed referrals. There was a large variation in usage of the module at the practices (11–805 referrals created for the 6-month period from September 2014 through February 2015). Toward the beginning of the pilot period, practice 4 had staffing issues, which greatly reduced their ability to implement the referral module and led to low usage. Overall, the top specialties referred to were gastroenterology, cardiology, and neurology. A specialist encounter was completed for about two-thirds of referrals (avg: 65.7%, range: 9.1%–82.9%), the consult note was acknowledged by the referring clinician about three-quarters of the time (avg: 76.5%, range: 38.5%–86.5%), and information was communicated to the patient by the referring clinician <10% of the time (avg: 8.4%; range: 0.3%–54.5%).

Table 4. Usage and referral metrics by practice

| Practice | Total no. of referrals | Top 3 specialties | No. closed (%) | % Note | % Ack | % Pt Comm |
|---|---|---|---|---|---|---|
| Practice 1 | 805 | GI, Card, Derm | 556 (69) | 82.9 | 86.5 | 5 |
| Practice 2 | 359 | Neuro, GI, Card | 341 (95) | 22.9 | 42.2 | 0.3 |
| Practice 3 | 113 | Neuro, GI, Card | 88 (78) | 29.7 | 38.5 | 15.4 |
| Practice 4 | 11 | Card | 11 (100) | 9.1 | 63.6 | 54.5 |
| Practice 5 | 157 | GI, Card, Uro | 99 (63) | 67.7 | 77.8 | 31.3 |
| Practice 6 | 49 | GI, Card, Neuro | 28 (57) | 32.1 | 50 | 17.9 |
| Total | 1341 | GI, Card, Neuro | 1030 (76.8) | 65.7 | 76.5 | 8.4 |

Abbreviations: GI: gastroenterology; Card: cardiology; Derm: dermatology; Neuro: neurology; Uro: urology; % Note: % of closed referrals that had a specialist note completed; % Ack: % of closed referrals where the specialist note was acknowledged by the referring clinician; % Pt Comm: % of closed referrals where the referral plan was communicated to the patient.

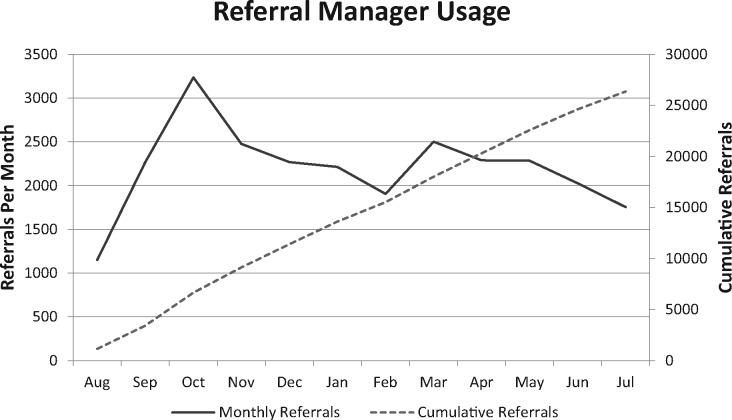

Following the period of the pilot study, >60 additional practices have implemented the referral module. Figure 6 shows overall usage of the module from August 2014 through July 2015. During that period, a total of 26 368 referrals were ordered using the module, with an average of 2197 referrals per month.

Figure 6. Referral manager usage

DISCUSSION

We enhanced a referral module in a locally developed EHR to provide improved intra-office workflow support, improved specialist selection and communication of referral information, automated referral tracking capabilities, identification of breakdowns in the referral process, better ability to close the loop on referrals, and advanced reporting tools. We found that implementation of an enhanced referral manager system in primary care practices made it easier for PCPs and their staff to track referrals to specialists, to identify if a breakdown in the referral process occurred, and to have greater confidence in being able to track referrals throughout the referral cycle. This suggests improvement in both efficiency and safety.

We identified primary care practices that were interested in piloting this module. Much of the impetus for participation included being able to meet National Committee for Quality Assurance requirements for a patient-centered medical home as well as Centers for Medicare and Medicaid Services Meaningful Use stage 2 requirements. All the pilot practices prior to this study were using a mixture of paper and electronic processes for their referral workflows. None previously used the electronic referral module in the EHR. Surveying the clinical and nonclinical staff who were involved in the primary care practices’ referral processes, we found a large baseline variation in the ease of performing various steps and important differences between in-network and out-of-network referrals. Steps that were relatively easy to perform for in-network referrals included referral creation and bilateral communication with the specialist, while the more difficult steps included tracking referrals and identifying when a breakdown in the process occurred. For out-of-network referrals, there were no referral actions that the majority of respondents rated as easy or fairly easy. The difficult steps for out-of-network referrals were similar to those for in-network referrals (eg, identifying missed or cancelled appointments, tracking the status of the referral). In addition, obtaining the consult note was very difficult with referrals to out-of-network providers.

Following implementation of the enhanced referral module, all the more difficult steps of in-network referrals showed a large, statistically significant improvement in ratings of ease of performance. For example, tracking the status of a referral became significantly easier (a 36% improvement in the mean rating). However, for out-of-network referrals, while the ratings did improve for all the steps, none of these changes were statistically significant.

Reasons for the large improvement for in-network referrals and the differential improvement between in-network and out-of-network referrals may be explained by the scope of the functionality implemented. A new practice-based view of all patient referrals included the status of where the patient is in the referral cycle. Whether a patient is awaiting the scheduling of a new appointment or rescheduling of an appointment that was missed or cancelled is easily viewed by the user. The system is integrated with the scheduling systems utilized at the practices and can automatically identify if an appointment was made (thereby changing the referral status to awaiting specialist appointment) or if one was missed or cancelled (and changing the status to needing rescheduling). In addition, rules were implemented to highlight referrals when the patient remained in a stage of the referral for an extended period of time. These rules depended on the urgency that was indicated at the time the referral was created. In addition, integrating with the notes module in the EHR allowed the referral module to automatically link the consultant’s note to the referral. The referring clinician thereby had one-click access to review the specialist’s note directly from the practice-based view of referrals and then acknowledge the note in the record and acknowledge communication with the patient about the follow-up plan and who would follow up, thus completing the closing-the-loop best practice step as described by CRICO.5
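The status logic described above can be pictured as a small state machine driven by scheduling events, plus an urgency-dependent rule that flags referrals that have sat in one stage for too long. The Python sketch below is a simplified illustration under stated assumptions: the status names, event names, and day thresholds are hypothetical and are not taken from the actual Referral Manager implementation.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Status(Enum):
    AWAITING_SCHEDULING = "awaiting scheduling"              # hypothetical initial stage
    AWAITING_APPOINTMENT = "awaiting specialist appointment"
    NEEDS_RESCHEDULING = "needs rescheduling"


# Hypothetical thresholds: days a referral may remain in one stage before
# it is highlighted. The real rules and values are not given in the text.
HIGHLIGHT_AFTER_DAYS = {"urgent": 3, "routine": 14}


@dataclass(frozen=True)
class TrackedReferral:
    urgency: str         # "urgent" or "routine" (assumed categories)
    status: Status
    status_since: date   # date the referral entered its current stage


def apply_scheduling_event(referral, event, on):
    """Return the referral with the status implied by a scheduling-system event."""
    if event == "scheduled":
        new_status = Status.AWAITING_APPOINTMENT
    elif event in ("missed", "cancelled"):
        new_status = Status.NEEDS_RESCHEDULING
    else:
        raise ValueError(f"unknown scheduling event: {event}")
    return TrackedReferral(referral.urgency, new_status, on)


def should_highlight(referral, today):
    """True when the referral has stayed in its stage beyond its urgency threshold."""
    days_in_stage = (today - referral.status_since).days
    return days_in_stage > HIGHLIGHT_AFTER_DAYS[referral.urgency]


# Invented example: an urgent referral that is scheduled late and then missed.
referral = TrackedReferral("urgent", Status.AWAITING_SCHEDULING, date(2014, 9, 1))
flag_before_scheduling = should_highlight(referral, date(2014, 9, 5))
referral = apply_scheduling_event(referral, "scheduled", date(2014, 9, 5))
referral = apply_scheduling_event(referral, "missed", date(2014, 9, 20))
```

Keeping the event handling separate from the highlighting rule mirrors the division in the text between automatic status updates from the scheduling system and the time-based rules tied to urgency.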

Integration with scheduling systems of out-of-network providers is much more difficult and was not done for this project. Thus, identifying when an appointment was not made, missed, or cancelled remained a manual effort by the referring practice. However, communication with out-of-network providers was enhanced by providing the ability to send the referral information along with pertinent parts of the medical record using the Mass HIway, the state electronic health information exchange in Massachusetts. Although not statistically significant, this may explain why the largest change (0.21 on a 5-point scale) for out-of-network referrals was in being able to provide a reason for the referral and associated clinical information. At the time of this project, many specialists were not yet in the state’s physician directory and were not available to receive information electronically through the health information exchange.

The top functions providers felt were useful focused on initiation and documentation of the referral in the office as well as improved tracking of the referral. Common to these functions is the ability to make data entry efficient, to automate previously manual steps, and to assist in identifying problems that occur in the referral process.

Overall, about three-quarters of referrals were completed during this study. Reasons for noncompletion of a referral included: an appointment was not scheduled, an appointment was scheduled but was missed, the specialist’s note was not received and linked to the referral, or the referral was manually completed but that information was not entered into the EHR. About two-thirds of notes were electronically linked to referrals. Some reasons for not being able to electronically link a referral included: out-of-network referral, or the referral note contained an incorrect date that did not match the actual referral appointment date.

Referring physicians acknowledged about three-quarters of notes in the referral system. Some of these notes (unknown percentage) were manually reviewed but not electronically acknowledged. The low percentage of documentation of communication with patients (8.4%) was not unexpected, since many PCPs felt that communication with their patients about the referral plan would be done by the specialist physician. However, it is important to note that from the malpractice insurer’s perspective, the referring physician has the responsibility for following up with the patient.

We did not identify a significant difference in satisfaction scores overall. However, there was a difference in the change in scores between referring clinicians (+0.86) and staff (−0.08). In discussions with the practices, it was apparent that additional time was required by the staff to monitor the referral queue and follow up as needed, and while they almost uniformly felt that patients were getting better follow-up and attention, the lack of improvement in satisfaction may reflect the added workload. Overall, the experience of practices was very positive, and this was reflected in the fact that post-pilot, practices continued to use the module at a rate of 2000–2500 referrals per month.

LIMITATIONS

This study was done using an in-house developed EHR, which allowed for much flexibility in adding functionality, iteratively obtaining user feedback, and then performing enhancements. Performing such a process using other EHRs would either take a protracted time or not be possible at all. In addition, the study was performed within primary care practices within a single integrated health care system. Although these practices were of varying sizes and had different physician-staff workflows, all were in suburban settings. This may limit the generalizability of these results to other settings. However, we believe that the key functions of a highly usable application (supporting care team workflows and efficient referral creation, expediting bilateral communication between referring physicians and specialists, tracking and automatically updating the status of each referral over time, allowing for closing the loop on a referral, and identifying when a breakdown in the referral process has occurred) can be enhanced in other EHRs and implemented in other settings.

CONCLUSION

We found that enhancing the electronic referral application in an EHR improved the tracking of referrals by primary care practices and enabled identification of when breakdowns in the referral process occurred. Staff time is required to adequately monitor and follow up referrals to improve quality and patient safety.

FUNDING

This work was supported by CRICO/Risk Management Foundation.

COMPETING INTERESTS

The authors have no competing interests to declare.

CONTRIBUTORSHIP

HR, AN, PN, and DK contributed to the conception and design of this work. HR, AN, PN, RK, and AA contributed to the acquisition of data for this work. HR, AN, SK, PN, DK, and DWB contributed to the analysis and interpretation of this work. HR, AN, and SK led drafting and writing the manuscript, and all co-authors reviewed and commented on the drafts. All authors gave final approval of the version to be published.

ACKNOWLEDGMENTS

The authors would like to thank Greg Kanevsky and Salavat Akhmetov for software engineering, Inna Natanel for user interface design, and Shimon Shaykevich for statistical support.

REFERENCES

- 1. O’Malley AS, Reschovsky JD. Referral and consultation communication between primary care and specialist physicians. JAMA Intern Med. 2011;171(1):56–65.

- 2. Stille CJ, McLaughlin TJ, Primack WA et al. Determinants and impact of generalist-specialist communication about pediatric outpatient referrals. Pediatrics. 2006;118(4):1341–49.

- 3. Gandhi TK, Sittig DF, Franklin M et al. Communication breakdown in the outpatient referral process. J Gen Intern Med. 2001;15(9):626–31.

- 4. Ireson CL, Slavova S, Steltenkamp CL et al. Bridging the care continuum: patient information needs for specialist referrals. BMC Health Serv Res. 2009;9:163.

- 5. Hoffman J. Managing Risk in the Referral Lifecycle. https://www.rmf.harvard.edu/Clinician-Resources/Newsletter-and-Publication/2012/SPS-Managing-Risk-in-the-Referral-Lifecycle. Accessed September 14, 2017.

- 6. Witherspoon L, Liddy C, Afkham A et al. Improving access to urologists through an electronic consultation service. Can Urol Assoc J. 2017;11(8):270–74.

- 7. Kim Y, Chen AH, Keith E et al. Not perfect, but better: primary care providers’ experiences with electronic referrals in a safety net health system. J Gen Intern Med. 2009;24(5):614–19.

- 8. Straus SG, Chen AH, Yee H Jr et al. Implementation of an electronic referral system for outpatient specialty care. AMIA Annu Symp Proc. 2011;2011:1337–46.

- 9. Forrest CB, Glade GB, Baker AE et al. Coordination of specialty referrals and physician satisfaction with referral care. JAMA Pediatr. 2000;154(5):499–506.

- 10. Murthy R, Rose G, Liddy C et al. eConsultations to infectious disease specialists: questions asked and impact on primary care providers’ behavior. Open Forum Infect Dis. 2017;4(2):ofx030.

- 11. Stoves J, Connolly J, Cheung CK et al. Electronic consultation as an alternative to hospital referral for patients with chronic kidney disease: a novel application for networked electronic health records to improve the accessibility and efficiency of healthcare. Qual Saf Health Care. 2010;19(5):e54.

- 12. Callahan CW, Malone F, Estroff D et al. Effectiveness of an Internet-based store-and-forward telemedicine system for pediatric subspecialty consultation. Arch Pediatr Adolesc Med. 2005;159(4):389–93.

- 13. Vimalananda VG, Gupte G, Seraj SM et al. Electronic consultations (e-consults) to improve access to specialty care: a systematic review and narrative synthesis. J Telemed Telecare. 2015;21:323–30.

- 14. Liddy C, Drosinis P, Keely E. Electronic consultation systems: worldwide prevalence and their impact on patient care: a systematic review. Fam Pract. 2016;33:274–85.

- 15. Liddy C, Afkham A, Drosinis P et al. Impact of and satisfaction with a new eConsult service: a mixed methods study of primary care providers. J Am Board Fam Med. 2015;28(3):394–403.

- 16. Poon EG, Wald J, Bates DW et al. Supporting patient care beyond the clinical encounter: three informatics innovations from Partners Health Care. AMIA Annu Symp Proc. 2003:1072.

- 17. Gandhi TK, Keating NL, Ditmore M et al. Improving referral communication using a referral tool within an electronic medical record. In: Henriksen K, Battles JB, Keyes MA et al., eds. Advances in Patient Safety: New Directions and Alternative Approaches (Vol. 3: Performance and Tools). Rockville, MD: Agency for Healthcare Research and Quality; 2008; 3:1–12.

- 18. Esquivel A, Sittig DF, Murphy DR et al. Improving the effectiveness of electronic health record-based referral processes. BMC Med Inform Decis Mak. 2012;12:107.

- 19. Schumacher RM, Lowry SZ. NIST Guide to the Processes Approach for Improving the Usability of Electronic Health Records. Washington, DC: National Institute of Standards and Technology; 2010. Report No.: NISTIR 7741.

- 20. Sittig DF, Singh H. A new socio-technical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care. 2010;19(Suppl 3):i68–i74.

- 21. SAS. 9.4 ed. Cary, NC: SAS Institute, Inc; 2015.