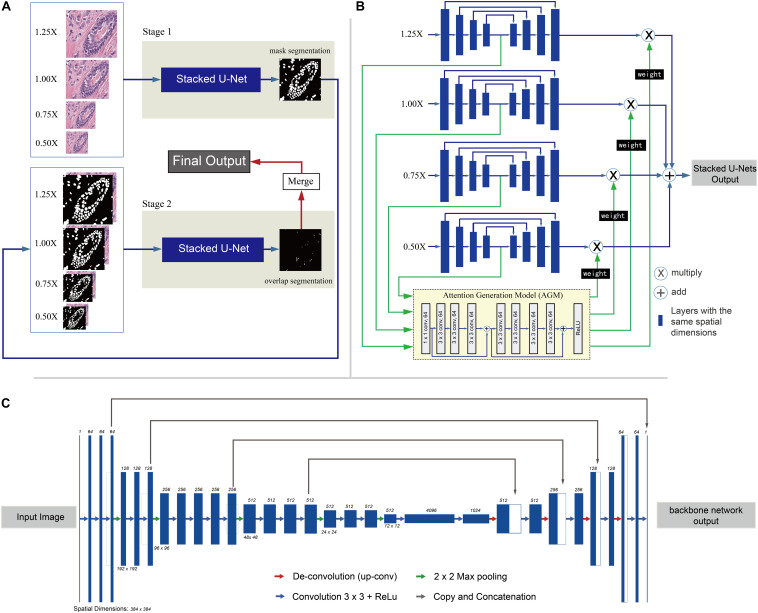

FIGURE 1.

Overview of the two-stage learning method. (A) Overall flow chart of the two-stage method. Input: Images are split into patches of 384 × 384 pixels and resized to four different scales (1.25×, 1.0×, 0.75×, and 0.50×). Stage 1: The patches are fed into the first set of Stacked U-Nets for the first round of nuclei segmentation. The Stacked U-Nets consist of four parallel backbone nets, each taking an image of a different size as input. At the end of stage 1, a segmentation mask of the nuclei regions is generated, with pixel values of 0 (non-nuclei region) and 1 (nuclei region). In addition, the nuclei instance segmentation is predicted with the watershed algorithm. Stage 2: The stage 2 input contains not only the original RGB image patches but also the binary segmentation mask of nuclei regions predicted by stage 1, supplied as a fourth channel. At the end of stage 2, the segmentation of overlapped regions is generated, with pixel values of 0 (non-overlapped region) and 1 (overlapped region). Merge: The first-round nuclei instance segmentation results from stage 1 are updated by merging the corresponding overlapped objects, that is, those sharing at least 10 pixels with the contour objects derived from stage 2. Output: The final output is the nuclei instance segmentation result, in which nuclei in overlapping regions, if any, are separated. (B) Architecture of the Stacked U-Nets. Blue rectangles stand for the multiple layers in the backbone net with the same spatial dimensions. The Attention Generation Model (AGM) is used to weight and sum the predictions of the four scaled backbone nets and generate the final segmentation. The AGM outputs one weight matrix for each backbone net, each of which takes a differently scaled image as input. The segmentation result of each backbone net is multiplied by its AGM-generated weight matrix (X circle in panel C), and the weighted results are summed (+ circle in panel C) to give the final result. (C) Detailed architecture of the backbone net used in the Stacked U-Nets. Each dark blue box corresponds to a multi-channel feature map. The number of channels is denoted on top of the box. The spatial dimensions are provided under some of the boxes (boxes with the same height have the same spatial dimensions). White boxes represent feature maps copied from the layers where the gray arrows originate. Arrow colors denote the different operations: red for de-convolution, green for max-pooling, blue for regular convolution, and gray for copy and concatenation.
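
The weight-and-sum step described for the AGM in panel B can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `fuse_multiscale` and `binarize`, the softmax normalization of the weights across scales, the 0.5 threshold, and the assumption that all four per-scale predictions have already been resampled to a common size are hypothetical choices made only for illustration.

```python
import numpy as np


def fuse_multiscale(predictions, attention_logits):
    """Fuse per-scale segmentation maps with per-pixel attention weights.

    predictions:      array of shape (S, H, W), one probability map per scale,
                      assumed to be already resampled to a common H x W.
    attention_logits: array of shape (S, H, W), one unnormalized weight map
                      per scale (standing in for the AGM output).
    Returns the fused map of shape (H, W) and the normalized weights.
    """
    predictions = np.asarray(predictions, dtype=np.float64)
    attention_logits = np.asarray(attention_logits, dtype=np.float64)
    if predictions.shape != attention_logits.shape:
        raise ValueError("predictions and attention_logits must share a shape")

    # Assumption: weights are normalized with a softmax over the scale axis,
    # so the weights at each pixel sum to 1. The caption does not specify this.
    shifted = attention_logits - attention_logits.max(axis=0, keepdims=True)
    weights = np.exp(shifted)
    weights /= weights.sum(axis=0, keepdims=True)

    # Multiply each scale's prediction by its weight map, then sum over scales.
    fused = (weights * predictions).sum(axis=0)
    return fused, weights


def binarize(fused, threshold=0.5):
    """Turn the fused map into a 0/1 mask (threshold value is an assumption)."""
    return (np.asarray(fused) >= threshold).astype(np.uint8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    preds = rng.random((4, 8, 8))          # four scales, toy 8 x 8 patch
    logits = rng.normal(size=(4, 8, 8))    # stand-in for AGM outputs
    fused_map, w = fuse_multiscale(preds, logits)
    mask = binarize(fused_map)
    print(fused_map.shape, mask.dtype)
```

Because the weights are computed per pixel, a scale that is more reliable in one region of the patch (for example, the 1.25× input for small nuclei) can dominate there without dominating everywhere.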