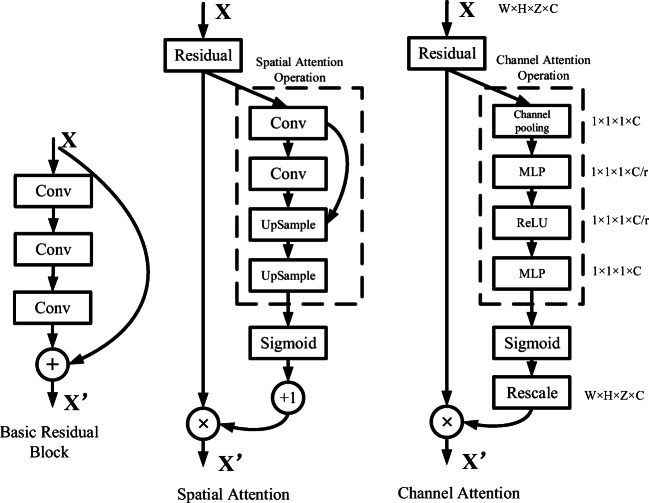

Fig. 2.

The two parts of the attention module and the basic residual block. Spatial attention part: a bottom-up, top-down structure in which the shallow-level and deep-level feature maps are merged to form the spatial attention feature map. Channel attention part: global pooling is used to exploit the contextual information of the whole channel filter. The parameter r is set to 16 in this paper.
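
To make the channel attention part concrete, the following is a minimal sketch in plain Python, not the authors' implementation. It assumes that global pooling means global average pooling, that r acts as a channel reduction ratio in a two-layer bottleneck (C to C/r to C), and that the gate uses ReLU followed by a sigmoid; the caption confirms none of these. All function names, the random weights, and the toy sizes (C = 32, H = W = 4) are hypothetical.

```python
import math
import random


def global_average_pool(x):
    """Reduce a C x H x W feature map (nested lists) to one mean per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]


def dense(v, weights, bias):
    """Fully connected layer: each row of `weights` yields one output value."""
    return [sum(w * a for w, a in zip(row, v)) + b for row, b in zip(weights, bias)]


def channel_attention(x, w1, b1, w2, b2):
    """Return per-channel gates in (0, 1) and the reweighted feature map."""
    squeezed = global_average_pool(x)                         # C values
    hidden = [max(0.0, h) for h in dense(squeezed, w1, b1)]   # C // r values, ReLU (assumed)
    gates = [1.0 / (1.0 + math.exp(-z)) for z in dense(hidden, w2, b2)]  # sigmoid (assumed)
    scaled = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, x)]
    return gates, scaled


def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for learned weights."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


# Toy sizes; r = 16 follows the caption, the rest is illustrative.
C, H, W, r = 32, 4, 4, 16
rng = random.Random(0)
x = [[[rng.random() for _ in range(W)] for _ in range(H)] for _ in range(C)]
w1, b1 = random_matrix(C // r, C, rng), [0.0] * (C // r)
w2, b2 = random_matrix(C, C // r, rng), [0.0] * C
gates, y = channel_attention(x, w1, b1, w2, b2)
```

Under these assumptions, with r = 16 the hidden layer has only C/16 units, so the gate adds few parameters relative to the convolutional layers it modulates.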