Abstract

Objective: Despite federal policies put in place by the Office of the National Coordinator for Health Information Technology (ONC) to promote safe and usable electronic health record (EHR) products, the usability of EHRs continues to frustrate providers and to have patient safety implications. This study sought to compare government policies on usability and safety, and the methods of examining compliance with those policies, across 3 federal agencies: the ONC and EHRs, the Federal Aviation Administration (FAA) and avionics, and the Food and Drug Administration (FDA) and medical devices. Our goal was to identify whether differences in policies exist and, if they do, how policies and enforcement mechanisms from other industries might be applied to optimize EHR usability.

Method: We performed a qualitative study using publicly available governing documents to examine similarities and differences in usability and safety policies across agencies.

Results: The policy review and analysis revealed several commonalities across the agencies’ usability policies. Critical differences emerged in the usability standards and policy enforcement mechanisms used by the 3 agencies. The FAA and FDA review evidence of usability processes and are more prescriptive about testing final products than the ONC, which relies on attestation and is less prescriptive.

Discussion: A comparison of usability policies across industries illustrates key differences between the ONC and other federal agencies. These differences could be contributing to the usability challenges associated with EHRs.

Conclusion: Our analysis highlights important areas of usability and safety policy from other industries that can better inform ONC policies on EHRs.

Keywords: electronic health records, human factors engineering, policy

Background and Significance

Spurred by the $40B Health Information Technology for Economic and Clinical Health Act, the vast majority of health care providers have adopted new electronic health records (EHRs) since 2011, and many cite challenges with using EHR technology as a barrier to providing good care.1–3 Clinicians have loudly voiced frustration and dissatisfaction with the ability of EHR technology to meet their functional needs, and poor usability is often cited as a primary contributor.4 In addition to frustrating clinicians, the poor usability of EHRs has patient safety implications.5,6 Patients feel that the technology is getting in the way of their care.7 Because of the significance of the usability problem and the consequences for patient care, the US Senate Health, Education, Labor, and Pensions Committee held a series of hearings dedicated to the usability of EHRs.8 Usability of EHRs is a complex problem, and many stakeholders, including government agencies, trade associations, advocacy organizations, human factors research groups, and EHR vendors, are engaging in a dialogue to determine how to make improvements.

Usability affects the effectiveness, efficiency, and user satisfaction of an EHR system and is shaped by both the design of the interface (screen visualization and means of entering commands and data) and how well the system supports the cognitive and functional work of users.9–11 There are well-recognized design and development processes for creating usable products. These processes put the needs of end users at the forefront by considering scientific data about human performance, engaging end users during development, and testing the products with representative end users. When these usability-focused approaches are used, there are improvements in efficiency, satisfaction, and safety.9 Usability methods have grown out of the scientific discipline of human factors engineering, which is focused on designing and developing systems that match the performance characteristics and capabilities of the people who will use them.12

In high-risk industries, such as aviation and health care, usability has been shown to be closely coupled to safety. It is therefore imperative that technology used in these industries meet the highest standards of usability in order to protect lives. Most high-risk industries have implemented federal usability guidance, policies, or standards to encourage the development of usable technology. The Federal Aviation Administration (FAA) has had policy guidance in place to shape the usability and safety of aviation technology for more than 25 years, and the Food and Drug Administration (FDA) has had similar guidance regarding the usability and safety of medical devices for more than 15 years. Only recently have policies been established, through the Office of the National Coordinator for Health Information Technology (ONC), to guide the design and development of EHR technology, the first of which were put in place in 2014. An analysis of EHR vendor adherence to these policies demonstrated that many vendors do not follow them, yet their technology is still certified as compliant and is used by front-line health care providers.13 Clinician frustration and the safety challenges associated with EHRs may be partly attributable to the current usability policies and to the lack of adherence to them.

This study sought to compare the government regulation and evaluation of software usability in the EHR industry (by the ONC) with that of flight deck displays and controls in avionics (by the FAA) and medical devices (by the FDA). Because the FAA and FDA have had policies in place considerably longer than the ONC, and those policies have evolved to meet changing needs, there may be an opportunity to learn from these industries and, if differences exist, to apply that knowledge to policies governing EHR usability and safety. There are clear differences among the industries being compared, including the motivation driving the creation of usability and safety policies, the complexity of the technology in each industry, and the public’s awareness of and attention to these policies. Despite these differences, recognized usability design and development processes can be applied to the development of any technology, and adhering to these processes promotes more usable and safe products.14 While there are some indications that usability policies differ from industry to industry, a comprehensive and systematic analysis has not been performed.15 We sought to determine whether differences exist in the usability policies of the ONC, FAA, and FDA and, if so, to identify policies and enforcement mechanisms that might be applied to optimize EHR usability and safety.

Method

We performed a qualitative study using publicly available ONC, FAA, and FDA governing documents, human factors practices, and usability statements. A human factors and usability policy researcher (ES) retrieved and reviewed the documents to gain a better understanding of the usability standards and their enforcement mechanisms across agencies, and discussed them with a usability policy expert (RR). Within each industry, the following focus areas were selected based on their similarities with health information technology (IT) in user interaction, complexity of use, and potential for hazard: electronic health records (ONC), flight deck displays and controls (FAA), and medical devices (FDA).

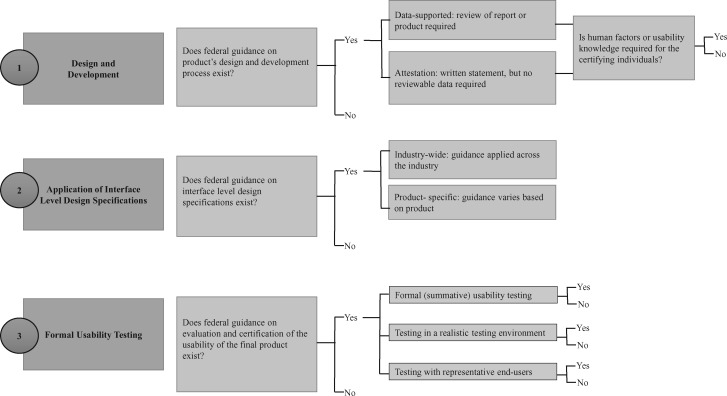

Our analysis focused on the usability policies in place and the methods for examining compliance with these policies. The general usability design and development approach involves utilizing a design process that prioritizes the end user, applying recognized design principles and standards established in the human factors research literature, and conducting formal usability tests during design and on the final product. These components were used to formulate a usability process framework, described below, and the documents retrieved from each industry were analyzed based on the degree to which each agency’s policy guidance addressed each component in the framework. The left side of Figure 1 shows the specific elements of the usability process that were examined in our analysis.

Figure 1.

Usability Framework Analysis Process.

There are 2 common ways for federal agencies to examine compliance with policy: attestation and data-supported.16 The process of attestation generally requires a written or verbal statement that the policy has been adhered to. Data-supported compliance is based on an organization’s provision of demonstrable evidence that the policy was adhered to. Our analysis examined whether each agency used an attestation or data-supported approach for each framework component.

The usability framework

There are 3 widely accepted processes for designing and developing a safe and usable product. First, the design and development process should embrace the end user from the very beginning. A commonly accepted design process is user-centered design (UCD), although other design processes that recognize end-user needs exist.14,17 UCD is an iterative process that includes studying how end users interact with their environment to accomplish their goals, developing prototypes based on this knowledge, and continually refining the prototypes. Second, during the design and development process, design principles and standards are applied to optimize interactions with technology. These principles are largely grounded in human factors science, and standards may be agreed upon by the community of vendors. Third, formal usability testing is generally conducted at the end of product development to determine whether the technology meets end-user needs. Our analysis examined which aspects of the usability framework were the focus of ONC, FAA, and FDA policies, with the goal of identifying similarities and differences across industries. Figure 1 provides a summary of the approach.

1. Design and Development

We examined whether each agency has guidance on the product design and development process and how compliance with its requirements is verified. Compliance methods were classified as attestation if they required a written statement but no reviewable data. Compliance methods were classified as data-supported if they required the governing agency to review either a report or the product itself, verifying or validating it with its own personnel or representatives. We then identified the expertise of the certifying personnel in each agency, including whether they are required to have human factors expertise, and whether compliance is determined by the governing agency itself or delegated to an outside organization.
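The coding rule for compliance methods can be expressed as a small decision function. This is a minimal sketch, assuming each policy requirement can be reduced to three yes/no features; the names `ComplianceMethod`, `ComplianceRequirement`, and `classify` are hypothetical and were not part of the authors' analysis, which was performed qualitatively. The example values reflect how the design-process requirements are characterized in the Results.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ComplianceMethod(Enum):
    ATTESTATION = "attestation"
    DATA_SUPPORTED = "data-supported"


@dataclass(frozen=True)
class ComplianceRequirement:
    """Hypothetical yes/no summary of what a policy asks a product developer to submit."""
    written_statement: bool       # developer states that the policy was followed
    agency_reviews_report: bool   # agency or its representatives review a report containing data
    agency_reviews_product: bool  # agency or its representatives examine the product itself


def classify(req: ComplianceRequirement) -> Optional[ComplianceMethod]:
    """Apply the coding rule: agency review of a report or product -> data-supported;
    a written statement with no reviewable data -> attestation."""
    if req.agency_reviews_report or req.agency_reviews_product:
        return ComplianceMethod.DATA_SUPPORTED
    if req.written_statement:
        return ComplianceMethod.ATTESTATION
    return None  # the rule assigns no category when neither condition holds


# ONC-ATLs review the attestation itself, which the rule does not count as reviewable data.
onc = ComplianceRequirement(written_statement=True, agency_reviews_report=False, agency_reviews_product=False)
# The FAA reviews the documented design philosophy and supporting data, and the product when necessary.
faa = ComplianceRequirement(written_statement=True, agency_reviews_report=True, agency_reviews_product=True)

print(classify(onc).value)  # attestation
print(classify(faa).value)  # data-supported
```

Note that the data-supported check runs first, so a policy that asks for both a statement and reviewable data is classified as data-supported.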

2. Application of Interface-Level Design Specifications

We determined whether the federal agency has guidance for interface-level design specifications. Interface-level design specifications, also known as style guides, are standards or guidelines that stipulate graphical user interface elements such as font, size, color, iconography, screen layout, and alerts. If specifications were available, their specificity was determined by analyzing whether the governing agency’s guidance applies to all covered technology or to specific products, or whether it is general and leaves interface-level decisions up to the product developer.

3. Formal Usability Testing

We identified the process by which each agency evaluates and certifies that final products meet the specified usability requirements. We identified components of the evaluation and certification of final products, including formal (summative) usability testing, testing in a realistic testing environment, and testing with representative end users.
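The final-product components can be treated as a simple checklist. The sketch below is illustrative only: the class `FinalProductTesting` and the function `missing_components` are hypothetical names, and the example values are taken from how the agencies' requirements are summarized in Table 1 of the Results.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class FinalProductTesting:
    """Hypothetical checklist of the final-product testing components examined in the framework."""
    summative_testing: bool
    representative_end_users: bool
    realistic_environment: bool


def missing_components(policy: FinalProductTesting) -> List[str]:
    """Return, in field order, the framework components a policy does not require."""
    return [f.name for f in fields(policy) if not getattr(policy, f.name)]


# Requirements as summarized in Table 1.
onc = FinalProductTesting(summative_testing=True, representative_end_users=False, realistic_environment=False)
faa = FinalProductTesting(summative_testing=True, representative_end_users=True, realistic_environment=True)
fda = FinalProductTesting(summative_testing=True, representative_end_users=True, realistic_environment=True)

print(missing_components(onc))  # ['representative_end_users', 'realistic_environment']
print(missing_components(faa))  # []
```

A checklist of this kind records only whether a component is required, not how rigorously compliance is verified, which the analysis examines separately.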

Results

The policy review and analysis revealed several commonalities across the agencies’ usability policies. We found that the ONC, FAA, and FDA all have standards to promote a design process that considers the needs of end users, and each agency evaluates compliance with this standard, although in different ways. We also found that each agency requires an evaluation of the end product.

Differences emerged in the usability standards and enforcement mechanisms utilized by the 3 agencies. We found that there were differences in the assessment of the design process used, the availability of interface-level design specifications, and the means by which final products were evaluated. The similarities and differences identified between the agencies are summarized in Table 1.

Table 1.

Usability Analysis Review

| Usability Standards | ONC | FAA | FDA |
|---|---|---|---|
| Rigor of design process used | +Requirement: Apply user-centered design process.31 | +Requirement: Apply human-centered design principles.26,32 | +Requirement: Follow human factors considerations.22 |
| | −Compliance: Attestation evaluated by external group with no requirement for human factors and usability expertise.31 | +Compliance: Data-supported evaluation conducted by internal associates with human factors and usability expertise.20,21 | +Compliance: Data-supported evaluation conducted by internal associates with human factors and usability expertise.22,23,25 |
| Availability of interface-level design specifications | −No interface-level design specifications.31 | +Interface-level design specifications (specific and applied across the industry).33 | +Interface-level design specifications (general industry-wide, but specific to some device types).23 |
| Certification and evaluation of final product | −Summative testing does not require representative end users or a realistic testing environment.31 Significant changes can be made post-certification. | +Summative testing using representative end users in a realistic testing environment.21 Significant changes cannot be made post-certification.33 | +Summative testing using representative end users in a realistic testing environment.22,23 Significant changes cannot be made post-certification; however, customization does occur.29 |

Design process

Electronic health records and the ONC

Design process: The ONC requires EHR vendors to employ a UCD process. The ONC allows EHR vendors to choose which process they use, but recommends industry or federal government standards, such as those from the International Organization for Standardization or the National Institute of Standards and Technology Internal or Interagency Reports.18

Compliance: EHR vendors must attest to using a UCD process. ONC Accredited Testing Laboratories (ONC-ATLs) are approved contractors that work on behalf of the ONC to certify that EHR vendors have met this standard by reviewing their attestations. In the attestation, vendors must identify the industry or federal government UCD standard they used by name, description, and citation; if their process is not an industry standard, they must instead submit its name along with an outline and a short description of the process(es).18 Because ONC-ATLs are external to the ONC, we were not able to determine whether certifying personnel are required to have human factors engineering or usability expertise to review vendor attestations and certify them as compliant.

Aviation and the FAA

Design process: The FAA requires that human-centered design principles be applied to the design of flight deck displays in aircraft and that product developers report the design philosophy they used and supply specifics of human performance considerations, including descriptions of error potential, user workload, and expected training requirements.19

Compliance: The FAA uses a data-supported approach by reviewing the documented design philosophy and evaluating the supporting data of the process used. This process must be documented and is rigorously evaluated by a team internal to the FAA that includes individuals with human factors and usability expertise.20,21 The FAA conducts an in-depth review of the certification documentation and evaluates the products themselves, when necessary, with internal experts.21

Medical devices and the FDA

Design process: The FDA’s Center for Devices and Radiological Health recommends that human factors considerations be followed in the design of medical devices. The agency published guidance on the application of human factors in medical device design.22 Its guidance includes taking an analytical approach to identifying use-related hazards, formative testing, simulation testing, and usability testing.23,24

Compliance: The FDA uses a data-supported approach by reviewing data from the human factors engineering and usability formative evaluation documentation submitted in the premarket approval application. The FDA has an internal team of qualified human factors experts who review many of the human factors portions of medical device applications. The remaining applications are reviewed by general reviewers who have been trained in human factors principles. The review goal is to ensure that the device has been “designed to be reasonably safe and effective when used by the intended user populations.”25

Availability of interface-level design specifications

Electronic health records and the ONC

The ONC does not require that any interface-level design specifications be used in the development of EHRs.18

Aviation and the FAA

The FAA has detailed interface-level design specifications that are applied to all flight deck displays and controls. These standards cover display resolution, glare and reflections, labels and symbols, alerts, error management, and many other aspects of the software.26 Interface-level design specifications are required across the industry, which leads to similarities across product developers.27 Pilots can expect to see many of the same design elements across aircraft, even if the aircraft are equipped by different avionics manufacturers. Standardizing interface-level design specifications across the industry helps pilots orient themselves and control different aircraft effectively, while reducing workload and the training time needed to transition safely from one cockpit to another.27,28

Medical devices and the FDA

The FDA provides general guidance on interface-level design, including consistency, hierarchical structure, and navigational logic,22 but does not provide design specification guidance or consensus standards across the medical device industry because of the wide variety of devices covered.29 The FDA focuses its recommendations and evaluation on the processes used during design rather than on specific interface-level elements. This model gives manufacturers flexibility to design devices that comply with policies while also using design specifications that are most appropriate for their devices and users.29 The FDA provides additional design guidance on selected medical devices that have been identified as having increased risk for adverse events, such as infusion pumps.30

Evaluation and certification of the final product

Electronic health records and the ONC

EHR vendors must conduct summative testing and submit the results to ONC-ATLs using a recommended format.18 ONC-ATLs review these reports to ensure that documentation exists; however, the ONC-ATL is not present for any part of the summative testing. The ONC certification criteria require a minimum of 10 participants in the summative test; however, the requirements do not stipulate the expertise and background of these participants or particular testing environments (eg, simulation).18 After a product is certified, purchasers can modify it significantly during implementation, and the implemented product, which may differ dramatically from the certified version, is not reviewed or approved by the ONC or any ONC-affiliated organization.

Flight deck displays and controls and the FAA

FAA guidance requires flight deck display developers to develop a compliance matrix that identifies the features of their design and their plans to comply with FAA standards. The compliance standards are determined based on the novelty of the design or feature. More complex and novel designs require product developer evaluations and simulated testing with an FAA representative present. Final simulation testing involves representative end users and is conducted in an aircraft or simulated test environment that adequately represents the airplane environment. Testing is conducted on the final product, the version that will be implemented if certified.21

Medical devices and the FDA

Medical device product developers must conduct validation testing and are advised to conduct human factors validation testing on the final versions of their products.23 Evidence of validation testing is reported to the FDA for review. The FDA conducts an in-depth review of the human factors engineering and usability engineering considerations, and field personnel may perform a quality systems inspection. FDA guidance states that medical device developers should conduct human factors validation testing with test participants who represent actual device users, performing all critical tasks using the final product design, in an environment that represents the actual conditions of use.22,29 If significant design changes are required, including changes made in response to safety-critical errors by test participants, the device must go through redesign and revalidation before being certified.29 However, the medical device and its settings and alerts can be customized after certification.

Discussion

Our analysis of the usability policies put in place by the ONC, FAA, and FDA shows many similarities, but also a few key differences, particularly in ONC policies. While all 3 agencies have requirements guiding the design process of product developers, they differ in the way they examine compliance with that guidance. The FAA and FDA tend to look at evidence of the process, and they are more prescriptive when it comes to testing the final product. Our discussion highlights some of the differences we identified in this comparison. These differences in federal agency approaches to human factors engineering for safety-critical systems may point to opportunities to improve the policies focused on the usability and safety of EHRs.

Rigor of the design processes used

ONC, FAA, and FDA standards all require a similar design and development process that considers the needs of their respective end users; the difference among these industries lies in the rigor of enforcement of their policies. The FAA and FDA are much more rigorous, in that they require evidence of a development process and employ individuals with expertise in human factors and/or usability to assist in certifying products. These experts have the knowledge to more effectively review and evaluate documentation around the processes used during design and development to ensure the technical rigor of the process. The ONC, on the other hand, requires attestation and delegates certifying authority to outside testing laboratories that may not require individuals to have human factors expertise to review vendor applications for certification. Since the Accredited Testing Laboratories and Authorized Certification Bodies are external to the ONC, it is unclear what regulatory authority the ONC has over them. In previous work we demonstrated that many EHR vendors do not have a UCD process in place, and that despite the attestation requirement many vendors do not state having a UCD process yet are still being certified; this suggests that the reviewers may not have human factors or usability expertise.13,34 Requiring vendors to provide data support of their UCD process, as the FAA and FDA do, and having human factors experts review vendor reports could force vendors to adopt and use a UCD process and ultimately improve the usability of their products.

Although the FDA process is considered more rigorous, our analysis identified a potential opportunity for improvement in its human factors evaluation. The FDA assigns different teams to review products depending on the perceived need for human factors expertise. Medical devices that have a known human factors need are reviewed by an internal team of human factors experts. All other devices are reviewed by other personnel who have received some human factors training. While this process can lead to efficiencies, it can also result in unintended variability in the quality of the review.

Availability of interface-level design specifications

The ONC is the only agency of those reviewed that does not provide any guidance on interface-level design specifications. As a result, different EHR products have entirely dissimilar designs, icons, and workflows, which poses safety challenges for clinicians using multiple products. FAA standards require the same specifications across the industry, whereas FDA guidance makes general recommendations for compliance with design philosophies and points developers to technology-specific guidance for some high-risk devices. The industry-wide standardization of FAA interface-level specifications helps operators orient themselves more effectively regardless of the product developer. The FDA’s broad interface-level design specifications provide industry guidance while reducing the burden of unnecessary policies and leaving room for innovation. However, less specific guidance must be paired with evaluators who have human factors and usability expertise, like those employed by the FDA, to ensure that varying interface designs can be reviewed and evaluated against human factors and usability principles.

Evaluation and certification of the final product

The ONC, FAA, and FDA all require summative or validation testing of products before they are certified for use. The difference lies in the required testing components. Both the FAA and FDA require testing with intended users in a real or realistic simulated testing environment. These approaches are rigorous, but can be very expensive. The ONC requires summative testing; however, its guidance is less rigorous, and products can be certified without using representative end users or a realistic testing environment. The ONC’s policies have several implications. First, summative usability tests conducted without the appropriate end-user population miss critical user expectations and workflow processes that only intended end users can contribute. As a result, products will be developed and implemented that do not capture the actual needs of users. Second, failing to test products in representative environments will result in products that are not appropriately designed to address environmental variables that have a significant impact on how clinicians do their work. For example, high levels of noise, stress, and interruptions are environmental variables that should influence the design of a product to ensure safety and usability. Finally, both the FDA and the ONC certify technologies that can be customized by end users after certification. Customization can dramatically affect a product’s usability, and neither organization controls those changes post-certification.

Limitations

This study sought to understand whether differences exist in the usability policies of the ONC, FAA, and FDA. There are limitations to this approach that should be considered when interpreting our results. As with any policy review, it is important to consider the context of each agency and its historical and political motivations in order to make appropriate comparisons and draw appropriate conclusions. Much of this insight is not represented in the public governing documents that we reviewed; therefore, our analysis does not account for this context. Another limitation is that it is difficult to clearly understand the relationship between each agency’s policies and the actual usability and safety of the technology shaped by those policies, given the differences in domains, the complexity of the technology, and other factors. We make a comparison across these 3 high-risk industries recognizing that there are many differences between the industries and governing agencies.

Conclusion

The ONC, FAA, and FDA are all federal agencies charged with ensuring public safety in complex, safety-critical, sociotechnical industries. The FDA and FAA, which have had usability and safety policies in place for many years, appear to have a more rigorous oversight process to ensure that human factors expertise and design processes that account for end-user needs are employed in the development of the devices and systems they oversee. While the ONC has a basic framework in place to encourage usability and safety and has recently made some progress, there are areas of concern that are likely contributing to the current usability and safety challenges of EHRs. Both the FDA and FAA require more usability testing and analysis than the ONC. Optimizing ONC policies based on the differences identified in our analysis could improve current vendor usability practices and, ultimately, the usability and safety of implemented products. ONC policies could be optimized by giving the ONC greater authority over ONC-ATLs and ONC-Authorized Certification Bodies to ensure that human factors expertise is part of the review and certification process and that evidence of EHR vendor UCD processes is reviewed. While additional policies should not be put in place unless absolutely necessary, more specific policies and different compliance mechanisms may be needed to bring the health IT industry to the same level of usability rigor as the aviation and medical device industries. One of the biggest challenges on this path forward is getting agreement from all health IT stakeholders on this approach. If agreement cannot be reached, policy actions may need to be considered to improve EHR usability and help correct market failures, for example by increasing transparency, eliminating gag clauses, and raising awareness of the safety implications of usability issues.

Funding

This project was funded under contract/grant number R01 HS023701‐02 from the Agency for Healthcare Research and Quality, US Department of Health and Human Services. The opinions expressed in this document are those of the authors and do not reflect the official position of the Agency for Healthcare Research and Quality or the Department of Health and Human Services.

Competing Interests

The authors have no competing interests to declare.

Contributors

Each author contributed to the conception or design of the work, data analysis and interpretation, critical revision of the article, and final approval of the version to be published.

References

- 1. Meeks DW, Smith MW, Taylor L, Sittig DF, Scott JM, Singh H. An analysis of electronic health record–related patient safety concerns. J Am Med Inform Assoc. 2014;216:1053–59. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Shaha JS, El-Othmani MM, Saleh JK et al. . The growing gap in electronic medical record satisfaction between clinicians and information technology professionals. J Bone Jt Surg. 2015;9723:1979–84. [DOI] [PubMed] [Google Scholar]

- 3. Zahabi M, Kaber DB, Swangnetr M. Usability and safety in electronic medical records interface design: a review of recent literature and guideline formulation. Hum Factors. 2015;575:805–34. [DOI] [PubMed] [Google Scholar]

- 4. Friedberg MW, Chen PG, Van Busum KR et al. . Factors Affecting Physician Professional Satisfaction and Their Implications for Patient Care, Health Systems, and Health Policy. Santa Monica, CA: RAND Health; 2013. [PMC free article] [PubMed] [Google Scholar]

- 5. Institute of Medicine. Health IT and Patient Safety Building Safer Systems for Better Care. Washington, DC: National Academy Press; 2012. [PubMed] [Google Scholar]

- 6. Varpio L, Rashotte J, Day K, King J, Kuziemsky C, Parush A. The EHR and building the patient’s story: a qualitative investigation of how EHR use obstructs a vital clinical activity. Int J Med Inform. 2015;8412:1019–28. [DOI] [PubMed] [Google Scholar]

- 7. Bailey JE. Does health information technology dehumanize health care? Virtual Mentor. 2011;13(3):181–85.

- 8. Ratwani RM. Achieving the promise of health information technology: improving care through patient access to their records. Testimony of Raj M. Ratwani, PhD. 2016:1–13.

- 9. International Organization for Standardization. ISO 9241: Ergonomics of Human System Interaction. 2010. https://www.iso.org/obp/ui/#iso:std:iso:9241:-210:ed-1:v1:en. Accessed October 10, 2016.

- 10. Hettinger AZ, Ratwani R, Fairbanks RJ. New Insights on Safety and Health IT. https://psnet.ahrq.gov/perspectives/perspective/181/new-insights-on-safety-and-health-it. Published 2015.

- 11. Middleton B, Bloomrosen M, Dente MA, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013;20(e1):e2–8.

- 12. Spear ME. Ergonomics and human factors in health care settings. Ann Emerg Med. 2002;40(2):213–16.

- 13. Ratwani RM, Benda NC, Hettinger AZ, Fairbanks RJ. Electronic health record vendor adherence to usability certification requirements and testing standards. JAMA. 2015;314(10):1070–71.

- 14. Shackel B. Usability: context, framework, definition, design and evaluation. Interact Comput. 2009;21(5-6):339–46.

- 15. Lowry SZ, Quinn MT, Ramaiah M. NISTIR 7804: Technical Evaluation, Testing, and Validation of the Usability of Electronic Health Records. 2012. http://csg5.nist.gov/healthcare/usability/upload/EUP_WERB_Version_2_23_12-Final-2.pdf.

- 16. Coglianese C. Measuring regulatory performance: evaluating the impact of regulation and regulatory policy. Organ Econ Co-operation Dev. 2012; August (Expert Paper No. 1):1–59. http://www.oecd.org/gov/regulatory-policy/1_coglianese web.pdf. Accessed August 10, 2016.

- 17. Marshall R, Cook S, Mitchell V, et al. Design and evaluation: end users, user datasets and personas. Appl Ergon. 2015;46(PB):311–17.

- 18. U.S. Department of Health and Human Services. 2015 Edition Health Information Technology (Health IT) Certification Criteria, 2015 Edition Base Electronic Health Record (EHR) Definition, and ONC Health IT Certification Program Modifications. Vol. 80. Washington, DC: Office of the National Coordinator for Health Information Technology; 2015:1–159.

- 19. Federal Aviation Administration. Advisory Circular: Controls for Flight Deck Systems. Washington, DC: Federal Aviation Administration; 2011.

- 20. Federal Aviation Administration. Human Factors Policy. 1993. http://www.faa.gov/documentLibrary/media/Order/9550.8.pdf. Accessed August 11, 2016.

- 21. US FAA. Advisory Circular: Installed Systems and Equipment for Use by the Flightcrew. 2013:1–62.

- 22. U.S. Department of Health and Human Services Food and Drug Administration Center for Drug Evaluation and Research. Applying Human Factors and Usability Engineering to Medical Devices: Guidance for Industry and Food and Drug Administration Staff. 2016.

- 23. FDA. CFR: Code of Federal Regulations. Title 21, food and drugs; chapter I, Food and Drug Administration, Department of Health and Human Services; subchapter H, medical devices; part 820, quality system regulation. USA: Office of the Federal Register (OFR) and the Government Publishing Office; 2015.

- 24. CDRH. Medical device use-safety: incorporating human factors engineering into risk management. Guid Ind FDA Premarket Des Control Rev. 2000:2–33.

- 25. Office of Device Evaluation (ODE), US Food and Drug Administration. Contact Us: Human Factors at Center for Devices and Radiological Health (CDRH). http://www.fda.gov/MedicalDevices/DeviceRegulationandGuidance/HumanFactors/ucm124822.htm. Published 2016. Accessed August 10, 2016.

- 26. Yeh M, Jo YJ, Donovan C, Gabree S. Human Factors Considerations in the Design and Evaluation of Electronic Flight Deck Displays and Controls. Washington, DC; 2013. http://ntl.bts.gov/lib/50000/50700/50760/General_Guidance_Document_Nov_2013_v1.pdf. Accessed August 10, 2016.

- 27. Ontiveros R, Spangler R, Sulzer R. General Aviation (FAR 23) Cockpit Standardization Analysis. 1978.

- 28. Federal Aviation Administration. Advisory Circular: Standardization Guide for Integrated Cockpits in Part 23 Airplanes. 2011. http://www.faa.gov/documentLibrary/media/Advisory_Circular/AC23-23.pdf. Accessed August 10, 2016.

- 29. Food and Drug Administration Center for Devices and Radiological Health. Design control guidance for medical device manufacturers. Des Hist File. 1997:53.

- 30. Food and Drug Administration Center for Devices and Radiological Health. Infusion Pumps Total Product Life Cycle: Guidance for Industry and FDA Staff. 2014.

- 31. Department of Health and Human Services Office of the National Coordinator for Health Information Technology. 2015 Edition Health Information Technology Certification Criteria, 2015 Edition Base Electronic Health Record Definition, and ONC Health IT Certification Program Modifications. 2015.

- 32. US FAA. Advisory Circular 25.1329-1C: Approval of Flight Guidance Systems. 2016:1–120.

- 33. Federal Aviation Administration. Installed Systems and Equipment for Use by the Flightcrew. 2013. https://www.law.cornell.edu/cfr/text/14/25.1302. Accessed August 10, 2016.

- 34. Ratwani RM, Fairbanks RJ, Hettinger AZ, Benda N. Electronic health record usability: analysis of the user centered design processes of eleven electronic health record vendors. J Am Med Inform Assoc. 2015;22(6):1179–82.