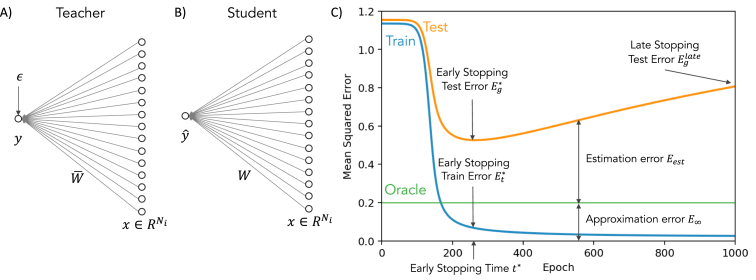

Fig. 1.

Learning from a noisy linear teacher. (A) A dataset of examples is created by providing random inputs to a teacher network with a given weight vector and corrupting the teacher outputs with noise of a given variance. (B) A student network is then trained on this dataset. (C) Example dynamics of the student network during full-batch gradient descent training. Training error (blue) decreases monotonically. Test error (yellow), also referred to as generalization error and here computable exactly (4), decreases to a minimum at the optimal early stopping time before increasing at longer times, a phenomenon known as overtraining. Because of noise in the teacher’s output, the best possible student network attains finite generalization error (“oracle”, green) even with infinite training data. This error is the approximation error. The difference between the test error and this best-possible error is the estimation error. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)
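The setup in panels (A) to (C) can be sketched in a few lines of code. The following is a minimal illustration under stated assumptions, not the authors' implementation: inputs and teacher weights are drawn i.i.d. from a standard normal distribution, the student is a linear network initialized at zero, the loss is the mean squared error, and the function name `simulate` and all sizes, the learning rate, and the step count are hypothetical choices. Under these assumptions the test error has the closed form "squared distance between student and teacher weights plus noise variance", so the oracle error equals the noise variance.

```python
import random


def simulate(n_features=20, n_examples=20, noise_var=1.0,
             lr=0.01, n_steps=1000, seed=0):
    """Train a linear student on data from a noisy linear teacher.

    Returns per-step training and test errors (both mean squared errors).
    All defaults are illustrative assumptions, not values from the source.
    """
    rng = random.Random(seed)
    noise_std = noise_var ** 0.5

    # (A) Teacher weights, random inputs, and noise-corrupted teacher outputs.
    w_teacher = [rng.gauss(0.0, 1.0) for _ in range(n_features)]
    inputs = [[rng.gauss(0.0, 1.0) for _ in range(n_features)]
              for _ in range(n_examples)]
    targets = [sum(wt * xi for wt, xi in zip(w_teacher, x))
               + rng.gauss(0.0, noise_std) for x in inputs]

    # (B) Linear student, initialized at zero (an assumption).
    w = [0.0] * n_features
    train_err, test_err = [], []

    # (C) Full-batch gradient descent on the mean squared training error.
    for _ in range(n_steps):
        residuals = [sum(wj * xj for wj, xj in zip(w, x)) - t
                     for x, t in zip(inputs, targets)]
        train_err.append(sum(r * r for r in residuals) / n_examples)

        # Exact test error for unit-variance inputs and independent noise:
        # ||w - w_teacher||^2 + noise_var.
        test_err.append(sum((a - b) ** 2 for a, b in zip(w, w_teacher))
                        + noise_var)

        grad = [2.0 / n_examples * sum(r * x[j] for r, x in zip(residuals, inputs))
                for j in range(n_features)]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]

    return train_err, test_err


if __name__ == "__main__":
    train_err, test_err = simulate()
    best_step = min(range(len(test_err)), key=test_err.__getitem__)
    print("early stopping step:", best_step)
    print("test error at that step:", test_err[best_step])
    print("final test error:", test_err[-1])
    print("oracle error (noise variance):", 1.0)
```

Plotting `train_err` and `test_err` against the step index gives curves analogous to panel (C). The gap between a test-error value and the noise variance corresponds to what the caption calls the estimation error; whether and how strongly the test error rises again after its minimum depends on the assumed noise level and on the ratio of examples to input dimensions.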