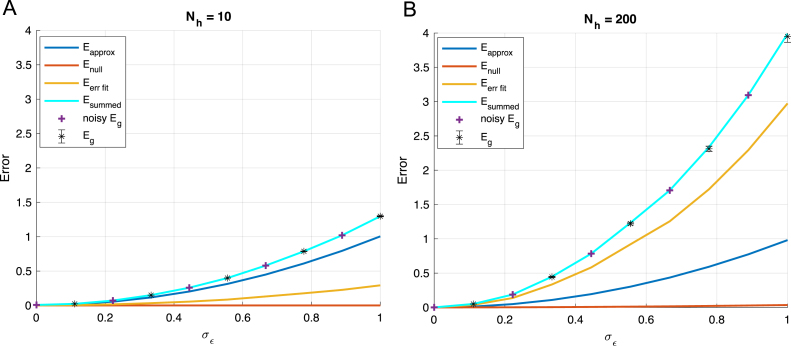

Fig. 10.

Impact of label noise on the performance of wide networks: We plot the generalization error decomposition as in Fig. 9 for (A) an under-parameterized and (B) an over-parameterized network. Because output noise is added in both cases, the target function is not deterministic, so approximating it is difficult even for the larger network. However, the fitting error is larger in the larger network due to the eigenvalue spectrum of the hidden-layer covariance. This is in contrast to our finding that larger networks perform better in the deterministic, low-noise setting depicted in Fig. 9. Other parameters: , , , .