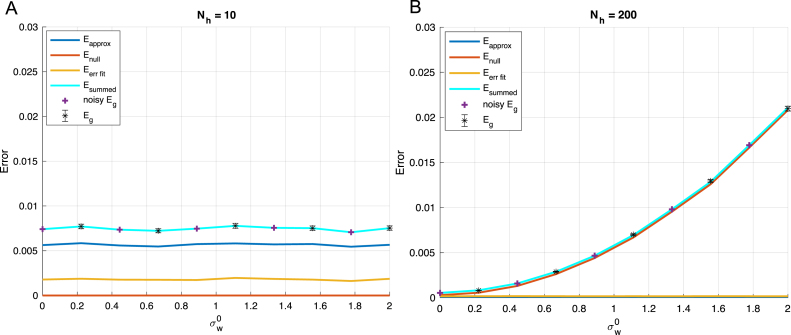

Fig. 11.

Impact of initialization on performance of wide networks. We plot the generalization error decomposition as in Fig. 9 for (A) an under-parameterized and (B) an over-parameterized network. Large initializations degrade generalization in wide, over-parameterized networks but not in smaller, under-parameterized ones. This effect is due to the frozen subspace that arises when training with fewer examples than free parameters, and it again contrasts with our finding that larger networks perform better in the low-initialization setting depicted in Fig. 9. Other parameters: , , , .
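The frozen-subspace mechanism can be illustrated with a toy model. What follows is a minimal sketch under stated assumptions. It is not the network or the parameter settings used in this figure: it uses a hypothetical linear student trained by gradient descent on a noiseless linear teacher, with Gaussian inputs, 20 examples and 100 parameters. The function `train_linear_student` and all of its arguments (`init_scale`, `lr`, `steps`, `seed`) are illustrative names introduced here. Gradient updates always lie in the row space of the data matrix, so the component of the initial weights in the null space never changes. That leftover initialization noise adds directly to the generalization error.

```python
import numpy as np


def train_linear_student(init_scale, n_examples=20, n_params=100,
                         lr=0.1, steps=2000, seed=0):
    """Hypothetical toy setup (not the figure's network): gradient descent on a
    linear student w with fewer examples than parameters, a noiseless linear
    teacher, and initial weights w0 ~ N(0, init_scale^2 I)."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n_examples, n_params)) / np.sqrt(n_params)
    w_teacher = rng.standard_normal(n_params)
    y = X @ w_teacher
    # Drawn last so that X and w_teacher are identical across init_scale values.
    w0 = init_scale * rng.standard_normal(n_params)

    w = w0.copy()
    for _ in range(steps):
        # Gradient of 0.5 * ||X w - y||^2; it always lies in the row space of X.
        w -= lr * X.T @ (X @ w - y)

    # Projector onto the null space of X: directions the data never constrain.
    P_null = np.eye(n_params) - np.linalg.pinv(X) @ X
    return {
        "train_error": float(np.mean((X @ w - y) ** 2)),
        # Parameter-space distance to the teacher, used here as a proxy for test error.
        "gen_error": float(np.sum((w - w_teacher) ** 2) / n_params),
        # How far the null-space component moved during training (should be ~0).
        "frozen_drift": float(np.max(np.abs(P_null @ (w - w0)))),
    }


if __name__ == "__main__":
    for scale in (0.0, 3.0):
        print(scale, train_linear_student(scale))
```

In this sketch both initializations fit the training data, but the null-space part of `w0` is carried through training unchanged. The larger `init_scale` therefore ends with a larger `gen_error`. With at least as many examples as parameters, the null space is empty and this mechanism disappears.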