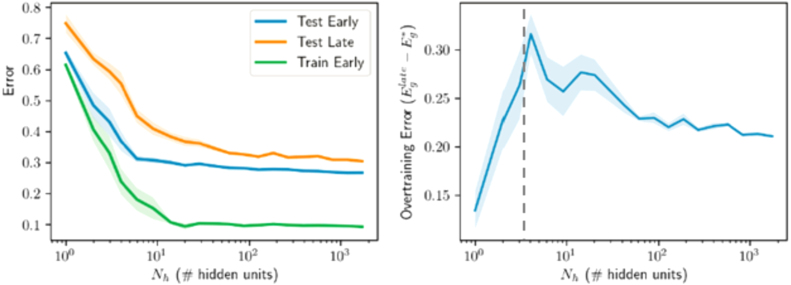

Fig. 13.

Two-layer network trained (without frozen weights) on binary MNIST classification of 7s vs. 9s with 1024 training samples. The qualitative trends identified earlier hold: over-training is a transient phenomenon at intermediate levels of complexity, and large models work well, with no over-fitting observed given optimal early stopping. Note that the over-training is less distinct than before, primarily because computational constraints limited these experiments to 5000 epochs, as opposed to the tens of thousands of epochs used in Figs. 7 and 12.
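To make the two quantities compared in this caption concrete, the following minimal sketch computes an optimally early-stopped test error and the resulting over-training gap from a per-epoch test-error curve. It assumes that "optimal early stopping" means taking the minimum test error over the training run. The function names and the synthetic curve are hypothetical and are not data from the figure.

```python
import math


def optimal_early_stopping(test_error):
    """Return (best_epoch, best_error) for a per-epoch test-error curve.

    Assumption: "optimal" means the oracle choice, i.e. the epoch at which
    the test error is smallest over the whole run.
    """
    best_epoch = min(range(len(test_error)), key=test_error.__getitem__)
    return best_epoch, test_error[best_epoch]


def overtraining_gap(test_error):
    """Final test error minus the early-stopped test error (always >= 0)."""
    _, best_error = optimal_early_stopping(test_error)
    return test_error[-1] - best_error


# Synthetic, hypothetical curve over 5000 epochs: the error first decays,
# then slowly rises again as the model over-trains.
curve = [0.05 + 0.4 * math.exp(-t / 200.0) + 1e-5 * t for t in range(5000)]

best_epoch, best_error = optimal_early_stopping(curve)
gap = overtraining_gap(curve)
print(f"best epoch: {best_epoch}, early-stopped error: {best_error:.4f}")
print(f"over-training gap at the final epoch: {gap:.4f}")
```

A shorter run truncates the rising part of the curve, so the measured gap can only be as large as the rise seen within the epochs actually trained. This is consistent with the less distinct over-training noted for the 5000-epoch experiments.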