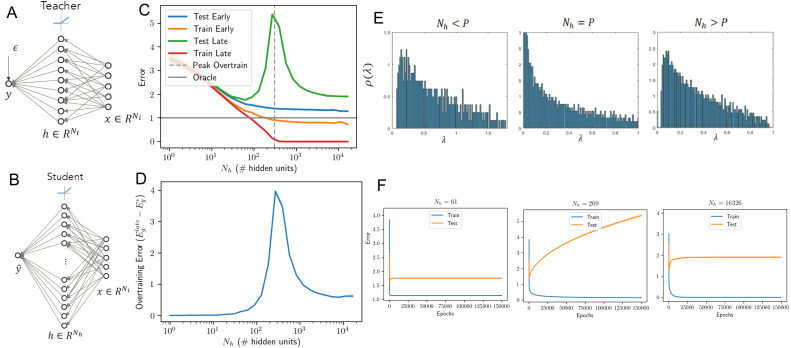

Fig. 7.

Learning from a nonlinear teacher. (A) The teacher network ( ReLUs). (B) The student network ( ReLUs, but only the output weights are trained). (C) Effect of model complexity. Optimal early-stopping error as a function of the number of hidden units for the case and training samples. Shaded regions show the standard error of the mean over 50 random seeds. (D) Overtraining peaks at an intermediate level of complexity, near the point where the number of free parameters in the student network equals the number of training samples (300). (E) The eigenspectrum of the hidden layer of a random nonlinear neural network with samples and an dimensional input space. We consider three cases and find an eigenvalue density similar to a rescaled Marchenko–Pastur distribution when we consider only the small eigenvalues and ignore a secondary cluster of eigenvalues farther from the origin. Left: Having fewer hidden nodes than samples ( hidden units) leads to a gap near the origin and no zero eigenvalues. Center: An equal number of hidden nodes and samples () leaves no gap near the origin, so the eigenvalue density increases as we approach the origin. Right: Having more hidden nodes than samples () leads to a delta-function spike of probability mass 0.5 at the origin, with a gap to the next eigenvalue. (F) Average training dynamics for several models, illustrating overtraining at intermediate levels of complexity.
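Panel E concerns the spectrum that governs learning when only the output weights of a random ReLU network are trained. In that case, training is linear regression on the fixed hidden activations. The sketch below is a minimal illustration under our own assumptions and is not the authors' protocol. It assumes Gaussian inputs, a frozen Gaussian first layer scaled by 1/sqrt(n_in), and the N_h x N_h hidden covariance H^T H / P as the matrix of interest. The sizes (P = 200, n_in = 50), the tolerance `REL_TOL`, and the function names `hidden_covariance_spectrum` and `zero_fraction` are hypothetical. The sketch reproduces only the qualitative rank argument. H has rank at most min(P, N_h), so for N_h > P a fraction 1 - P/N_h of the eigenvalues is exactly zero, which is 0.5 for N_h = 2P. For N_h < P, none are zero. It makes no attempt to fit a Marchenko–Pastur law.

```python
import numpy as np

REL_TOL = 1e-10  # eigenvalues below REL_TOL * (largest eigenvalue) count as numerically zero


def hidden_covariance_spectrum(n_in, n_hidden, n_samples, seed=0):
    """Eigenvalues (ascending) of H^T H / P for a random, frozen ReLU hidden layer (assumed setup)."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n_samples, n_in))                 # P inputs, one per row
    W = rng.standard_normal((n_in, n_hidden)) / np.sqrt(n_in)  # frozen first-layer weights
    H = np.maximum(X @ W, 0.0)                                 # ReLU activations, shape (P, N_h)
    # Training only the output weights is linear regression on H, so its
    # gradient-descent dynamics are set by the eigenvalues of this N_h x N_h matrix.
    return np.linalg.eigvalsh(H.T @ H / n_samples)


def zero_fraction(eigs, rel_tol=REL_TOL):
    """Fraction of eigenvalues that are numerically zero relative to the largest one."""
    return float(np.mean(eigs < rel_tol * eigs.max()))


if __name__ == "__main__":
    P, n_in = 200, 50
    for n_hidden in (P // 2, P, 2 * P):  # fewer, equal, and more hidden units than samples
        eigs = hidden_covariance_spectrum(n_in, n_hidden, P)
        nonzero = eigs[eigs >= REL_TOL * eigs.max()]
        print(f"N_h={n_hidden:4d}  zero fraction={zero_fraction(eigs):.2f}  "
              f"smallest nonzero eigenvalue={nonzero.min():.3g}")
```

For each width, the script prints the fraction of zero eigenvalues and the smallest nonzero eigenvalue. In the N_h = P row, the smallest eigenvalue is typically much closer to zero than in the other two rows. This is consistent with the missing gap in the center panel.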