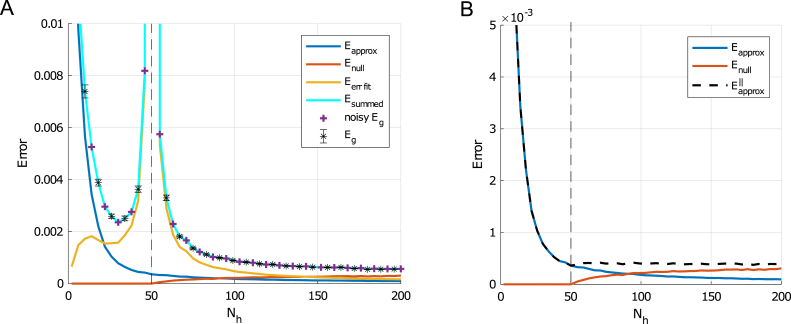

Fig. 9.

Two-layer network generalization error decomposition. Here we use data generated from a two-layer teacher network with ReLU activations, 20 hidden units, and no noise. The student network has fixed random first-layer weights and ReLU activations. (A) shows the approximation error in blue, the null-space error in red, the over-fitting error in gold, and the sum of all three in cyan. Generalization error computed from 5000 new examples is shown as black error bars. Plus signs denote the generalization error with a two-layer teacher with weights and Gaussian noise whose variance is matched to the sum of the approximation and null-space errors. The number of examples used in training is (dashed vertical line), and the number of trials used to compute the error bars is 500. (B) shows the approximation error (blue) and the null-space error (red), as well as the sum of the two (dashed black). (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)
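The teacher/student setup in this caption can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used to produce the figure. Only the teacher's 20 ReLU hidden units, the absence of noise, the fixed random student first layer, and the 5000 test examples come from the caption. The input dimension, student width, training-set size, weight scales, and the choice to fit the student's second layer by ridge-regularized least squares are hypothetical. The sketch estimates only the total generalization error. It does not compute the approximation, null-space, and over-fitting components, because their definitions are not given here.

```python
import random


def relu(z):
    return z if z > 0.0 else 0.0


def features(x, W1):
    """Hidden-layer ReLU activations relu(W1[j] . x) for each hidden unit j."""
    return [relu(sum(w * xi for w, xi in zip(row, x))) for row in W1]


def two_layer(x, W1, w2):
    """Output of a two-layer ReLU network: sum_j w2[j] * relu(W1[j] . x)."""
    return sum(a * h for a, h in zip(w2, features(x, W1)))


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def fit_readout(X, y, W1, ridge=1e-3):
    """Hypothetical training rule: ridge least squares on the second layer only.

    The ridge term keeps the normal equations positive definite, even when a
    random ReLU unit is inactive on every training input.
    """
    F = [features(x, W1) for x in X]
    h = len(W1)
    A = [[sum(f[i] * f[j] for f in F) + (ridge if i == j else 0.0)
          for j in range(h)] for i in range(h)]
    b = [sum(f[i] * t for f, t in zip(F, y)) for i in range(h)]
    return solve(A, b)


def generalization_error(W1_s, w2_s, W1_t, w2_t, dim, n_test, rng):
    """Mean squared student-teacher gap on fresh Gaussian inputs (noiseless teacher)."""
    err = 0.0
    for _ in range(n_test):
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        err += (two_layer(x, W1_s, w2_s) - two_layer(x, W1_t, w2_t)) ** 2
    return err / n_test


def run(seed=0, dim=10, teacher_hidden=20, student_hidden=30,
        n_train=60, n_test=5000):
    """One trial: returns (training MSE, generalization MSE)."""
    rng = random.Random(seed)
    scale = dim ** -0.5  # keeps pre-activations at roughly unit variance

    def weights(rows):
        return [[rng.gauss(0.0, scale) for _ in range(dim)] for _ in range(rows)]

    W1_t = weights(teacher_hidden)
    w2_t = [rng.gauss(0.0, 1.0) for _ in range(teacher_hidden)]
    W1_s = weights(student_hidden)  # student's first layer: random, never trained
    X = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_train)]
    y = [two_layer(x, W1_t, w2_t) for x in X]  # no label noise
    w2_s = fit_readout(X, y, W1_s)
    train_err = sum((two_layer(x, W1_s, w2_s) - t) ** 2
                    for x, t in zip(X, y)) / n_train
    test_err = generalization_error(W1_s, w2_s, W1_t, w2_t, dim, n_test, rng)
    return train_err, test_err
```

Each call to `run()` gives one trial. Averaging `run(seed=s)` over many seeds would mimic the error bars in panel (A). Note that 500 trials at this width are slow in pure Python.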