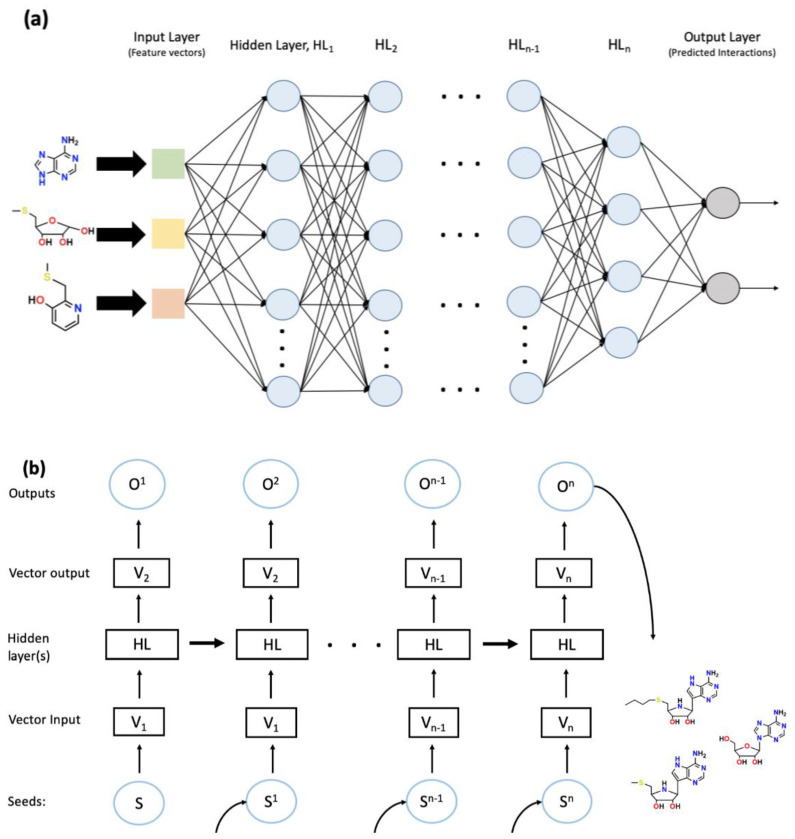

Figure 3.

General scheme of a deep neural network (DNN) (a) and a recurrent neural network (RNN) (b). (a) A DNN consists of an input layer followed by several hidden layers and an output layer. In this case, the input layer takes feature vectors generated by a convolutional network. Information follows a single path from hidden layer 1 (HL1) through HLn, reflecting the feedforward nature of the network. The generated outputs are often processed using supervised learning techniques to identify and collect sensible interactions. (b) An RNN begins with a seed, S, which is fed into the system. The seed is converted into a reference vector, V1, which the HL uses to generate an output vector, V2. V2 is subsequently optimized against training sets and yields the output, O. The outputs generated in this way accumulate into a data set that can be collected. Meanwhile, the HLs pass information from previous steps forward to later ones. One example is chemical structure generation using SMILES string characters as seeds; the desired collected output is then a string of SMILES characters encoding the desired structure. The data set shown in the figure is gathered and analyzed to give the resultant molecules.
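
The two schemes in the figure can be illustrated with a minimal sketch, given here only as an aid to reading the caption and not as the method behind the figure. It assumes a toy character alphabet (`VOCAB`), randomly initialized and untrained weights, and a single recurrent hidden layer with `tanh` activations; all function names are hypothetical. The training step that optimizes V2 is omitted, so the generated strings are not valid SMILES and only show how information flows from S through V1 and V2 to O.

```python
import math
import random

# Hypothetical toy alphabet; real SMILES vocabularies are larger.
VOCAB = list("CNO()=1#")


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def random_matrix(rows, cols, rng, scale=0.1):
    """Untrained weights drawn from a Gaussian (assumption for illustration)."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def feedforward(x, weights):
    """(a) DNN: pass a feature vector through HL1 ... HLn along a single path."""
    h = x
    for W in weights:
        h = [math.tanh(v) for v in matvec(W, h)]
    return h


def softmax(logits):
    """Turn raw scores into a probability distribution over VOCAB."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def generate(seed_char, length, params, rng):
    """(b) RNN: start from seed S and emit one output character O per step."""
    W_xh, W_hh, W_hy = params
    h = [0.0] * len(W_hh)          # hidden state, empty before the first step
    idx = VOCAB.index(seed_char)   # seed S
    out = [seed_char]
    for _ in range(length):
        # V1: one-hot reference vector for the current character
        v1 = [1.0 if i == idx else 0.0 for i in range(len(VOCAB))]
        # V2: hidden-layer output; W_hh @ h carries information from previous steps
        pre = [a + b for a, b in zip(matvec(W_xh, v1), matvec(W_hh, h))]
        h = [math.tanh(v) for v in pre]
        # O: sample the next character from the output distribution
        probs = softmax(matvec(W_hy, h))
        idx = rng.choices(range(len(VOCAB)), weights=probs)[0]
        out.append(VOCAB[idx])
    return "".join(out)


def init_params(hidden, rng):
    """Create the three weight matrices of the recurrent sketch."""
    v = len(VOCAB)
    return (
        random_matrix(hidden, v, rng),       # input  -> hidden
        random_matrix(hidden, hidden, rng),  # hidden -> hidden (recurrence)
        random_matrix(v, hidden, rng),       # hidden -> output
    )
```

Calling `generate("C", 10, init_params(16, random.Random(0)), random.Random(1))` returns an 11-character string that starts with the seed. Repeating the call with different seeds or random states yields the kind of collected output set the caption describes, which in a trained model would then be parsed into candidate molecules.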