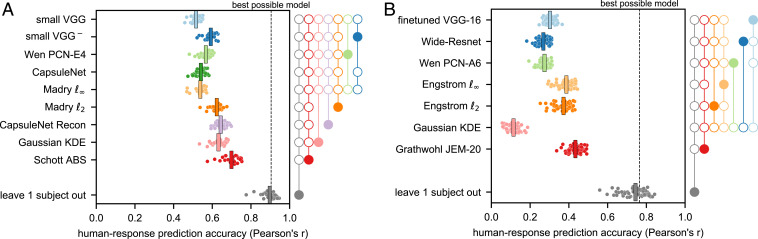

Fig. 4.

The performance of the candidate MNIST (A) and CIFAR-10 (B) models in predicting the human responses to the entire stimulus set. Each dot marks the correlation coefficient between the responses of one individual human participant and one model (Eq. 3). The vertical bars mark across-subject means. The gray dots mark the correlation between each participant’s responses and the mean response pattern of the other participants. The mean of the gray dots (a black bar) is the lower bound of the noise ceiling. The dashed line (“best possible model”) marks the highest across-subject mean correlation achievable by any single model (upper bound of the noise ceiling). Significance indicators (A and B, Right): A solid dot connected to a set of open dots indicates that the model aligned with the solid dot has significantly higher correlation than any of the models aligned with the open dots (P < 0.05, subject-stimulus bootstrap). Testing controlled the familywise error rate at 0.05, accounting for the total number of model-pair comparisons (45 for experiment 1, 28 for experiment 2). For equivalent analyses with alternative measures of human-response prediction accuracy, see SI Appendix, Fig. S7. See SI Appendix, Fig. S8 for the models’ prediction accuracy evaluated separately on controversial and natural stimuli and SI Appendix, Fig. S9 for an evaluation on different ratios of controversial to natural stimuli. The deep generative model (ABS, experiment 1) and the deep hybrid model (JEM-20, experiment 2) (both in red) explain human responses to the combined set of controversial and natural stimuli better than all of the other candidate models. And yet, none of the models account for all explainable variance: Predicting each subject from the subject’s peers’ mean response pattern achieves significantly higher accuracy.
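The per-subject scores and the lower bound of the noise ceiling described in the caption can be illustrated with a minimal sketch. It assumes a plain Pearson correlation across stimuli as a stand-in for Eq. 3, and a hypothetical `responses` array of shape (subjects, stimuli); the function names are illustrative and not taken from the paper. The upper bound ("best possible model") and the bootstrap significance tests are not covered here.

```python
import numpy as np


def pearson(x, y):
    """Pearson correlation between two 1-D response vectors (assumed stand-in for Eq. 3)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc = x - x.mean()
    yc = y - y.mean()
    return float(xc @ yc / np.sqrt((xc @ xc) * (yc @ yc)))


def model_scores(responses, prediction):
    """Correlate one model's predictions with each subject (one colored dot per subject)."""
    responses = np.asarray(responses, dtype=float)
    per_subject = np.array([pearson(r, prediction) for r in responses])
    # The across-subject mean corresponds to the vertical bar in the figure.
    return per_subject, float(per_subject.mean())


def noise_ceiling_lower_bound(responses):
    """Correlate each subject with the mean response pattern of the other subjects."""
    responses = np.asarray(responses, dtype=float)
    n_subjects = responses.shape[0]
    total = responses.sum(axis=0)
    per_subject = np.array([
        # Leave-one-out mean: remove subject s before averaging.
        pearson(responses[s], (total - responses[s]) / (n_subjects - 1))
        for s in range(n_subjects)
    ])
    # The mean of these values (gray dots) is the lower bound of the noise ceiling.
    return per_subject, float(per_subject.mean())
```

Leaving the held-out subject out of the average matters: including a subject's own responses in the group mean would inflate that subject's correlation with it, so the result would no longer be a conservative estimate.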