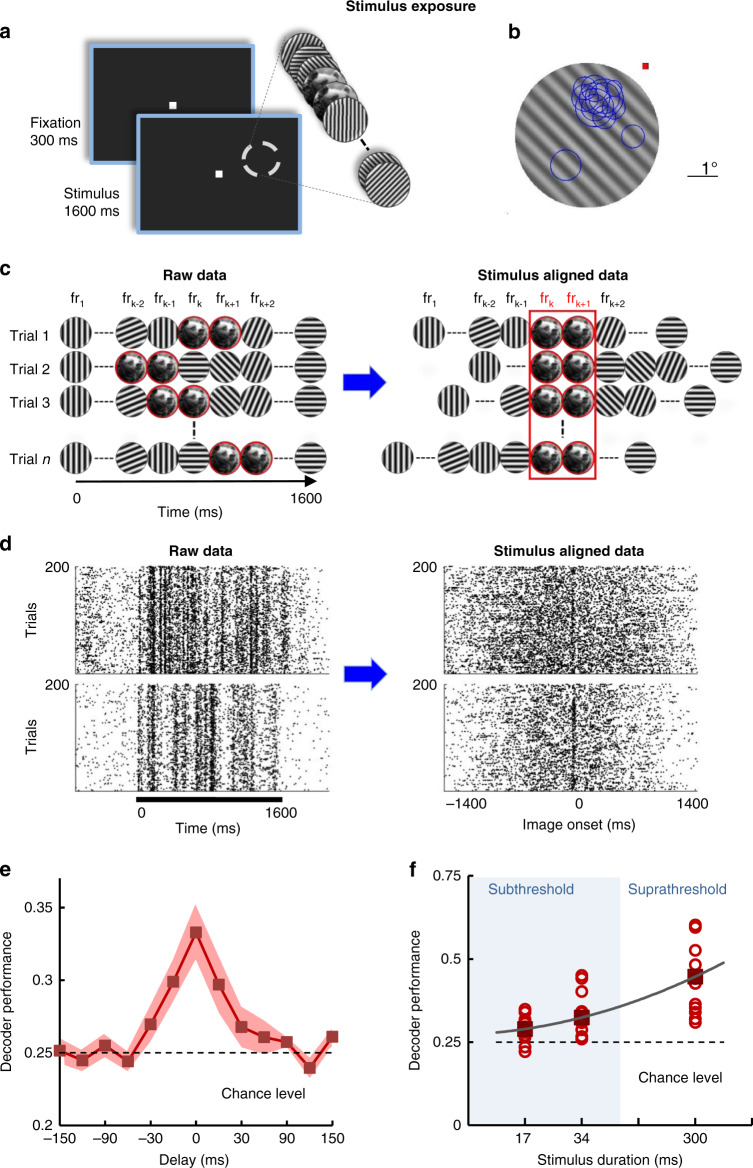

Fig. 2. Neural populations extract stimulus information during exposure.

a Exposure: monkeys performed a passive fixation task while a natural image was flashed for two consecutive frames, embedded at a random time between frames 10 and 86 of the movie (200 consecutive trials; the image was presented at a range of orientations, as in Fig. 3). b Receptive field positions (blue circles) of individual V1 neurons recorded simultaneously in a representative session, shown with reference to a grating frame from the movie stimulus. c Cartoon describing the alignment procedure for analyzing the spike trains during exposure. Spike trains were shifted and aligned with respect to the first frame containing the image (occurring at a random time in each trial; fr_k represents the kth frame in the movie). d Raw raster plots for example neurons recorded during stimulus exposure (left). The black bar marks the movie presentation. The same raster plots after alignment relative to the onset of the first image frame (right). e Performance of a linear classifier (using firing rates calculated over a 34 ms interval) starting at different delays with respect to image onset. Neurons reliably encode image orientation relative to chance level (shuffled trials) and to epochs in which the two-frame intervals contained random gratings (delays >30 ms). Error bars represent s.e.m.; n = 12 sessions. f Decoder performance for images flashed for 17, 34, and 300 ms is significantly higher than chance level (P = 0.0093, P = 0.0048, and P = 0.00049, respectively; Wilcoxon signed-rank test). Circles represent individual sessions (n = 12).
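The alignment in panel c and the 34 ms counting window in panel e can be illustrated with a minimal sketch. This is not the authors' analysis code. The function names, the trial layout, the example spike times, and the 17 ms frame duration (inferred from two frames spanning 34 ms) are all assumptions made for illustration.

```python
# Assumed frame duration: two consecutive frames span ~34 ms, so one frame ~17 ms.
FRAME_MS = 17.0


def align_to_image_onset(spike_times_ms, image_frame, frame_ms=FRAME_MS):
    """Shift one trial's spike times so that t = 0 is the onset of the image.

    spike_times_ms: spike times measured from movie onset (assumption).
    image_frame: 1-based index k of the first movie frame containing the image.
    """
    onset_ms = (image_frame - 1) * frame_ms
    return [t - onset_ms for t in spike_times_ms]


def count_in_window(aligned_ms, delay_ms, width_ms=34.0):
    """Count spikes in [delay_ms, delay_ms + width_ms) relative to image onset."""
    return sum(delay_ms <= t < delay_ms + width_ms for t in aligned_ms)


# Hypothetical trials: the image appears at a different frame in each trial.
trials = [
    {"image_frame": 11, "spikes_ms": [100.0, 180.0, 200.0, 400.0]},
    {"image_frame": 21, "spikes_ms": [50.0, 350.0, 360.0, 380.0]},
]

aligned = [align_to_image_onset(t["spikes_ms"], t["image_frame"]) for t in trials]
counts = [count_in_window(a, delay_ms=0.0) for a in aligned]
```

Repeating `count_in_window` for a range of `delay_ms` values would give one spike count per neuron, trial, and delay. Counts of this kind could then serve as input features to a linear classifier evaluated at each delay.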