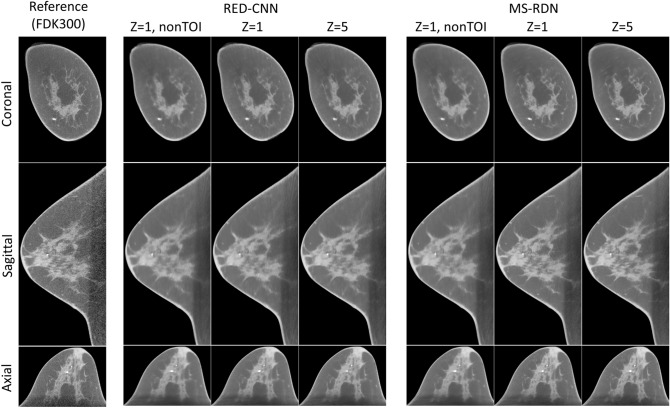

Figure 3.

Comparison with the residual encoder–decoder convolutional neural network (RED-CNN). The proposed MS-RDN was compared with RED-CNN in three configurations: single-slice training without TOI-oriented patch extraction (, nonTOI), single-slice training (), and multi-slice training (). Breast images of the test subject were reconstructed by these RED-CNNs and MS-RDNs from the retrospectively undersampled 100-view data. The reference images were reconstructed with FDK from the 300-view data. The display window is .