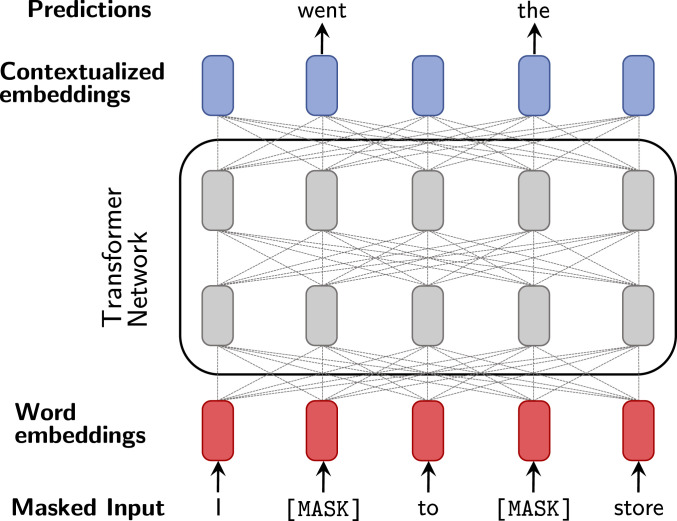

Fig. 3. A high-level illustration of BERT. Words in the input sequence are randomly masked out, and then all words are embedded as vectors in $\mathbb{R}^d$. A Transformer network applies multiple layers of multi-headed attention to these representations. The final representations are used to predict the identities of the masked-out input words.
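The pipeline in the caption (mask, embed, attend) can be sketched in plain Python. This is a minimal toy under stated assumptions, not BERT's implementation. The 15% masking rate follows the original BERT setup but omits its 80/10/10 replacement rule. The embedding table is random rather than learned. `self_attention` is a single head with identity query/key/value projections and has no feed-forward sublayer, residual connection, or layer normalization. The names `mask_tokens`, `embed`, and `self_attention` are hypothetical. The final prediction step is omitted because it needs a trained output layer.

```python
import math
import random

MASK = "[MASK]"  # placeholder token, named after the BERT convention


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Randomly replace tokens with MASK (assumed rate: 15%, no 80/10/10 rule).

    Returns the masked sequence and a dict mapping each masked position
    to its original token, i.e. the words the model must predict.
    """
    rng = random.Random(seed)
    masked = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked[i] = MASK
            targets[i] = tok
    return masked, targets


def embed(tokens, d=4, seed=0):
    """Map each distinct token to a fixed random vector in R^d.

    Hypothetical stand-in for a learned embedding table.
    """
    rng = random.Random(seed)
    table = {}
    vectors = []
    for tok in tokens:
        if tok not in table:
            table[tok] = [rng.gauss(0.0, 1.0) for _ in range(d)]
        vectors.append(table[tok])
    return vectors


def self_attention(vectors):
    """Single-head scaled dot-product attention with identity projections.

    Each output is a softmax-weighted average of all input vectors,
    weighted by scaled dot-product similarity to the current vector.
    """
    d = len(vectors[0])
    outputs = []
    for q in vectors:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        outputs.append([sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)])
    return outputs


# Usage: mask a sentence, embed it, and stack a few toy attention layers.
tokens = "the chef who ran to the store was out of food".split()
masked, targets = mask_tokens(tokens, seed=1)
hidden = embed(masked, d=8)
for _ in range(3):
    hidden = self_attention(hidden)
```

In a trained model, the vectors in `hidden` at the positions listed in `targets` would feed an output layer that scores every vocabulary word, and training would push up the score of the original token.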