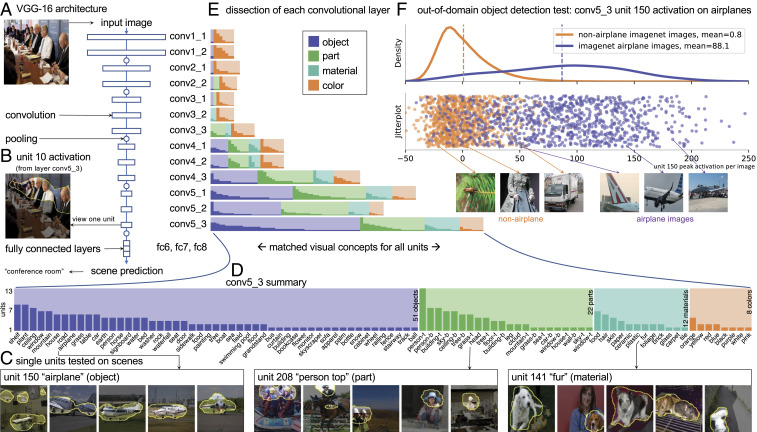

Fig. 1.

The emergence of single-unit object detectors within a VGG-16 scene classifier. (A) VGG-16 consists of 13 convolutional layers, conv1_1 through conv5_3, followed by three fully connected layers: fc6, -7, -8. (B) The activation of a single filter on an input image can be visualized as the region where the filter activates beyond its top 1% quantile level. (C) Single units are scored by matching high-activating regions against a set of human-interpretable visual concepts; each unit is labeled with its best-matching concept and visualized with maximally activating images. (D) Concepts that match units in the final convolutional layer are summarized, showing a broad diversity of detectors for objects, object parts, materials, and colors. Many concepts are associated with multiple units. (E) Comparing all of the layers of the network reveals that most object detectors emerge at the last convolutional layers. (F) Although the training set contains no object labels, unit 150 emerges as an airplane object detector that activates much more strongly on airplane objects than nonairplane objects, as tested against a dataset of labeled object images not previously seen by the network. The jitter plot shows peak activations for the unit on randomly sampled 1,000 airplane and 1,000 nonairplane Imagenet images, and the curves show the kernel density estimates of these activations.