Abstract

There has been widespread concern over the design of the Merit-Based Incentive Payment System (MIPS) since its authorization under the Medicare Access and CHIP Reauthorization Act (MACRA) in 2015. Using detailed performance data from 2017, the first implementation year of MIPS, we found that while 90 percent of participating clinicians reported performance equal to or greater than the low performance threshold of 3 out of 100 (a calculated composite score), almost half of clinicians did not participate in at least one of the program's three categories (quality, advancing care information, and improvement activities). Participation in each category explained 86 percent of the total variance in clinicians' overall scores, while actual performance explained just 14 percent, because high scores were easy to achieve within each category. Still, 74 percent of clinicians who only partially participated in the program received positive payment adjustments. These findings underline concerns that MIPS' design may have been too flexible to effectively incentivize clinicians to make incremental progress across all targeted aspects of the program. In turn, this flexibility is likely to lead to resistance when payment penalties become more severe in 2022, as required by MIPS' authorizing legislation.

Introduction

In 2017, the Merit-based Incentive Payment System (MIPS) was implemented to streamline several physician-focused quality improvement programs.1 In MIPS' first year, clinicians were evaluated by combining performance scores across three categories: Quality, Advancing Care Information (ACI), and Clinical Practice Improvement Activities (IA).2 Detailed information on clinician performance and participation in each of these categories has not been reported, despite summative reports from CMS showing high participation rates and high composite scores in the first two years of MIPS.3,4 A more detailed understanding of clinician participation in MIPS could show how clinicians pursued quality initiatives under the incentives created by the program and provide insight into their progress toward policymakers' overarching quality improvement goals.

Since its authorization under the Medicare Access and CHIP Reauthorization Act (MACRA) in 2015, MIPS has faced criticism about its structure and likely impact. Several groups have highlighted the potential burden of participating in the program, including the administrative cost of reporting5 and potential inequities arising from quality measures.5,6 Similarly, physicians have echoed concerns about administrative costs and emphasized challenges for smaller and rural practices.7 Alongside concerns over the effort involved in participation, researchers and policy groups have questioned whether MIPS' incentives are strong enough to drive engagement in quality improvement.5,8 These critiques have been rooted in the ease of the program (at least in its early years) and the limited rewards associated with high performance. In the first year of MIPS, the Centers for Medicare and Medicaid Services (CMS) reported that 93 percent of clinicians achieved the very low threshold score of 3 out of 100, thereby avoiding penalties.3 Because financial incentives were budget neutral (i.e. the amount collected in penalties determined the amount available for positive payments) and avoiding penalties was easy, the maximum positive payment adjustment in the first year was just 1.88 percent, creating limited incentives to improve.8

Together, the low threshold, small incentive payments, and combinatorial nature of MIPS gave the program great flexibility. In contrast to the separate programs that MIPS combined and replaced (e.g. the Electronic Health Records Incentive Program, or "Meaningful Use"), which individually penalized clinicians for non-participation, MIPS allowed clinicians to score quite highly without participating in all of its categories. In fact, because clinicians who received an exemption from the ACI or Quality category had their composite score re-weighted across the remaining categories, it was possible to achieve a perfect score of 100 in MIPS while not participating in one or more categories.

How this flexibility shaped clinicians' participation has been obscured by the high-level participation and composite performance statistics released by CMS.3,4 While it is clear that the incentives were small relative to their potential,9 the high scores reported by CMS may suggest that clinicians performed very well across categories despite weak financial incentives. These summary statistics may overlook specific behaviors that imply a limited impact of MIPS, namely selective participation in program categories and widespread use of exemptions, neither of which has been empirically investigated.

The purpose of this study is to better understand how eligible clinicians achieved payment adjustments and composite scores in the first year of MIPS. We used detailed data from Physician Compare to examine the distribution of composite MIPS scores as well as participation rates and scores across each of the three performance categories. To our knowledge, this is the first study of MIPS performance to utilize category-specific scores to detail the underlying construction of MIPS composite scores, revealing how clinicians participated in the first year of this important quality program.

STUDY DATA AND METHODS

Data

Starting in 2017, clinician performance on MIPS influenced future Medicare reimbursement rates, and in July 2019, CMS made 2017 MIPS performance data publicly available through the Physician Compare database.10 The 2017 performance year scores determined payment adjustments to clinicians' 2019 Medicare Part B payments. While CMS had previously released limited information through press releases and infographics,3 the public availability of detailed clinician- and group-level data provides a new window into participation and performance within this incentive program, which affects approximately one million eligible clinicians nationwide. Before analysis, we cleaned and validated the raw data published by CMS.11 Our data cleaning and validation steps are described in the online Appendix sections Population Construction and Final and Category Performance Measures.12

The data include the overall composite score for each clinician and scores for each of the three categories that make up the composite score. In 2017, quality measures accounted for 60 percent of the composite score, ACI for 25 percent, and IA for 15 percent, except for clinicians who applied for and received exemptions from the quality or ACI categories. Scores in each category were converted to a percentage, multiplied by the category weight, and summed to produce the composite MIPS score, out of a possible 100 points.2
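The weighting rule above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not CMS's scoring code: the function name `composite_score` is hypothetical, each category score is assumed to already be expressed as a fraction of that category's maximum, and for exempt categories we assume, purely for illustration, that the exempt weight is spread proportionally over the remaining categories (CMS's actual 2017 re-weighting rules may differ).

```python
# Designed 2017 MIPS category weights (percent of the composite score).
WEIGHTS = {"quality": 60.0, "aci": 25.0, "ia": 15.0}


def composite_score(category_frac, exempt=frozenset()):
    """Return a composite MIPS score out of 100.

    category_frac maps a category name to its score as a fraction of the
    category maximum (0.0 to 1.0); missing or None entries count as zero.
    exempt lists categories whose weight is dropped. ASSUMPTION: the dropped
    weight is redistributed proportionally over the remaining categories,
    a simplification rather than CMS's exact re-weighting rule.
    """
    active = {c: w for c, w in WEIGHTS.items() if c not in exempt}
    total_weight = sum(active.values())
    score = 0.0
    for category, weight in active.items():
        frac = category_frac.get(category) or 0.0
        # Rescale so that the active weights still sum to 100 points.
        score += frac * weight * 100.0 / total_weight
    return round(score, 2)


print(composite_score({"aci": 1.0, "ia": 1.0}))                 # 40.0
print(composite_score({"ia": 0.5}))                             # 7.5
print(composite_score({"quality": 1.0, "ia": 1.0}, {"aci"}))    # 100.0
```

Under these assumptions, full marks in ACI and IA with no quality participation yields 40, and half credit in IA alone yields 7.5, the same values as two of the clustered scores reported in the results.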

Analysis

For our analysis, we assigned MIPS performance scores to 'clinicians' following CMS' approach, which defined a clinician as a combination of individual and practice-level identifiers [here, combinations of National Provider Identifiers and Organization Provider Enrollment, Chain, and Ownership System (PECOS) Associate Control (PAC) Identifiers].3 We then visualized the distribution of composite scores and calculated the payment adjustment for each score. We grouped clinicians by payment adjustment (negative, neutral, positive, or positive & exceptional) based on CMS composite score cutoffs (less than 3 for negative, exactly 3 for neutral, greater than 3 for positive, and 70 and above for positive & exceptional). Positive payments were constrained by budget neutrality, and clinicians scoring just below 70 (the highest 'positive' scores that did not qualify as 'exceptional') were eligible for a payment bonus of only 0.20%. Positive & exceptional performance earned additional payments from a $500 million bonus fund, such that clinicians with the maximum score of 100 received a bonus of 1.88%. To measure selective participation in the program, we calculated the proportion of clinicians who had no score or a score of zero in each of the three categories, and the proportion not participating in two or more categories. We considered clinicians as "not participating" in a category if they received a score of zero or "NA," or if they received an exemption from that category.
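The grouping cutoffs and the participation rule stated above can be sketched in Python as follows. This is an illustration, not the authors' code: the function names and the record layout (one dict per clinician with hypothetical keys `quality`, `aci`, `ia`, and an optional `exempt` set) are assumptions rather than the Physician Compare schema, and the sample records are made up.

```python
# Composite-score cutoffs for the 2017 performance year, as stated in the text.
NEUTRAL_SCORE = 3
EXCEPTIONAL_CUTOFF = 70
CATEGORIES = ("quality", "aci", "ia")


def adjustment_group(score):
    """Map a composite score to the payment-adjustment groups used in the text."""
    if score < NEUTRAL_SCORE:
        return "negative"
    if score == NEUTRAL_SCORE:
        return "neutral"
    if score < EXCEPTIONAL_CUTOFF:
        return "positive"
    return "positive & exceptional"


def participated(category_score, exempt):
    """A zero, a missing ("NA") score, or an exemption all count as not participating."""
    if exempt or category_score is None:
        return False
    return category_score > 0


def nonparticipation_rates(clinicians):
    """Share of clinicians not participating in each category, and in two or more.

    Each clinician is a dict with hypothetical keys "quality", "aci", "ia"
    (category score or None) and an optional "exempt" set of category names.
    """
    n = len(clinicians)
    skipped = [
        {cat for cat in CATEGORIES
         if not participated(c.get(cat), cat in c.get("exempt", set()))}
        for c in clinicians
    ]
    rates = {cat: sum(cat in s for s in skipped) / n for cat in CATEGORIES}
    rates["two_or_more"] = sum(len(s) >= 2 for s in skipped) / n
    return rates


# Made-up records for illustration only.
sample = [
    {"quality": 90, "aci": 100, "ia": 100},
    {"quality": None, "aci": 100, "ia": 100},
    {"quality": 0, "aci": None, "ia": 100},
    {"quality": 80, "aci": None, "ia": 100, "exempt": {"aci"}},
]
print(adjustment_group(40), adjustment_group(70))  # positive positive & exceptional
print(nonparticipation_rates(sample))
```

Treating an exemption exactly like a zero or missing score mirrors the Methods' choice to count exempted clinicians as not participating.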

We then examined the distribution of scores within each category, along with the most frequently observed composite scores, to understand how participation and performance in each category shaped composite scores and therefore overall performance. To quantify the degree to which participation, rather than performance, determined a clinician's composite score, we conducted a multiple linear regression with the clinician's composite score as the dependent variable and three binary independent variables indicating participation in each of the three categories. The adjusted R-squared of this model reflects the share of variation in composite scores that can be explained by category participation alone.
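A minimal sketch of this participation-only regression in Python with NumPy follows, assuming the model includes an intercept (the text does not say which software the authors used). The function name `participation_r2` and the toy records are hypothetical; the toy data are invented to show the mechanics, not drawn from Physician Compare.

```python
import numpy as np


def participation_r2(composite, participation):
    """Regress composite scores on an intercept plus 0/1 participation flags.

    composite: sequence of composite scores (0-100).
    participation: sequence of (quality, aci, ia) flags, 1 = participated.
    Returns (r_squared, adjusted_r_squared).
    """
    y = np.asarray(composite, dtype=float)
    X = np.column_stack([np.ones(len(y)), np.asarray(participation, dtype=float)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)  # ordinary least squares fit
    residuals = y - X @ beta
    ss_res = float(residuals @ residuals)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    r2 = 1.0 - ss_res / ss_tot
    n, k = X.shape  # k = intercept + three participation indicators
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - k)
    return r2, adj_r2


# Toy, made-up records: maximal scores in every category entered, plus one
# clinician who participated in all three categories but scored 90.
flags = [(1, 1, 1), (0, 1, 1), (0, 0, 1), (0, 0, 0),
         (1, 0, 0), (1, 1, 0), (1, 0, 1), (0, 1, 0), (1, 1, 1)]
scores = [100, 40, 15, 0, 60, 85, 75, 25, 90]
print(participation_r2(scores, flags))
```

In this setup, whatever variance the participation indicators leave unexplained is the portion the text attributes to performance within categories.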

Finally, we calculated the share of each clinician's composite score contributed by performance within each category (i.e. the realized contribution), averaged these shares across clinicians, and compared them with the designed weight of each category (i.e. 60, 25, and 15 percent for Quality, ACI, and IA, respectively).
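The realized-contribution calculation can be sketched in Python as below. The function name, the input layout (points each category added to the composite), and the decision to skip clinicians with a composite of zero are assumptions made for illustration; the example records are invented.

```python
DESIGNED = {"quality": 60.0, "aci": 25.0, "ia": 15.0}


def mean_realized_contribution(weighted_points):
    """Average share (percent) of each clinician's composite score from each category.

    weighted_points: list of dicts mapping a category to the points it added
    to the composite (e.g. up to 60 for quality). ASSUMPTION: clinicians with
    a composite of zero are skipped, because their shares are undefined.
    """
    totals = dict.fromkeys(DESIGNED, 0.0)
    n = 0
    for points in weighted_points:
        composite = sum(points.get(cat, 0.0) for cat in DESIGNED)
        if composite == 0:
            continue
        n += 1
        for cat in DESIGNED:
            totals[cat] += points.get(cat, 0.0) / composite
    return {cat: 100.0 * total / n for cat, total in totals.items()} if n else {}


example = [
    {"quality": 60, "aci": 25, "ia": 15},  # full participation, maximal scores
    {"aci": 25, "ia": 15},                 # skipped quality: composite of 40
    {"ia": 15},                            # IA only: composite of 15
    {},                                    # composite of 0, excluded
]
realized = mean_realized_contribution(example)
for cat, weight in DESIGNED.items():
    print(f"{cat}: realized {realized[cat]:.1f}% vs designed {weight:.0f}%")
```

Because each clinician's shares are averaged with equal weight, clinicians who participated only in IA pull the realized IA contribution well above its designed 15 percent.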

Limitations

Our study has several limitations. First, we cannot observe clinicians' reasons for their participation choices across categories. These may include an inability to record the measures required for a given category or a lack of technical sophistication (particularly relevant to ACI). Clinicians have also reported difficulty understanding the precise requirements for MIPS reporting and the multitude of options for reporting measures to CMS.7 This complexity could have led to non-participation or to inaccurately recorded scores. Surveys have also shown low awareness of MIPS as a program,13 which may have prevented clinicians unaware of the program or its requirements from participating in certain categories. Partly because of this limitation, we focus on observed participation in MIPS and only speculate about why clinicians did or did not participate in the program or in specific categories. The same logic underpins our choice to classify clinicians with exemptions as "not participating": we sought to treat all non-participating clinicians equally. Second, because MIPS combined three separate quality programs and category scores aggregate multiple measures within each category, we lack directly comparable historical or baseline data for the clinicians in our sample. This makes it impossible to determine whether, for a given clinician, performance increased, decreased, or remained constant. As a result, our findings focus on participation across categories rather than performance. Third, large amounts of data were missing for specific performance measures, so we could not examine the composition of the three category scores (see Appendix section Individual Performance Measures for more detail).12 This limited granularity led us to focus on participation and category-level scores only. We believe MIPS would benefit from making individual performance metrics more transparent.

STUDY RESULTS

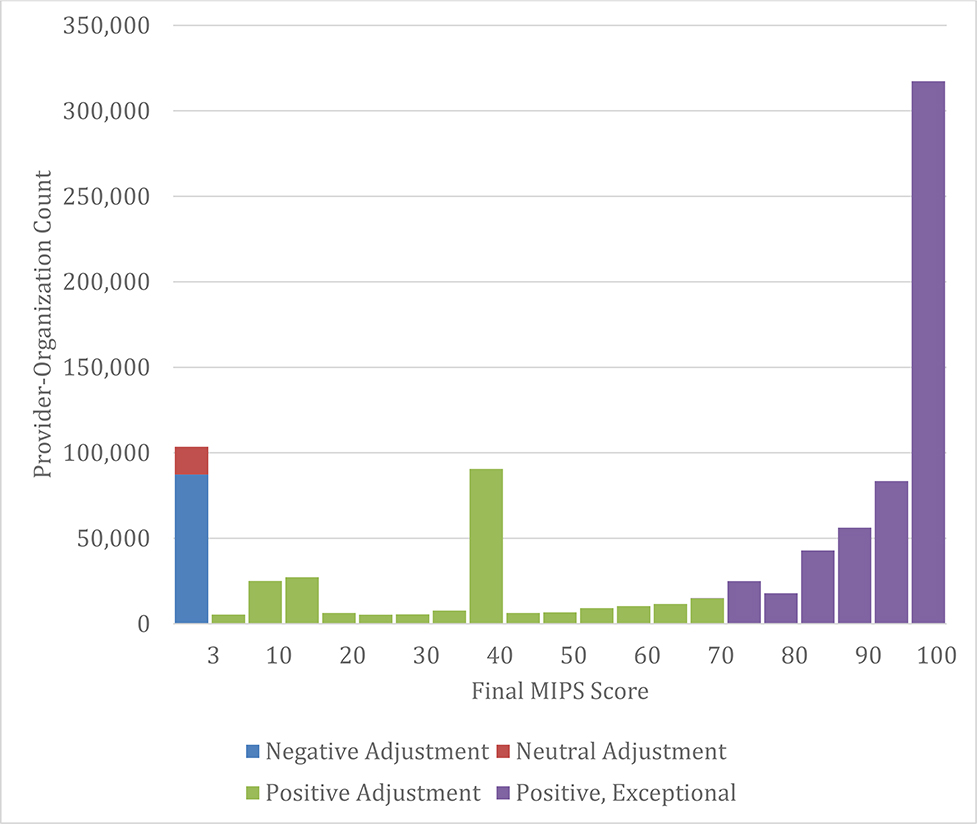

In our analysis, 90 percent of clinicians met or exceeded the threshold score of 3, and 62 percent achieved "exceptional performance." The median composite score was 86 out of 100, and 21 percent scored a perfect 100 (Exhibit 1). The high-frequency bars in the Exhibit 1 histogram reflect clustering of clinicians who did not participate in specific categories: for instance, many clinicians received a score of 40 by participating in ACI and IA (weighted 25 and 15 percent, respectively) but not in the quality component (weighted 60 percent). Our figures are broadly consistent with those from CMS: in its press materials, CMS announced that 95 percent of eligible clinicians reported performance equal to or greater than the low performance threshold of 3 out of 100.3

Exhibit 1. Distribution of MIPS Composite Scores and Payment Adjustments.

Source: Authors’ analysis of 2017 MIPS performance data from CMS Physician Compare database.

Notes: In the first year of MIPS, clinicians scoring below the performance threshold of 3 received negative payment adjustments to Medicare Part B reimbursements. Those scoring exactly 3 received a neutral adjustment, while those scoring above 3 received positive adjustments. Clinicians scoring 70 or above were also eligible for a share of a $500 million "exceptional performance" bonus payment adjustment. In 2017, the maximum positive payment adjustment a clinician could obtain was 1.88 percent.4 The high frequency at 15 points reflects clinicians who achieved the maximum points in Improvement Activities (IA) but did not participate in Quality or Advancing Care Information (ACI); the high frequency at 40 points reflects clinicians who achieved the maximum in IA and ACI but did not participate in the Quality component.

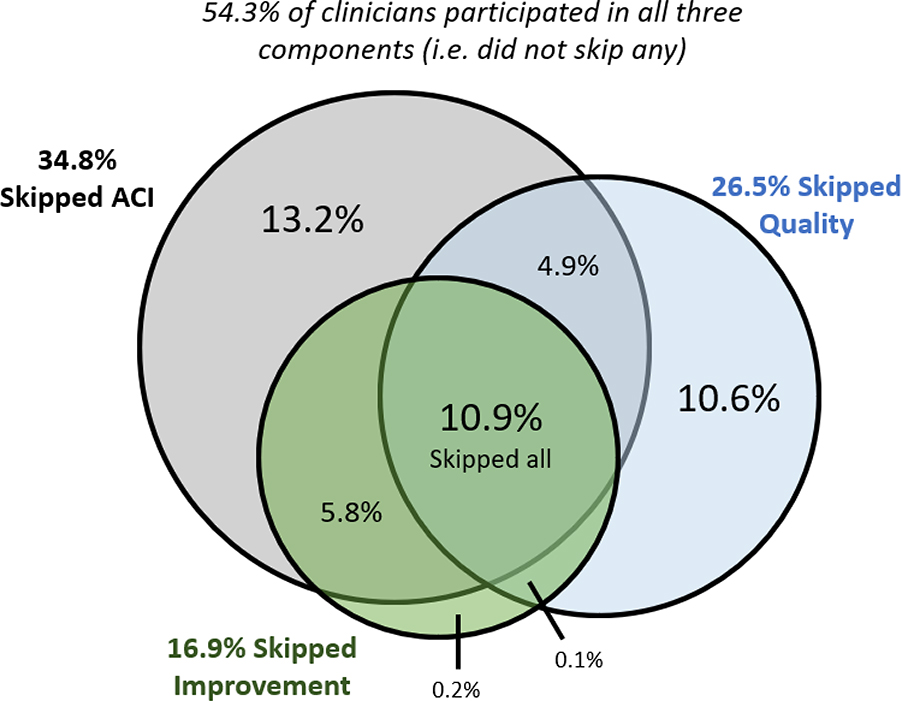

The high-level figures reported by CMS and replicated above reflect overall participation in the program, which could be achieved by participating in just one of the three categories. In the detailed Physician Compare data, however, participation in each category of MIPS was substantially lower than this summary figure suggests. In total, almost half (45.6 percent) of participating clinicians did not participate in at least one of the three underlying categories (i.e. did not report, received a score of zero, or received an exemption) (Exhibit 2). Of all clinicians who reported to MIPS, 34.8 percent did not participate in the ACI category (37.3 percent of these non-participating clinicians received an exemption), 26.5 percent did not participate in the quality category (1.5 percent of these non-participating clinicians received an exemption), and 16.9 percent did not participate in improvement activities (CMS did not offer exemptions from this category in 2017). Eleven percent of reporting clinicians received a zero in all three categories. In total, 309,560 clinicians (35.2 percent) received scores below 100 at least in part because they did not participate in one or more categories and did not have an exemption from that category (data not shown).

Exhibit 2. Clinician Participation in Each Category of MIPS.

Source: Authors’ analysis of 2017 MIPS performance data from CMS Physician Compare database.

Notes: The circles show clinicians not participating in each category of MIPS in 2017. Overlapping sections show the percentages of clinicians not participating in two or more categories. For example, 5.8 percent of clinicians participated in neither improvement activities nor advancing care information (ACI), 10.9 percent did not participate in any category, and 13.3 percent did not participate in the ACI category only.
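Each region of a three-circle diagram like Exhibit 2 corresponds to one exact pattern of skipped categories. The Python sketch below tallies those patterns; the function names and the input format (one set of skipped category names per clinician) are hypothetical, and the toy data are invented for illustration.

```python
from collections import Counter


def nonparticipation_patterns(skipped):
    """Share of clinicians in each exact non-participation pattern.

    skipped: one set per clinician naming the categories ("quality", "aci",
    "ia") that clinician did not participate in; an empty set means the
    clinician participated in all three. Each distinct pattern corresponds
    to one region of a three-circle Venn diagram.
    """
    n = len(skipped)
    counts = Counter(frozenset(s) for s in skipped)
    return {pattern: count / n for pattern, count in counts.items()}


def share_skipping_any(skipped):
    """Share of clinicians missing at least one category."""
    return sum(1 for s in skipped if s) / len(skipped)


toy = [set(), {"aci"}, {"aci"}, {"aci", "ia"}, {"quality", "aci", "ia"}]
patterns = nonparticipation_patterns(toy)
print(patterns[frozenset({"aci"})])   # 0.4  (ACI only)
print(share_skipping_any(toy))        # 0.8
```

Using `frozenset` keys makes each pattern hashable and order-independent, so {"aci", "ia"} and {"ia", "aci"} fall in the same region.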

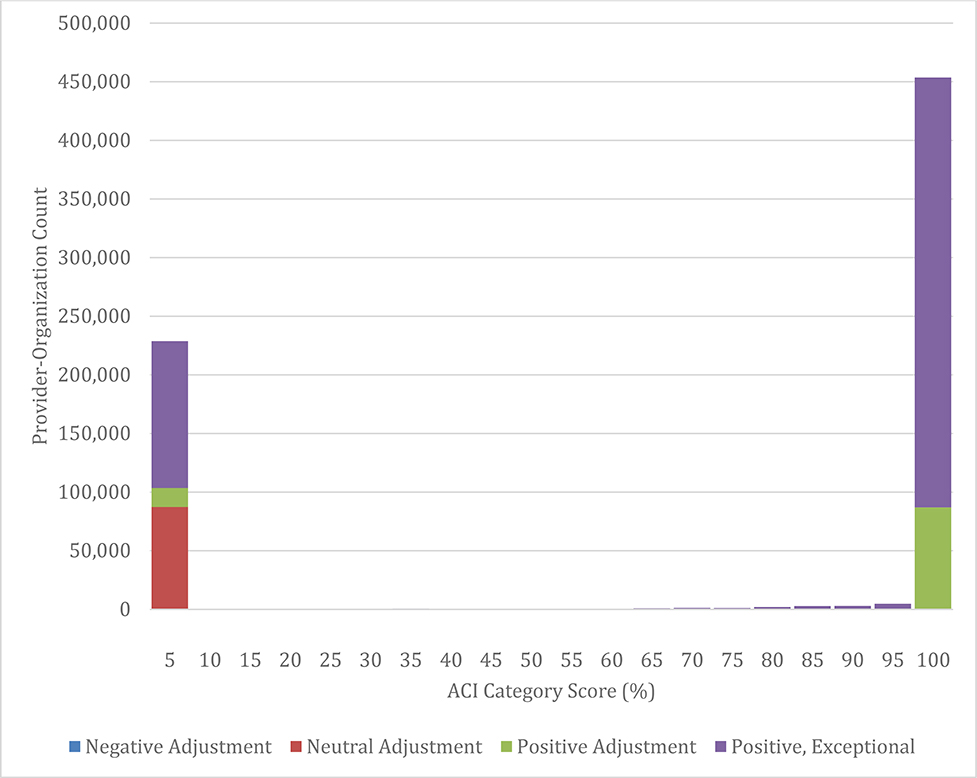

Most clinicians who participated in a given category achieved very high scores in it (Exhibit 3). For instance, 218,403 of the 646,472 clinicians (34 percent) who participated in the quality category scored a perfect 100, and 383,086 (59 percent) scored above 90. In ACI, 405,825 of 572,735 participating clinicians (71 percent) received a score of 100, and 484,833 (85 percent) scored above 90. In improvement activities, 694,429 of 729,274 participating clinicians (95 percent) scored 100.

Exhibit 3. Distribution of ACI Category Scores in MIPS Year 1.

Source: Authors’ analysis of 2017 MIPS performance data from CMS Physician Compare database.

Notes: The zero bar at the left of the distribution includes clinicians who did not participate in ACI and therefore received no score; for ACI, this includes 306,512 non-participating clinicians. Scores are scaled to a percentage out of 100 rather than the points available for each component. Similar distributions of category scores for Quality and Improvement Activities are in Appendix Exhibit A2.12

Because clinicians who participated in a category generally achieved high scores in it, the overall composite score was largely driven by which categories clinicians participated in. In the regression analysis, participation (or lack thereof) across categories explained 86 percent of the variation in clinicians' composite scores (R-squared of 0.86). Only the remaining 14 percent of the variation in clinicians' MIPS scores was therefore related to their performance within categories (data not shown).

The importance of participation to overall scores is well illustrated by six composite scores that are far more common than would be expected by chance and together account for 45.9 percent of all participating clinicians' composite scores (Exhibit 4). For instance, 8.6 percent of participating clinicians achieved a composite score of 40 by participating only in the ACI and IA categories. Similarly, 20.8 percent of clinicians achieved a composite score of 100. These clinicians reached this score in one of three ways: by participating in all three categories and achieving the maximum score in each; by participating only in the quality and improvement activities categories and receiving an exemption from the ACI category (2.4 percent of all clinicians; 11.7 percent of clinicians scoring 100); or by participating only in the ACI and IA categories and receiving an exemption from the quality category (0.3 percent of clinicians; 1.5 percent of clinicians scoring 100).
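Identifying the most frequent composite scores and their combined share, as in Exhibit 4, amounts to a frequency count. The Python sketch below assumes composite scores are available as a flat list; the function name, the rounding step (added so floating-point noise does not split one score into several bins), and the toy scores are illustrative assumptions.

```python
from collections import Counter


def most_common_scores(composites, k=6, ndigits=2):
    """Return the k most frequent composite scores and their combined share.

    Scores are rounded first (an assumption) so that floating-point noise
    does not split one score such as 7.5 into several bins.
    """
    counts = Counter(round(score, ndigits) for score in composites)
    top = counts.most_common(k)
    share = sum(count for _, count in top) / len(composites)
    return top, share


toy_scores = [100, 100, 100, 40, 40, 0, 15, 7.5, 3, 55.25]
top, share = most_common_scores(toy_scores, k=3)
print(top)    # [(100, 3), (40, 2), (0, 1)]
print(share)  # 0.6
```

`Counter.most_common` breaks ties by first appearance, so with real data, ties among rare scores near the cutoff of k could change which score is listed last.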

Exhibit 4. Most Common Composite Scores in 2017 MIPS

| Composite MIPS Score | # of Clinicians | Percent of Reporting Clinicians | Explanation |
|---|---|---|---|
| 100 | 183,029<sup>a</sup> | 20.8<sup>b</sup> | Clinicians achieved the maximum possible score and either participated in all three categories or received an exemption from Quality or ACI and were re-weighted. |
| 0 | 87,274 | 9.9 | Clinicians reported to MIPS but did not receive a positive score in any individual category. |
| 40 | 75,406 | 8.6 | Clinicians participated only in ACI and IA, achieved the maximum possible score in each, and did not receive an exemption from the quality category. |
| 15 | 21,641 | 2.5 | Clinicians participated only in the IA category, received the maximum possible score, and were not exempt from the quality category. |
| 7.5 | 19,777 | 2.3 | Clinicians participated only in the IA category, received half credit, and were not exempt from the quality category. |
| 3 | 16,215 | 1.8 | Clinicians reported the minimum number of quality measures for few patients. |
| Total | 403,342 | 45.9 | |

Source: Authors’ analysis of 2017 MIPS performance data from CMS Physician Compare database.

Notes:

<sup>a</sup> 15,384 of these clinicians received an exemption.

<sup>b</sup> 8.4 percent of these clinicians received exemptions.

Given the varied participation rates across categories, it is unsurprising that each category's share of realized composite scores differed from its MIPS-designed weight. On average, the IA component contributed more to realized scores than planned: 23 percent compared with MIPS' planned 15 percent, or 54 percent more. Correspondingly, Quality and ACI each contributed less than planned (Appendix Exhibit A3).12

DISCUSSION

We analyzed detailed data from the first year of MIPS, the performance-based payment system for Medicare Part B clinicians. Though CMS reported that over 90 percent of eligible clinicians participated in MIPS, we found that almost half of participating clinicians did not participate in all three scoring categories. High composite scores were achieved because the vast majority of clinicians who did participate in a category achieved very high scores in it. Overall performance on MIPS was therefore driven by participation in each category of the program rather than by performance within categories, raising concerns that the first year of MIPS did not position clinicians to improve incrementally across all three categories.

The first year of the program was meant to serve as a transitional year, and CMS may have intended for clinicians to avoid payment penalties with relative ease even when participating selectively. Still, it is unlikely that CMS anticipated selective participation in categories at the rates we observe, and at the very least, these rates are not apparent from previous reports. Indeed, such frequent selective participation runs contrary to CMS' stated intent for MIPS to encourage simultaneous focus on three categories of performance.13 In our data, the most frequently skipped category was ACI, which likely posed the most challenging initial hurdle to participation and potentially required costly upgrades to clinicians' information technology systems. Still, one quarter of clinicians did not participate in the quality category, suggesting that quality reporting requirements alone may have posed a substantial barrier.

While many factors may have influenced selective participation in MIPS, one key factor was likely clinicians' perception of the costs and benefits of participating. For most clinicians, there was minimal additional benefit to participating in all categories: among the 395,533 clinicians who did not participate in at least one category, 292,009 (73.8 percent) nevertheless received a positive payment adjustment, with 19.4 percent receiving an "exceptional" score warranting a bonus payment. The low marginal benefit of participation was partly due to the low threshold for positive payment adjustments, which was set at just 3 of 100 possible points. The relatively small maximum positive adjustment and the two-year delay in receiving payment14 likely further diminished the salience of positive financial incentives. Selective participation may also have been driven by the high perceived cost and complexity of participating, as noted in a recent physician survey.7 Ongoing monitoring of participation in all MIPS categories will be critical to achieving the program's goal of simultaneous focus on these performance categories.

We found that the realized contribution of each category to clinicians' composite scores differed from the contributions planned for the first year of MIPS, with improvement activities contributing substantially more than the intended 15 percent. Notably, this emphasis on improvement activities is consistent with a nationwide survey in which physicians suggested that improvement activities should count for 28 percent of the composite score rather than the planned 15 percent for both 2017 and 2018.13

POLICY IMPLICATIONS

Our findings have several implications that underscore concerns raised by the Medicare Payment Advisory Commission (MedPAC) and others in the lead-up to MIPS implementation.5 Our results suggest the future of MIPS might be hindered by the limited incentives for clinicians to improve in early years, and they underline important questions about whether the program can be improved or, as MedPAC recommended, should not be pursued.

First, our results highlight the importance of considering the various routes clinicians can take to achieve baseline performance scores when developing program requirements. CMS began incentivizing a fourth MIPS category (cost) in 2018. To achieve the goal of 'simultaneous focus' on these four categories, policy makers may consider modifying payment adjustments for clinicians who do not participate in all categories, or could instead take selective participation and clinicians' emphasis on improvement activities into account when updating requirements and category contributions to the composite MIPS performance score. In January 2020, CMS released 2018 MIPS performance results that again show high composite scores, with 98 percent of participating eligible clinicians receiving a positive payment adjustment.4 Still, these reports do not show precisely how these scores were achieved, masking the degree to which clinicians continued to participate selectively across MIPS categories and may have received positive payment adjustments despite incomplete participation in all program domains.

Second, by highlighting very high average performance within categories among participants, and the extent to which participation, rather than performance, drove variation in composite scores, our analysis supports concerns that high performance in each category may have been too easy to achieve to effectively incentivize improvement. CMS should consider strategies to make high performance more challenging and ensure a meaningful 'spread' of performance scores as the program leaves the transition period. One approach would be to take a more aggressive stance in removing measures that are almost always scored at the maximum, which currently occurs only after three years of consistent performance above 95 percent.15 This timeline could be shortened or the cutpoint lowered. Similarly, CMS could reduce the credit given to relatively easy all-or-nothing measures under ACI (now "Promoting Interoperability") and IA and place more emphasis on more difficult metrics. Finally, CMS' approach of gradually raising composite score cutoffs for positive payment adjustments may help shift the emphasis from category participation to performance. For example, the threshold to receive a positive payment rose from 3 in 2017 to 15 in 2018. However, this modest increase is unlikely to reverse the pattern we observe, wherein most clinicians performed well above the threshold despite partial participation.

Third, we were not able to use data on individual quality metrics because the data available on Physician Compare were incomplete for reasons related to data reliability and validity (for more detail, see Appendix section Individual Performance Measures).12 It seems reasonable that any quality measures deemed sufficiently reliable and valid to influence incentive payments would also be of high enough quality to make publicly available, except where low counts raise patient privacy concerns. It is also notable that the 2017 measures did not become publicly available until late 2019, while CMS was finalizing rules for the 2021 performance period. More complete and rapid reporting of these measures would improve the transparency of the program and allow the community to participate more actively in improvement.

Fourth, the appropriateness of exemptions from MIPS categories should be carefully considered. We have shown that a relatively large number of clinicians were exempted from the Advancing Care Information (ACI) and quality components in 2017. Although exemptions nominally shifted weight onto the non-exempt categories, their practical effect in early years was to make high overall achievement easier, because, as shown above, high performance was very common among participants. The prevalence of exemptions is likely to increase in later years when, for instance, all clinicians in practices of 15 or fewer clinicians can receive exemptions from the Promoting Interoperability (PI) category (the renamed ACI category).15 Judging by Physician Compare data, this policy would have applied to 272,146 clinicians in 2017. CMS should carefully balance exempting clinicians facing undue hardship against incentivizing performance, and could disallow exemptions from some categories, as it did with improvement activities. Our analysis raises the question of whether that balance has been achieved.
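Counting how many clinicians a small-practice exemption could reach requires an estimate of practice size. The Python sketch below shows one possible approach; it is not the authors' method. The function name is hypothetical, and practice size is approximated by the number of distinct NPIs sharing a PAC ID, an assumption made here only because the Methods define clinicians by NPI and PAC ID; CMS's own small-practice determination may rely on different identifiers and rules.

```python
from collections import defaultdict


def clinicians_in_small_practices(records, max_size=15):
    """Count clinician records in practices with at most `max_size` clinicians.

    records: iterable of (npi, pac_id) pairs, one per clinician as defined in
    the Methods. ASSUMPTION: practice size is approximated by the number of
    distinct NPIs sharing a PAC ID; CMS's own small-practice determination
    may use different identifiers and rules.
    """
    unique = set(records)  # drop duplicate clinician records
    members = defaultdict(set)
    for npi, pac_id in unique:
        members[pac_id].add(npi)
    return sum(1 for _, pac_id in unique if len(members[pac_id]) <= max_size)


small = [("1001", "A"), ("1002", "A"), ("1001", "A")]      # 2 clinicians
large = [(str(2000 + i), "B") for i in range(16)]          # 16 clinicians
print(clinicians_in_small_practices(small + large))        # 2
```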

Finally, our analysis raises concerns about whether MIPS incentivized clinicians to prepare for later years of the program, when increasingly difficult requirements and larger payment consequences take effect. Across the performance spectrum, MIPS clinicians appear to have faced limited incentive to improve incrementally. The many clinicians who did not participate in individual categories may not improve incrementally, because initial participation requires a large up-front investment that likely outweighs the marginal benefit of a slightly higher payment adjustment, as noted previously. At the other end of the performance spectrum, clinicians easily achieving high scores may not have felt any pressure to improve further. Even clinicians who participated and achieved low levels of performance faced very limited financial incentives to improve. Taken together, incentives in the first year of MIPS may not have pushed clinicians to begin implementing changes to improve performance. These trends appear to have persisted into the second year of MIPS, when even more clinicians participated (at least partially), scored above the performance threshold, and earned "exceptional performance" bonuses.4

The ability to achieve high performance while not participating in all categories of MIPS, combined with the small size of the incentive payments in both 2017 and 2018, amounted to a near 'soft repeal' of the penalties associated with the Meaningful Use (MU) and Physician Quality Reporting System (PQRS) programs. Thus, while these early years of the program are likely intended as a transition to more stringent performance requirements, they may in reality represent a step backward from prior policies. While detailed public data are not yet available, it is likely that this slow 'transition' continued into 2018 and 2019, such that clinicians may continue to face little immediate incentive to prepare for the more stringent penalties that MACRA mandates beginning in 2022, when by statute one half of MIPS-participating clinicians will be penalized.16 This should raise concerns that political opposition will lead to delay or repeal of those planned penalties.


References

- 1. Andy Slavitt puts meaningful use on ice; read his J.P. Morgan speech transcript. Healthcare IT News. https://www.healthcareitnews.com/news/andy-slavitt-puts-meaningful-use-ice-read-his-jp-morgan-speech-transcript. Published January 13, 2016. Accessed November 7, 2019.

- 2. Quality Payment Program. MIPS Scoring 101 Guide for the 2017 Performance Period. Centers for Medicare and Medicaid Services; 2017. https://qpp-cm-prod-content.s3.amazonaws.com/uploads/74/MIPS%20Scoring%20101%20Guide_Remediated%202018%2001%2017.pdf.

- 3. Verma S. Quality Payment Program (QPP) Year 1 Performance Results. CMS Blog. November 2018. https://www.cms.gov/blog/quality-payment-program-qpp-year-1-performance-results. Accessed November 8, 2019.

- 4. Verma S. 2018 Quality Payment Program (QPP) Performance Results. The Centers for Medicare and Medicaid Services Blog. January 2020. https://www.cms.gov/blog/2018-quality-payment-program-qpp-performance-results. Accessed January 11, 2020.

- 5. Crosson FJ, Bloniarz K, Glass D, Mathews J. MedPAC's Urgent Recommendation: Eliminate MIPS, Take A Different Direction. Health Affairs Blog. March 2018. https://www.healthaffairs.org/do/10.1377/hblog20180309.302220/full/. Accessed January 10, 2020.

- 6. Eggleton K, Liaw W, Bazemore A. Impact of Gaps in Merit-Based Incentive Payment System Measures on Marginalized Populations. Ann Fam Med. 2017;15(3):255–257. doi: 10.1370/afm.2075

- 7. Berdahl CT, Easterlin MC, Ryan G, Needleman J, Nuckols TK. Primary Care Physicians in the Merit-Based Incentive Payment System (MIPS): a Qualitative Investigation of Participants' Experiences, Self-Reported Practice Changes, and Suggestions for Program Administrators. J Gen Intern Med. 2019;34(10):2275–2281. https://link.springer.com/article/10.1007/s11606-019-05207-z.

- 8. Navathe AS, Dinh CT, Chen A, Liao JM. Findings And Implications From MIPS Year 1 Performance Data. Health Affairs Blog. https://www.healthaffairs.org/do/10.1377/hblog20190117.305369/full/. Published January 18, 2019. Accessed November 10, 2019.

- 9. Liao JM, Chen A, Navathe AS. The Real Opportunities of MIPS Payment Adjustments. Health Affairs Blog. October 2018. https://www.healthaffairs.org/do/10.1377/hblog20181026.940799/full/. Accessed November 8, 2019.

- 10. Verma S. Quality Payment Program Releases 2017 Physician Compare Data and Sees Increases in Clinician Participation Rates and Success for 2018. CMS Blog. https://www.cms.gov/blog/quality-payment-program-releases-2017-physician-compare-data-and-sees-increases-clinician. Published July 11, 2019. Accessed November 8, 2019.

- 11. The Centers for Medicare and Medicaid Services. Physician Compare Datasets. Data.Medicare.gov. August 2019. https://data.medicare.gov/data/physician-compare. Accessed November 2019.

- 12. To access the appendix, click on the Details tab of the article online.

- 13. Liao JM, Shea JA, Weissman A, Navathe AS. Physician Perspectives In Year 1 Of MACRA And Its Merit-Based Payment System: A National Survey. Health Aff. 2018;37(7):1079–1086. doi: 10.1377/hlthaff.2017.1485

- 14. Nuckols TK. With the Merit-Based Incentive Payment System, Pay for Performance Is Now National Policy. Ann Intern Med. 2017;166(5):368–369. doi: 10.7326/M16-2947

- 15. Centers for Medicare and Medicaid Services. Support for Small, Underserved, and Rural Practices. Quality Payment Program. https://qpp.cms.gov/about/small-underserved-rural-practices?py=2018. Accessed March 17, 2020.

- 16. Centers for Medicare & Medicaid Services (CMS), HHS. Medicare Program; Merit-Based Incentive Payment System (MIPS) and Alternative Payment Model (APM) Incentive Under the Physician Fee Schedule, and Criteria for Physician-Focused Payment Models. Final rule with comment period. Fed Regist. 2016;81(214):77008–77831. https://www.ncbi.nlm.nih.gov/pubmed/27905815.
