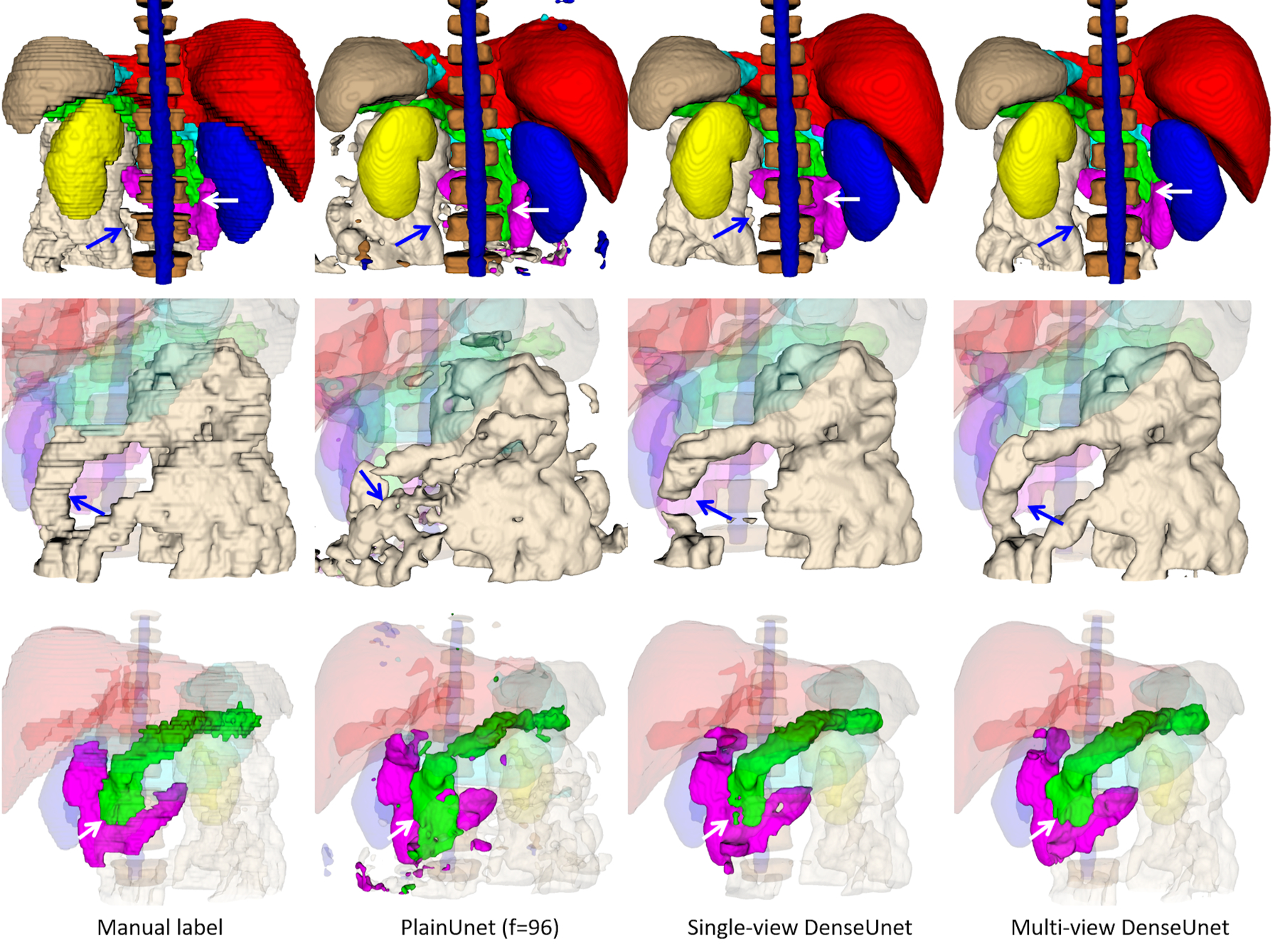

Figure 3. Segmentation results on a random test case: manual label, PlainUnet (f=96), the single-view (transversal) version of DenseUnet, and multi-view inference with majority voting. Colors: liver (red), spleen (gray), pancreas (green), right kidney (blue), left kidney (yellow), stomach (cyan), duodenum (purple), small intestine (white), spinal cord (blue) and vertebral bodies (dark brown). The first row shows the overall segmentation results. The second and third rows show zoomed-in views of the small intestine and of the pancreas and duodenum, respectively, from better viewing angles. The multi-view inference correctly segments the small intestine that is missed by the single-view inference, as indicated by the blue arrow. It also produces a more accurate boundary for the pancreas and duodenum, as indicated by the white arrow. Both proposed DenseUnets provide better segmentation quality than the popular PlainUnet.
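The caption names multi-view inference with majority voting but does not spell out the fusion step. Below is a minimal sketch of per-voxel majority voting. It assumes that the transversal, sagittal and coronal label maps have already been resampled to a common grid, and that labels are integers (for example, 0 for background and 1 onward for the structures listed above). When all views disagree, it falls back to the first map, which is taken here to be the transversal prediction. The function name `majority_vote`, the label encoding and the tie rule are illustrative assumptions, not the paper's implementation.

```python
import numpy as np


def majority_vote(label_maps, num_classes):
    """Fuse per-view label maps by per-voxel majority voting (illustrative sketch).

    label_maps: list of integer arrays of identical shape, one per view,
                assumed already resampled to the same voxel grid.
    num_classes: number of labels, including background (assumed encoding).
    Ties (no single class has the most votes) fall back to label_maps[0].
    """
    stacked = np.stack(label_maps, axis=0)  # shape (V, ...)
    # Count how many views voted for each class at every voxel -> shape (C, ...)
    votes = np.stack([(stacked == c).sum(axis=0) for c in range(num_classes)], axis=0)
    winner = votes.argmax(axis=0)
    max_votes = votes.max(axis=0)
    # Accept the winner only where exactly one class reaches the top count
    n_top = (votes == max_votes).sum(axis=0)
    return np.where(n_top == 1, winner, stacked[0])


# Toy 2x2 "slices" from three views (hypothetical labels)
transversal = np.array([[1, 2], [3, 0]])
sagittal = np.array([[1, 2], [4, 0]])
coronal = np.array([[5, 3], [5, 0]])
fused = majority_vote([transversal, sagittal, coronal], num_classes=6)
print(fused.tolist())  # [[1, 2], [3, 0]]
```

With three views, any label predicted by at least two views wins. The lower-left voxel receives three different labels, so it keeps the transversal prediction under the assumed tie rule.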