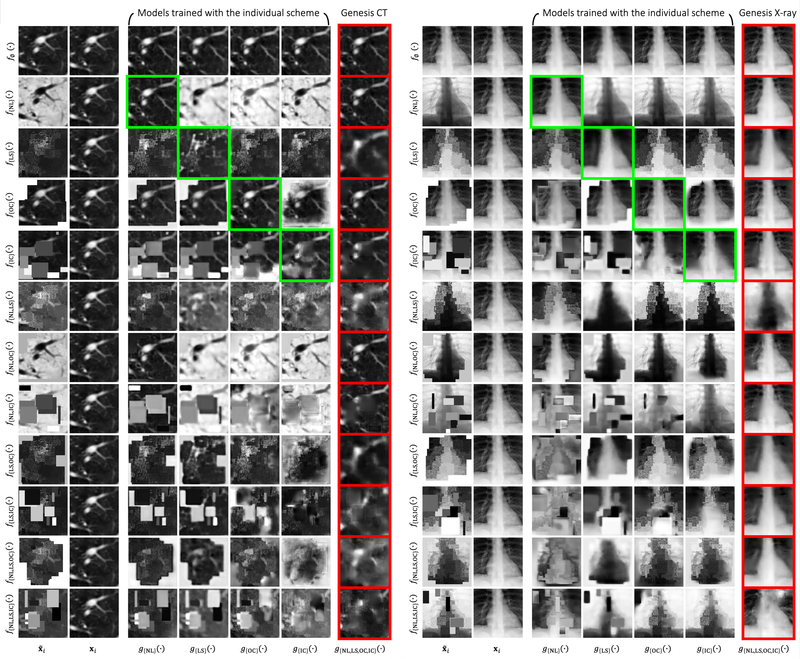

Fig. D.12:

The left and right panels show a qualitative assessment of image restoration quality for Genesis CT and Genesis X-ray, respectively. The models are trained with different training schemes: four individual schemes (Columns 3–6) and one combined scheme (Column 7). As illustrated in Fig. 1, each original image x_i can undergo twelve different transformations, and we test the models on all twelve transformed images. Each row specifies the image transformation f(·), and each column specifies the training scheme g(·). First, the models trained with individual schemes restore previously unseen images that have undergone the same transformation very well (framed in green), but fail to handle other transformations. Taking the non-linear transformation f(NL)(·) as an example, no individual training scheme other than non-linear transformation itself can invert the pixel intensity from the transformed whitish appearance back to the original blackish one. As expected, the model trained with the combined scheme successfully restores original images from various transformations (framed in red). Second, the model trained with the combined scheme is superior to the other models even when they are trained with and tested on the same transformation. For example, in the local-shuffling case f(LS)(·), the image recovered by the local-shuffling pre-trained model g(LS)(·) is noisy and lacks texture, whereas the model trained with the combined scheme g(NL,LS,OC,IC)(·) generates an image with more underlying structures. This demonstrates that learning with augmented tasks can even improve performance on each of the individual tasks. Third, the model trained with the combined scheme clearly outperforms the models trained with individual schemes when restoring images that have undergone the seven combined transformations (Rows 6–12). For example, the model trained with non-linear transformation g(NL)(·) can only recover the intensity distribution of an image transformed by f(NL,IC)(·), leaving the inner cutouts unchanged. These observations suggest that the model trained with the proposed unified self-supervised learning framework can successfully learn general anatomical structures and yield promising transferability to different target tasks. This qualitative assessment of image restoration further confirms our experimental observation in Sec. 4.1 that the combined learning scheme outperforms each individual scheme in transfer learning.
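The row counts quoted above (four individual transformations and seven combined ones among twelve in total) can be reproduced with a short enumeration. This is only an illustrative sketch, not the authors' code: it assumes that out-painting (OC) and in-painting (IC) are mutually exclusive and that the twelfth case is the untransformed image, neither of which is stated in this caption. The function name `enumerate_transformations` is hypothetical.

```python
from itertools import product


def enumerate_transformations():
    """List transformation combinations of NL, LS, OC and IC.

    Assumption (not stated in the caption): OC and IC are mutually
    exclusive, so painting is one of none / OC / IC.
    """
    combos = []
    for use_nl, use_ls, paint in product((False, True), (False, True), (None, "OC", "IC")):
        names = []
        if use_nl:
            names.append("NL")
        if use_ls:
            names.append("LS")
        if paint is not None:
            names.append(paint)
        combos.append(tuple(names))
    return combos


combos = enumerate_transformations()
identity = [c for c in combos if len(c) == 0]    # no transformation applied
individual = [c for c in combos if len(c) == 1]  # a single transformation
combined = [c for c in combos if len(c) >= 2]    # two or three transformations

print(len(combos), len(identity), len(individual), len(combined))
for c in combined:
    print("f(" + ",".join(c) + ")")
```

Under these assumptions the enumeration yields 2 × 2 × 3 = 12 cases, which split into one identity case, four individual transformations, and seven combined ones, consistent with the row layout described in the caption.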