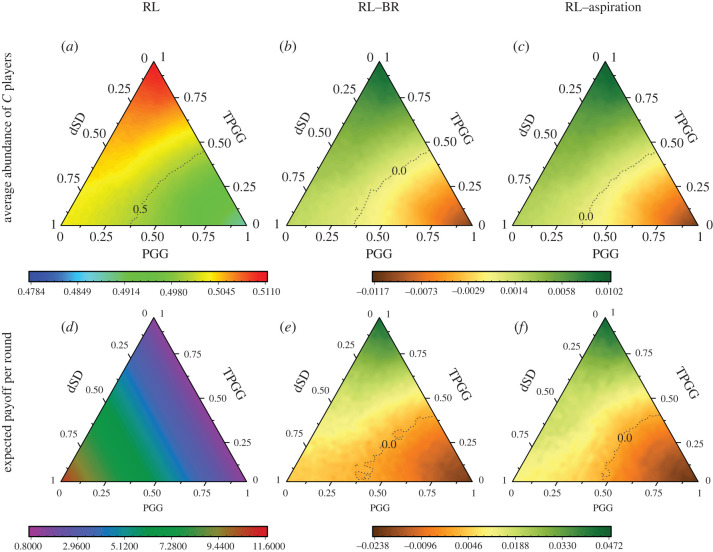

Figure 3.

Differences in the average abundance of C players and the expected payoff of players per round between reinforcement learning (RL) and two non-learning update rules. Panels (a) and (d) show, respectively, the average abundance of C players and the expected payoff per round when players update their actions via RL. Taking these as the benchmark, (b,e) show the differences between RL and the smoothed best response (BR), while (c,f) show the differences between RL and the aspiration-based rule (aspiration). The population structure is a lattice network (see electronic supplementary material, figures S1–S4, for other population structures with different network degrees). Parameter values: N = 400, d = 5, β = 0.01, , T = [d/2] + 1, , rs = 3 in the PGG while rs = 4 in the TPGG.