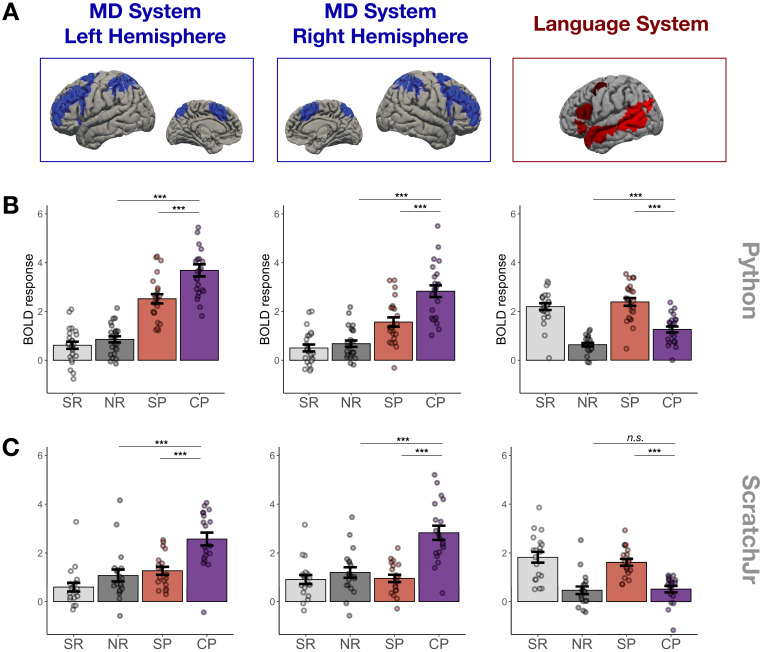

Figure 2. Main experimental results.

(A) Candidate brain systems of interest. The areas shown represent the ‘parcels’ used to define the MD and language systems in individual participants (see Materials and methods and Figure 3—figure supplement 1). (B, C) Mean responses to the language localizer conditions (SR – sentence reading and NR – nonword reading) and to the critical task (SP – sentence problems and CP – code problems) in systems of interest across programming languages (B – Python, C – ScratchJr). In the MD system, we see strong responses to code problems in both hemispheres and to both programming languages; the fact that this response is stronger than the response to content-matched sentence problems suggests that it reflects activity evoked by code comprehension per se rather than just activity evoked by problem content. In the language system, code problems elicit a response that is substantially weaker than that elicited by sentence problems; further, only in Experiment 1 do we observe responses to code problems that are reliably stronger than responses to the language localizer control condition (nonword reading). Here and elsewhere, error bars show standard error of the mean across participants, and the dots show responses of individual participants.