Abstract

This personal hybrid review, written on the occasion of my receiving the IUPAB 2020 Young Investigator Award, mixes my scientific biography with an account of my work so far. It is not intended to be a comprehensive review; rather, it highlights my contributions to computation-related aspects of super-resolution microscopy, as well as their origins and future directions.

Keywords: Super resolution microscopy, Point-spread function engineering, Single particle tracking, Deep learning

The past

In 2008–2013, I was a graduate student at the Technion – Israel Institute of Technology. My research, under the supervision of Prof. Mordechai (Moti) Segev and in collaboration with Prof. Yonina Eldar, dealt with recovering seemingly lost information in optical signals, such as phase and high spatial frequencies, by exploiting prior knowledge of signal sparsity (Shechtman et al. 2010; 2014a, b; Szameit et al. 2012). Towards the end of my studies, I took a class on 3D imaging, where I learned something fascinating that would change the trajectory of my career: the point spread function (PSF) of an imaging system can be manipulated so drastically that a point source no longer appears as a point at all, but rather as two points whose orientation depends on the distance of the source from the imaging system (Pavani et al. 2009) (Fig. 1). I was immediately hooked by this elegant way of encoding depth information in a 2D image, and by the simplicity of its implementation: placing a phase mask in the back focal plane of the imaging system. I asked the professor, Yoav Schechner, whether this PSF was the optimal shape for encoding 3D information in a 2D image, not knowing at the time that this question would occupy my mind for the next few years.

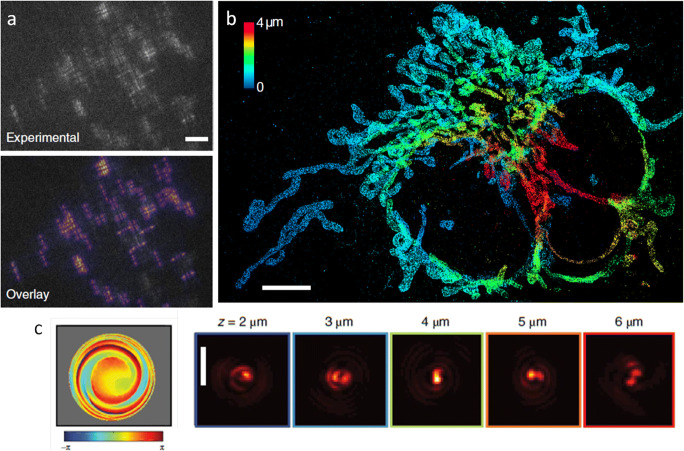

Fig. 1.

The double-helix PSF encodes depth in the shape of the 2D PSF. Top: imaging setup consisting of an inverted microscope augmented by a 4F system with a phase mask (SLM) in the Fourier plane. Bottom: measured images of a fluorescent bead as it appears for different defocus values. Adapted from (Pavani et al. 2009)

After my PhD, I decided to take a step towards applying my optics and engineering background to biological problems, very broadly defined. I was fortunate to find a perfect place for this ambition: W.E. Moerner’s group at Stanford University. At Stanford, I started working on a beautiful system for single-molecule measurements based on electrokinetic trapping, the Anti-Brownian ELectrokinetic (ABEL) trap (Cohen and Moerner 2005; Squires et al. 2018; Wang and Moerner 2014). This system enables observation of single-molecule kinetics in solution over extended durations by exerting forces that counteract the molecules’ Brownian motion, keeping them trapped inside a small observation region. My plan was to apply compressed sensing (Candès 2006; Donoho 2006) to improve the photon efficiency of the trap. Quickly, however, it became clear to me that previous group members had already engineered the system so well that my contribution would be marginal at best, and not worth precious postdoc time.

At the same time, my interest in 3D imaging was rekindled. The Moerner lab pioneered the use of sophisticated PSFs for 3D single-molecule microscopy (Backer and Moerner 2014; Backlund et al. 2012; Badieirostami et al. 2010; Pavani et al. 2009), namely, PSF engineering, and I became increasingly motivated to find the optimal PSF for 3D imaging. Briefly, PSF engineering works as follows. A phase mask, e.g., an etched dielectric material or a programmable liquid-crystal spatial light modulator (SLM), is placed in the back focal plane (the Fourier plane) of a microscope (Fig. 1, top). The mask acts as a phase filter for spatial frequencies and thereby modifies the PSF of the microscope, so that the 2D PSF shape can encode 3D information. Figure 2 shows several example PSFs modified to encode depth information. Importantly, there is a direct mapping between the pattern on the phase mask and the resulting PSF: to a good approximation, the PSF is proportional to the squared magnitude of the Fourier transform of the pupil function, namely the phase pattern on the mask multiplied by a depth-dependent defocus phase term (Petrov et al. 2017).
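A minimal sketch of this mask-to-PSF mapping follows. It rests on several assumptions: a scalar model that ignores polarization, apodization, and refractive-index mismatch (the measurement-based treatment in Petrov et al. 2017 is more complete); a circular pupil set by the numerical aperture; and illustrative parameter values (wavelength 0.6 μm, NA 1.4, immersion index 1.518). The function name `simulate_psf` and the astigmatic test mask are hypothetical.

```python
import numpy as np

def simulate_psf(mask_phase, z, wavelength=0.6, na=1.4, n_imm=1.518,
                 pupil_samples=64, pad=4):
    """Scalar PSF: |FFT{aperture * exp(i*(mask + defocus))}|^2, normalised to sum 1.

    mask_phase: callable taking normalised pupil coordinates (u, v) in [-1, 1]
    and returning the mask phase in radians. z: depth relative to focus (um).
    """
    k = 2 * np.pi * n_imm / wavelength                  # wavenumber in the immersion medium
    k_max = 2 * np.pi * na / wavelength                 # largest transverse wavenumber passed by the NA
    coords = np.linspace(-k_max, k_max, pupil_samples)
    kx, ky = np.meshgrid(coords, coords)
    aperture = kx**2 + ky**2 <= k_max**2                # circular pupil support
    kz = np.sqrt(np.clip(k**2 - kx**2 - ky**2, 0.0, None))  # axial wavevector; kz * z is the defocus phase
    pupil = aperture * np.exp(1j * (mask_phase(kx / k_max, ky / k_max) + kz * z))
    n = pupil_samples * pad                             # zero-padding refines the image-plane sampling
    field = np.fft.fftshift(np.fft.fft2(pupil, s=(n, n)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()

# Hypothetical masks: a clear pupil, and astigmatism with 2 rad at the pupil edge
clear = lambda u, v: np.zeros_like(u)
astigmatism = lambda u, v: 2.0 * (u**2 - v**2)

for z in (-0.5, 0.0, 0.5):
    p = simulate_psf(astigmatism, z)
    print(f"z={z:+.1f} um, peak fraction={p.max():.4f}")
```

Two symmetries make useful sanity checks. With a clear pupil, the PSF at +z equals the PSF at −z, so an unmodified microscope cannot distinguish emitters above focus from those below it. With the astigmatic mask, the PSF at −z is the PSF at +z with x and y swapped.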

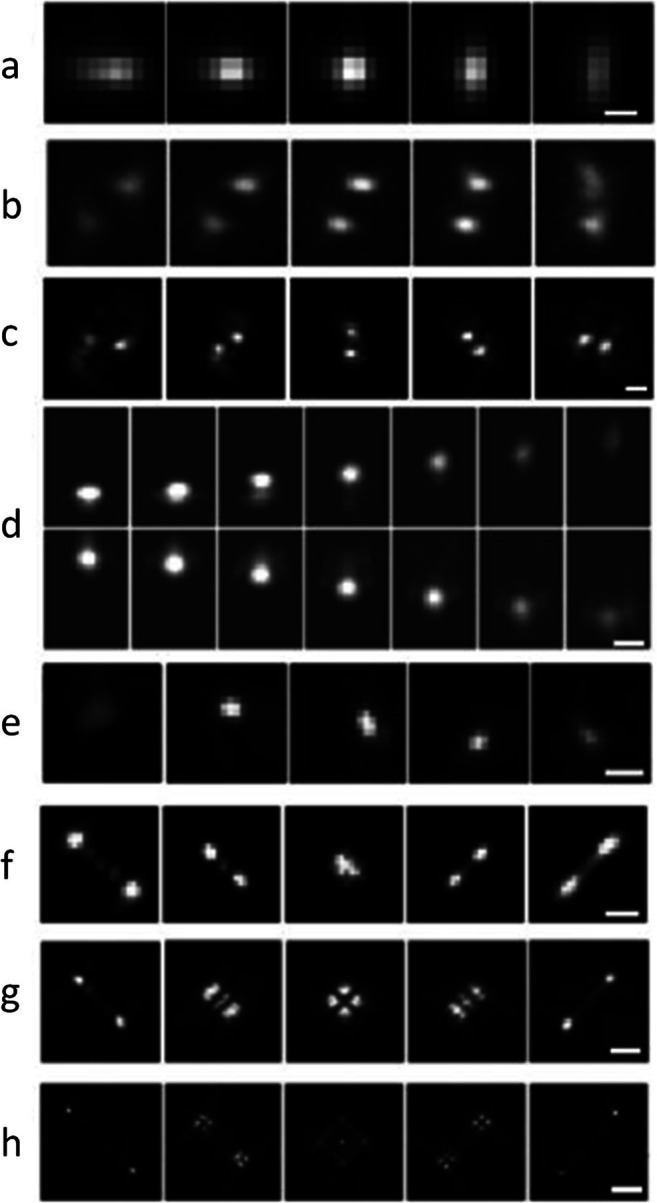

Fig. 2.

Examples of measured PSFs encoding depth in their shapes, shown as a function of defocus; each PSF spans a different axial range. a Astigmatism (0.8 μm) (Huang et al. 2008). b Phase ramp (1 μm) (Baddeley et al. 2011). c Double-helix (3 μm) (Pavani et al. 2009). d Accelerating beam (3 μm) (Jia et al. 2014). e Corkscrew (3 μm) (Lew et al. 2011). f Saddle point (3 μm) (Shechtman et al. 2014a, b). g/h Tetrapod (6/20 μm) (Shechtman et al. 2015). Scale bars (a, c, d, e, f, g, h): 0.5, 2, 1, 1, 1, 2, and 5 μm. Figures adapted from Von Diezmann et al. (2017)

Different phase masks generate different PSFs. Some of these are useful for 3D imaging because they encode depth in their shape (Fig. 2). Others are designed for exactly the opposite purpose: extended depth of field PSFs (Ben-Eliezer et al. 2003; Dowski and Cathey 1995), where a point source looks nearly the same regardless of defocus, within an axial range designed to be larger than the depth of field of the system. The question on my mind, therefore, was the one from my graduate class: what is the optimal phase pattern for encoding the 3D position of a microscopic point source? The first step towards answering this question was to define it better.

Intuitively, a “good” 3D PSF is one that changes dramatically as a function of z. Importantly, it also does not spread the photons too much; otherwise it would be buried in the noise. It should therefore probably consist of some arrangement of spots. But how do you define this mathematically? I tested several definitions that led to no useful solutions (for example, minimizing the inner products between PSFs at different axial positions). I then chose to focus on maximizing a quantity known as Fisher information (Kay 1995), which had previously been shown to be very valuable in single-molecule localization microscopy (Badieirostami et al. 2010; Ober et al. 2004). The inverse of the Fisher information sets a lower bound, the Cramér–Rao lower bound, on the variance of any unbiased estimator of a parameter from a set of measurements. In our case, this corresponds to a lower bound on localization precision. Simply put, a PSF with high Fisher information is likely to exhibit better 3D localization precision.
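To make the Fisher-information criterion concrete, here is a minimal sketch for independent, Poisson-distributed camera pixels. In that case the Fisher matrix is I_ij = Σ_k (∂μ_k/∂θ_i)(∂μ_k/∂θ_j)/μ_k, where μ_k(θ) are the expected pixel counts. The PSF model is a 2D Gaussian stand-in, which is an assumption; an engineered PSF model would be passed as `model` instead. Derivatives are taken by finite differences, and all function names are hypothetical. For a Gaussian with fine pixels and no background, the lateral bound should approach the textbook value σ/√N.

```python
import numpy as np

def gaussian_psf_counts(theta, sigma=1.0, pixel=1.0, n_pix=21, background=0.0):
    """Expected photon counts per pixel for theta = (x0, y0, photons).

    Stand-in PSF: an isotropic 2D Gaussian sampled at pixel centres (an
    approximation to integrating over each pixel), plus uniform background.
    """
    x0, y0, photons = theta
    c = (np.arange(n_pix) - (n_pix - 1) / 2) * pixel        # pixel-centre coordinates
    xx, yy = np.meshgrid(c, c)
    g = np.exp(-((xx - x0) ** 2 + (yy - y0) ** 2) / (2 * sigma**2))
    g *= pixel**2 / (2 * np.pi * sigma**2)                  # approx. fraction of photons per pixel
    return photons * g + background

def fisher_information(model, theta, h=1e-5):
    """Poisson Fisher matrix: I_ij = sum_k dmu_k/dth_i * dmu_k/dth_j / mu_k."""
    theta = np.asarray(theta, dtype=float)
    mu = model(theta).ravel()
    grads = []
    for i in range(theta.size):
        step = np.zeros_like(theta)
        step[i] = h * max(1.0, abs(theta[i]))               # relative step for large parameters
        grads.append(((model(theta + step) - model(theta - step)) / (2 * step[i])).ravel())
    grads = np.array(grads)
    return (grads / mu) @ grads.T

def crlb(model, theta):
    """Cramer-Rao lower bound: smallest achievable std of any unbiased estimator, per parameter."""
    return np.sqrt(np.diag(np.linalg.inv(fisher_information(model, theta))))

model = lambda th: gaussian_psf_counts(th, sigma=1.0, pixel=0.1, n_pix=101)
print(crlb(model, (0.0, 0.0, 1000.0)))   # lateral bounds close to sigma / sqrt(photons)
```

Adding background to the model raises the bound, which is the quantitative form of the intuition that a PSF spreading its photons too thinly gets buried in the noise.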

We solved the optimization problem numerically, directing the computer to seek a phase pattern that would yield a PSF with maximal 3D Fisher information over a predetermined axial range. The result was the saddle-point PSF (Shechtman et al. 2014a, b), later extended to the Tetrapod PSFs, which exhibit larger axial ranges (Shechtman et al. 2015) (Fig. 2f–h). Our 3D PSF optimization work was published in late September 2014. Twelve days later, it was announced that my advisor, W.E. Moerner, had been awarded the Nobel Prize in Chemistry for his pioneering work in single-molecule measurements. Overall, it was a good month.
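The design objective can be illustrated with a toy version: score a candidate phase mask by the mean 3D Cramér–Rao bound over an axial range, then pick the best mask from a family. This sketch is not the optimization used in the cited work. It assumes a scalar Fourier-optics PSF model, Poisson noise with a small uniform background, finite-difference derivatives, and illustrative optical parameters. It also restricts the search to a hypothetical one-parameter design space (astigmatism strength) on a coarse grid, whereas the text describes a search over general phase patterns. All names are hypothetical.

```python
import numpy as np

# Illustrative optical parameters (assumptions); lengths in micrometres
WAVELENGTH, NA, N_IMM = 0.6, 1.4, 1.518
SAMPLES, PAD = 48, 4
K = 2 * np.pi * N_IMM / WAVELENGTH
K_MAX = 2 * np.pi * NA / WAVELENGTH
_c = np.linspace(-K_MAX, K_MAX, SAMPLES)
KX, KY = np.meshgrid(_c, _c)
APERTURE = KX**2 + KY**2 <= K_MAX**2
KZ = np.sqrt(np.clip(K**2 - KX**2 - KY**2, 0.0, None))
U, V = KX / K_MAX, KY / K_MAX                       # normalised pupil coordinates

def expected_image(mask, x, y, z, photons=2000.0, background=1e-3):
    """Expected counts on the finely sampled image grid for an emitter at (x, y, z)."""
    phase = mask + KX * x + KY * y + KZ * z         # lateral shift = linear phase ramp in the pupil
    field = np.fft.fft2(APERTURE * np.exp(1j * phase), s=(SAMPLES * PAD,) * 2)
    psf = np.abs(field) ** 2
    return photons * psf / psf.sum() + background

def fisher_3d(mask, z, h=1e-3):
    """3x3 Poisson Fisher matrix for (x, y, z), by central finite differences."""
    mu = expected_image(mask, 0.0, 0.0, z).ravel()
    grads = []
    for dx, dy, dz in np.eye(3) * h:
        diff = expected_image(mask, dx, dy, z + dz) - expected_image(mask, -dx, -dy, z - dz)
        grads.append(diff.ravel() / (2 * h))
    g = np.array(grads)
    return (g / mu) @ g.T

def mean_crlb(mask, z_range=np.linspace(-1.0, 1.0, 9)):
    """Objective to minimise: mean over depth of sqrt(trace(inverse Fisher)), in micrometres."""
    values = []
    for z in z_range:
        eig = np.linalg.eigvalsh(fisher_3d(mask, z))
        if eig.min() <= 1e-9 * eig.max():
            return np.inf                           # some coordinate is locally unidentifiable at this depth
        values.append(np.sqrt(np.sum(1.0 / eig)))   # trace of the inverse = sum of inverse eigenvalues
    return float(np.mean(values))

# Toy design space (assumption): astigmatism of strength s radians at the pupil edge
scores = {s: mean_crlb(s * (U**2 - V**2)) for s in (0.0, 1.0, 2.0, 3.0)}
best = min(scores, key=scores.get)
print(scores, "best strength:", best)
```

The clear pupil scores infinity because, at focus, its PSF is symmetric in z and the axial Fisher information vanishes; any nonzero astigmatism breaks that symmetry. Fisher information is a local measure, so this score does not flag the global above/below-focus ambiguity that a symmetric PSF also has away from focus.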

Later, we realized that it was also possible to encode color in the shape of the PSF of single molecules (Shechtman et al. 2016), a concept also used by other groups (Broeken et al. 2014; Jesacher et al. 2014). This made it possible to simultaneously image or track, in 3D, fluorescent emitters of multiple colors on a single optical channel with no spectral filtering, and to distinguish them by the shapes of their PSFs.

The present

In September 2016, I returned to the Technion in Israel as a PI to start my lab in the Department of Biomedical Engineering, as part of the Technion’s interdisciplinary program. The main mission of the lab is to develop optical and computational tools to observe life at the nanoscale. Research at this unique interface between engineering and the life sciences requires a highly interdisciplinary mixture of expertise. I was fortunate to be able to assemble a team of excellent researchers with backgrounds in physics, chemistry, biology, and engineering.

With the resurgence of machine learning and its amazing utility in a variety of image processing challenges (Dong et al. 2016; Kim et al. 2016; Rivenson et al. 2017; Wang et al. 2015), it was obvious that deep learning would be useful for solving difficult problems in super-resolution microscopy. Some of these directions I immediately started pursuing via a fruitful and ongoing collaboration with Tomer Michaeli from the Technion’s electrical engineering department.

First, we focused on single-molecule localization microscopy (SMLM). Its most notable variants are (F)PALM and STORM (Betzig et al. 2006; Hess et al. 2006; Rust et al. 2006). In SMLM, the goal is to generate a super-resolved image, namely one with resolution beyond the diffraction limit of the microscope, by sequentially localizing blinking fluorescent emitters. The position of a single fluorescent molecule can be determined from its PSF to a precision much better than the size of the PSF, up to about an order of magnitude smaller. Therefore, if the molecules label a structure of interest, that structure can be recovered computationally at a practical resolution of tens of nanometers (Hell et al. 2015).
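A quick numerical illustration shows why a single molecule can be localized far more precisely than the PSF width. The sketch simulates photon-limited images of one emitter and estimates its position with a simple centroid. The Gaussian PSF, 1,000 photons, zero background, and centroid estimator are all simplifying assumptions; practical SMLM software typically uses fitting methods that cope better with background. Function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def simulate_spot(x0, y0, photons=1000, sigma=1.3, n_pix=15):
    """Camera frame (pixel units) of one emitter: each photon lands at a Gaussian-distributed position."""
    xs = rng.normal(x0, sigma, photons)
    ys = rng.normal(y0, sigma, photons)
    edges = np.arange(n_pix + 1) - 0.5                   # pixel k covers [k - 0.5, k + 0.5)
    frame, _, _ = np.histogram2d(ys, xs, bins=[edges, edges])  # rows = y, columns = x
    return frame

def centroid(frame):
    """Photon-weighted centre of mass, returned as (x, y) in pixel coordinates."""
    idx = np.arange(frame.shape[0])
    total = frame.sum()
    return frame.sum(axis=0) @ idx / total, frame.sum(axis=1) @ idx / total

truth = rng.uniform(6.5, 7.5, size=(500, 2))             # true (x, y) near the frame centre
est = np.array([centroid(simulate_spot(x, y)) for x, y in truth])
rms = np.sqrt(np.mean(np.sum((est - truth) ** 2, axis=1)))
print(f"PSF sigma = 1.3 px, 2D rms localization error = {rms:.3f} px")
```

With these settings, the RMS error is a small fraction of a pixel, well below the 1.3-pixel PSF width. This is consistent with the σ/√N scaling of localization precision.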

One of the limitations of SMLM is that obtaining a single image requires acquiring a movie of typically thousands of frames, which limits the temporal resolution to the order of minutes. This is because, traditionally, each frame must contain only a sparse subset of the emitters to make image analysis feasible. Improving temporal resolution therefore requires localizing densely spaced emitters whose PSFs overlap. Consequently, many methods have been developed to address the problem of overlapping emitters in SMLM (Barsic et al. 2014; Cox et al. 2011; Dertinger et al. 2009; Gazagnes et al. 2017; Holden et al. 2011; Huang et al. 2011; Hugelier et al. 2016; Qu et al. 2004; Sergé et al. 2008; Solomon et al. 2018; Zhu et al. 2012). Some of these methods handle dense emitters very well, but at the cost of long computation times and considerable manual parameter tuning.

We developed a fast, precise, and parameter-free deep-learning tool for dense emitter localization in SMLM, termed Deep-STORM (Nehme et al. 2018). The idea was to train a neural net to output super-resolved images, using a large number of simulated blinking movies as training data. Conveniently, it proved possible to train on simulated data, with some adjustments using a small quantity of experimental data to refine the imaging model (Ferdman et al. 2020). Moreover, because the training data consist of randomly positioned emitters, the net does not rely on a priori structural information and is therefore inherently robust to different sample types.
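Simulated training data of this kind can be sketched as follows: scatter emitters at random positions, render a noisy low-resolution camera frame, and record the true positions on a finer grid as the target the net learns to produce. This is not the authors' simulator. The Gaussian PSF, photon and background levels, frame size, and 8x upsampling factor are illustrative assumptions, and `make_training_pair` is a hypothetical name.

```python
import numpy as np

def make_training_pair(rng, n_emitters=20, size=32, upsample=8, sigma=1.0,
                       photons=(500.0, 5000.0), background=10.0):
    """Return (noisy camera frame, super-resolved target) for randomly placed emitters."""
    pos = rng.uniform(0.0, size, size=(n_emitters, 2))         # (x, y) in camera pixels
    flux = rng.uniform(*photons, size=n_emitters)               # photons per emitter
    centres = np.arange(size) + 0.5                             # pixel k spans [k, k + 1)
    xx, yy = np.meshgrid(centres, centres)
    expected = np.full((size, size), background)
    for (x, y), f in zip(pos, flux):
        # Gaussian stand-in PSF, sampled at pixel centres
        expected += f * np.exp(-((xx - x) ** 2 + (yy - y) ** 2) / (2 * sigma**2)) / (2 * np.pi * sigma**2)
    frame = rng.poisson(expected).astype(np.float32)            # shot noise
    target = np.zeros((size * upsample, size * upsample), dtype=np.float32)
    cols = np.minimum((pos[:, 0] * upsample).astype(int), size * upsample - 1)
    rows = np.minimum((pos[:, 1] * upsample).astype(int), size * upsample - 1)
    np.add.at(target, (rows, cols), 1.0)                        # one unit spike per emitter on the fine grid
    return frame, target

rng = np.random.default_rng(1)
frames, targets = zip(*(make_training_pair(rng) for _ in range(4)))
print(frames[0].shape, targets[0].shape)
```

Because positions are drawn uniformly at random, the training set encodes nothing about the structure of any particular sample. This is the property the paragraph above credits for robustness across sample types.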

The problem of emitter overlap is, naturally, even worse for 3D localization with engineered PSFs, because they are larger and therefore overlap even at lower densities. We therefore extended our deep learning approach to engineered PSFs with DeepSTORM3D (Nehme et al. 2020a, b). This task is conceptually more difficult than 2D localization. In 3D, different emitters exhibit very different PSFs depending on their depths, whereas in 2D all emitter PSFs appear very similar. In fact, no existing algorithm could handle such overlap of different PSFs. Deep learning proved uniquely powerful in solving this difficult inverse problem, producing super-resolved 3D images of cellular structures from dense blinking frames acquired with engineered PSFs (Fig. 3a–b).
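A back-of-the-envelope estimate shows why larger PSFs overlap at lower densities. If emitters are scattered uniformly at random with density ρ (per μm²), the fraction whose nearest neighbor lies closer than one PSF diameter D is 1 − exp(−ρπD²). The sketch below checks this formula against a small Monte Carlo simulation. The density and the two diameters are hypothetical values chosen for illustration, and "overlap" is crudely defined as centers closer than one diameter.

```python
import numpy as np

def overlap_fraction(density, diameter):
    """Analytic fraction of emitters with a neighbour closer than one PSF diameter,
    for uniformly random (2D Poisson) placement. Units: um^-2 and um."""
    return 1.0 - np.exp(-density * np.pi * diameter**2)

def overlap_fraction_mc(density, diameter, box=40.0, seed=0):
    """Monte Carlo estimate of the same quantity, with periodic boundaries to avoid edge effects."""
    rng = np.random.default_rng(seed)
    n = rng.poisson(density * box**2)
    pts = rng.uniform(0.0, box, size=(n, 2))
    d = pts[:, None, :] - pts[None, :, :]
    d -= box * np.round(d / box)                     # minimum-image convention
    dist = np.sqrt((d**2).sum(axis=-1))
    np.fill_diagonal(dist, np.inf)                   # ignore each emitter's distance to itself
    return float(np.mean(dist.min(axis=1) < diameter))

# Hypothetical numbers: 0.5 emitters/um^2, compact PSF (~0.5 um) vs large engineered PSF (~3 um)
for diameter in (0.5, 3.0):
    print(diameter, round(overlap_fraction(0.5, diameter), 3), round(overlap_fraction_mc(0.5, diameter), 3))
```

At the same density, roughly a third of the emitters overlap with a compact 0.5 μm PSF, whereas essentially all of them overlap with a 3 μm engineered PSF.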

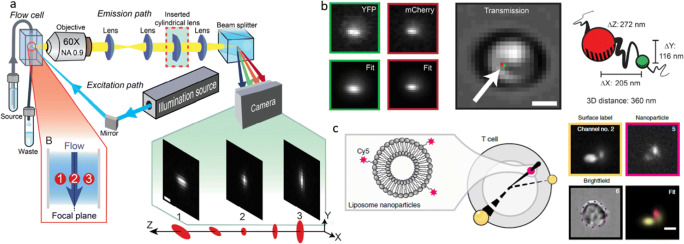

Fig. 3.

DeepSTORM3D solves 3D dense-emitter localization using a neural network. a Top: example experimental frame; each shape (PSF) is a single fluorescent molecule. Bottom: frame rendered from the emitters recovered by the neural net. Scale bar: 5 μm. b Super-resolved mitochondria image from thousands of frames. Color encodes depth. Scale bar: 5 μm. c An optimal phase mask (left) and PSF (right) for dense emitters in 3D, learned by the neural net. Scale bar: 3 μm. Figure adapted from Nehme et al. (2020b)

Importantly, in DeepSTORM3D, we addressed another challenge that standard approaches could not solve: how to design the optimal 3D PSF for a high density of emitters. The Tetrapod (Fig. 2f–h) is optimal for localizing a single point source, but it is laterally large and would therefore cause severe overlap between adjacent PSFs. It is reasonable to expect that a PSF with a smaller lateral footprint would perform better for dense localization. But how does one find the optimal PSF for dense localization? This is difficult with classical methods such as Fisher information maximization, because they would require considering numerous individual cases of spatial overlap and emitter number. Instead, we trained a neural net to jointly optimize the phase mask and the image recovery part, using many instances of randomly and densely positioned emitters. The resulting PSF is shown in Fig. 3c. Interestingly, it exhibits some rotation as well as asymmetric, axially dependent spreading, both known attributes of previously developed depth-encoding PSFs.

We recently extended the DeepSTORM3D PSF design approach to a two-channel system (Nehme et al. 2020a, b). This is advantageous at very high emitter densities, where the two channels are used synergistically to encode complementary information. Similarly, we have derived the optimal multicolor PSF, namely a wavelength-dependent PSF that optimally encodes the colors of point emitters in a grayscale image (Hershko et al. 2019). Nowadays, deep learning has become an extremely useful tool in super-resolution microscopy, with applications ranging from single-image super-resolution to depth imaging (Belthangady and Royer 2019; Ouyang et al. 2018; Weigert et al. 2018; Zhang et al. 2018), and it is increasingly accessible to non-expert end users (von Chamier et al. 2020).

A daunting challenge in 3D microscopy is limited throughput: obtaining a 3D image is typically more time-consuming than obtaining a 2D image, especially when scanning is necessary. Notably, there are methods to image objects at high rates. A particularly successful one is imaging flow cytometry (Basiji et al. 2007; George et al. 2004; Kay et al. 1979; Kay and Wheeless 1976), where cells flow through a microfluidic channel and are imaged as they pass an illuminated region. This typically produces a single 2D image per cell, although light-sheet microscopy and holography have been incorporated into imaging flow cytometry (Dardikman et al. 2018; Gualda et al. 2017; Sung et al. 2014; Wu et al. 2013). Recently, we combined imaging flow cytometry with PSF engineering to enable 3D co-localization inside live cells at a very high throughput of thousands of cells per minute. We achieved this by integrating a phase-modulating element (we used both a cylindrical lens and a phase mask) into the imaging path of a commercial imaging flow cytometer (Weiss et al. 2020). Encoding depth information in this way made it possible to gather large statistics on 3D co-localization over short periods of time. We used the method to characterize the physical distances between two labeled DNA loci in live yeast cells, dynamically and on a population scale. We also used it to quantify the cellular entry of nanoparticles for therapeutic delivery designed by Avi Schroeder at the Technion (Fig. 4).
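Depth readout from an astigmatic PSF, such as the one produced by a cylindrical lens, is commonly done by comparing the measured x and y widths of a spot against a calibration curve. The sketch below assumes a simple defocus model for the widths with hypothetical calibration constants. It uses a nearest-match lookup on the square roots of the widths (cf. Huang et al. 2008) and is not necessarily the procedure used in Weiss et al. (2020). All names are hypothetical.

```python
import numpy as np

# Hypothetical calibration constants: in-focus width (px), focal offset and depth scale (um)
SIGMA0, FOCAL_OFFSET, DEPTH_SCALE = 1.0, 0.3, 0.5

def widths(z):
    """Defocus model for an astigmatic PSF: x width is smallest at z = +offset, y width at z = -offset."""
    sx = SIGMA0 * np.sqrt(1 + ((z - FOCAL_OFFSET) / DEPTH_SCALE) ** 2)
    sy = SIGMA0 * np.sqrt(1 + ((z + FOCAL_OFFSET) / DEPTH_SCALE) ** 2)
    return sx, sy

Z_CAL = np.linspace(-0.5, 0.5, 1001)                 # calibration grid (um), 1 nm spacing
SX_CAL, SY_CAL = widths(Z_CAL)

def estimate_z(sx, sy):
    """Return the calibrated depth whose (sx, sy) pair best matches the measured widths,
    comparing square roots of the widths."""
    err = (np.sqrt(SX_CAL) - np.sqrt(sx)) ** 2 + (np.sqrt(SY_CAL) - np.sqrt(sy)) ** 2
    return float(Z_CAL[np.argmin(err)])

print(estimate_z(*widths(0.2)))                      # recovers a depth of about 0.2 um
```

Under this model, each depth in the calibrated range yields a unique (x, y) width pair, so the lookup is unambiguous there. Far outside the two foci, the widths change slowly and depth estimates become less reliable.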

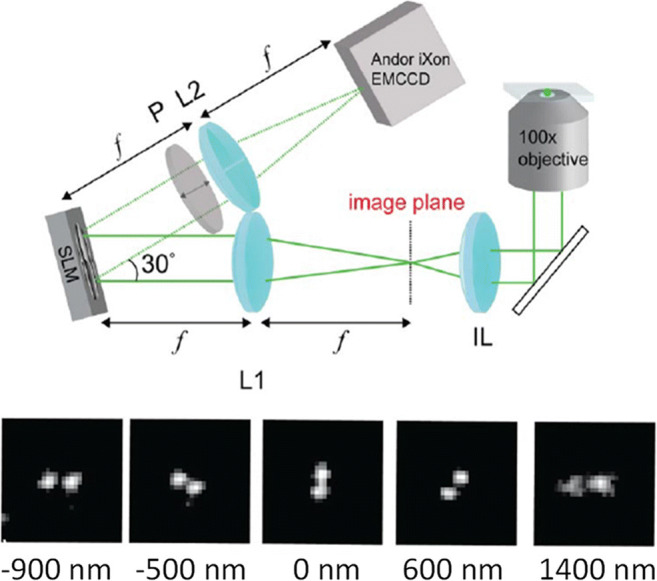

Fig. 4.

Imaging flow cytometry with PSF engineering. a Flow imaging setup, incorporating a cylindrical lens to encode depth via astigmatism. b Measuring 3D chromatin compaction in live yeast cells, using a 2-color fluorescent operator-repressor system for DNA labeling. Scale bar: 2 μm. c Localizing liposome nanoparticles (pink spots) in a human T lymphocyte. Yellow spots represent surface-adhered fluorescent beads, encoding the 3D spherical cell shape. Scale bar: 5 μm. Figure adapted from Weiss et al. (2020)

Another application where deep learning has proved powerful is extracting information from challenging single-particle tracking data (Dosset et al. 2016; Granik et al. 2019; Kowalek et al. 2019; Muñoz-Gil et al. 2020). A common problem in particle-tracking experiments is that the acquired trajectories can be very short, e.g., due to photobleaching, which makes it difficult to derive statistical information from them. In a collaboration with Yael Roichman from Tel Aviv University, we trained a neural net to classify single-particle trajectories by type of diffusion: Brownian, fractional Brownian, or continuous-time random walk (Granik et al. 2019). A main advantage of our deep learning approach is that it outputs an accurate classification from a relatively small number of steps (~25). It can also efficiently handle sets of multiple short trajectories, which are often the only kind of data available.
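The difficulty of short trajectories can be seen even without a neural net. The sketch below simulates fractional Brownian motion (a Hurst exponent H of 0.5 gives ordinary Brownian motion) by Cholesky factorization of the increment covariance. It then estimates the anomalous exponent α from a log-log fit of the time-averaged mean squared displacement. This is a classical feature-based approach, not the neural classifier of Granik et al. (2019). The continuous-time random walk is omitted for brevity, and function names and parameters are hypothetical.

```python
import numpy as np

def fbm_trajectory(n_steps, hurst, rng):
    """1D fractional Brownian motion via Cholesky factorisation of the fGn covariance (hurst=0.5 -> Brownian)."""
    k = np.arange(n_steps)
    gamma = 0.5 * (np.abs(k + 1) ** (2 * hurst) - 2 * np.abs(k) ** (2 * hurst) + np.abs(k - 1) ** (2 * hurst))
    cov = gamma[np.abs(k[:, None] - k[None, :])]         # Toeplitz covariance of the increments
    increments = np.linalg.cholesky(cov) @ rng.standard_normal(n_steps)
    return np.concatenate([[0.0], np.cumsum(increments)])

def msd_exponent(x, max_lag=10):
    """Fit MSD(lag) ~ lag**alpha on a log-log scale, using the time-averaged MSD."""
    lags = np.arange(1, max_lag + 1)
    msd = np.array([np.mean((x[lag:] - x[:-lag]) ** 2) for lag in lags])
    alpha, _ = np.polyfit(np.log(lags), np.log(msd), 1)
    return alpha

rng = np.random.default_rng(2)
for hurst in (0.25, 0.5, 0.75):
    long_alphas = [msd_exponent(fbm_trajectory(1000, hurst, rng)) for _ in range(10)]
    short_alphas = [msd_exponent(fbm_trajectory(25, hurst, rng)) for _ in range(200)]
    print(f"H={hurst}: expected alpha={2 * hurst:.2f}, "
          f"1000 steps: {np.mean(long_alphas):.2f} +/- {np.std(long_alphas):.2f}, "
          f"25 steps: {np.mean(short_alphas):.2f} +/- {np.std(short_alphas):.2f}")
```

For long trajectories, the fitted α concentrates near 2H. At 25 steps the estimates scatter widely, which is the regime the learned classifier described above targets.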

The future

The field of computational microscopy, in which the captured image need not bear any resemblance to the desired image, holds tremendous potential for capturing seemingly hidden data in biological samples. Freeing microscopes from the burden of generating images that are appealing to the human eye opens up fascinating degrees of freedom.

Specifically, neural nets successfully tackle various challenging inverse problems in microscopy, such as denoising, clustering, volumetric imaging, and more (Belthangady and Royer 2019). However, beyond their use in image analysis, an extremely appealing application for neural nets is in optical design, i.e., learning an optimal imaging system for a specific task. This is especially powerful when the route towards optimizing the design metric is unclear: How would one rationally design an optimal depth-/color-encoding PSF for dense emitters in 3D (Hershko et al. 2019; Nehme et al. 2020a, b), or an optimal illumination system for diagnosing malaria in blood smears (Muthumbi et al. 2019)? How does one achieve fast and robust autofocusing (Pinkard et al. 2019)? These are questions that neural nets are already answering, and they are likely to continue to contribute to related areas.

Our research group will continue to pursue various directions in computational microscopy development and to apply existing techniques to biomedical applications. These include observing chromatin dynamics in live cells by single-particle tracking (Nehme et al. 2020a, b), developing diagnostic tools for bacterial growth detection (Ferdman et al. 2018), dynamic microscopic surface profiling (Gordon-Soffer et al. 2020), and sensitive biomolecule measurements.

Acknowledgments

All work presented here is the result of group effort. I have been extremely fortunate in the scientific environments in which I worked, and I have learned from every mentor, colleague, and student I have worked with.

Funding

The author received support from the Zuckerman foundation; from the various funding agencies for the work presented here; and from the private companies, philanthropists, and tax-paying citizens of Israel, the USA, the European Union, and other countries who ultimately pay for this work.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Backer AS, Moerner WE. Extending single-molecule microscopy using optical Fourier processing. J Phys Chem B. 2014;118(28):8313–8329. doi: 10.1021/jp501778z.

- Backlund MP, Lew MD, Backer AS, Sahl SJ, Grover G, Agrawal A, Piestun R, Moerner WE. Simultaneous, accurate measurement of the 3D position and orientation of single molecules. Proc Natl Acad Sci U S A. 2012;109(47):19087–19092. doi: 10.1073/pnas.1216687109.

- Baddeley D, Cannell MB, Soeller C. Three-dimensional sub-100 nm super-resolution imaging of biological samples using a phase ramp in the objective pupil. Nano Res. 2011;4(6):589–598. doi: 10.1007/s12274-011-0115-z.

- Badieirostami M, Lew MD, Thompson MA, Moerner WE. Three-dimensional localization precision of the double-helix point spread function versus astigmatism and biplane. Appl Phys Lett. 2010;97(16):161103. doi: 10.1063/1.3499652.

- Barsic A, Grover G, Piestun R. Three-dimensional super-resolution and localization of dense clusters of single molecules. Sci Rep. 2014;4:5388. doi: 10.1038/srep05388.

- Basiji DA, Ortyn WE, Liang L, Venkatachalam V, Morrissey P. Cellular image analysis and imaging by flow cytometry. Clin Lab Med. 2007;27(3):653–670. doi: 10.1016/j.cll.2007.05.008.

- Belthangady C, Royer LA. Applications, promises, and pitfalls of deep learning for fluorescence image reconstruction. Nat Methods. 2019. doi: 10.1038/s41592-019-0458-z.

- Ben-Eliezer E, Zalevsky Z, Marom E, Konforti N. All-optical extended depth of field imaging system. J Opt A Pure Appl Opt. 2003;5(5):S164–S169. doi: 10.1088/1464-4258/5/5/359.

- Betzig E, Patterson GH, Sougrat R, Lindwasser OW, Olenych S, Bonifacino JS, Davidson MW, Lippincott-Schwartz J, Hess HF. Imaging intracellular fluorescent proteins at nanometer resolution. Science. 2006;313(5793):1642–1645. doi: 10.1126/science.1127344.

- Broeken J, Rieger B, Stallinga S. Simultaneous measurement of position and color of single fluorescent emitters using diffractive optics. Opt Lett. 2014;39(11):3352–3355. doi: 10.1364/OL.39.003352.

- Candès EJ. Compressive sampling. Proceedings of the International Congress of Mathematicians. 2006;3:1433–1452.

- Cohen AE, Moerner WE. Method for trapping and manipulating nanoscale objects in solution. Appl Phys Lett. 2005;86(9):1–3. doi: 10.1063/1.1872220.

- Cox S, Rosten E, Monypenny J, Jovanovic-Talisman T, Burnette DT, Lippincott-Schwartz J, Jones GE, Heintzmann R. Bayesian localization microscopy reveals nanoscale podosome dynamics. Nat Methods. 2011;9(2):195–200. doi: 10.1038/nmeth.1812.

- Dardikman G, Nygate YN, Barnea I, Turko NA, Singh G, Javidi B, Shaked NT. Integral refractive index imaging of flowing cell nuclei using quantitative phase microscopy combined with fluorescence microscopy. Biomed Opt Express. 2018. doi: 10.1364/boe.9.001177.

- Dertinger T, Colyer R, Iyer G, Weiss S, Enderlein J. Fast, background-free, 3D super-resolution optical fluctuation imaging (SOFI). Proc Natl Acad Sci U S A. 2009;106(52):22287–22292. doi: 10.1073/pnas.0907866106.

- Dong C, Loy CC, He K, Tang X. Image super-resolution using deep convolutional networks. IEEE Trans Pattern Anal Mach Intell. 2016;38(2):295–307. doi: 10.1109/TPAMI.2015.2439281.

- Donoho DL. Compressed sensing. IEEE Trans Inf Theory. 2006. doi: 10.1109/TIT.2006.871582.

- Dosset P, Rassam P, Fernandez L, Espenel C, Rubinstein E, Margeat E, Milhiet PE. Automatic detection of diffusion modes within biological membranes using back-propagation neural network. BMC Bioinformatics. 2016. doi: 10.1186/s12859-016-1064-z.

- Dowski ER, Cathey WT. Extended depth of field through wave-front coding. Appl Opt. 1995;34(11):1859. doi: 10.1364/AO.34.001859.

- Ferdman B, Weiss LE, Alalouf O, Haimovich Y, Shechtman Y. Ultrasensitive refractometry via supercritical angle fluorescence. ACS Nano. 2018;12(12):11892–11898. doi: 10.1021/acsnano.8b05849.

- Ferdman B, Nehme E, Weiss LE, Orange R, Alalouf O, Shechtman Y. VIPR: vectorial implementation of phase retrieval for fast and accurate microscopic pixel-wise pupil estimation. Opt Express. 2020. doi: 10.1364/oe.388248.

- Gazagnes S, Soubies E, Blanc-Féraud L. High density molecule localization for super-resolution microscopy using CEL0 based sparse approximation. ISBI 2017 - IEEE Int Symp Biomed Imaging. 2017;4.

- George TC, Basiji DA, Hall BE, Lynch DH, Ortyn WE, Perry DJ, Seo MJ, Zimmerman CA, Morrissey PJ. Distinguishing modes of cell death using the ImageStream® multispectral imaging flow cytometer. Cytometry A. 2004;59A(2):237–245. doi: 10.1002/cyto.a.20048.

- Gordon-Soffer R, Weiss LE, Eshel R, Ferdman B, Nehme E, Bercovici M, Shechtman Y. Microscopic scan-free surface profiling over extended axial ranges by point-spread-function engineering. Sci Adv. 2020;6(44):eabc0332. doi: 10.1126/sciadv.abc0332.

- Granik N, Weiss LE, Nehme E, Levin M, Chein M, Perlson E, Roichman Y, Shechtman Y. Single-particle diffusion characterization by deep learning. Biophys J. 2019;117(2):185–192. doi: 10.1016/j.bpj.2019.06.015.

- Gualda EJ, Pereira H, Martins GG, Gardner R, Moreno N. Three-dimensional imaging flow cytometry through light-sheet fluorescence microscopy. Cytometry A. 2017;91(2):144–151. doi: 10.1002/cyto.a.23046.

- Hell SW, Sahl SJ, Bates M, Zhuang X, Heintzmann R, Booth MJ, Bewersdorf J, Shtengel G, Hess H, Tinnefeld P, Honigmann A, Jakobs S, Testa I, Cognet L, Lounis B, Ewers H, Davis SJ, … Cordes T. The 2015 super-resolution microscopy roadmap. J Phys D Appl Phys. 2015;48(44). doi: 10.1088/0022-3727/48/44/443001.

- Hershko E, Weiss LE, Michaeli T, Shechtman Y. Multicolor localization microscopy and point-spread-function engineering by deep learning. Opt Express. 2019. doi: 10.1364/oe.27.006158.

- Hess ST, Girirajan TPK, Mason MD. Ultra-high resolution imaging by fluorescence photoactivation localization microscopy. Biophys J. 2006;91(11):4258–4272. doi: 10.1529/biophysj.106.091116.

- Holden SJ, Uphoff S, Kapanidis AN. DAOSTORM: an algorithm for high-density super-resolution microscopy. Nat Methods. 2011;8(4):279–280. doi: 10.1038/nmeth0411-279.

- Huang B, Wang W, Bates M, Zhuang X. Three-dimensional super-resolution imaging by stochastic optical reconstruction microscopy. Science. 2008;319(5864):810–813. doi: 10.1126/science.1153529.

- Huang F, Schwartz SL, Byars JM, Lidke KA. Simultaneous multiple-emitter fitting for single molecule super-resolution imaging. Biomed Optics Express. 2011;2(5):1377. doi: 10.1364/BOE.2.001377. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hugelier S, De Rooi JJ, Bernex R, Duwé S, Devos O, Sliwa M, Dedecker P, Eilers PHC, Ruckebusch C (2016) Sparse deconvolution of high-density super-resolution images. Sci Rep. 10.1038/srep21413 [DOI] [PMC free article] [PubMed]

- Jesacher A, Bernet S, Ritsch-Marte M. Colour hologram projection with an SLM by exploiting its full phase modulation range. Opt Express. 2014;22(17):20530–20541. doi: 10.1364/OE.22.020530. [DOI] [PubMed] [Google Scholar]

- Jia S, Vaughan JC, Zhuang X. Isotropic three-dimensional super-resolution imaging with a self-bending point spread function. Nat Photonics. 2014;8(4):302–306. doi: 10.1038/nphoton.2014.13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kay SM. Technometrics. 1995. Fundamentals of statistical signal processing: estimation theory; p. 303. [Google Scholar]

- Kay DB, Wheeless LL. Laser stroboscopic photography. Technique for cell orientation studies in flow. J Histochem Cytochem. 1976;24(1):265–268. doi: 10.1177/24.1.768371. [DOI] [PubMed] [Google Scholar]

- Kay DB, Cambier JL, Wheeless LL. Imaging in flow. J Histochem Cytochem. 1979;27(1):329–334. doi: 10.1177/27.1.374597. [DOI] [PubMed] [Google Scholar]

- Kim J, Lee JK, & Lee KM (2016) Accurate image super-resolution using very deep convolutional networks. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 10.1109/CVPR.2016.182

- Kowalek P, Loch-Olszewska H, Szwabiński J (2019) Classification of diffusion modes in single-particle tracking data: feature-based versus deep-learning approach. Phys Rev E. 10.1103/PhysRevE.100.032410 [DOI] [PubMed]

- Lew MD, Lee SF, Badieirostami M, Moerner WE (2011) Corkscrew point spread function for far-field three-dimensional nanoscale localization of pointlike objects. Opt Lett. 10.1364/ol.36.000202 [DOI] [PMC free article] [PubMed]

- Muñoz-Gil G, Garcia-March MA, Manzo C, Martín-Guerrero JD, Lewenstein M (2020) Single trajectory characterization via machine learning. New J Phys. 10.1088/1367-2630/ab6065

- Muthumbi A, Chaware A, Kim K, Zhou KC, Konda PC, Chen R, Judkewitz B, Erdmann A, Kappes B, Horstmeyer R (2019) Learned sensing: jointly optimized microscope hardware for accurate image classification. Biomed Optics Express. 10.1364/boe.10.006351 [DOI] [PMC free article] [PubMed]

- Nehme E, Weiss LE, Michaeli T, Shechtman Y. Deep-STORM: super-resolution single-molecule microscopy by deep learning. Optica. 2018;5(4):458. doi: 10.1364/OPTICA.5.000458. [DOI] [Google Scholar]

- Nehme E, Ferdman B, Weiss LE, Naor T, Freedman D, Michaeli T, & Shechtman Y (2020a) Learning an optimal PSF-pair for ultra-dense 3D localization microscopy. http://arxiv.org/abs/2009.14303

- Nehme E, Freedman D, Gordon R, Ferdman B, Weiss LE, Alalouf O, Naor T, Michaeli T, Shechtman Y (2020b) DeepSTORM3D: dense 3D localization microscopy and PSF design by deep learning. Nat Methods [DOI] [PMC free article] [PubMed]

- Ober RJ, Ram S, Ward ES. Localization accuracy in single-molecule microscopy. Biophys J. 2004;86(2):1185–1200. doi: 10.1016/S0006-3495(04)74193-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ouyang W, Aristov A, Lelek M, Hao X, Zimmer C. Deep learning massively accelerates super-resolution localization microscopy. Nat Biotechnol. 2018;36(5):460–468. doi: 10.1038/nbt.4106. [DOI] [PubMed] [Google Scholar]

- Pavani SRP, Thompson MA, Biteen JS, Lord SJ, Liu N, Twieg RJ, Piestun R, Moerner WE. Three-dimensional, single-molecule fluorescence imaging beyond the diffraction limit by using a double-helix point spread function. Proc Natl Acad Sci. 2009;106(9):2995–2999. doi: 10.1073/pnas.0900245106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Petrov PN, Shechtman Y, Moerner WE. Measurement-based estimation of global pupil functions in 3D localization microscopy. Opt Express. 2017;25(7):7945. doi: 10.1364/OE.25.007945. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pinkard H, Phillips Z, Babakhani A, Fletcher DA, Waller L (2019) Deep learning for single-shot autofocus microscopy. Optica. 10.1364/optica.6.000794

- Qu X, Wu D, Mets L, Scherer NF. Nanometer-localized multiple single-molecule fluorescence microscopy. Proc Natl Acad Sci U S A. 2004;101(31):11298–11303. doi: 10.1073/pnas.0402155101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rivenson Y, Göröcs Z, Günaydin H, Zhang Y, Wang H, Ozcan A. Deep learning microscopy. Optica. 2017;4(11):1437. doi: 10.1364/OPTICA.4.001437. [DOI] [Google Scholar]

- Rust MJ, Bates M, Zhuang X. Sub-diffraction-limit imaging by stochastic optical reconstruction microscopy (STORM) Nat Methods. 2006;3(10):793–796. doi: 10.1038/nmeth929. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sergé A, Bertaux N, Rigneault H, Marguet D. Dynamic multiple-target tracing to probe spatiotemporal cartography of cell membranes. Nat Methods. 2008;5(8):687–694. doi: 10.1038/nmeth.1233.

- Shechtman Y, Gazit S, Szameit A, Eldar YC, Segev M. Super-resolution and reconstruction of sparse images carried by incoherent light. Opt Lett. 2010;35(8):1148–1150. doi: 10.1364/OL.35.001148.

- Shechtman Y, Beck A, Eldar YC. GESPAR: efficient phase retrieval of sparse signals. IEEE Trans Signal Process. 2014;62(4):928–938. doi: 10.1109/TSP.2013.2297687.

- Shechtman Y, Sahl SJ, Backer AS, Moerner WE. Optimal point spread function design for 3D imaging. Phys Rev Lett. 2014;113(13):133902. doi: 10.1103/PhysRevLett.113.133902.

- Shechtman Y, Weiss LE, Backer AS, Sahl SJ, Moerner WE. Precise three-dimensional scan-free multiple-particle tracking over large axial ranges with tetrapod point spread functions. Nano Lett. 2015;15(6):4194–4199. doi: 10.1021/acs.nanolett.5b01396.

- Shechtman Y, Weiss LE, Backer AS, Lee MY, Moerner WE. Multicolour localization microscopy by point-spread-function engineering. Nat Photonics. 2016;10(9):590–594. doi: 10.1038/nphoton.2016.137.

- Solomon O, Mutzafi M, Segev M, Eldar YC. Sparsity-based super-resolution microscopy from correlation information. Opt Express. 2018;26(14):18238. doi: 10.1364/oe.26.018238.

- Squires A, Cohen A, Moerner WE. Anti-Brownian traps. In: Encyclopedia of Biophysics. Berlin: Springer; 2018.

- Sung Y, Lue N, Hamza B, Martel J, Irimia D, Dasari RR, Choi W, Yaqoob Z, So P. Three-dimensional holographic refractive-index measurement of continuously flowing cells in a microfluidic channel. Phys Rev Appl. 2014. doi: 10.1103/PhysRevApplied.1.014002.

- Szameit A, Shechtman Y, Osherovich E, Bullkich E, Sidorenko P, Dana H, Steiner S, Kley EB, Gazit S, Cohen-Hyams T, Shoham S, Zibulevsky M, Yavneh I, Eldar YC, Cohen O, Segev M. Sparsity-based single-shot subwavelength coherent diffractive imaging. Nat Mater. 2012;11(5):455–459. doi: 10.1038/nmat3289.

- von Chamier L, Laine R, Jukkala J, Spahn C, Krentzel D, Nehme E, Lerche M, Hernández-Pérez S, Mattila P, Karinou E, Holden S, Solak AC, Krull A, Buchholz T-O, Jones M, Royer L, Leterrier C, et al. ZeroCostDL4Mic: an open platform to use deep-learning in microscopy. bioRxiv. 2020. doi: 10.1101/2020.03.20.000133.

- von Diezmann A, Shechtman Y, Moerner WE. Three-dimensional localization of single molecules for super-resolution imaging and single-particle tracking. Chem Rev. 2017;117(11):7244–7275. doi: 10.1021/acs.chemrev.6b00629.

- Wang Q, Moerner WE. Single-molecule motions enable direct visualization of biomolecular interactions in solution. Nat Methods. 2014;11(5):555–558. doi: 10.1038/nmeth.2882.

- Wang Z, Liu D, Yang J, Han W, Huang T. Deep networks for image super-resolution with sparse prior. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV). 2015. doi: 10.1109/ICCV.2015.50.

- Weigert M, Schmidt U, Boothe T, Müller A, Dibrov A, Jain A, Wilhelm B, Schmidt D, Broaddus C, Culley S, Rocha-Martins M, Segovia-Miranda F, Norden C, Henriques R, Zerial M, Solimena M, Rink J, et al. Content-aware image restoration: pushing the limits of fluorescence microscopy. Nat Methods. 2018. doi: 10.1038/s41592-018-0216-7.

- Weiss LE, Shalev Ezra Y, Goldberg S, Ferdman B, Adir O, Schroeder A, Alalouf O, Shechtman Y. Three-dimensional localization microscopy in live flowing cells. Nat Nanotechnol. 2020. doi: 10.1038/s41565-020-0662-0.

- Wu J, Li J, Chan RKY. A light sheet based high throughput 3D-imaging flow cytometer for phytoplankton analysis. Opt Express. 2013. doi: 10.1364/oe.21.014474.

- Zhang P, Liu S, Chaurasia A, Ma D, Mlodzianoski MJ, Culurciello E, Huang F. Analyzing complex single-molecule emission patterns with deep learning. Nat Methods. 2018;15(11):913–916. doi: 10.1038/s41592-018-0153-5.

- Zhu L, Zhang W, Elnatan D, Huang B. Faster STORM using compressed sensing. Nat Methods. 2012;9(7):721–723. doi: 10.1038/nmeth.1978.