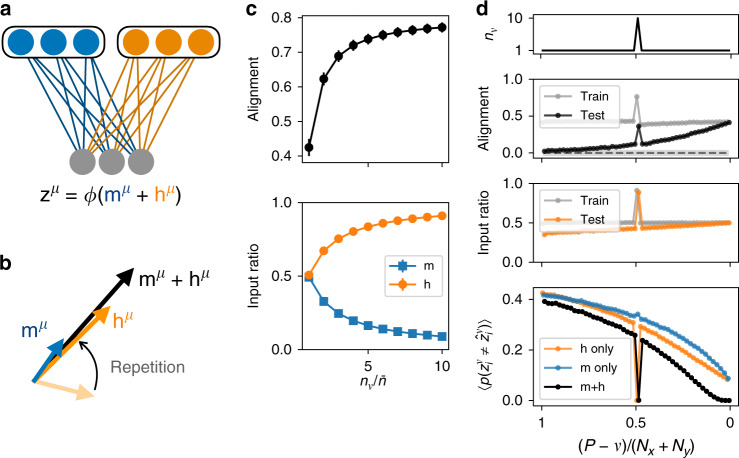

Fig. 3. Inputs to the downstream population become aligned through repetition.

(a) A downstream population receives input from two pathways: the first input, m, is trained with fast supervised learning, while the second input, h, is modified with slow Hebbian learning. (b) Through repetition, the second input becomes increasingly aligned with the first, and its projection along the readout direction grows. (c) Top: The normalized overlap between m and h for one particular pattern that is repeated nν times. Bottom: The ratio of the projection of each input component (m or h) along the readout direction to the total input along the readout direction. (d) Top: In a two-pathway network trained sequentially on P patterns, one pattern is repeated 10 times during training, while the others are trained only once. Middle panels: The alignment for each pattern during sequential training and testing (upper panel; the shaded region is the chance alignment for randomly oriented vectors), and the ratio of the input h along the readout direction to the total input along the readout direction (lower panel). Bottom: The error rate, averaged over Nz readout units, for the trained network with either both inputs intact (black) or one input removed (blue and orange); curves are offset slightly for clarity. All points in (c) and (d) are simulations with α = β = 1 and Nx = Ny = Nz = 1000, averaged over n = 1000 randomly initialized networks. Error bars denote standard deviations and, where not visible, are smaller than the plotted points.
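To make the alignment measure in panels (c) and (d) concrete, the sketch below computes a normalized overlap between two vectors and estimates the chance level for randomly oriented vectors. It assumes the normalized overlap is the cosine of the angle between the vectors, and it draws random vectors with independent Gaussian entries; neither choice is stated in the caption, and the function names are hypothetical. It does not reproduce the simulations in the figure.

```python
import math
import random


def normalized_overlap(u, v):
    """Cosine of the angle between u and v (assumed definition of the overlap)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def chance_overlap_std(dim, trials=200, seed=0):
    """Empirical standard deviation of the overlap between independent random vectors."""
    rng = random.Random(seed)
    samples = []
    for _ in range(trials):
        # Assumption: randomly oriented vectors are modeled with i.i.d. Gaussian entries.
        u = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        samples.append(normalized_overlap(u, v))
    mean = sum(samples) / trials
    return math.sqrt(sum((s - mean) ** 2 for s in samples) / trials)


dim = 1000  # same dimension as Nx = Ny = Nz = 1000 in the caption
spread = chance_overlap_std(dim)
print(f"empirical chance spread: {spread:.4f}")
print(f"1/sqrt(dim):             {1 / math.sqrt(dim):.4f}")
```

For independent random vectors in a high-dimensional space, the overlap is centered on zero with a spread of roughly 1/sqrt(dim), so an overlap well above that scale indicates alignment beyond chance.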