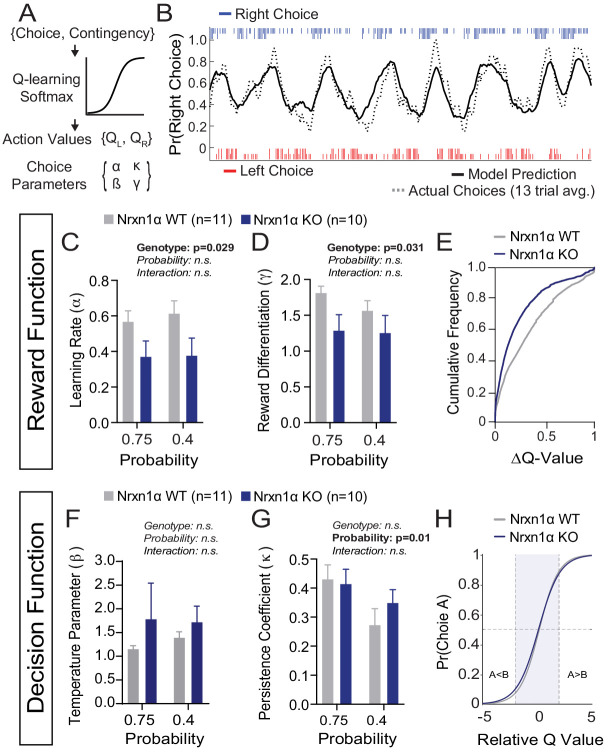

Figure 4. A deficit in value updating underlies abnormal allocation of choices in Neurexin1α mutants.

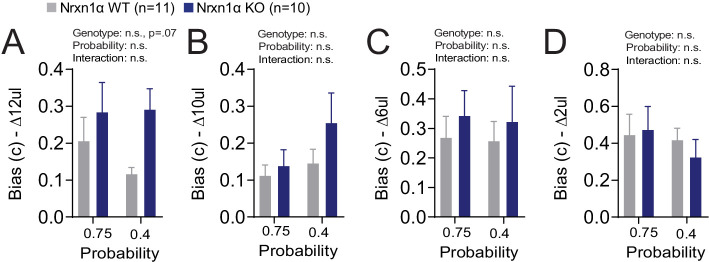

(A) Q-learning reinforcement model. Mouse choice was modeled as a probabilistic choice between two options of different value (QL, QR) using a softmax decision function. Data from each reinforcement rate were grouped before model fitting. (B) Example of model prediction versus actual animal choice. Choice probability was calculated in a moving window of 13 trials. Long and short markers indicate large and small reward outcomes. (C and D) Compared with littermate controls (gray, n = 11), Nrxn1α mutants (blue, n = 10) exhibit deficits in the learning rate, α, which describes the weight given to new reward information, and in γ, a utility function that relates how sensitively mice integrate rewards of different magnitudes (two-way RM ANOVA). (E) Nrxn1α KOs exhibit an enrichment of low ΔQ-value trials. (F and G) Nrxn1α mutants do not exhibit significant differences in explore–exploit behavior (F, captured by β) or in their persistence toward previously selected actions (G, captured by κ). (K) There is no significant difference in the decision function of Nrxn1α wild-type and mutant animals. All data are represented as mean ± SEM. Bias figures can be found in Figure 4—figure supplement 1.
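For readers unfamiliar with this model class, the following is a minimal sketch of how the parameters named in the caption could interact. It is not the fitting code used for this figure. The functional forms are assumptions: a power-law utility (reward raised to γ), a delta-rule update of the chosen option only, and a two-option softmax in which κ weights the previous choice and a constant bias term is added. The 13-trial window is implemented as a trailing window, which is also an assumption. All function names are hypothetical.

```python
import math

def utility(reward, gamma):
    # Assumed power-law utility: gamma < 1 compresses differences in reward size.
    return reward ** gamma

def p_right(q_left, q_right, beta, kappa=0.0, prev_choice=0, bias=0.0):
    # Two-option softmax written as a logistic function of the value difference.
    # prev_choice is +1 if the last choice was right, -1 if left, 0 if none.
    # beta scales value sensitivity (explore-exploit); kappa scales persistence.
    z = beta * (q_right - q_left) + kappa * prev_choice + bias
    return 1.0 / (1.0 + math.exp(-z))

def update(q, choice, reward, alpha, gamma):
    # Delta-rule update of the chosen option; alpha is the learning rate.
    # The unchosen option's value is left unchanged (an assumption).
    new_q = dict(q)
    new_q[choice] = q[choice] + alpha * (utility(reward, gamma) - q[choice])
    return new_q

def moving_choice_probability(choices, window=13):
    # Fraction of right ('R') choices in each trailing window of trials.
    return [
        sum(c == "R" for c in choices[i - window:i]) / window
        for i in range(window, len(choices) + 1)
    ]

# Example: one rewarded right choice starting from equal values.
q = update({"L": 0.0, "R": 0.0}, "R", reward=1.0, alpha=0.5, gamma=1.0)
prob = p_right(q["L"], q["R"], beta=2.0)
```

Under these assumptions, a lower α slows how quickly the chosen value tracks new outcomes, and a lower γ shrinks the difference between the values learned for large and small rewards. Either change would reduce the value difference between options, which would be consistent with the enrichment of low ΔQ trials in panel E.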