Abstract

Aims

International early warning scores (EWS) including the additive National Early Warning Score (NEWS) and logistic EWS currently utilise physiological snapshots to predict clinical deterioration. We hypothesised that a dynamic score including vital sign trajectory would improve discriminatory power.

Methods

Multicentre retrospective analysis of electronic health record data from postoperative patients admitted to cardiac surgical wards in four UK hospitals. Least absolute shrinkage and selection operator-type regression (LASSO) was used to develop a dynamic model (DyniEWS) to predict a composite adverse event of cardiac arrest, unplanned intensive care re-admission or in-hospital death within 24 h.

Results

A total of 13,319 postoperative adult cardiac patients contributed 442,461 observations, of which 4234 (0.96%) were followed by an adverse event within 24 h. The new dynamic model (AUC = 0.80 [95% CI 0.78−0.83], AUPRC = 0.12 [0.10−0.14]) outperforms both an updated snapshot logistic model (AUC = 0.76 [0.73−0.79], AUPRC = 0.08 [0.06−0.10]) and the additive National Early Warning Score (AUC = 0.73 [0.70−0.76], AUPRC = 0.05 [0.02−0.08]). Controlling for the false alarm rates to be at current levels using NEWS cut-offs of 5 and 7, DyniEWS delivers a 7% improvement in balanced accuracy and increased sensitivities from 41% to 54% at NEWS 5 and 18% to 30% at NEWS 7.

Conclusions

Using an advanced statistical approach, we created a model that can detect dynamic changes in risk of unplanned readmission to intensive care, cardiac arrest or in-hospital mortality and can be used in real time to risk-prioritise clinical workload.

Keywords: Cardiac surgery, Dynamic prediction, Early warning scores, National early warning score, Postoperative deterioration

Introduction

The National Early Warning Score (NEWS) utilises vital sign snapshots to detect patients at high risk of clinical deterioration.1, 2, 3 Despite NHS endorsement as the ‘gold standard’, the predictive value of any given NEWS score is uncertain and subject to inter-specialty variation.4, 5 Although it seems intuitive that vital sign trajectory should be an important determinant of the need for clinical review, no currently used Early Warning Score (EWS) includes vital sign trends.6, 7, 8, 9

We have recently developed a logistic early warning score (logEWS) that was better than NEWS at discriminating patients who had an adverse event after cardiac surgery from those who did not.10 Any given logEWS score reflects the percentage chance of acute deterioration within a 24 h time period. As well as offering greater transparency, the logEWS concept introduces the potential for specialty specific calibration.

Electronic calculation of logEWS using a web-based app reveals wide differences in percentage chance of deterioration for many common clinical scenarios at the two recommended escalation thresholds of NEWS ≥ 5 and ≥7.10, 11, 12 Patients with stable or improving physiology, with so-called ‘soft NEWS scores’, contribute to the high ‘non-event’ rate at both escalation thresholds and to loss of confidence in NEWS.13

We hypothesise that individual patient trajectory should be factored into the EWS model by giving additional weighting to the deteriorating patient and reduced weighting to the improving patient. Using an advanced statistical approach, a dynamic scoring model was developed that takes into account whether physiology is improving or deteriorating and the rate of that change over time.

Methods

Study population and data collection

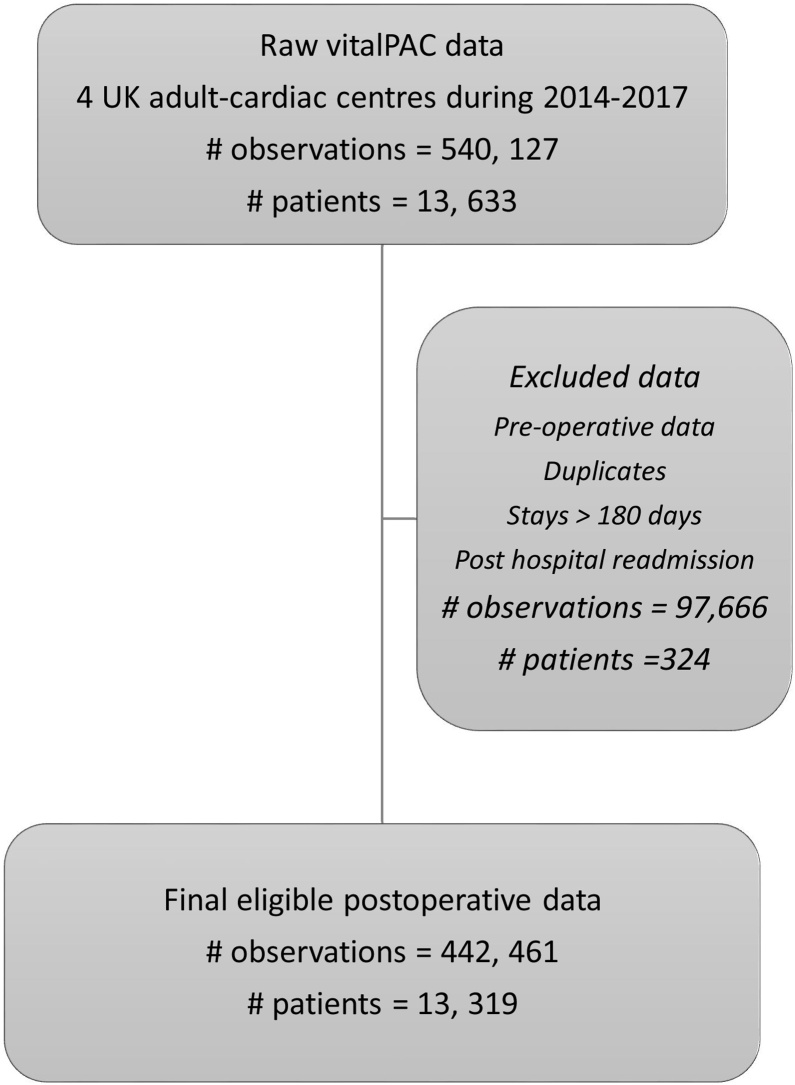

Health Research Authority approval was granted for this study and ethics approval was not applicable. The study population and data sources have been described previously in our earlier snapshot logistic early warning score paper.10 Briefly, we studied adult patients undergoing risk-stratified major cardiac surgery over a three-year period (1st April 2014–31st March 2017) in four UK Adult Cardiac Surgical Centres: Coventry, Middlesbrough, Papworth and Wolverhampton. All centres used vitalPAC™ (CareFlows Vitals, System C Healthcare, Maidstone, Kent, UK) to electronically record patients’ vital signs on the postoperative surgical wards. Note that data have been curated from our earlier work. We excluded observations with missing values due to software errors or unused oxygen delivery values, observations recorded before cardiac surgery, observations from long stayers (>180 days post-surgery), observations recorded after readmission to hospital following surgery, and duplicates (Fig. 1). The final dataset contains no missing data.

Fig. 1.

Flow chart of data processing steps for vitalPAC™.

Outcomes

Our primary objective was to develop a dynamic scoring model that uses information on patient trajectory, and to compare it against a simple snapshot logistic model and the additive NEWS in predicting a composite adverse event of cardiac arrest, unplanned intensive care re-admission or in-hospital death within 24 h. Secondary objectives included highlighting the specific features of patient vital sign trends that contribute most to the prediction of adverse events.

Score calculation

We developed a dynamic prediction model (DyniEWS) that uses both snapshot and individual patient trajectories of vital signs. To directly compare against NEWS we constructed the model using the same snapshot variables. We increased the number of categories for oxygen therapy from two used by NEWS to four: category 0, room air; category 1, FiO2 0.25−0.34, Venturi mask or nasal cannulae with oxygen flow <5 l.min−1; category 2, FiO2 0.35−0.44, standard oxygen face mask or nasal cannulae with oxygen flow ≥5 l.min−1; and category 3, FiO2 ≥ 0.45 or reservoir oxygen mask. Following previous findings, we allowed for non-symmetric effects of continuous predictors by breaking each physiological measurement into two variables reflecting positive (max(0, value − median value)) and negative (max(0, median value − value)) deviations from the median value. To capture the vital trends of each patient, we constructed, for each vital sign, the difference from the most recent value (the most recent rate of change), the average (level) and standard deviation (variability) of the three most recent values (i.e. a rolling window of three previous records) as well as those across all sequential values. Frequency of measurements in the 6 h prior to each vital record was also included in the predictor set under the clinical hypothesis that the monitoring frequency is associated with deteriorating patient characteristics. Further discussion of the choice of the observation window is available in Supplementary Section 2.5 and Supplementary Table 8.
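The feature construction described above can be sketched in a few lines. This is a minimal illustration, not the authors' script (their R code is in the supplementary materials). The function names are hypothetical, and two readings are assumed: deviations are taken as non-negative magnitudes above and below the median, and the rolling window covers the latest three values including the current one.

```python
from statistics import mean, stdev


def deviations(value, median):
    """Split a measurement into non-negative above- and below-median parts."""
    plus = max(0.0, value - median)
    minus = max(0.0, median - value)
    return plus, minus


def trajectory_features(values, window=3):
    """Trend features for the latest record of one vital sign.

    `values` is the time-ordered history for one patient, latest value last.
    """
    recent = values[-window:]
    return {
        # most recent change: latest value minus the one before it
        "diff": values[-1] - values[-2] if len(values) > 1 else 0.0,
        # level and variability over the rolling window (assumed to include the latest value)
        "roll_mean": mean(recent),
        "roll_sd": stdev(recent) if len(recent) > 1 else 0.0,
        # level across all sequential values
        "all_mean": mean(values),
    }


def records_in_previous_hours(times_h, hours=6.0):
    """Count earlier records taken within `hours` before the latest record."""
    latest = times_h[-1]
    return sum(1 for t in times_h[:-1] if 0 < latest - t <= hours)
```

For example, a respiratory rate of 22 against a median of 17 gives `deviations(22, 17) == (5.0, 0.0)`, so only the above-median variable is non-zero.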

Statistical analysis

Least absolute shrinkage and selection operator–type regression (LASSO) was used to perform variable selection by imposing a penalty to models with a large number of predictors and shrinking the coefficients of the less influential variables to zero.14 This statistical approach improves the accuracy of prediction while reducing the model complexity. DyniEWS was compared against the snapshot logEWS2 (a revised version of the original logEWS)10 and the classical NEWS.1 Sensitivity analyses for a number of alternative forms of DyniEWS were also performed (Supplementary Section 2.2).
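The shrink-to-zero behaviour of the LASSO penalty can be illustrated with the soft-thresholding operator, which is the closed-form LASSO solution for a single coefficient under an orthonormal design. This is a textbook sketch and not the fitting routine used for DyniEWS. The coefficient names and values below are hypothetical.

```python
def soft_threshold(coef, penalty):
    """Shrink a coefficient towards zero by `penalty`; return 0.0 if its size is at most `penalty`."""
    if coef > penalty:
        return coef - penalty
    if coef < -penalty:
        return coef + penalty
    return 0.0


# Hypothetical unpenalised coefficients, chosen only to show the effect of the penalty.
unpenalised = {"resp_rate_plus": 0.90, "heart_rate_minus": 0.05, "sbp_minus": -0.60}
penalised = {name: soft_threshold(b, 0.10) for name, b in unpenalised.items()}
```

The weak predictor (`heart_rate_minus`) is removed entirely, while the stronger ones are kept with smaller coefficients, which is how variable selection and shrinkage happen in one step.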

Model evaluation

Model performance was assessed by area under the receiver operating characteristic curve (AUC), area under the precision-recall curve (AUPRC), sensitivity, specificity and balanced accuracy.15, 16 Considering clinical utility, sensitivity was assessed when specificity was fixed at the levels achieved by NEWS at cut-offs of 5 and 7, respectively.
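One way to read "sensitivity at fixed specificity" is sketched below: choose the lowest alarm threshold on the model's predicted risk that reaches the target specificity, then report the sensitivity at that threshold. The function name and the toy data are hypothetical, and the authors' exact matching procedure may differ.

```python
def sensitivity_at_specificity(scores, labels, target_specificity):
    """Sensitivity of the rule `score >= threshold` at the lowest threshold
    whose specificity is at least `target_specificity`."""
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    positives = [s for s, y in zip(scores, labels) if y == 1]
    chosen = float("inf")
    # Specificity can only rise as the threshold rises, so the first hit is the lowest.
    for threshold in sorted(set(scores)):
        specificity = sum(1 for s in negatives if s < threshold) / len(negatives)
        if specificity >= target_specificity:
            chosen = threshold
            break
    sensitivity = sum(1 for s in positives if s >= chosen) / len(positives)
    return chosen, sensitivity


# Toy predicted risks and outcomes (1 = adverse event within 24 h); purely illustrative.
toy_scores = [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.10]
toy_labels = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1]
threshold, sensitivity = sensitivity_at_specificity(toy_scores, toy_labels, 0.80)
```

In the study, the target specificity would be whatever NEWS achieves at its cut-off of 5 or 7, so that both scores generate the same false alarm rate.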

Published comparisons of different EWS have traditionally utilised AUC to measure discriminatory performance and ability to predict serious adverse events (SAE). There is accumulating evidence that for heavily imbalanced data with very low incidences of SAE, AUC may be misleading as it can remain high even when most or all of the rare events (SAEs) are misclassified.17, 18 The precision-recall curve, on the other hand, focuses on patients with events and plots precision (the fraction of the predicted positives that are true positives) against recall (sensitivity). The reference is the horizontal line at the prevalence of events. It has been recommended that both ROC and PRC should be presented for imbalanced outcomes typically encountered in these studies.16, 17 Further discussion is available in Supplementary Sections 1.2 and 2.5.

Model validation

The time series feature of patient records allows for a temporal model validation procedure which reflects both the association of consecutive records in the patient record trajectory and also the amount of heterogeneity across patients.19 The 36-month data in each hospital were split into nine 4-month folds in temporal order. The last 4-month fold in each hospital (roughly 10% of all observations) was used as the held-out test set while the remaining 32-month data (i.e. the training set) was used for model development and internal temporal validation. AUC, calibration slope (ideal value is 1; values >1 indicate under-fitting and <1, over-fitting), calibration-in-the-large (ideal value is 0; values >0 indicate under-fitting and <0, over-fitting) and calibration plots were reported. Details explaining the statistical and validation schemes are available in the Supplementary Sections 2.3 and 2.5.
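The temporal split can be sketched as follows. The sketch assumes that folds are aligned to calendar months counted from the study start date of 1 April 2014; the text does not state the exact fold boundaries, and the function and field names are hypothetical.

```python
from datetime import date

STUDY_START = date(2014, 4, 1)  # start of the 36-month study period


def fold_index(record_date, start=STUDY_START, months_per_fold=4):
    """Return which 4-month block (0 to 8) of the study period a record falls in."""
    months_elapsed = (record_date.year - start.year) * 12 + (record_date.month - start.month)
    return months_elapsed // months_per_fold


def split_train_test(records, n_folds=9):
    """Hold out the final fold as the test set; all earlier folds form the training set."""
    train = [r for r in records if fold_index(r["date"]) < n_folds - 1]
    test = [r for r in records if fold_index(r["date"]) == n_folds - 1]
    return train, test
```

Under this assumption the held-out fold covers December 2016 to March 2017, and the eight earlier folds can be used in temporal order for internal validation.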

Materials

All analyses were performed using the R statistical software version 3.5.3 (packages: pROC, caret, dplyr, ggplot2). Programming scripts are fully available in the supplementary materials. Model results were reported following the TRIPOD multivariable prediction model checklist and the STROBE checklist for observational studies.

Results

Data description

A total of 13,319 patients contributed 442,461 records across four hospitals with 4234 preceding composite adverse events in 24 h (0.96% of records and 4.1% of patients). Record-level data were used across all analyses. Patient characteristics and the distribution of vitals are reported in Table 1. Descriptive statistics for other hospitals and for all variables that capture the trajectory of vitals are available in Supplementary Tables 1.1 & 1.2.

Table 1.

Distribution of records, outcomes and snapshot patient vitals.

| Characteristics | Total |
|---|---|
| Patient and events | |
| #Patients | 13,319 |
| #Records | 442,461 |
| #Repeated measurements per patient | |
| Median(Q1,Q3) | 39 (25, 66) |
| #Days in wards | |
| Median(Q1,Q3) | 7 (4, 13) |
| #Composite adverse events in 24 h | |
| n (%) | 4234 (0.96) |
| NEWS score | |
| Mean(SD) | 2.2 (1.7) |
| #Records in 6 h before each new record | |
| Median(Q1,Q3) | 1 (1, 1) |
| Physiological vitals | |
| FiO2 category, n (%) | |
| Room air | 297,723 (67.3) |
| Nasal cannula | 125,321 (28.3) |
| Simple mask | 19,211 (4.3) |
| Reservoir mask | 206 (0.1) |
| Level of consciousness | |
| Alert | 441,126 (99.7) |
| Others | 1335 (0.3) |
| Respiratory rate (breaths min−1) | |
| Mean(SD) | 17 (2.4) |
| Oxygen saturation (%) | |
| Mean(SD) | 96 (2.0) |
| Temperature (°C) | |
| Mean(SD) | 36.6 (0.5) |
| Systolic blood pressure (mmHg) | |
| Mean(SD) | 120 (18) |
| Heart rate (beats min−1) | |
| Mean(SD) | 82 (16) |

# Patients = number of patients, # records = number of records, ... and so on

DyniEWS and feature importance

The final model, DyniEWS, contains the frequency of measurements in 6 h prior to each new record, positive and negative deviations from the median of each snapshot measurement of each vital, the most recent rate of change of each vital, the average and standard deviation of the most recent three measurements of each vital and the average of all historical values of FiO2 categories. In general, we find that the four most influential predictors are: snapshot FiO2 oxygen therapy categories (low, medium or high FiO2), “Not alert” conscious level, the average of all sequential values of ordered FiO2 categories and the frequency of measurements in the previous 6 h (Table 2). Among the five snapshot physiological measurements, above-median respiratory rate, below-median systolic blood pressure, below-median oxygen saturation, below-median temperature and above-median heart rate are the most influential predictors of a SAE in 24 h. Among their sequential values, rolling averages of oxygen saturation and respiratory rate were ranked the most important while for the most recent rate of change, heart rate, systolic blood pressure and respiratory rate were the only influential features. Of the total importance of predictors to SAEs in 24 h, snapshot measurements account for 82% and trajectory information accounts for 18%, of which the frequency of measurements in the previous 6 h alone accounts for 4%. More details are available in Supplementary Table 4.

Table 2.

Ranking (from highest to lowest) of important features selected into the final DyniEWS model. Relative importance of each feature computed as percentages of the largest effect based on standardised features.

| Rank (from highest to lowest) | Most important features |
|---|---|
| 1 | FiO2 categories (Reservoir mask (100%), Simple mask (73.5%), Nasal cannula (41.5%)) |
| 2 | Level of consciousness (Not alert (31.1%)) |
| 3 | Average of all historical values of the FiO2 categories (16.2%) |
| 4 | Frequency of measurements in the previous 6 h (13.5%) |

Relative importance of 5 physiological measurements (%)

| Rank (from highest to lowest) | Snapshot records | Sequential records |
|---|---|---|
| 1 | Respiratory_rate_plus(8.9) | rollfio2_mean(10.1) |
| 2 | BP_Systolic_minus(7.7) | rollfio2_sd(6.3) |
| 3 | SATS_minus(7.0) | rollSATS_mean(4.1) |
| 4 | Temperature_minus(5.2) | rollAVPU_2_sd(3.4) |
| 5 | Heart_rate_plus(4.7) | rollRespiratory_rate_mean(2.9) |
| 6 | Respiratory_rate_minus(1) | rollTemperature_mean(2.3) |
| 7 | BP_Systolic_plus(0.5) | diffHeart_rate(1.1) |
| 8 | Temperature_plus(0.4) | diffBP_Systolic(0.7) |
| 9 | Heart_rate_minus(0) | rollAVPU_2_mean(0.7) |
| 10 | SATS_plus(0) | diffRespiratory_rate(0.4) |
| 11 | | rollHeart_rate_mean(0.1) |

roll: rolling summaries of the most recent three values.

diff: the most recent rate of change.

mean: average.

sd: standard deviation.

plus: positive deviation from the median.

minus: negative deviation from the median.
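The scaling behind the percentages in Table 2 can be sketched as below, assuming that "effect" means the absolute size of a coefficient fitted on standardised features. The function name and the coefficients are hypothetical and serve only to show the calculation.

```python
def relative_importance(coefficients):
    """Express each absolute coefficient as a percentage of the largest one."""
    largest = max(abs(b) for b in coefficients.values())
    return {name: round(100 * abs(b) / largest, 1) for name, b in coefficients.items()}


# Hypothetical standardised coefficients; not values from the fitted DyniEWS model.
example = {"fio2_reservoir_mask": 2.0, "not_alert": -0.5, "heart_rate_minus": 0.0}
importance = relative_importance(example)
```

With this scaling the strongest predictor always scores 100%, and a predictor that LASSO has shrunk to zero scores 0%, as seen for Heart_rate_minus and SATS_plus in Table 2.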

Comparing DyniEWS to other models

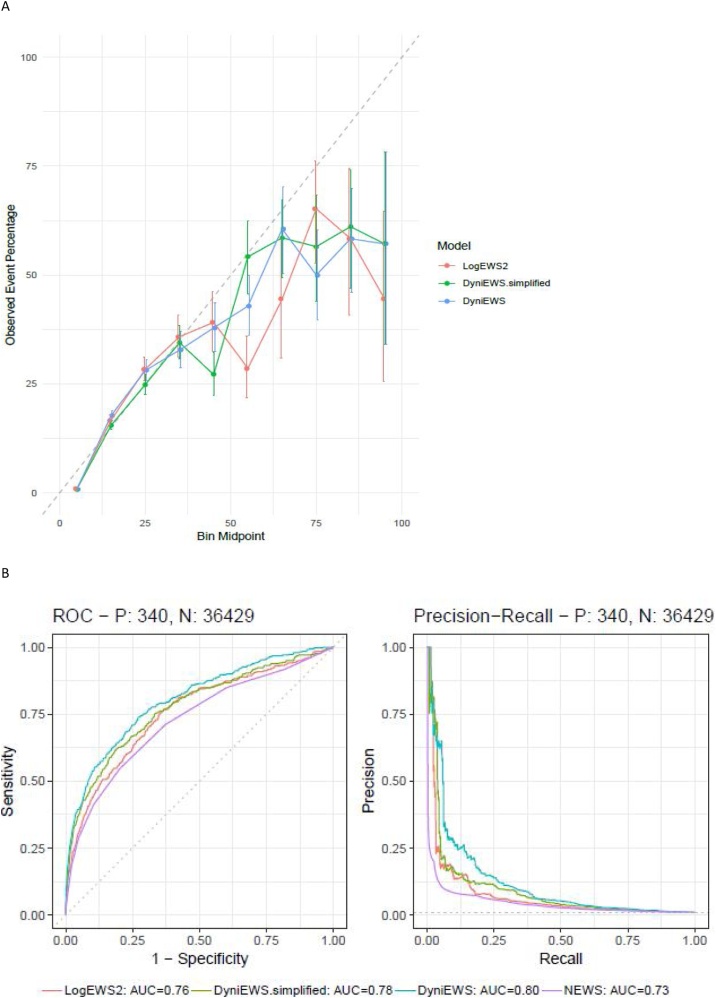

Internal temporal validation shows that all candidate models are well-calibrated overall (calibration slope at 1 and calibration-in-the-large at 0; more details in Supplementary Table 2). Detailed inspection via a calibration plot of 10 equal-sized probability groups (Fig. 2A) finds that probabilities below 40% are well-calibrated. Similar to our previous findings,10 higher probabilities (rare events, roughly 1 in 5000 incidence) are not well-calibrated and need to be interpreted with caution. Among the candidate models, DyniEWS has the highest discriminability (median AUC = 0.79 [Q1–Q3: 0.78–0.80] vs LogEWS2 = 0.76 [0.75−0.77]). Youden’s best threshold for an alarm was found to be 0.97% [0.92 %–1.23 %] from this internal validation (Supplementary Table 5).
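Youden's threshold is the cut-off that maximises sensitivity + specificity − 1. A minimal sketch of that search over candidate thresholds is given below; the function name and toy data are hypothetical, and the authors' implementation (for example, how ties are handled) may differ.

```python
def youden_threshold(scores, labels):
    """Return (threshold, J) maximising J = sensitivity + specificity - 1
    for the alarm rule `score >= threshold`."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best_threshold, best_j = None, -1.0
    for threshold in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
        tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:  # strict comparison keeps the lowest threshold on ties
            best_threshold, best_j = threshold, j
    return best_threshold, best_j


# Toy predicted risks and outcomes (1 = adverse event within 24 h); purely illustrative.
demo_scores = [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.10]
demo_labels = [0, 0, 0, 1, 0, 0, 1, 0, 1, 1]
cutoff, j_stat = youden_threshold(demo_scores, demo_labels)
```

Because Youden's index weights sensitivity and specificity equally, the resulting cut-off can sit close to the event prevalence when events are rare, as with the 0.97% threshold reported here.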

Fig. 2.

Assessments of model performance on training and test data. (A) Calibration plot for internal temporal validation using training data (the number of observations = 405,692, the number of patients = 12,307). (B) (Left): Receiver-operating characteristic curves for fitting each method to the test data (the number of observations = 36,769, the number of patients = 1,150, the reference random-classification gives a 45-degree straight line with area under the curve at 50%). (B) (Right): Precision-recall curves for fitting each method to the test data (the reference is the horizontal line with precision equal to the prevalence of adverse events, 0.93%). P denotes the number of adverse events and N denotes the number of non-events.

Focusing on the performance of each model on the test set (Fig. 2B, Supplementary Fig. 3 and Table 6), we find that DyniEWS has a clear advantage (AUC = 0.80 [95% CI 0.78, 0.83], AUPRC = 0.12 [0.10, 0.14]) over NEWS (AUC = 0.73 [0.70, 0.76], AUPRC = 0.05 [0.02, 0.08]) and snapshot LogEWS2 (AUC = 0.76 [0.73, 0.79], AUPRC = 0.08 [0.06, 0.10]). Note that the revised AUC value for NEWS is lower than previously reported10 due to further data curation and the introduction of a new temporal validation scheme that further corrects for the optimism in model performance. Fixing false alarm (false positive) rates at current levels using NEWS cut-offs of 5 and 7, a clear improvement (7%) of DyniEWS in balanced accuracy was also observed and sensitivity increased over that of NEWS by 13 percentage points at cut-off 5 and 12 percentage points at cut-off 7 (Supplementary Table 4). Given the poor performance of NEWS, the relative improvement is sizable (i.e. 32% (13%/41%) and 67% (12%/18%), respectively). In terms of actual patient outcomes, using test data, Table 3 shows that NEWS (cut-off values of 5 and 7) is better at identifying non-events (90% or more of non-events were correctly not flagged) at the cost of failing to pick up true events (at most 41% of events were flagged). DyniEWS performs better than NEWS and LogEWS2 by correctly flagging 69% of events.

Table 3.

A comparison of actual patient outcomes using four scoring systems. Numbers of cases for “events + alarms from the system”, “non-events + no alarm”, “events + no alarm” and “non-events + alarm” are reported for the unseen test data (total number of observations = 36,769 across 4 months in 4 centres), of which 340 were adverse events and 36,429 were non-events. Percentages in the “Event & no-alarm” and “Non-event & alarm” columns were computed as the % of total no-alarms followed by SAEs and the % of total alarms not followed by SAEs, respectively. Youden’s thresholds (cut-off values) for LogEWS2, DyniEWS.simplified and DyniEWS that maximised the sum of sensitivity and specificity were derived from internal validation.

| Methods | Event & alarm, N (% of 340 expected cases) | Non-event & no-alarm, N (% of 36,429 expected cases) | Event & no-alarm, N (% of total no-alarms) | Non-event & alarm, N (% of total alarms) | Total alarm, N | Total no-alarm, N |
|---|---|---|---|---|---|---|
| NEWS (cut-off = 3) | 242(71) | 22,827(63) | 98(0.4) | 13,602(98) | 13,844 | 22,925 |
| NEWS (cut-off = 5) | 140(41) | 32,644(90) | 200(0.6) | 3785(96) | 3925 | 32,844 |
| NEWS (cut-off = 7) | 63(19) | 35,624(98) | 277(0.8) | 805(93) | 868 | 35,901 |
| LogEWS2 (cut-off = 1.01%) | 207(61) | 27,648(76) | 133(0.5) | 8781(98) | 8988 | 27,781 |
| DyniEWS.simplified (cut-off = 1.14%) | 211(62) | 29,422(81) | 129(0.4) | 7007(97) | 7218 | 29,551 |
| DyniEWS (cut-off = 0.97%) | 233(69) | 28,127(77) | 107(0.4) | 8302(97) | 8535 | 28,234 |
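The headline rates in Table 3 can be recomputed from its four cell counts. The sketch below does this for NEWS at cut-off 5 and for DyniEWS at its Youden threshold, using the counts exactly as printed in the table; the function name is hypothetical.

```python
def confusion_metrics(tp, tn, fn, fp):
    """Summary rates from the four cells of a 2x2 alarm-by-event table."""
    sensitivity = tp / (tp + fn)   # events that triggered an alarm
    specificity = tn / (tn + fp)   # non-events that triggered no alarm
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "precision": tp / (tp + fp),  # alarms that were followed by an event
    }


# Counts taken from Table 3 (test data: 340 events, 36,429 non-events).
news5 = confusion_metrics(tp=140, tn=32644, fn=200, fp=3785)
dyniews = confusion_metrics(tp=233, tn=28127, fn=107, fp=8302)
```

Note that these two rows use different operating points (a fixed NEWS cut-off versus a Youden threshold), so they illustrate the trade-off between sensitivity and specificity rather than the matched-specificity comparison reported in the text.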

Practical clinical utility

Two examples of hypothetical, but relatively common hypotensive and respiratory failure clinical scenarios where stable NEWS may offer false reassurance highlight practical clinical utility (Table 4). Observation set H represents an initially stable ward-based hypotensive patient (H1), suffering a significant deterioration in 1 h (H2) and is resuscitated with fluids (H3). As opposed to a stable NEWS of 7, DyniEWS takes into account the patient’s recent trajectory and dynamically updates the probability of a SAE in 24 h from 2% in H1 to 7% and 4% in the following hours. Observation set R represents a patient with progressively worsening hypoxia from R1 to R3. DyniEWS scores 2%, 5% and 16% in 4 h, correctly indicating a worsening trend that NEWS fails to recognise.

Table 4.

Comparison of NEWS and DyniEWS scores for two hypothetical dynamic clinical scenarios. H denotes a set of three consecutive records for a hypotensive patient over 2 h. R denotes a set of three consecutive records over 4 h for a worsening patient with respiratory failure. Decision threshold for each model are indicated in brackets. Physiological parameter and oxygen therapy categories are shown in colour to demonstrate how total additive NEWS score is calculated in each set of observations (score 0 = black, score 1 = green, score 2 = blue and score 3 = red).


Discussion

Added knowledge

Our newly derived dynamic score offers substantially improved discriminatory performance (AUC = 0.80 [95% CI 0.78−0.83], AUPRC = 0.12 [0.10−0.14]) versus the current gold standard additive NEWS (AUC = 0.73 [0.70−0.76], AUPRC = 0.05 [0.02−0.08]). The improvements in sensitivity from 41% to 54% at the lower NEWS threshold of 5 and from 18% to 30% at the higher NEWS threshold of 7 are of potential clinical importance.

Strengths

The consensus based NEWS is a simple additive score whereas DyniEWS is an evidence based, completely data-driven dynamic score. Recognition of the asymmetric contribution of above- and below-median values of 5 physiological observations and using patient trajectory are key advantages of DyniEWS. Unlike data-hungry deep-learning or machine-learning algorithms, DyniEWS is not a black-box approach and is able to produce more robust and clinically interpretable results.

Our study reinforces the case for revising the relative weightings of vital signs and subdividing oxygen therapy based on FiO2 whilst illustrating the benefits of including patient trajectory in future EWS models.10 Traditional EWS models give equal weighting to positive and negative divergences from median values.20 Although hypertension, bradypnoea, bradycardia and hyperthermia are undesirable, our study highlights the greater comparative risks of hypotension, tachypnoea, tachycardia, hypoxia and hypothermia.10, 21 Another recent study has similarly reported that subdivision of FiO2 into three subcategories improves NEWS discriminatory performance.22 Our findings suggest that the greatest gains of including patient trajectory are achieved by the most recent three records of each patient. With the exception of historical FiO2 categories, the contribution of other vital sign trends to the incidence over a longer trajectory is small. The clinical scenario R1–R3 (Table 4) emphasises the important relative contribution of oxygen therapy–particularly with higher FiO2 categories.

Our model introduces ‘frequency of observations in previous 6 h’ as a new independent risk factor. The magnitude of this variable’s effect was one of the top five most influential predictors. Frequency of ward observations in a postoperative cardiac surgical ward is dependent on three main factors: time out from intensive care, new patient symptoms and ‘nursing concern’. A very weak correlation between frequency of ‘records in the previous 6 h’ and ‘time out of ICU’ (0.092) provides circumstantial evidence for the inclusion of nursing concern in future EWS models.23, 24

Dynamic individual patient trajectory prediction is an advanced, highly interpretable and computationally efficient statistical method. Instead of leaving one centre out for external validation, we believe that it is essential to develop the model using data from all available centres to better capture patient heterogeneity and case-mix, maximise the use of data and reduce optimism in the final model’s predictive performance. The temporal order of vital signs allows for an external validation procedure using held-out test data that were collected after all data had been used for model development.

The potential added value of DyniEWS is best illustrated by two relatively common clinical scenarios (Table 4) that demonstrate the potential clinical utility of a dynamic model in situations where a stable NEWS could provide false reassurance. Scenarios H1 and R1 are both common and frequently initially deemed low-risk after escalation and clinical review. Further deterioration may not be appropriately escalated in both scenarios due to stable NEWS being mistaken for clinical stability.25

Weaknesses

The absence of an ‘event’ does not mean the absence of any ‘therapeutic intervention’. Many NEWS 5 and 7 alarms result in ward-based therapeutic interventions that do not require ICU readmission. Accurate measurement of the proportion of alarms leading to ward-level interventions is notoriously challenging for multiple reasons, including major inter-individual differences in definition and documentation of ward interventions. For this reason, EWS validation studies invariably focus on events which can be objectively measured.5, 20, 21, 26, 27, 28

As with all EWS, the high apparent non-event rate after an alarm (Table 3) is a cause for concern. The constant burden of alarms leading to ‘alarm fatigue’ may lead to scores being ignored and loss of confidence and possible abandonment of physiological hospital-wide surveillance.13, 29 In the test data, our total incidence of 8302 so called ‘non-events and alarms’ with DyniEWS translates into ∼519 alarms per week over four hospitals or 19 alarms per cardiac surgical unit per day (Table 3). Clinical experience suggests that a small number of sick patients will account for the majority of these ‘non-events and alarms’ and many of these ‘alarms’ will subsequently result in (often undocumented) ward-level therapeutic interventions. Although we believe this frequency of alarms will be acceptable, prospective studies are required to optimize alarm thresholds and confirm clinical utility.
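The alarm burden quoted above follows from simple arithmetic on the Table 3 count. The sketch below assumes the 4-month test period is treated as 16 weeks, which reproduces the quoted figures; the variable names are hypothetical.

```python
non_event_alarms = 8302      # DyniEWS "non-event & alarm" count in the test data (Table 3)
weeks_in_test_period = 16    # assumption: the 4-month test fold is counted as 16 weeks
hospitals = 4

alarms_per_week = non_event_alarms / weeks_in_test_period
alarms_per_unit_per_day = alarms_per_week / hospitals / 7
```

Rounded to whole numbers these give about 519 alarms per week across the four hospitals and about 19 per unit per day.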

Our model is a probabilistic model primarily predicting risk of ICU admission. The choice of threshold warrants careful consideration of clinical utility, and the decision-making process needs to take into account the relative weightings of false positives and negatives to decide on the optimal trade-off.30, 31

Future studies and practice changes

Currently, DyniEWS has been derived, validated and calibrated in postoperative cardiac surgical patients. Recalibration in other surgical, medical and paediatric specialties is entirely possible. Despite DyniEWS being focussed entirely on NEWS parameters, consideration could be given to including other strong predictors such as renal function.

NEWS3 is scheduled to be launched in 2022. By this time the majority of hospitals in the developed world will be using electronic observation charts. We believe this provides the opportunity for radical revisions to NEWS2 and a shift away from outdated ‘consensus-based’ scores towards an ‘evidence-based’ model specifically calibrated for the patient group it aims to protect. NEWS3 should recognise the additional safety benefits of a dynamic score including patient trajectory and observation frequency. After 2022, for the minority of hospitals still using ‘pen & paper’ charts, consideration should be given to subdivision of supplementary oxygen in an updated additive NEWS. Changes to the efferent response to DyniEWS with tiered thresholds for escalation would also need to be developed, agreed and implemented. Methodologically, more black-box-type machine learning methods may also be considered but the trade-off between clinical interpretability, data representativeness and computational efficiency should be carefully evaluated.

Conclusions

There is a worldwide trend towards investing in electronic observations. A dynamic EWS with specialty specific recalibration offers the potential to substantially reduce missed event rates and improve patient safety. The failure of current snapshot models to distinguish between rapidly deteriorating and improving situations is a major potential weakness. Scoring systems should utilise and process what is important rather than just what is easy.

Authors’ contributions

Study conception design: YZ, YDC, SSV, JHM.

Data acquisition: JC, JWB, MVP, DJM, JHM.

Data analysis and model construction: YZ, YDC, SSV.

Interpreting the results: YZ, YDC, SSV, JHM.

Initial drafting of manuscript: YZ, JHM.

Critical revision of manuscript: YZ, YDC, SSV, JC, JHM.

Conflicts of interest

The authors declare that they have no conflicts of interest.

Funding

Sofia S. Villar thanks the National Institute for Health Research Cambridge Biomedical Research Centre at Cambridge University Hospitals NHS Foundation Trust and the UK Medical Research Council (grant number: MC_UU_00002/15) for their funding.

CRediT authorship contribution statement

Yajing Zhu: Conceptualization, Data curation, Formal analysis, Methodology, Validation, Writing - original draft, Writing - review & editing. Yi-Da Chiu: Conceptualization, Data curation, Methodology, Writing - review & editing. Sofia S. Villar: Conceptualization, Formal analysis, Funding acquisition, Supervision, Writing - review & editing. Jonathan W. Brand: Investigation, Resources, Writing - review & editing. Mathew V. Patteril: Investigation, Resources, Writing - review & editing. David J. Morrice: Investigation, Resources, Writing - review & editing. James Clayton: Data curation, Resources, Writing - review & editing. Jonathan H. Mackay: Conceptualization, Investigation, Project administration, Supervision, Writing - original draft, Writing - review & editing.

Acknowledgements

This study was conducted in partnership with the Papworth Trials Unit Collaboration. Yajing Zhu is currently a salaried employee of Roche Products Ltd, UK. Y.Z. is extremely grateful to Roche for generously granting her visiting researcher time at MRC Biostatistics Unit, Cambridge to enable completion of this project. We are grateful to many people in each study centre for their significant contributions, including those responsible for maintaining accurate surgical, ICU re-admission and cardiac arrest databases. Special thanks also to the analysts in each centre for successfully extracting their VitalPAC data: Coventry, G. Georgiades and S. Kumar; Middlesbrough, M. Ahmed, A. Goodwin, I. Pattinson, T. Smailes and C. Williams; Papworth, J. Bracken, C. Goddard, E. Gorman, V. Hughes, J. Machiwenyika, J. Quigley and M. Sale; Wolverhampton, R. Giri, Y. Li, S. Murphy, S. Rowles and N. Wise.

Footnotes

Supplementary material related to this article can be found, in the online version, at doi:https://doi.org/10.1016/j.resuscitation.2020.10.037.

Contributor Information

Yajing Zhu, Email: yajing.zhu@roche.com.

Yi-Da Chiu, Email: yi-da.chiu@nhs.net.

Sofia S. Villar, Email: sofia.villar@mrc-bsu.cam.ac.uk.

Jonathan W. Brand, Email: Jonathan.brand@nhs.net.

Mathew V. Patteril, Email: Mathew.Patteril@uhcw.nhs.uk.

David J. Morrice, Email: D.Morrice@nhs.net.

James Clayton, Email: james.clayton1@nhs.net.

Jonathan H. Mackay, Email: jonmackay@doctors.org.uk.

