Abstract

Collecting and analyzing massive data generated from smart devices have become increasingly pervasive in crowdsensing, which are the building blocks for data-driven decision-making. However, extensive statistics and analysis of such data will seriously threaten the privacy of participating users. Local differential privacy (LDP) was proposed as an excellent and prevalent privacy model with distributed architecture, which can provide strong privacy guarantees for each user while collecting and analyzing data. LDP ensures that each user’s data is locally perturbed first in the client-side and then sent to the server-side, thereby protecting data from privacy leaks on both the client-side and server-side. This survey presents a comprehensive and systematic overview of LDP with respect to privacy models, research tasks, enabling mechanisms, and various applications. Specifically, we first provide a theoretical summarization of LDP, including the LDP model, the variants of LDP, and the basic framework of LDP algorithms. Then, we investigate and compare the diverse LDP mechanisms for various data statistics and analysis tasks from the perspectives of frequency estimation, mean estimation, and machine learning. Furthermore, we also summarize practical LDP-based application scenarios. Finally, we outline several future research directions under LDP.

Keywords: local differential privacy, data statistics and analysis, enabling mechanisms, applications

1. Introduction

With the rapid development of wireless communication techniques, Internet-connected devices (e.g., smart devices and IoT appliances) are ever-increasing and generate large amounts of data by crowdsensing [1]. Undeniably, these big data have brought our rich knowledge and enormous benefits, which deeply facilitates our daily lives, such as traffic flow control, epidemic prediction, and recommendation systems [2,3,4]. To make better collective decisions and improve service quality, a variety of applications collect users data through crowdsensing to analyze statistical knowledge of the social community [5]. For example, the third-parties learn rating aggregation by gathering preference options [6], present a crowd density map by recording users locations [7], and estimate the power usage distributions from meter readings [8,9]. Almost all data statistics and analysis tasks fundamentally depend on a basic understanding of the distribution of the data.

However, collecting and analyzing data has incurred serious privacy issues since such data contain various sensitive information of users [10,11,12]. Even worse is that, driven by advanced data fusion and analysis techniques, the private data of users are more vulnerable to attack and disclosure in the big data era [13,14,15]. For example, the adversaries can infer the daily habits or behavior profiles of family members (e.g., the time of presence/absence in the home, certain activities such as watching TV, cooking) by analyzing the usage of appliances [16,17,18], and even obtain = identification information, social relationships, and attitudes towards religion [19].

Therefore, it is an urgent priority to put great attention on preventing personal data from being leaked when collecting data from various devices. At present, the European Union (EU) has published the GDPR [20] that regulates the EU laws of data protection for all individual citizens and contains the provisions and requirements pertaining to the processing of personal data. Besides, the NIST of the U.S. is also developing the privacy frameworks [21] currently to better identify, access, manage, and communicate about privacy risks so that individuals can enjoy the benefits of innovative technologies with greater confidence and trust.

From the perspective of privacy-preserving techniques, differential privacy (DP) [22] was proposed for more than ten years and recognized as a convincing framework for privacy protection, which also refers to global DP (or centralized DP). (Without loss of generality, DP appears in the rest of this article refers to global DP (i.e., centralized DP).) With strict mathematical proofs, DP is independent of the background knowledge of adversaries and capable of providing each user with strong privacy guarantees, which was widely adopted and used in many areas [23,24]. However, DP can be only used to the assumption of a trusted server. In many online services or crowdsourcing systems, the servers are untrustworthy and always interested in the statistics of users’ data.

Based on the definition of DP, local differential privacy (LDP) [25] is proposed as a distributed variant of DP, which achieves privacy guarantees for each user locally and is independent of any assumptions on the third-party servers. LDP was imposed as the cutting-edge of research on privacy protection and risen in prominence not only from theoretical interests, but also subsequently from a practical perspective. For example, many companies deployed LDP-based algorithms in real systems, such as Apple iOS [26], Google Chrome [27], Windows system [28].

Due to its powerfulness, LDP was widely adopted to alleviate the privacy concerns of each user while conducting statistical and analytic tasks, such as frequency and mean value estimation [29], heavy hitters discovery [30], k-way marginal release [31], empirical risk minimization (ERM) [32], federated learning [33], and deep learning [34].

Therefore, a comprehensive survey of LDP is very necessary and urgent for future research in Internet of Things. To the best of our knowledge, only a little literature focuses on reviewing LDP and the most existing surveys only pay attention to a certain field. For example, Wang et al. [35] summarized several LDP protocols only for frequency estimation. The tutorials in [36,37,38] reviewed the LDP models and introduced the current research landscapes under LDP, but the detailed descriptions are rather insufficient. Zhao et al. [39] reviewed the existing LDP-based mechanisms only towards the Internet of connected vehicles. The reviews in [40,41] also provided a survey of statistical query and private learning with LDP. However, the detailed technical points and specific data types when using LDP are still insufficiently summarized. Therefore, it is still necessary and urgent to carry out a comprehensive survey on LDP toward data statistics and analysis to help newcomers understand the complex discipline of this hot research area.

In this survey, we conduct an in-depth overview of LDP with respect to its privacy models, the related research tasks for various data, enabling mechanisms, and wide applications. Our main contributions are summarized as follows.

We firstly provide a theoretical summarization of LDP from the perspectives of the LDP models, the general framework of LDP algorithms, and the variants of LDP.

We systematically investigate and summarize the enabling LDP mechanisms for various data statistics and analysis tasks. In particular, the existing state-of-the-art LDP mechanisms are thoroughly concluded from the perspectives of frequency estimation, mean value estimation, and machine learning.

We explore the practical applications with LDP to show how LDP is to be implemented in various applications, including in real systems (e.g., Google Chrome, Apple iOS), edge computing, hypothesis testing, social networks, and recommendation systems.

We further distinguish some promising research directions of LDP, which can provide useful guidance for new researchers.

Figure 1 presents the main research categories of LDP and also shows the main structure of this survey. We first provide a theoretical summarization of LDP, which includes the LDP model, the framework of LDP algorithms, and the variants of LDP. Then, from the perspective research tasks, we summarize the existing LDP-based privacy-preserving mechanisms into three categories: frequency estimation, mean estimation and machine learning. We further subdivide each category into several subtasks based on different data types. In addition, we summarize the applications of LDP in real practice and other fields.

Figure 1.

An overview of the main research categories with LDP.

The rest paper is organized as follows. Section 2 theoretically summarizes the LDP. The diverse LDP mechanisms for frequency estimation, mean estimation and machine learning are introduced thoroughly in Section 3, Section 4 and Section 5, respectively. Section 6 summarizes the wide application scenarios of LDP and Section 7 presents some future research directions. Finally, we conclude the paper in Section 8.

2. Theoretical Summarization of LDP

Formally, let N be the number of users, and denote the i-th user. Let denote the data record of , which is sampled from the attribute domain that consists of d attributes . For categorical attribute, its discrete domain is denoted as , where k is the size of the domain and . Notations commonly used in this paper are listed in Table 1.

Table 1.

The commonly used notations.

| Notation | Explanation |

|---|---|

| Data record of user | |

| Domain of categorical attribute with size | |

| v/ | Input value/Perturbed value |

| B | Vector of the encoded value |

| N | Number of users |

| // | The true/reported/estimated number of value v |

| A | Attribute |

| d | Dimension |

| / | Privacy budget/Probability of failure |

| Perturbation probability | |

| / | The true/estimated frequency of value v |

| /H | Hash function universe/Hash function |

2.1. LDP Model

Local differential privacy is a distributed variant of DP. It allows each user to report her/his value v locally and send the perturbed data to the server aggregator. Therefore, the aggregator will never access to the true data of each user, thus providing a strong protection. Here, user’s value v acts as the input value of a perturbation mechanism and the perturbed data acts as the output value.

2.1.1. Definition

Definition 1

(-Local Differential Privacy ([-LDP) [25,42]). A randomized mechanism satisfies ϵ-LDP if and only if for any pairs of input values v, in the domain of , and for any possible output , it holds

(1) where denotes probability and ϵ is the privacy budget. A smaller ϵ means stronger privacy protection, and vice versa.

Sequential composition is a key theorem of LDP, which plays important roles in some complex LDP algorithms or some complex scenarios.

Theorem 1

(Sequential Composition). Let be an -LDP algorithm on an input value v, and is the sequential composition of . Then satisfies -LDP.

2.1.2. The Principle Method for Achieving LDP

Randomized response (RR) [43] is the classical technique for achieving LDP, which can also be used for achieving global DP [44]. The main idea of RR is to protect user’s private information by answering a plausible response to the sensitive query. That is, one user who possesses a private bit x flips it with probability p to give the true answer and with probability to give other answers.

For example, the data collector wants to count the true proportion f of the smoker among N users. Each user is required to answer the question “Are you a smoker?” with “Yes” or “No”. To protect privacy, each user flips an unfair coin with the probability p being head and the probability being tail. If the coin comes up to the head, the user will respond the true answer. Otherwise, the user will respond the opposite answer. In this way, the probabilities of answering “Yes” and “No” can be calculated as

| (2) |

| (3) |

Then, we estimate the number of “Yes” and “No” that are denoted as and . From Equations (2) and (3), we have and . Therefore, we can compute the estimated proportion of the smoker is

| (4) |

Observe that in the above example the probability of receiving “Yes” varies from p to depending on the true information of users. Similarly, the probability of receiving “No” also varies from p to . Hence, the ratio of probabilities for different answers of one user can be at most . By letting and based on Equation (1), it can be easily verified that the above example satisfies -LDP. To ensure , we should make sure that .

Therefore, RR achieves LDP by providing plausible deniability for the responses of users. In this case, users no longer need to trust a centralized curator since they only report plausible data. Based on RR, there are plenty of other mechanisms for achieving LDP under different research tasks, which will be introduced in the following Sections.

2.1.3. Comparisons with Global Differential Privacy

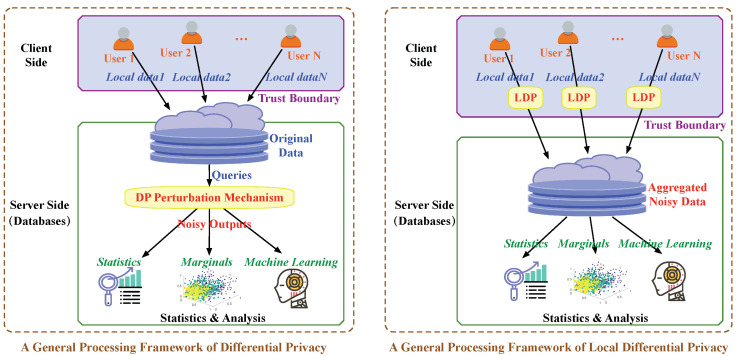

We compare LDP with global DP from different perspectives, as shown in Table 2. At first, the biggest difference between LDP and DP is that LDP is a local privacy model with no assumption on the server while DP is a central privacy model with the assumption of a trusted server. Correspondingly, the general processing frameworks of DP and LDP are different. As shown in the left part of Figure 2, under the DP framework, the data are directly sent to the server and the noises are added to query mechanisms in the server-side. In contrast, under the LDP framework, each user’s data are locally perturbed in the client-side before uploading to the server, as shown in the right part of Figure 2.

Table 2.

Comparisons between LDP and DP.

| Notion | Model | Server | Neighboring Datasets | Basic Mechanism | Property | Applications |

|---|---|---|---|---|---|---|

| DP [22,45] | Central | Trusted | Two datasets | Laplace/Exponential Mechanisms [45,46] |

Sequential Composition, Post-processing |

Data collection, statistics, publishing, analysis |

| LDP [25,42] | Local | No requirement | Two records | Randomized Response [42,43] |

Figure 2.

The general processing frameworks of DP and LDP.

The neighboring datasets in LDP are defined as two different records/values of the input domain. While in DP, the neighboring datasets are defined as two datasets that differ only in one record. For example, given a dataset, we can get its neighboring dataset by deleting/modifying one record. The most two common perturbation mechanisms of achieving DP are Laplace mechanism and Exponential mechanism [45,46] that inject random noises based on privacy budget and sensitivity. In contrast, the randomized response technique [42,43] is most commonly used to achieve LDP. As shown in Table 2, LDP holds the same sequential composition and post-processing properties as DP. Both DP and LDP are widely adopted by many applications, such as data collection, publishing, analysis, and so on.

2.1.4. LDP Model Settings

This section summarizes the model settings of LDP, which holds two paradigms, i.e., interactive setting and non-interactive setting [42,47].

Since LDP no longer assumes a trusted third-party data curator, the interactive and non-interactive privacy model settings of LDP [47] are different from that of DP [23]. Figure 3 shows the interactive and non-interactive settings of LDP.

Figure 3.

LDP model settings.

Let be the input sequences, and be the corresponding output sequences. As shown in left part of Figure 3, in interactive setting, the i-th output depends on the i-th input and the previous outputs , but is independent of the previous inputs . Particularly, the dependence and conditional independence correlations can be formally denoted as for any .

In contrast, as shown in the right part of Figure 3, the non-interactive setting is much simpler than interactive setting. The i-th output only depends on the i-th input . In formal, the dependence and conditional independence correlations can be denoted as .

Therefore, the main difference between interactive and non-interactive settings of LDP is whether to consider the correlations between the output results. The work in [48,49] further investigated the power of interactivity in LDP.

2.2. The Framework of LDP Algorithm

The general privacy-preserving framework with LDP includes three modules: Randomization, Aggregation and Estimation, as shown in Algorithm 1. The randomization is conducted in the client side and both aggregation and estimation happen in the server side.

| Algorithm 1: The General Procedure of LDP-based Privacy-preserving Mechanisms |

|

2.3. The Variants of LDP

Since the introduction of LDP, designing the variant of LDP was an important research direction to improve the utility of LDP and to make LDP more relevant in targeted IoT scenarios. This section summarizes the current research progresses on LDP variant, as shown in Table 3.

Table 3.

Summary of LDP variants (LDP is also listed for reference).

| LDP Variants | Definition | Purpose | Design Idea | Target Data Type | Main Protocol | = LDP? |

|---|---|---|---|---|---|---|

| LDP [35] | - | - | All data type | RR-based method | - | |

|

-LDP [61,62] |

See Formula (5) | A relaxed variant of LDP |

LDP fails with a small probability |

All data type | RR-based method | When |

| BLENDER [52] |

same as -DP | Improve data utility by combine global DP and LDP |

Group user pool | Categorical data | Laplace mechanism | - |

| Local d-privacy [54] |

Enhance data utility for metric spaces |

Metric-based method | Metric data, e.g., location data |

Discrete Laplace Geometric mechanisms |

- | |

| CLDP [56] | Solve the problem of a small number of users |

Metric-based method | Categorical data | Exponential mechanism |

- | |

| PLDP [57] | Achieve granular privacy constraints |

Advanced combination [57] PCE [7] |

Categorical data | RR-based method | When | |

| ULDP [58] | See Definition 6 | Optimize data utility |

Only provide privacy guarantees for sensitive data |

Categorical data | RR-based method | When and |

| ID-LDP [59] | Provide input-discriminative protection for different inputs |

Quantify indistinguishability |

Categorical data | Unary Encoding | When for each value v |

|

| PBP [60] | See Definition 9 | Achieve privacy amplification of LDP |

Keep privacy parameters secret |

Categorical data | RR-based method | - |

2.3.1. -LDP

Similar to the case that -DP [50] is a relaxation of -DP, -LDP (also called approximate LDP) is a relaxation of -LDP (also called pure LDP).

Definition 2

([-Local Differential Privacy ([-LDP) [51]). A randomized mechanism satisfies -LDP if and only if for any pairs of input values v and in the domain of , and for any possible output , it holds

(5) where δ is typically small.

Loosely speaking, -LDP means that a mechanism achieves -LDP with probability at least . By relaxing -LDP, -LDP is more general since the latter in the special case of becomes the former.

2.3.2. BLENDER

BLENDER [52] is a hybrid model by combining global DP and LDP, which improves data utility with desired privacy guarantees. The BLENDER is achieved by separating the user pool into two groups based on their trust in the data aggregator. One is called opt-in group that contains the users who have higher trust in the aggregator. Another is called clients that contains the remaining users. Then, the BLENDER can maximize the data utility by balancing the data obtained from participation of opt-in users with that of other users. The privacy definition of BLENDER is the same as -DP [50].

2.3.3. Local -Privacy

Geo-indistinguishability [53] was initially proposed for location privacy protection under global DP, which is defined based on the geographical distance of data. Geo-indistinguishability was quite successful when the statistics are distance-sensitive. In the local settings, Alvim et al. [54] also pointed out that the metric-based LDP can provide better utility that standard LDP. Therefore, based on -privacy [55], Alvim et al. [54] proposed local -privacy that is as defined as follows.

Definition 3

(Local -Privacy). A randomized mechanism satisfies local -privacy if and only if for any pairs of input values v and in the domain of , and for any possible output , it holds

(6) where is a distance metric.

Local -privacy can relax the privacy constraint by introducing a distance metric when , thus improving data utility. In other words, the relaxation of local -privacy is reflected in that the two data becomes more distinguishable as their distance increases. Therefore, local -privacy is quite appropriate for distance-sensitive data, such as location data, energy consumption in smart meters.

2.3.4. CLDP

LDP played an important role in data statistics and analysis. However, the standard LDP will suffer a poorly data utility when the number of users is small. To address this, Gursoy et al. [56] introduced condensed local differential privacy (CLDP) that is also a metric-based privacy notation. Let be a distance metric. Then, CLDP is defined as follows.

Definition 4

(-CLDP).A randomized mechanism satisfies α-CLDP if and only if for any pairs of input values v and in the domain of , and for any possible output , it holds

(7) where.

By definition, in CLDP, must decrease to compensate as distance increases. Thus, it holds that . In addition, Gursoy et al. [56] also adopted a variant of the Exponential Mechanism (EM) to design several protocols that achieve CLDP with better data utility when there is a small number of users.

2.3.5. PLDP

Instead setting a global privacy constraint for all users, personalized local differential privacy (PLDP) [7,57] is proposed to provide granular privacy constraints for each participating user. That is, under PLDP, each user can select the privacy demand (i.e., ) according to his/her own preference. In formal, PLDP is defined as follows.

Definition 5

( -PLDP). A randomized mechanism satisfies -PLDP if and only if for any pairs of input values v and in the domain of and a user U, and for any possible output , it holds

(8) where is the privacy budget belonging to user U.

To achieve PLDP, Chen et al. [7] proposed personalized count estimation (PCE) protocol and further leveraged a user group clustering algorithm to apply PCE to users with different privacy level. In addition, Nie et al. [57] proposed the advanced combination strategy to compose multilevel privacy demand with an optimal utility.

2.3.6. ULDP

The standard LDP regards all user data equally sensitive, which leads to excessive perturbations. In fact, not all personal data are equally sensitive. For example, answer a questionnaires such as: “Are you a smoker?” Obviously, “Yes” is a sensitive answer, whereas “No” is not sensitive. To improve data utility, Utility-optimized LDP (ULDP) [58] was proposed as a new privacy notation to provide privacy guarantees only for sensitive data. In ULDP, let be the sensitive data set, and be the remaining data set. Let be the protected data set, and be the invertible data set. Then, ULDP is formally defined as follows.

Definition 6

( -ULDP). Given , , a randomized mechanism provides -PLDP if it satisfies the following properties:

(i) For any , there exists an such that

(9) (ii) For any and any ,

(10)

For an intuitive understanding for Definition 6, -ULDP maps sensitive data to only protected data set. Specifically, we can see from Formula (9) that no privacy protects are provided for non-sensitive data since each output in reveals the corresponding input in . Also, we can also find from Formula (10) that -ULDP provides the same privacy protections as -LDP for all sensitive data .

2.3.7. ID-LDP

In ULDP, Murakami et al. [58] considered the sensitivity level of input data by directly separating the input data into sensitive data and non-sensitive data. However, Gu et al. [59] further indicated that different data have distinct sensitivity levels. Thus, they presented the Input-Discriminative LDP (ID-LDP) which is a more fine-grained version of LDP. The notion of ID-LDP is defined as follows.

Definition 7

( -ID-LDP). For a given privacy budget set , a randomized mechanism satisfies -ID-LDP if and only if for any pairs of input values v and , and for any possible output , it holds

(11) where is a function of two privacy budget.

It can be seen from Definition 7 that ID-LDP introduces the function to quantify the indistinguishability between input values v and that have different privacy levels with privacy budget and . The work in [58] mainly considers the minimum function between and and formalizes the MinID-LDP as follows.

Definition 8

(MinID-LDP). A randomized mechanism satisfies -MinID-LDP if and only if it satisfies -ID-LDP with .

That is, MinID-LDP always guarantees the worse-case privacy for the pair. Thus, MinID-LDP ensures better data utility by providing distinct protection for different inputs than standard LDP that provides the worse-case privacy for all data.

2.3.8. PBP

In addition, Takagi et al. [60] pointed that data providers can naturally choose and keep their privacy parameters secret since LDP perturbations occur in device side. Thus, they proposed a new privacy model Parameter Blending Privacy (PBP) as a generalization of standard LDP. PBP can not only keep the privacy parameters secret, but only improves the data utility through privacy amplification.

Let be the domain of the privacy parameter. Given a privacy budget , let be the ratio of the number of times that is chosen to the number of users. Then, PBP is defined as follows.

Definition 9

(r-PBP). A randomized mechanism satisfies r-PBP iff , , it holds

(12) where the privacy function returns a real number that denotes the strength of privacy protection.

Comparisons and discussions.Table 3 briefly summaries the various LDP variants from different perspectives. With various purposes, these variants extend the standard LDP into more generalized or granular versions based on different design ideas. Meanwhile, the main protocols for achieving such LDP variants are also proposed. Nonetheless, there are still some issues in new privacy notions remaining unsolved. For example, PBP only focuses on the privacy parameters that are chosen at the user-level. In other words, the correlations between data and privacy parameters are neglected in PBP. Similarly, ULDP can’t be directly applied to scenarios that sensitive data and non-sensitive are correlated. Besides, MinID-LDP considers the minimum function to decide the privacy budget. There might be other functions that can provide better data utility.

3. Frequency Estimation with LDP

This section summarizes the state-of-the-art LDP algorithms for frequency estimation. Frequency estimation, which is equivalent to histogram estimation, aims at computing the frequency of each given value , where . Besides, we further subdivide the frequency-based task under LDP into several more specific tasks. In what follows, we will introduce each LDP protocol in the view of randomization, aggregation, and estimation, as described in Section 2.2.

Based on the Definition 1 of LDP, a more visual definition of LDP protocol [35] can be given as follows.

Definition 10

( -LDP Protocol). Consider two probabilities . A local protocol given by such that a user reports the true value with p and reports each of other values with q, will satisfy ϵ-LDP if and only if it holds .

Based on the Theorem 2 in [35], the variance for the noisy number of the value v (i.e., ) among N users will be , where is the frequency of the value . Thus, the variance is

| (13) |

It can be seen that the variance of Equation (13) will be dominated by the first term when is small. Hence, the approximation of the variance in Equation (13) can be denoted as

| (14) |

In addition, it holds that Var = Var when .

3.1. General Frequency Estimation on Categorical Data

This section summarizes the general LDP protocols for frequency estimation on categorical data and shows the performance of each protocol. The encoding principle of the existing LDP protocols can be concluded as direct perturbation, unary encoding, hash encoding, transformation, and subset selection.

3.1.1. Direct Perturbation

The most basic building block for achieving LDP is direct perturbation that perturbs data directly by randomization.

Binary Randomized Response (BRR) [43,63] is the basic randomized response technique that focuses on binary values, i.e., the cardinality of value domain is 2. Section 2.1.2 introduced the basic randomized response technique that focuses on binary values. Based on this, BRR is formally defined as follows.

Randomization. Each value v is perturbed by

| (15) |

Aggregation and Estimation. Let be the total number of received value v after aggregation. The estimated frequency of value v can be computed as .

Observe that the probability that varies from to . The ratio of the respective probabilities for different values of v will be at most . Therefore, BRR satisfies -LDP. Based on Equation (14), the variance of BRR is

| (16) |

Generalized Randomized Response (GRR) [64,65] extends the BRR to the case where the cardinality of total values is more than 2, i.e., . GRR is also called Direct Encoding (DE) in [35] or k-RR in [64]. The process of GRR is given as follows.

Randomization. Each value v is perturbed by

| (17) |

Aggregation and Estimation. Let be the total number of received value v after aggregation. The estimated frequency of value v can be computed as

| (18) |

Observe that the probability that varies from to . The ratio of the respective probabilities for different values of v will be at most . Therefore, GRR satisfies -LDP. Based on Equation (14), the variance of GRR is

| (19) |

3.1.2. Unary Encoding

Instead of perturbing the original value, we can perturb each bit of a vector that is generated by encoding the original value v. This method is called Unary Encoding (UE) [35] that is achieved as follows.

Randomization. UE encodes each value into a binary bit vector B with size k, where the v-th bit is 1, i.e., . Each bit of B is perturbed by

| (20) |

where .

Aggregation and Estimation. Assume the number of ones in the v-th bit among all original N vectors and all received N vectors are and , respectively. Based on Equation (20), we have . Thus, the estimated number of value v is . Then, the frequency of the value v is computed as

| (21) |

Based on Equation (20), for any inputs and , and the output , it holds that

| (22) |

where “≤” is achieved since the bit vectors differ only in positions and . There are four cases when choosing values for positions and . That is,

| (23) |

It can be verified that a vector with position being 1 and position being 0 will maximize the ratio (i.e., the case ➂).

Based on Equation (20), UE satisfies -LDP if and only if it follows that

| (24) |

Therefore, letting the equal sign in Equation (24) hold, we can set p as follows:

| (25) |

Applying Equation (25) to Equation (13), the variance of UE is

| (26) |

Symmetric UE (SUE) [35] is the symmetric version of UE when choosing p and q such that . Based on this observation and Equation (25), we can derive and . Then, the frequency can be computed based on Equation (21). The variance of SUE is

| (27) |

Optimized UE (OUE) [35] is to minimize the Equation (26). By making the partial derivative of Equation (26) with respect to q equals to 0, we can get the formula . By solving this, we can obtain

| (28) |

The estimated frequency can be computed by Equation (21). By combining the Equations (26) and (28), the variance of OUE is

| (29) |

3.1.3. Hash Encoding

In the same way of UE, Basic RAPPOR [27] encodes each value into a length-k binary bit vector B and conducts Randomization with the following two steps.

Step 1: Permanent randomized response. Generate with the probability

| (30) |

where r is a user-tunable parameter that controls the level of longitudinal privacy guarantee.

Step 2: Instantaneous randomized response. Perturb with the following probability distribution (i.e., UE)

| (31) |

From the proof in [27], the Permanent randomized response (i.e., Step 1) achieves -LDP for . The communication and computing cost of Basic RAPPOR is for each user, and for the aggregator. However, Basic RAPPOR does not scale to the cardinality k.

RAPPOR [27] adopts Bloom filters [66] to encode each single element based on a set of m hash functions . Each hash function firstly outputs an integer in . Then, each value v is encoded as a k-bit binary vector B by

| (32) |

Next, RAPPOR uses the same processes (i.e., Equations (30) and (31)) as Basic RAPPOR to conduct randomization.

From the proof in [27], RAPPOR achieves -LDP for . Moreover, the communication cost of RAPPOR is for each user. However, the computation cost of the aggregator in RAPPOR is higher than Basic RAPPOR due to the LASSO regression.

O-RAPPOR [64] is proposed to address the problem of holding no prior knowledge about the attribute domain. Kairouz et al. [64] examined discrete distribution estimation when the open alphabets of categorical attributes are not enumerable in advance. They applied hash functions to map the underlying values at first. Then, the hashed values will be involved in a perturbation process, which is independent of the original values. On the basis of RAPPOR, Kairouz et al. adopted the idea of hash cohorts. Each user will be assigned to a cohort that is sampled i.i.d. from a uniform distribution over . Each provides an independent view of the underlying distribution of strings. Based on hash cohorts, O-RAPPOR applies hash functions on a value v in cohort c before using RAPPOR and generates an independent h-bit hash Bloom filter for each cohort c, where the j-th bit of is 1 if for any . Next, the perturbation on follows the same strategy in RAPPOR.

O-RR [64] is proposed to deal with non-binary attributes. It integrates hash cohorts into k-RR to deal with the case where the domain of attribute is unknown. Users in a cohort use their cohort hash function to project the value space into k disjoint subsets, i.e., . Next, the O-RR perturbs the input value v as follows:

| (33) |

Please note that Equation (33) contains a factor of C compared to Equation (17). This is because each value v belongs to one of the cohorts. The error bound of O-RR is the same as k-RR, but incurs more time cost due to hash and cohort operations.

To reduce communication and computation cost, local hashing (LH) [35] is proposed to hash the input value into a domain such that . Denote as the universal hash function family. Each input value is hashed into a value in by hash function . The universal property requires that

| (34) |

Randomization. Given any input value , LH first outputs a value x in by hashing, i.e., . Then, LH perturbs x with the following distribution

| (35) |

After perturbation, each user sends to the aggregator. Based on Equation (35), we can know that LH satisfies -LDP since it always holds that .

Aggregation and Estimation. Assume we aim to estimate the frequency of the value v. The aggregator counts the total number that supports value v, denoted as . That is, for each report , if it holds that , then . Based on Equation (35), it holds that

| (36) |

where is the probability of keeping unchanged of an input value and is the probability of flipping an input value.

Then, while aggregating in the server, we have

| (37) |

Based on Equations (36) and (37), we can get the estimated frequency of the value v, i.e.,

| (38) |

By taking , into Equation (13), the variance of LH is

| (39) |

Local hashing will become Binary Local Hashing (BLH) [35] when . In BLH, each hash function hashes an input from into one bit.

Randomization. Based on Equation (35), the randomization of BLH follows the probability distribution as

| (40) |

Aggregation and Estimation. Based on LH, it holds that and . When the reported supports of value v is , based on Equation (38). the estimated frequency can be computed as

| (41) |

The variance of BLH is

| (42) |

Optimized LH (OLH) [35] aims to choose an optimized g to compromise the information losses between hash step and randomization step. Based on Equation (39), we can minimize the variance of LH by making the partial derivative of Equation (39) with respect to g equals to 0. That is, it is equivalent to solve the following equation . By solving it, the optimal g is , where in practice. When the reported supports of value v is , based on Equation (38), the estimated frequency is . In addition, the variance of OLH is

| (43) |

3.1.4. Transformation

The transformation-based method is usually adopted to reduce the communication cost.

S-Hist [61] is proposed to produce a succinct histogram that contains the most frequent items (i.e., “heavy hitters”). Bassily and Smith [61] have proved that S-Hist achieves asymptotically optimal accuracy for succinct histogram estimation. S-Hist randomly selects only one bit from the encoded vector based on random matrix projection, which reduces the communication cost. The specific process of S-Hist is as follows, which includes an additional initialization step.

Initialization. The aggregator generates a random projection matrix , where each element of is extracted from the set . The magnitude of each column vector in is 1, and the inner product of any two different column vectors is 0. Here b is a constant parameter determined by error bound, where error is defined as the maximum distance between the estimated and true frequencies, i.e., .

Randomization. Assume the input value v is the v-th element of domain . We encode v as , where j is chosen uniformly at random from , and x is the v-th element of the j-th row of , i.e, . Then, we randomize x as follows:

| (44) |

where . After perturbation, each user sends to the aggregator.

Aggregation and Estimation. Upon receiving the report of each user , the estimation for the v-th element of is computed by

| (45) |

Based on Equation (44), it is easy to know that S-Hist satisfies -LDP for every choice of the index j. Furthermore, Bassily and Smith [61] proved that the -error of S-Hist is bounded by with probability as least .

Hadamard Randomized Response (HRR) [26,31,67] is a useful tool to handle sparsity by transforming the information contained in sparse vectors into a different orthonormal basis. HRR adopts Hadamard transformation (HT) to handle the situation where the inputs and marginals of individual users are sparse. HT is also called discrete Fourier transform, which is described by an orthogonal and symmetric matrix with dimension . Each row/column in is denoted as , where denotes the number of 1’s that i and j agree on in their binary representation. When a value is presented as a sparse binary vector , the full Hadamard transformation of the input is the -th column of , i.e., the Hadamard coefficient .

Randomization. User i samples an index and perturbs by using BRR that keeps true value with probability p and flips the value with probability . Then, the user i reports the perturbed coefficient and the index j to the aggregator. As we can see, the communication cost is .

Aggregation and Estimation. Assume the observed sum of all received perturbed coefficient with index j is . Then, the unbiased estimation of the j-th Hadamard coefficient (with the factor scaled) is computed by

| (46) |

In this way, the aggregator can compute the unbiased estimations of all coefficients and apply inverse transformation to produce the final frequency estimation .

Based on the proof in [31,68], the variance of HRR is

| (47) |

By setting to ensure LDP, the variance is . Thus, HRR provides a good compromise between accuracy and communication cost. Besides, the computation overhead in the aggregator is , versus for OLH.

Furthermore, Jayadev et al. [67] designed a general family of LDP schemes. Based on Hadamard matrices, they choose the optimal privatization scheme from the family for high privacy with less communication cost and higher efficiency.

3.1.5. Subset Selection

The main idea of subset selection is randomly select items from the domain .

-Subset Mechanism (-SM) [69,70] is proposed to randomly reports a subset z with size of the original attribute domain , i.e., . Essentially, the output space z is the power set of the data domain . In addition the conditional probabilities of any input , output are as follows:

| (48) |

As we can see, when , the 1-SM is equivalent to generalized randomized response (GRR) mechanism.

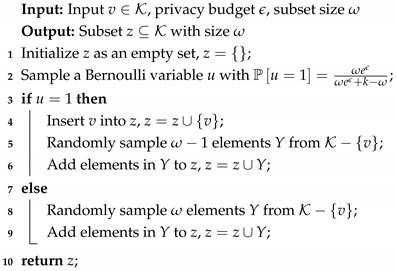

Randomization. Based on Equation (48), the randomization procedure of -SM is shown in Algorithm 2. Observe that the core part of randomization is randomly sampling or elements from without replacement.

| Algorithm 2: The Randomization of -SM |

|

Aggregation and Estimation. Denote and as the real and the received frequency of the i-th value , respectively. Upon receiving a private view , it will increase for each . Based on Algorithm 2, we can know that the true positive rate is , which is the probability of staying unchanged when the input value is . In addition the false positive rate is , which is the probability of showing in the private view when the input value is . Therefore, the expectation of is .

Thus, we can get the estimated frequency of is

| (49) |

Comparisons. Table 4 summarizes the general LDP protocols from the perspective of encoding principles. The error bound is measured by -norm. BRR and GRR are direct perturbation-based methods, which are suitable for low-dimensional data. BRR has a communication cost of and has a smaller error bound than other mechanisms. GRR is the general version of BRR when , of which the communication cost and error bound are both sensitive to domain size k. Both SUE and OUE are unary encoding-based methods. They have the same communication cost and error bound. RAPPOR, O-RAPPOR, O-RR, BLH, and OLH are hash encoding-based methods. RAPPOR, O-RAPPOR, and O-RR have larger error bounds and are relatively harder to implement since they involve Bloom filters and hash cohorts. BLH and OLH have smaller error bounds and are applicable to all privacy regime and any attribute domain. S-Hist and HRR are transformation-based methods, which have a lower communication cost. -SM is a subset selection-based method, which reduces the communication cost.

Table 4.

Comparisons of general LDP protocols for frequency estimation.

| Encoding Principle | LDP Algo. | Comm. Cost | Error Bound | Variance | Know Domain? |

|---|---|---|---|---|---|

| Direct Perturbation | BRR [43,63] | Y | |||

| GRR [64] (or, DE/k-RR) | Y | ||||

| Unary Encoding | SUE [35] | Y | |||

| OUE [35] | Y | ||||

| Hash Encoding | RAPPOR [27] | Y | |||

| O-RAPPOR [64] | N | ||||

| O-RR [64] | N | ||||

| BLH [35] | Y | ||||

| OLH [35] | Y | ||||

| Transformation | S-Hist [61] | Y | |||

| HRR [31] | Y | ||||

| Subset Selection | -SM [69,70] | Y |

Discussions. Section 3.1 discusses the general frequency estimation protocols with LDP. When focusing on multiple attributes (i.e., d-dimensional data), we can directly use the above protocols to estimate the frequency of each attribute. We can also estimate joint frequency distributions of multiple attributes by using the above protocols as long as we regard the Cartesian product of the values of multiple attributes as the total domain. However, the error bound will be d times greater than dealing with a single attribute. Even worse is that the total domain cardinality will increase exponentially with the dimension d, which leads to huge computation overhead and low data utility. Therefore, several studies [31,71,72,73,74,75] have investigated on estimating joint probability distributions of d-dimensional data, which are summarized in Section 3.6.

3.2. Frequency Estimation on Set-Valued Data

This section summarizes the mechanisms for frequency estimation on set-valued data, including items distribution estimation, frequent items mining, and frequent itemsets mining.

Set-valued data denotes a set of items. Let be the domain of items. Set-valued data of user i is denoted as a subset of , i.e., . Different users may have different number of items. Table 5 shows a set-valued dataset of six users with item domain . In what follows, we will introduce each frequency-based task on set-valued data.

Table 5.

Example of set-valued dataset.

3.2.1. Item Distribution Estimation

The basic frequency estimation task on set-valued data is to analyze the distributions over k items. For an item , its frequency is defined as the fraction of times that v occurs. That is, . Let be the number of users whose data include v. Then, we have and .

To tackle set-valued data with LDP, there are two tough challenges [76]. (i) Huge domain: supposing there are total k items and each user has at most l items, then the number of possible combinations of items for each user could reach to . (ii) Heterogeneous size: different users may have different numbers of items, varying from 0 to l. To address heterogeneous size, one of the most general methods is to add the padding items to the below-sized record. Then, the naive method is to treat the set-valued data as categorical data and use LDP algorithms in Section 3.1. However, this method needs to divide the privacy budget into smaller parts, thereby introducing excessive noise and reducing data utility.

Wang et al. [76] proposed PrivSet mechanism which has a linear computational overhead with respect to the item domain size. PrivSet pre-processed the number of items of each user to l, which addresses the issue of heterogeneous size. When the item size is beyond l, it simply truncates or randomly samples l items from the original set. When the item size is under l, it adds padding items to the original set. After pre-processing, each padded set-valued data belongs to , where is the item domain after padding. Then, PrivSet randomized data based on the exponential mechanism. For each padded data , the randomization component of PrivSet selects and outputs an element with probability

| (50) |

where is the probability normalizer and equals to . As analyzed in [76], the randomization of PrivSet reduces the computation cost from to , which is linear to item domain size. PrivSet also holds a lower error bound over other mechanisms.

Moreover, LDPart [77] is proposed to generate sanitized location-record data with LDP, where location-record data are treated as a special case of set-valued data. LDPart uses a partition tree to greatly reduce the domain space and leverages OUE [35] to perturb the input values. However, the utility of LDPart quite relies on two parameters, i.e., the counting threshold and the maximum length of the record. It’s very difficult to calculate the optimal values of these two parameters.

3.2.2. Frequent Items Mining

Frequent items mining (also known as heavy hitters identification, top- hitters mining, or frequent terms discovery) has played important roles in data statistics. Based on the notations in Section 3.2.1, we say an item v is -heavy (-frequent) if its multiplicity is at least , i.e., . The task of frequent items mining is to identify all -heavy hitters from the collected data. For example, as shown in Table 5, the 3-heavy items are A, D, and E.

Bassily and Smith [61] focused on producing a succinct histogram that contains the most frequent items of the data under LDP. They leveraged the random matrix projection to achieve much lower communication cost and error bound than that of earlier methods in [78,79]. The specific process of S-Hist is introduced in Section 3.1. Furthermore, a follow-up work in [80] proposed TreeHist that computes heavy hitters from a large domain. TreeHist transforms the user’s value into a binary string and constructs a binary prefix tree to compute frequent strings, which improves both efficiency and accuracy. Moreover, with strong theoretical analysis, Bun et al. [81] proposed a new heavy hitter mining algorithm that achieves the optimal worst-case error as a function of the domain size, the user number, the privacy budget, and the failure probability.

To address the challenge that the number of items in each user record is different, Qin et al. [30] proposed a Padding-and-Sampling frequency oracle (PSFO) that first pads user’s items into a uniform length l by adding some dummy items and then makes each user sample one item from possessed items with the same sampling rate. They designed LDPMiner based on RAPPOR [27] and S-Hist [61]. LDPMiner adopts a two-phase strategy with privacy budgets and , respectively. In phase 1, LDPMiner identifies the potential candidate set of frequent items (i.e., the top- frequent items) by using a randomized protocol with . The aggregator broadcasts the candidate set to all users. In phase 2, LDPMiner refines the frequent items from the candidates with the remaining privacy budget and outputs the frequencies of the final frequent items. LDPMiner is much wiser on budget allocation than naive method. However, LDPMiner still needs to split the privacy budget into parts at both phases, which limits the data utility.

Wang et al. [75] proposed a prefix extending method (PEM) to discover heavy hitters from an extremely large domains (e.g., ). To address the computing challenge, PEM iteratively identifies the increasingly longer frequent prefixes based on a binary prefix tree. Specifically, PEM first divides users into g equal-size groups, making each group associate with a particular prefix length , such that . Then each user reports the private value using LDP protocol and the server iterates through the groups. Obviously, group size g is a key parameter that influences both the computation complexity and data utility. Therefore, Wang et al. [75] further designed a sensitivity threshold principle that computes a threshold to control the false positives, thus maintaining the effectiveness and accuracy of PEM. Jia et al. [82] have pointed out that prior knowledge can be used to improve the data utility of the LDP algorithms. Thus, Calibrate was designed to incorporate the prior knowledge via statistical inference, which can be appended to the existing LDP algorithms to reduce estimation errors and improve the data utility.

3.2.3. Frequent Itemset Mining

Frequent itemset mining is much similar to frequent items mining, except that the desired results of the former will become a set of itemsets rather than items. Frequent itemsets mining is much more challenging since the domain size of itemsets is exponentially increased.

Following the definition of frequent item mining in Section 3.2.2, the frequency of any itemset is defined as the fraction of times that itemset occurs. That is, . The count of any itemset is defined as the total number of users whose data include as a subset. That is, . An -heavy itemset is such that its multiplicity is at least , i.e., . The task of frequent itemset mining is to identify all -heavy itemsets from the collected data. For example, the 3-heavy itemsets in Table 5 are , , , and .

Sun et al. [83] proposed a personalized frequent itemset mining algorithm that provides different privacy levels for different items under LDP. They leveraged the randomized response technique [43] to distort the original data with personalized privacy parameters and reconstructed itemset supports from the distorted data. This method distorted each item in domain separately, which leads to an error bound of that is super-linear to k. As introduced in Section 3.2.2, LDPMiner mines frequent items over set-valued data by using a PSFO protocol. Inspired by LDPMiner, Wang et al. [84] also padded users items into size l and sampled one item from the possessed items of each user, which could ensure the frequent items can be reported with high probability even though there still exist unsampled items. Specifically, Wang et al. [84] designed a Set-Value Item Mining (SVIM) protocol, and then based on the results from SVIM, they proposed a more efficient Set-Value ItemSet Mining (SVSM) protocol to find frequent itemsets. To further improve the data utility of SVSM, they also investigated the best-performing-based LDP protocol for each usage of PSFO by identifying the privacy amplification property of each LDP protocol.

3.2.4. New Terms Discovery

This section introduces the task of new terms discovery that focuses on the situation where the global knowledge of item domain is unknown. Discovering top- frequent new terms is an important problem for updating a user-friendly mobile operating system by suggesting words based on a dictionary.

Apple iOS [26,85], macOS [86], and Google Chrome [27,87] have integrated with LDP to protect users privacy when collecting and analyzing data. RAPPOR [27] is first used for frequency estimation under LDP, which is introduced in Section 3.1. Afterward, its augmented version A-RAPPOR [87] is proposed and applied in the Google Chrome browser for discovering frequent new terms. A-RAPPOR reduces the huge domain of possible new terms by collecting n-grams instead of full terms. Suppose the character domain is C. Then there will be such n-gram groups. For each group, A-RAPPOR constructs the significant n-grams that will be used to construct a m-partite graph, where . Thus, the frequent new terms can be found efficiently by finding m-cliques in a m-partite graph. However, A-RAPPOR has rather low utility since the n-grams cannot always represent the real terms. The variance of each group is limited as .

To improve the accuracy and reduce the huge computational cost, Wang et al. [88] proposed PrivTrie which leverages an LDP-complaint algorithm to iteratively construct a trie. When constructing a trie, the naive method is to uniformly allocate the privacy budget to each level of the trie. However, this will lead to inaccurate frequency estimations, especially when the height of the trie is large. To address this challenge, PrivTrie only requires to estimate a coarse-grained frequency for each prefix based on an adaptive user grouping strategy, thereby remaining more privacy budget for actual terms. PrivTrie further enforces consistency in the estimated values to refine the noisy estimations. Therefore, PrivTrie achieved much higher accuracy and outperformed the previous mechanisms. Besides, Kim et al. [89] proposed a novel algorithm called CCE (Circular Chain Encoding) to discover new words from keystroke data under LDP. CCE leveraged the chain rule of n-grams and a fingerprint-based filtering process to improve computational efficiency.

Comparisons and discussions. Table 6 shows the comparisons of LDP-based protocols for frequency estimation on set-valued data, including communication cost, key technique, and whether need to know the domain in advance. As for set-valued data, the padding-and-sampling technique is always adopted to solve the problem of the heterogeneous item size of different users, such as in [30,76,84]. The padding size l is a key parameter for both efficiency and accuracy. How to choose an optimal l needs further study. Besides, tree-based method is also widely used in [75,77,80,88] to reconstruct set-valued data. The tree-based method usually requires partition users into different groups or partition privacy budget for each level of the tree, which limits the data utility. In this case, the optimal budget allocation strategy needs to be designed. Meanwhile, the adaptive user grouping technique is also a better way to improve data utility, such as in [88].

Table 6.

Comparisons of frequency estimation mechanisms for set-valued data with LDP.

| Task | LDP Algorithm | Comm. Cost | Key Technique | Know Domain? |

|---|---|---|---|---|

| Item distribution estimation |

PrivSet [76] | Padding-and-sampling; Subset selection | Y | |

| LDPart [77] | Tree-based (partition tree); Users grouping | Y | ||

| Frequent items mining |

TreeHist [80] | Tree-based (binary prefix tree) | Y | |

| LDPMiner [30] | Padding-and-sampling; Wiser budget allocation | Y | ||

| PEM [75] | Tree-based (binary prefix tree); Users grouping | Y | ||

| Calibrate [82] | Consider prior knowledge | Y | ||

| Frequent itemset mining |

Personalized [83] | Personalized privacy regime | Y | |

| SVSM [84] | Padding-and-sampling; Privacy amplification | Y | ||

| New terms discovering |

A-RAPPOR [87] | Select n-grams; Construct partite graph | N | |

| PrivTrie [88] | Tree-based (trie); Adaptive users grouping; Consistency constraints |

N |

1 is the output size of randomization, which is smaller than total domain size k. 2 is the maximum number of nodes among all layers of the tree.

3.3. Frequency Estimation on Key-Value Data

Key-value data [90] is such data that has a key-value pair including a key and a value, which is commonly used in big data analysis. For example, the key-value pairs (KV pair) that denote diseases and their diagnosis values are listed as , , , etc. While collecting and analyzing key-value data, there are four challenges to consider. (i) Key-value data contain two heterogeneous dimensions. The existing studies mostly focus on homogeneous data. (ii) There are inherent correlations between keys and values. The naive method that deals with key-value data by separately estimating the frequency of key and the mean of value under LDP will lead to the poor utility. (iii) One user may possess multiple key-value pairs that need to consume more privacy budget, resulting in larger noise. (iv) The overall correlated perturbation mechanism on key-value data should consume less privacy budget than two independent perturbation mechanisms for key and value respectively. We can improve data utility by computing the actually consumed privacy budget.

Ye et al. [90] proposed that retains the correlations between keys and values while achieving LDP. adopts Harmony [91] to perturb the value v of a KV pair into with privacy budget and converts the pair into canonical form that is perturbed by

| (51) |

Noted that in , the value of a key-value pair is randomly drawn from the domain of when users do not own a key-value pair (i.e., the users have no apriori knowledge about the distribution of true values). Thus, PrivKV suffers from low accuracy and instability. Therefore, Ye et al. [90] built two algorithms and by multiple iterations to address this problem. Intuitively, as the number of iterations increases, the accuracy will be improved since the distribution of the values will be close to the distributions of the true values.

Based on , Sun et al. [92] proposed several mechanisms for key-value data collection based on direct encoding and unary encoding techniques [35]. Sun et al. [92] also introduced conditional analysis for key-value data for the first time. Specifically, they proposed several mechanisms that support L-way conditional frequency and mean estimation while ensuring good accuracy.

However, the studies in [90,92] lack exact considerations on challenges (iii) and (iv) mentioned previously. On the one hand, they simply sample a pair when facing multiple key-value data pairs, which cannot make full use of the whole data pairs and may not work well for a large domain. On the other hand, they neglect the privacy budget composition when considering the inherent correlations of key-value data, thus leading to limited data utility.

Gu et al. [93] proposed the correlated key/value perturbation mechanism which reduces the privacy budget consumption and enhances data utility. They designed a Padding-and-Sampling protocol for key-value data to deal with multiple pairs of each user. Thus, it is no longer necessary to sample a pair from the whole domain (e.g., PrivKVM[90]), but sample from the key-value pairs possessed by users, thus eliminating the affects of large domain size. Then, they proposed two protocols PCKV-UE by adopting unary encoding and PCKV-GRR by adopting the generalized randomized response. Rather than sequential composition, both PCKV-UE and PCKV-GRR involve a near-optimal correlated budget composition strategy, thereby minimizing the combined mean square error.

Comparisons and discussions. Table 7 shows the comparisons of frequency/mean estimations on key-value data with LDP. To solve the challenge of each user has multiple data pairs, simple sampling [90,92] is adopted to sample a pair from the whole domain and padding-and sampling [93] is adopted to sample a pair from the key-value pairs possessed by users. Besides, [90] and PCKV-UE/PCKV-GRR [93] consider the correlations between key and value by iteration and correlated perturbation, respectively. However, CondiFre [92] lacks of the consideration on learning correlations when conducting conditional analysis. Furthermore, both [90] and CondiFre [92] achieve LDP based on two independent perturbations with fixed privacy budget by sequential composition. PCKV-UE/PCKV-GRR [93] holds a tighter privacy budget composition strategy that makes the optimal allocation of privacy budget.

Table 7.

Comparisons of LDP-based protocols for frequency/mean estimation on key-value data.

| LDP Algorithm | Goal | Address Multiple Pairs | Learn Correlations |

Composition | Allocation of |

|---|---|---|---|---|---|

| [90] | Mean value of values; frequency of keys |

Simple sampling | Mechanism iteration |

Sequential | Fixed |

| CondiFre [92] | Mean value of values; frequency of keys; L-way conditional analysis |

Simple sampling | Not consider | Sequential | Fixed |

| PCKV-UE/ PCKV-GRR [93] |

Mean value of values; frequency of keys |

Padding-and-sampling | Correlated perturbation |

Tighter bound | Optimal |

3.4. Frequency Estimation on Ordinal Data

Compared to categorical data, ordinal data has a linear ordering among categories, which is concluded as ordered categorical data, discrete numerical data (e.g., discrete sensor/metering data), and preference ranking data makes the optimal allocation of privacy budget.

When quantifying the indistinguishability of two ordinal data with LDP, the work in [53] measured the distance of two ordinal data by -geo-indistinguishability. That is, a mechanism satisfies -DP if it holds for any possible pairs . The distance of ordinal data and can be measured by Manhattan distance or squared Euclidean distance. Based on -geo-indistinguishability, Wang et al. [70,94] proposed subset exponential mechanism (SEM) that is realized by a tweaked version of exponential mechanism [22]. Besides, they also proposed a circling subset exponential mechanism (CSEM) for ordinal data with uniform topology. For both SEM and CSEM, the authors have provided the theoretical error bounds to show their mechanisms can reduce nearly a fraction of error for frequency estimation.

Preference ranking data is also one of the most common representations of personal data and highly sensitive in some applications, such as preference rankings on political or service quality. Essentially, preference ranking data can be regarded as categorical data that hold an order among different items. Given an item set , a preference ranking of is an ordered list that contains all k items in . Denote a preference ranking as , where means that item ’s rank under is j. The goal of collecting preference rankings is to estimate the distribution of all different rankings from N users. It can easily verify that the domain of all rankings is , which leads to excessive noises and low accuracy when k is large. Yang et al. [95] proposed SAFARI that approximates the overall distributions over a smaller domain that is chosen based on a riffle independent model. SAFARI greatly reduces the noise amount and improves data utility.

Voting data is to some extent a kind of preference ranking. By aggregating the preference rankings based on one of the certain positional voting rules (e.g., Borda, Nauru, Plurality [96,97,98]), we can obtain the collective decision makings. To avoid leaking personal preferences in a voting system, the work in [99] collected and aggregated voting data with LDP while ensuring the usefulness and soundness. Specifically, weighted sampling mechanism and additive mechanism are proposed for LDP-based voting aggregation under general positional voting rules. Compared to the naïve Laplace mechanism, weighted sampling mechanism and additive mechanism can reduce the maximum magnitude risk bound from to and , respectively, where k is the size of vote candidates (i.e., the domain size), N is the number of users.

As one of the fundamental data analysis primitives, range query aims at estimating the fractions or quantiles of the data within a specified interval [100,101], which is also an analysis task on ordinal data. The studies in [68,102] have proposed some approaches to support range queries with LDP while ensuring good accuracy. They designed two methods to describe and analyze the range queries based on hierarchical histograms and the Haar wavelet transform, respectively. Both methods use OLH [35] to achieve LDP with low communication cost and high accuracy. Besides, local d-privacy is a generalized notion of LDP under distance metric, which is adopted to assign different perturbation probabilities for different inputs based on the distance metrics. Gu et al. [103] used local d-privacy to support both range queries and frequency estimation. They proposed an optimization framework by solving the linear equations of perturbation probabilities rather than solving an optimization problem directly, which not only reduces computation cost but also makes the optimization problem always solvable when using d-privacy.

3.5. Frequency Estimation on Numeric Data

Most existing studies compute frequency estimations on categorical data. However, there are many numerical attributes in nature, such as income, age. Computing frequency estimation of numeric data also plays important role in reality.

For numerical distribution estimation with LDP, the naïve method is to discretize the numerical domain and apply the general LDP protocols directly. However, the data utility of the naïve method relies heavily on the granularity of discretization. Even worse is that an optimal discretization strategy depends on privacy parameters and the original distributions of the numeric attributes. Thus, it is a big challenge to find the optimal discretization strategy. Li et al. [104] used the ordered nature of the numerical domain to compromise a better trade-off between privacy and utility. They proposed a novel mechanism based on expectation-maximization and smoothing techniques, which improves the data utility significantly.

3.6. Marginal Release on Multi-Dimensional Data

The marginal table is “the workhorse of data analysis” [31]. When obtaining marginal tables of a set of attributes, we can learn the underlying distributions of multiple attributes, identify the correlated attributes, describe the probabilistic relationships between cause and effects. Thus, k-way marginal release was widely investigated with LDP [31,72,73].

Denote as the d attributes of d-dimensional data. For each attribute , the domain of is denoted as , where is the i-th value of and is the cardinality of . The marginal table is defined as follows.

Definition 11

(Marginal Table [ 31 ]). Given d-dimensional data, marginal operator computes all frequencies of different attribute combinations that are decided by , where denotes the number of s in β, and . The marginal table contains all the returned results of .

Example 1.

When and , it means that we estimate the probability distributions of all combination of the second and third attributes. returns the frequency distributions of all combinations.

The k-way marginal is the probability distributions of any k attributes in d attributes.

Definition 12

(k-way Marginal [31]). The k-way marginal is the probability distributions of k attributes in d attributes, i.e., . For a fixed k, the set of all possible k-way marginals correspond to all distinct ways of picking k attributes from d, which called full k-way marginals.

The k-way marginal release is to estimate k-way marginal probability distribution for any k attributes chosen from A. The k-way marginal distribution of attributes is denoted as . It has

| (52) |

3.6.1. k-Way Marginal Probability Distribution Estimation

Randomized response technique [27] can be naïvely leveraged to achieve LDP when computing k-way marginal probability distributions. However, both the efficiency and accuracy will be seriously affected by the “curse of high-dimensionality”. The total domain cardinality will be , which increases exponentially as k increases.

The EM-based algorithm with LDP [87] is restricted to 2-way marginals. When k is large, it will lead to high time/space overheads. The work in [71] proposed a Lasso-based regression mechanism that can estimate high-dimensional marginals efficiently by extracting key features with high probabilities. Besides, Ren et al. [72] proposed LoPub to find compactly correlated attributes to achieve dimensionality reduction, which further reduces the time overhead and improves data utility.

Nonetheless, the k-way marginals release still suffers from low data utility and high computational overhead when k becomes larger. To solve this, the work in [73] proposed to leverage Copula theory to synthesize multi-dimensional data with respect to marginal distributions and attribute dependence structure. It only needs to estimate one and two-marginal distributions instead of k-way marginals, thus circumventing the exponential growth of domain cardinality and avoiding the curse of dimensionality. Afterward, Wang et al. [105] further leveraged C-vine Copula to take the conditional dependencies among high-dimensional attributes into account, which significantly improves data utility.

Cormode et al. [31] have investigated marginal release under under different kinds of LDP protocols. They further proposed to materialize marginals by a collection of coefficients based on the Hadamard transform (HT) technique. The underlying motivation of using HT is that the computation of k-way marginals require only a few coefficients in the Fourier domain. Thus, this method improves the accuracy and reduces the communication cost. Nonetheless, this method is designed for binary attributes. The non-binary attributes need to be pre-processed to binary types, leading to higher dimensions. To further improve the accuracy, Zhang et al. [74] proposed a consistent adaptive local marginal (CALM) algorithm. CALM is inspired by PriView [106] that builds k-way marginal by taking the form of m marginals each of the size l (i.e., synopsis). Besides, the work in [107,108] focused on answering multi-dimensional analytical queries that are essentially formalized as computing k-way marginals with LDP.

Comparisons and discussions. Table 8 summarized the LDP-based algorithms for k-way marginal release. To improve efficiency, the existing methods try to reduce the large domain space by various techniques, such as HT and dimensionality reduction. As we can see, the variances of the existing methods are relatively large, leading to limited data utility. Although the subset selection is a useful way to reduce the communication cost and variance, it suffers from the sampling error when constructing low-dimensional synopsis. Therefore, designing mechanisms with high data utility and low costs still faces big challenges when d is large.

Table 8.

Comparisons of LDP-based algorithms for k-way marginal release of d-dimensional data.

| LDP Algorithm | Key Technique | Comm. Cost | Variance | Time Complexity | Space Complexity |

|---|---|---|---|---|---|

| RAPPOR [27] | Equal to naïve method when d>2 |

Var | High | High | |

| Fanti et al. [87] | Expectation Maximization (EM) |

Var | |||

| LoPub [72] | Lasso regression; Dimensionality and sparsity reduction |

Var | Medium | High | |

| Cormode et al. [31] | Hadamard Transformation (HT) |

Var | |||

| LoCop [105] | Lasso-based regression; Attribute correlations learning |

Var | Low | High | |

| CALM [74] | Subset selection; Consistency constraints |

Var |

1 Var is the variance of estimating a single cell in the full contingency table; 2 l is the size of m low marginals of dataset.

3.6.2. Conditional Probability Distribution Estimation

The conditional probability is also important for statistics. Sun et al. [92] have investigated on conditional distribution estimation for the keys in key-value data. They formalized k-way conditional frequency estimation and applied the advanced LDP protocols to compute k-way conditional distributions. Besides, Xue et al. [109] proposed to compute the conditional probability based on k-way marginals and further train a Bayes classifier.

3.7. Frequency Estimation on Evolving Data

So far, most academic literature focuses on frequency estimation for one-time computation with LDP. However, privacy leaks will gradually accumulate as time continues to grow under centralized DP [110,111,112], so does LDP [28,113]. Therefore, when applying LDP for dynamic statistics over time, an LDP-compliant method should take time factor into account. Otherwise, the mechanism is actually difficult to achieve the expected privacy protection over long time scales. For example, Tang et al. [86] have pointed out that the privacy parameters provided by Apple’s implementation on MacOS will actually become unreasonably large even in relatively short time periods. Therefore, it requires careful considerations for longitudinal attacks on evolving data.

Erlingsson et al. [27] adopted a heuristic memoization technique to provide longitudinal privacy protection in the case that multiple records are collected from the same user. Their method includes Permanent randomized response and Instantaneous randomized response. These two procedures will be performed in sequence with a memoization step in between. The Permanent randomized response outputs a perturbed answer which is reused as the real answer. The Instantaneous randomized response reports the perturbed answer over time, which prevents possible tracking externalities. In particular, the longitudinal privacy protection in work [27] assumes that the user value does not change over time, such as the continual observation model [114]. Thus, the approach in [27] cannot guarantee strong privacy for the users who have numeric values with frequent changes.

Inspired by [27], Ding et al. [28] used the permanent memoization for continual counter data collection and histogram estimation. They first designed -bit mechanism BitFlip to estimate frequency of counter values in a discrete domain with k buckets. In BitFlip, each user randomly draws bucket numbers without replacement from , denoted as . At each time t, each user randomizes her data and reports a vector , where () is a random 0/1 bit with

| (53) |

Assume the sum of received 1 bit is . Then, the estimated frequency of is

| (54) |

As we can see, BitFlip will be the same as the one in Duchi et al. [115] when . Ding et al. [28] have proved that BitFlip holds an error bound of .

In naïve memoization, each user reports a perturbed value based on the mapping , which leads to privacy leakage. To tackle this, Ding et al. [28] proposed -bit permanent memoization mechanism BitFlipPM based on BitFlip. BitFlipPM reports each response in a mapping , which avoids the privacy leakage since multiple buckets are mapped to the same response.

Moreover, Joseph et al. [113] proposed a novel LDP-compliant mechanism THRESH for collecting up-to-date statistics over time. The key idea of THRESH is to update the global estimation only when it might become sufficiently inaccurate. To identify these update-needed epochs, Joseph et al. designed a voting protocol that requires users to privately report a vote for whether they believe the global estimation needs to be updated. The THRESH mechanism can ensure that the privacy guarantees only degrade with the number of times of the statistics changes, rather than the number of times the computation of the statistics. Therefore, it can achieve strong privacy protection for frequency estimation over time while ensuring good accuracy.

4. Mean Value Estimation with LDP

This Section summarizes the task of mean value estimation for numeric data with LDP, including mean value estimation on numeric data and mean value estimation on evolving data.

In formal, let be the data of all users, where N is the number of users. Each tuple denotes the data of the i-th user, which consists of d numeric attributes . Each denotes the value of the j-th attribute of the i-th user. Without loss of generality, the domain of each numeric attribute is normalized into . The mean estimation is to estimate the mean value of each attribute over N users, i.e., .

4.1. Mean Value Estimation on Numeric Data

Let be the perturbed d-dimensional data of user i. Given a perturbation mechanism , we use to denote the expectation of the output given an input . Therefore, to achieve LDP, a perturbation mechanism should satisfy the following two constraints, that is,

| (55) |

| (56) |

The first constraint (i.e., Equation (55)) shows that the mechanism should be unbiased. The second constraint (i.e., Equation ()) shows that the sum of probabilities of the outputs must be one, where is the output range of .