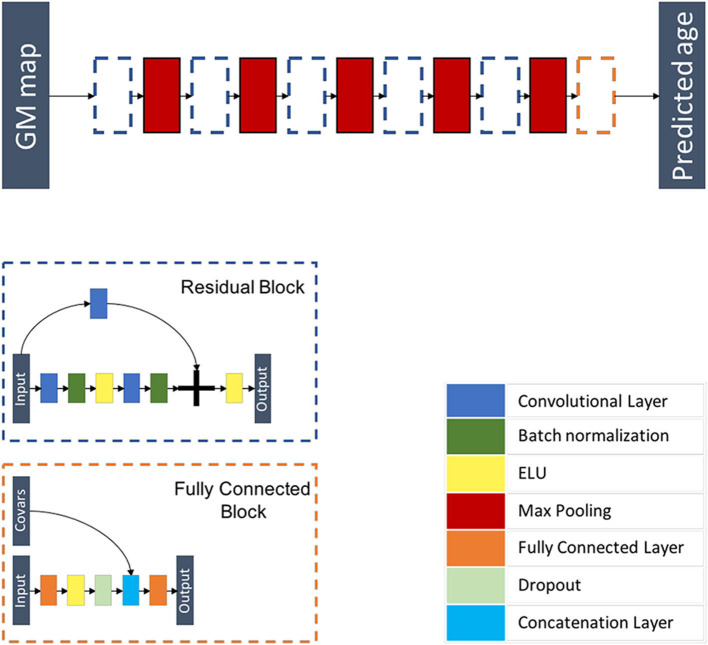

Figure 3.

Flowchart showing the components of the proposed ResNet architecture. The network is composed of five residual blocks, each followed by a max pooling layer, and ends with a fully connected block. Each residual block consists of a sequence of layers that is repeated twice: a 3D convolutional layer, a batch renormalization layer, and an exponential linear unit (ELU) activation function. A skip connection is added before the last activation function of the block. The fully connected block is composed of a fully connected layer, an ELU activation function, a dropout layer, a layer concatenating additional co-variables, and a final fully connected layer.
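The layer ordering described in the caption can be written out as a small, framework-free sketch. This is only an illustration of one reading of the caption, namely that a single fully connected block follows the fifth pooling layer. The function names and layer labels (`residual_block`, `build_plan`, `res1.conv3d_1`, and so on) are hypothetical and not taken from the original work. Kernel sizes, channel counts, pooling sizes, and the dropout rate are not given in the caption and are left out.

```python
def residual_block(index):
    """Ordered layer labels for one residual block, following the caption.

    The conv / batch renormalization / ELU sequence is repeated twice, and
    the skip connection is added before the last activation of the block.
    """
    layers = []
    for repeat in (1, 2):
        layers.append(f"res{index}.conv3d_{repeat}")
        layers.append(f"res{index}.batch_renorm_{repeat}")
        if repeat == 2:
            # Skip connection joins here, just before the final ELU.
            layers.append(f"res{index}.add_skip")
        layers.append(f"res{index}.elu_{repeat}")
    return layers


def fully_connected_block():
    """Ordered layer labels for the fully connected block."""
    return [
        "fc.dense_1",
        "fc.elu",
        "fc.dropout",
        "fc.concat_covariables",  # additional co-variables are joined here
        "fc.dense_out",
    ]


def build_plan(num_blocks=5):
    """Full layer sequence: residual block + max pooling, repeated, then FC block."""
    plan = []
    for i in range(1, num_blocks + 1):
        plan.extend(residual_block(i))
        plan.append(f"pool{i}.max_pool3d")
    # Assumption: a single fully connected block follows the last pooling layer.
    plan.extend(fully_connected_block())
    return plan


if __name__ == "__main__":
    for name in build_plan():
        print(name)
```

Running the script prints the layers in forward order, which can be compared against the flowchart box by box.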