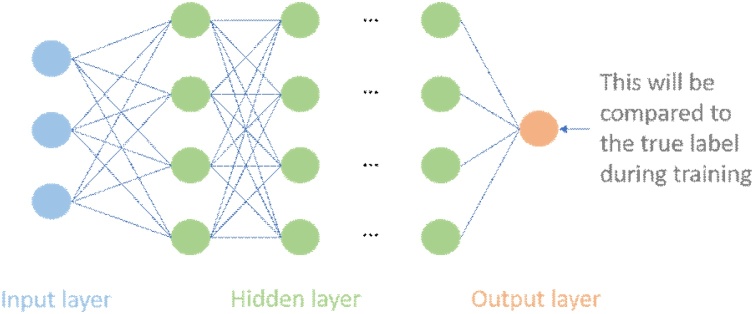

Fig. 2.

We take the most common artificial neural network, the multilayer perceptron (or feedforward neural network), as an example. The output of the $j$-th unit in the $i$-th layer is $z_j^{(i)} = \sum_k w_{jk}^{(i)} a_k^{(i-1)} + b_j^{(i)}$, where $a_k^{(i-1)} = \sigma(z_k^{(i-1)})$ is the output of the $k$-th unit of the previous layer after the nonlinear transformation $\sigma$, called the activation function. In the same way, the output of each layer is passed through the activation function to the next layer, and the calculation is repeated until the network produces its final output. The training data is fed to the input layer and a forward pass is performed, during which the output and derivative of each node are recorded. Finally, the difference between the prediction and the label at the output layer is measured by the loss function. The choice of loss function has a critical impact on the performance of the entire task; common choices include the mean absolute error and the mean squared error. A task-specific loss function can also be designed by hand, which is often the more attractive option. The derivative of the loss function serves as a feedback signal that is propagated backward through the network, and the weights of the network are updated to reduce the error. This technique, called backpropagation [36,37], applies the chain rule to compute the gradient of the objective function with respect to every weight in the network. Gradient descent [38] is then used to update all the weights.
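The forward pass, loss evaluation, backpropagation, and gradient descent update described above can be sketched in a few lines of plain Python. This is a minimal illustration only, not the implementation used in the cited works: the single hidden layer, the tanh activation, the mean squared error loss, the toy data set, the learning rate, and all function and variable names (`forward`, `train_step`, `train`) are assumptions chosen for brevity.

```python
import math
import random


def forward(x, W1, b1, W2, b2):
    """Forward pass: return the hidden activations and the scalar prediction."""
    # Hidden unit j: weighted sum of the inputs plus a bias, then tanh.
    h = [math.tanh(sum(w * xk for w, xk in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    # Linear output unit (no activation), an assumption of this sketch.
    y_hat = sum(w * hj for w, hj in zip(W2, h)) + b2
    return h, y_hat


def train_step(data, W1, b1, W2, b2, lr):
    """One full-batch gradient descent step on the mean squared error.

    Updates W1, b1, W2 in place and returns (loss before the update, new b2).
    """
    n = len(data)
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    loss = 0.0
    for x, y in data:
        h, y_hat = forward(x, W1, b1, W2, b2)
        err = y_hat - y
        loss += err * err / n
        d_out = 2.0 * err / n  # derivative of the loss w.r.t. the prediction
        gb2 += d_out
        for j, hj in enumerate(h):
            gW2[j] += d_out * hj
            # Chain rule through the output weight and the tanh derivative.
            d_z = d_out * W2[j] * (1.0 - hj * hj)
            gb1[j] += d_z
            for k, xk in enumerate(x):
                gW1[j][k] += d_z * xk
    # Gradient descent: move every weight against its gradient.
    for j in range(len(W1)):
        for k in range(len(W1[j])):
            W1[j][k] -= lr * gW1[j][k]
        b1[j] -= lr * gb1[j]
        W2[j] -= lr * gW2[j]
    return loss, b2 - lr * gb2


def train(data, hidden=4, lr=0.1, epochs=200, seed=0):
    """Train the toy network and return the loss recorded at each epoch."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        loss, b2 = train_step(data, W1, b1, W2, b2, lr)
        losses.append(loss)
    return losses, (W1, b1, W2, b2)


# Toy regression data (hypothetical): the target is the sum of the two inputs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 2.0)]
losses, params = train(data)
print(f"loss before training: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

Swapping the squared error for an absolute error, or for a hand-designed loss, only changes the line that computes `d_out`; the backward pass through the layers stays the same.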