Abstract

Objective

Determination of appropriate endoscopy sedation strategy is an important preprocedural consideration. To address manual workflow gaps that lead to sedation-type order errors at our institution, we designed and implemented a clinical decision support system (CDSS) to review orders for patients undergoing outpatient endoscopy.

Materials and Methods

The CDSS was developed and implemented by an expert panel using an agile approach. The CDSS queried patient-specific historical endoscopy records and applied expert consensus-derived logic and natural language processing to identify possible sedation order errors for human review. A retrospective analysis was conducted to evaluate impact, comparing 4-month pre-pilot and 12-month pilot periods.

Results

A total of 22 755 endoscopy cases were included (pre-pilot 6434 cases, pilot 16 321 cases). The CDSS decreased the sedation-type order error rate on the day of endoscopy (pre-pilot 0.39%, pilot 0.037%; odds ratio = 0.094, P < 1e-8). There was no difference in the background prevalence of erroneous orders at scheduling (pre-pilot 0.39%, pilot 0.34%, P = .54).

Discussion

At our institution, the low prevalence and high volume of cases prevented routine manual review to verify sedation order appropriateness. Using a cohort-enrichment strategy, the CDSS reduced the number of chart reviews needed per sedation order error from 296.7 to 3.5, allowing integration into the existing workflow to intercept rare but important ordering errors.

Conclusion

A workflow-integrated CDSS with expert consensus-derived logic rules and natural language processing significantly reduced endoscopy sedation-type order errors on day of endoscopy at our institution.

Keywords: decision support systems, endoscopy, sedation, workflow, agile

INTRODUCTION

Endoscopy is a widely performed diagnostic and therapeutic procedure in gastroenterology. In the US, more than 6.9 million upper, 11.5 million lower, and 228 000 biliary endoscopies are performed annually.1 Successful coordination of endoscopy involves a complex workflow to allocate facilities, resources, and personnel. The choice of procedural sedation has important implications for patient comfort and safety as well as procedural success.2,3 Errors in sedation orders cause significant workflow disruption, potential last-minute cancellation of cases, and frustration for providers, staff, and patients. Although clinical decision support systems (CDSS) have helped reduce medication prescribing errors4–8 and increase medication and radiology appropriateness,6,9–11 use cases such as endoscopy sedation have not received much attention.

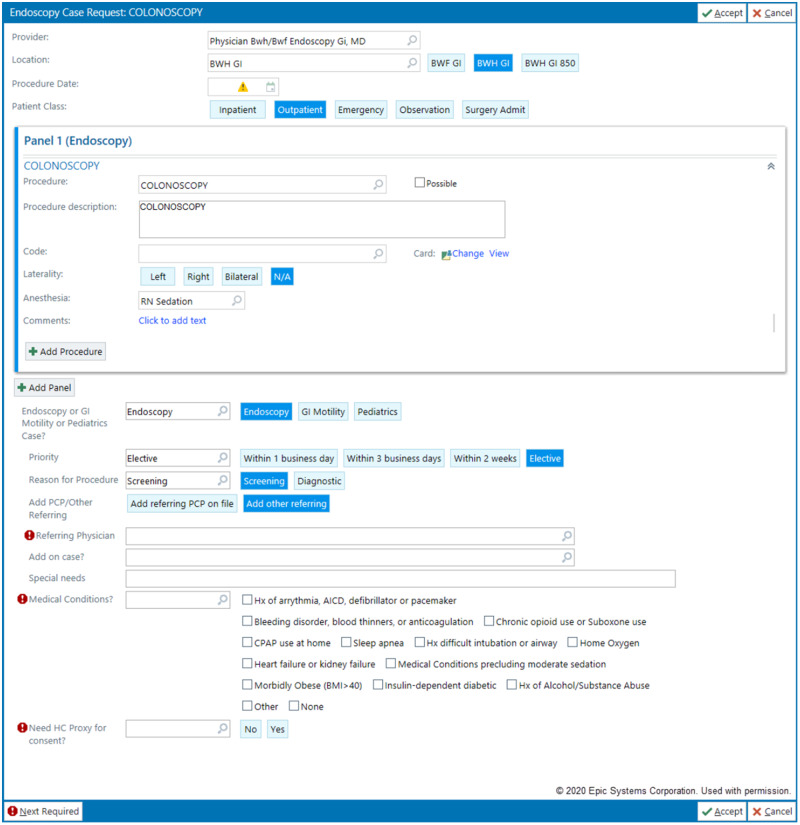

At our institution, which performs over 20 000 endoscopies per year, most patients receive moderate sedation (intravenous opiates and benzodiazepines) by GI providers, while a minority require deep sedation (including general anesthesia) or monitored care by anesthesia providers due to comorbidities or moderate sedation intolerance. Endoscopies can be ordered either by gastroenterologists or by providers in other departments. The order panel for endoscopy includes fields for requested sedation type as well as patient comorbidities that may need anesthesia support. Unfortunately, the endoscopy order panel is complicated, with many necessary fields reflecting a myriad of considerations (Figure 1). This complexity can lead to rare but impactful ordering errors. Given the sheer volume of cases performed, not all endoscopy sedation orders can be reviewed manually before the procedure. In the existing institutional workflow, manual triage is focused on direct-booking cases where a comorbidity is indicated (roughly one-third of procedures). The remaining two-thirds of procedures are presumed “low-risk” for order errors and receive no further triage until the day of procedure. When rare sedation order errors occur (defined as anesthesia needed but not assigned), they are almost always discovered on the day of procedure, resulting in last-minute scrambling or cancellation.

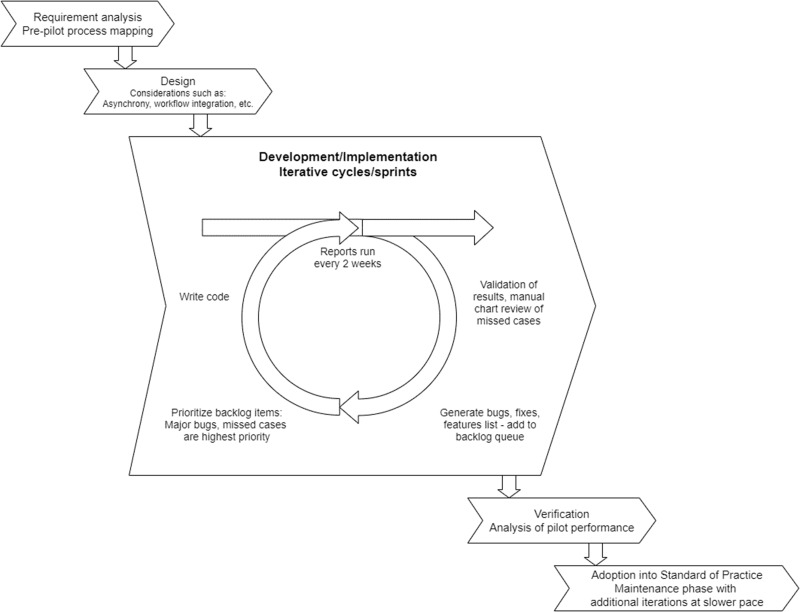

Figure 2.

Agile project management approach. After assessing requirements and designing general strategy, an iterative development and implementation approach was utilized throughout the pilot. This had several major benefits including rapid deliverables to maintain stakeholder buy-in and flexibility in the development process to meet unforeseen requirements/bug fixes.

Figure 1.

Endoscopy case request order panel. In this panel, the ordering provider can indicate the desired anesthesia level for the case. Additionally, comorbid medical conditions which could indicate need for anesthesia can be designated with checkboxes. Case requests with comorbidity checkbox responses are sent for manual triage in both the preexisting as well as pilot workflow. This panel requires completion of multiple input fields, all of which are necessary for endoscopy planning, but the complexity increases the likelihood of inaccurate or incomplete information.

OBJECTIVE

To address a gap in the existing workflow at our institution that allowed endoscopy sedation order errors to go undetected, we designed and implemented a CDSS that adds a software-assisted triage layer for patients undergoing outpatient endoscopy. The goal was to leverage an informatics solution to process a large amount of data, identify possible sedation order errors in a low-prevalence patient population, and incorporate the solution into the standard workflow. This endoscopy support CDSS was developed and implemented in a 12-month pilot with an agile development strategy. This study evaluated the effectiveness of the CDSS in reducing sedation order errors at the time of endoscopy by comparing a pre-pilot period with the 12-month pilot period.

MATERIAL AND METHODS

Setting

The study site was Brigham and Women’s Hospital, a 793-bed academic medical center in Boston, MA. Endoscopies were performed at the main hospital and a community ambulatory endoscopy center, with a combined volume of ∼21 000 procedures per year. Epic (Epic Systems Corporation, Verona, WI) electronic health record (EHR) and Provation MD (Provation Medical, Inc., Minneapolis, MN) endoscopy software package were the health information systems used.

CDSS design

We used an agile (iterative development) approach for this project (Figure 2). Process mapping prior to the pilot determined overall project requirements, and design and development continued in iterative cycles throughout the pilot. We identified both high-level requirements applicable to most CDSSs and problem-specific requirements.

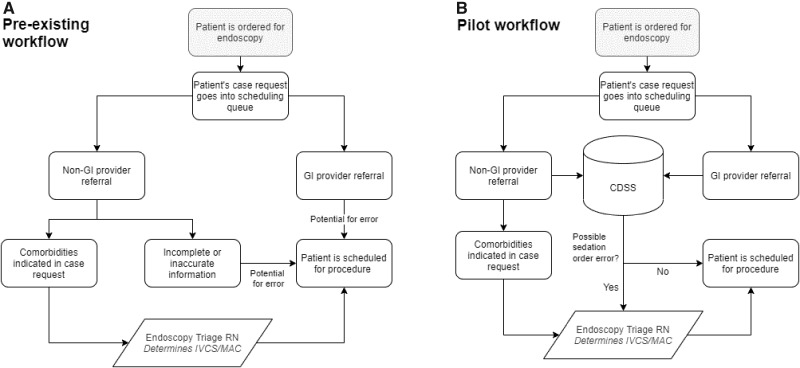

Requirement 1 (specific): CDSS must process all case requests. The main gap in the existing workflow was a workforce/efficiency limitation caused by large case numbers and an overall low prevalence of errors. Any case request, whether from a GI or a non-GI provider, could contain inaccurate or incomplete information, and such errors persisted until the day of endoscopy (Figure 3A).

Figure 3.

Pre-existing workflow vs Pilot workflow. Prior workflow had 2 potential sources of sedation order errors, which received no further screening prior to scheduling. In the pilot, all cases are screened by the clinical decision support system (CDSS) where any potential errors are sent to the endoscopy triage nurse for further review. IVCS = intravenous conscious sedation (moderate sedation), MAC = monitored anesthesia care (anesthesia support).

Requirement 2 (high-level): CDSS must integrate back into the existing workflow with a human check. The reintegration point needed to give ultimate decision-making responsibility to an endoscopy triage nurse, a health professional qualified to make such a medical decision (Figure 3B).

Requirement 3 (high-level): No impact on user experience. The solution could not change the experience for the ordering provider (order panel), as endoscopies could be ordered by any provider in our institution. Prior attempts to change clinician workflow had met resistance, and further changes would have required large-scale education and change management. Additionally, order panel changes required enterprise-wide approval because the EHR instance is used at all hospitals within our multisite health system.

We formed a clinical expert panel with 3 gastroenterology leaders (the Endoscopy Director, Clinical Director, and Quality and Safety Director), a gastroenterologist/clinical informatician, and an endoscopy triage nurse. The panel secured leadership backing and key stakeholder buy-in and contributed clinical and workflow expertise. Two senior clinical informaticians provided technical and strategic guidance and, together with the expert panel, comprised the main working group. A pre-pilot review of sedation order error events revealed that the single best predictor of requiring anesthesia support for endoscopy was a prior history of anesthesia support for endoscopy. A CDSS decision tree was designed around this criterion.

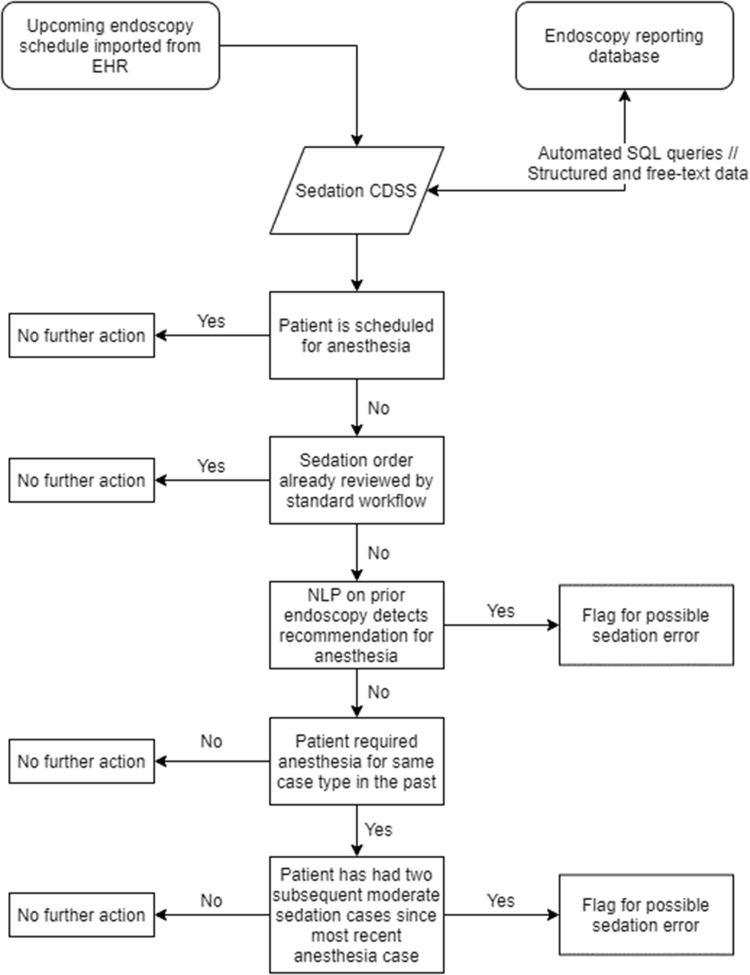

The overall CDSS process consisted of: 1) extraction of case-related details of upcoming endoscopies from the EHR, 2) query of historical endoscopy records, 3) automated review of prior endoscopies with application of expert consensus-derived logic and natural language processing (NLP), 4) delivery of potential errors in sedation type to an endoscopy triage nurse for manual review, and 5) interception of true positive cases. The CDSS ran asynchronously every 2 weeks, screening upcoming outpatient cases within a 4-week window.
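The case-selection step (step 1) can be illustrated with a small sketch. This is a minimal Python illustration, not the authors' R implementation; the `ScheduledCase` record, its field names, and `cases_in_window` are hypothetical. The window runs from the day after each run through 4 weeks ahead, following the final configuration listed in Table 4, so runs every 2 weeks produce windows that overlap by about 2 weeks.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class ScheduledCase:
    # Hypothetical record extracted from the EHR schedule (step 1).
    case_id: str
    patient_id: str
    procedure_date: date
    sedation_ordered: str  # hypothetical labels: "moderate" or "anesthesia"
    is_outpatient: bool


def cases_in_window(cases: List[ScheduledCase], run_date: date,
                    start_offset_days: int = 1,
                    window_days: int = 28) -> List[ScheduledCase]:
    """Select outpatient cases scheduled from run_date + start_offset_days
    through run_date + window_days, inclusive."""
    start = run_date + timedelta(days=start_offset_days)
    end = run_date + timedelta(days=window_days)
    return [c for c in cases
            if c.is_outpatient and start <= c.procedure_date <= end]
```

With a 14-day run interval and a 28-day window, a case added late to the schedule is usually still inside the window at the next run, which is the rationale Table 4 gives for the overlap.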

The CDSS was written in the R programming language. Endoscopy scheduling data were obtained from the EHR and used to automatically generate SQL queries that pulled historical endoscopy data from the endoscopy reporting database over a secure connection. Simple NLP, a heuristic check for specific keywords, was used to parse free-text data; the keyword list was derived by expert consensus. Case-insensitive string matching for keywords, partial word fragments, common abbreviations, and misspellings was used to flag relevant free text. Additional details can be found in the Supplementary Appendix.
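The keyword heuristic can be illustrated with case-insensitive regular expressions. This is a Python sketch, not the authors' R code, and the patterns below are hypothetical examples of each category (word fragment, alternate spelling, misspelling, abbreviation); they are not the expert-derived list in the Supplementary Appendix. Matching sentences are returned so that a report could show the triage nurse the relevant free text.

```python
import re
from typing import List

# Hypothetical keyword patterns, one per category described in the text.
ANESTHESIA_PATTERNS = [
    r"\banesth",      # word fragment: anesthesia, anesthesiology, anesthetist
    r"\banaesth",     # alternate spelling: anaesthesia
    r"\banethes",     # example misspelling: "anethesia"
    r"\bMAC\b",       # abbreviation: monitored anesthesia care (whole word only)
    r"\bpropofol\b",  # example sedation agent keyword
]
KEYWORD_REGEX = re.compile("|".join(ANESTHESIA_PATTERNS), re.IGNORECASE)


def flag_free_text(report_text: str) -> List[str]:
    """Return the sentences of a prior report that contain any keyword."""
    sentences = re.split(r"(?<=[.!?])\s+", report_text.strip())
    return [s for s in sentences if KEYWORD_REGEX.search(s)]
```

For example, a report reading "Colonoscopy completed. Patient poorly tolerated moderate sedation. Recommend MAC for future procedures." yields only the last sentence. The word-boundary anchors keep the abbreviation `MAC` from matching inside words such as "machine", one of the trade-offs a keyword approach must manage.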

Logic rules flagged patients if: 1) the patient was scheduled for moderate sedation but had required anesthesia support for a past endoscopy, or 2) prior procedure reports contained recommendations for future anesthesia support. Negation logic rules were: 1) anesthesia used for certain procedure types was ignored, including endoscopic ultrasound, endoscopic retrograde cholangiopancreatography, and endoscopic submucosal dissection, as these are routinely performed with anesthesia support; and 2) anesthesia required for a given procedure type was ignored if the patient subsequently underwent the same procedure type at least 2 more times with moderate sedation. The overall logic flow is depicted in Figure 4 and the stepwise process is described in the Supplementary Appendix.
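A minimal Python sketch of these rules follows, assuming each prior procedure has already been reduced to a type, a sedation level, a date, and a boolean result from the keyword NLP. The record layout, type codes, and function names are hypothetical. Two further points are assumptions of this sketch rather than statements from the source: both flagging rules apply only when the upcoming case is ordered with moderate sedation (the only error type targeted), and the negation rules apply only to the prior-anesthesia rule, not to free-text recommendations.

```python
from dataclasses import dataclass
from datetime import date
from typing import List

# Negation rule 1: procedure types routinely performed with anesthesia support.
ROUTINE_ANESTHESIA_TYPES = {"EUS", "ERCP", "ESD"}


@dataclass
class PriorProcedure:
    procedure_type: str                  # hypothetical codes, e.g. "EGD", "COLONOSCOPY"
    sedation: str                        # "moderate" or "anesthesia"
    performed_on: date
    recommends_anesthesia: bool = False  # result of keyword NLP on report free text


def later_moderate_count(history: List[PriorProcedure], target: PriorProcedure) -> int:
    """Count later procedures of the same type performed with moderate sedation."""
    return sum(1 for p in history
               if p.procedure_type == target.procedure_type
               and p.performed_on > target.performed_on
               and p.sedation == "moderate")


def flag_reasons(scheduled_sedation: str, history: List[PriorProcedure]) -> List[str]:
    """Return the reasons a case is flagged; an empty list means not flagged."""
    if scheduled_sedation != "moderate":
        return []  # only "anesthesia needed but not assigned" errors are targeted
    reasons = []
    for p in history:
        # Rule 1: prior anesthesia support, unless either negation rule applies.
        if (p.sedation == "anesthesia"
                and p.procedure_type not in ROUTINE_ANESTHESIA_TYPES
                and later_moderate_count(history, p) < 2):
            reasons.append(f"anesthesia for {p.procedure_type} on {p.performed_on}")
        # Rule 2: free-text recommendation for future anesthesia support.
        if p.recommends_anesthesia:
            reasons.append(f"anesthesia recommended in {p.procedure_type} report "
                           f"of {p.performed_on}")
    return reasons
```

Returning a list of reasons rather than a bare boolean mirrors the report design described below, in which each flagged case carries its rationale for the reviewing nurse.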

Figure 4.

Decision flow diagram for endoscopy clinical decision support system (CDSS). The key logic driving the CDSS is to look for either recommendation or requirement of anesthesia during prior endoscopies using NLP or the need for anesthesia in prior cases.

A formatted report of “high-risk patients” was sent on a regular schedule to an endoscopy triage nurse for manual review. This report was external to the EHR and contained case details plus the rationale for flagging (either a prior history of anesthesia, with a case-history list, or an NLP flag, with the relevant free text included). For true positive cases, the patient was contacted and rescheduled to an anesthesia-capable endoscopy slot. The 12-month pilot (12/2018–11/2019) consisted of numerous development iteration cycles coinciding with 2-week reporting periods. Regular review of CDSS performance and error analysis allowed refinement of the logic rules, addition of features, and bug fixes.

Evaluation study

A retrospective analysis was conducted to evaluate the effectiveness of the CDSS on sedation-type order errors at the time of endoscopy. Two time periods were included: 1) a 4-month period prior to the start of the pilot, and 2) a 12-month pilot period. The 4-month pre-pilot period consisted of systematic data collection, process mapping, and CDSS design, and ended with the pilot launch. All ambulatory patients undergoing routine endoscopies at both our main hospital campus and our ambulatory endoscopy center were included. Patients undergoing advanced procedures that required anesthesia support by protocol were excluded, as additional sedation decision-making was unnecessary.

Data collected included patient age, sex, procedure type, sedation type ordered at time of scheduling, sedation type ordered on day of scheduled endoscopy, and CDSS output. The primary outcome was the rate of sedation-type order error on day of endoscopy, defined as anesthesia needed but not assigned. The reported CDSS metrics represented an average of the performance of all CDSS versions/iterations during the 12-month period. Pilot-specific data points included number of cases flagged, confirmed positives after manual review, missed cases, and version changes/features added. Number of charts needed to review was also calculated, defined as the number of cases flagged by the CDSS divided by the number of intercepted errors.

Statistical analysis was performed in the R programming language (v3.5.2, R Foundation) and RStudio (v1.1.463, RStudio, Inc., Boston, MA). Continuous variables were compared using Student’s t-test, and categorical variables were compared using Pearson’s chi-squared test or Fisher’s exact test, as appropriate. A P value threshold of .05 was used for statistical significance. The study was reviewed and approved by the Partners Healthcare Institutional Review Board.
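The primary-outcome comparison can be checked from the counts in Table 2. The sketch below is an independent pure-Python reimplementation of a two-sided Fisher's exact test, not the authors' R code: it sums the hypergeometric probabilities of all 2 × 2 tables with the same margins that are no more likely than the observed table, and reports the sample (cross-product) odds ratio. The function name `fisher_exact_2x2` is hypothetical.

```python
import math


def _log_comb(n: int, k: int) -> float:
    """log C(n, k) via log-gamma, stable for large n."""
    return math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)


def fisher_exact_2x2(a: int, b: int, c: int, d: int):
    """Two-sided Fisher's exact test for the table [[a, b], [c, d]].
    Returns (sample odds ratio, p-value)."""
    row1, col1 = a + b, a + c
    n = a + b + c + d

    def log_pmf(x: int) -> float:
        # Hypergeometric probability that the top-left cell equals x.
        return (_log_comb(col1, x) + _log_comb(n - col1, row1 - x)
                - _log_comb(n, row1))

    lo, hi = max(0, row1 - (n - col1)), min(row1, col1)
    log_obs = log_pmf(a)
    # Small tolerance so tables tied with the observed one are counted.
    p = sum(math.exp(log_pmf(x)) for x in range(lo, hi + 1)
            if log_pmf(x) <= log_obs + 1e-7)
    odds_ratio = (a * d) / (b * c) if b * c else math.inf
    return odds_ratio, min(p, 1.0)


# Rows: pilot, pre-pilot; columns: error on day of endoscopy, no error (Table 2).
odds_ratio, p = fisher_exact_2x2(6, 16321 - 6, 25, 6434 - 25)
print(f"OR = {odds_ratio:.3f}, P < 1e-8: {p < 1e-8}")  # OR = 0.094, P < 1e-8: True
```

R's `fisher.test` reports a conditional maximum likelihood estimate of the odds ratio rather than the sample odds ratio computed here, so the two can differ slightly in later decimal places.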

RESULTS

A total of 22 755 endoscopy cases were included in the study: 6434 cases were performed in the 4-month pre-pilot evaluation period and 16 321 cases in the 12-month pilot period. These cases comprised 7082 procedures in the pre-pilot period and 17 220 procedures during the pilot; procedures outnumber cases because some cases were double procedures (eg, an upper endoscopy followed by colonoscopy). There was a statistically significant difference in case-type distribution between the pre-pilot and pilot periods (P = .001). Mean patient age was similar (P = .88), and both periods had a similar female predominance (pre-pilot 62.0%, pilot 61.8%; P = .79) (Table 1).

Table 1.

Pre-pilot assessment period vs pilot period

| | Pre-pilot (4 months) | Pilot (12 months) | P value |
|---|---|---|---|
| Total cases | 6434 | 16 321 | |
| Procedure types (a), n (% of procedures) | | | P = .001 |
| Upper endoscopy | 1978 (28.0%) | 5077 (29.5%) | |
| Colonoscopy | 4476 (63.2%) | 10 492 (60.9%) | |
| Flexible sigmoidoscopy | 219 (3.1%) | 658 (3.8%) | |
| Others | 409 (5.8%) | 993 (5.8%) | |
| Total procedures (b) | 7082 | 17 220 | |
| Sex, n (%) | | | P = .79 |
| Male | 2444 (38.0%) | 6230 (38.2%) | |
| Female | 3990 (62.0%) | 10 091 (61.8%) | |
| Mean age ± standard deviation (years) | 57.4 ± 14.2 | 57.4 ± 14.5 | P = .88 |

(a) Others included less common procedure types such as ileoscopies and pouchoscopies. There was a significant difference in the distribution of case types during the pre-pilot and pilot periods by Pearson’s chi-squared test.

(b) Some cases were “double procedures” when a patient underwent more than 1 procedure, such as both upper endoscopy and colonoscopy, in a single instance of sedation; thus the total number of individual procedures was greater than the total number of cases.

There were 25 and 55 sedation order errors at the time of scheduling during the pre-pilot and pilot periods, respectively. The prevalence of sedation-type order errors at the time of scheduling was similar (P = .54) in the pre-pilot (0.39%) and pilot (0.34%) periods. There was a significant decrease in the primary outcome, the sedation-type order error rate on the day of endoscopy, between the pre-pilot (0.39%) and pilot (0.037%) periods (odds ratio = 0.094, Fisher’s exact P < 1e-8; Table 2). For the pilot overall, 3.5 patients were manually reviewed for every patient intercepted.

Table 2.

Sedation order error rates

| | Pre-pilot (4 months) | Pilot (12 months) | P value |
|---|---|---|---|
| Total cases | 6434 | 16 321 | |
| Prevalence of sedation order errors at time of scheduling, n (error rate) | 25 (0.39%) | 55 (0.34%) | P = .54 |
| Sedation-type order errors detected prior to procedure, n (% of scheduling errors) | N/A | 49 (89.1%) | |
| Sedation-type order errors on day of endoscopy, n (error rate) | 25 (0.39%) | 6 (0.037%) | P < 1e-8 |

Note: Error rates were calculated as the number of errors divided by the total number of cases for that period. The prevalence of sedation order errors at scheduling was similar before and during the pilot. Before the pilot there was no mechanism to intercept these order errors, whereas during the pilot 89.1% of them were intercepted prior to endoscopy. The rate of sedation order errors at the time of endoscopy decreased significantly in the pilot period.

During the pilot, 172 of the 16 321 total cases (1.1%) were identified as containing potential sedation errors by the CDSS. Forty-nine of these cases were determined to be true positives and intercepted. Six cases of sedation error were not detected during the pilot (false negatives) and were discovered on the day of procedure. The CDSS had precision/positive predictive value = 28.5%, negative predictive value = 99.9%, recall/sensitivity = 89.1%, and specificity = 99.2% (Table 3).

Table 3.

Confusion matrix for clinical decision support system (CDSS) during 12-month pilot

| | Sedation error | No sedation error | Total |
|---|---|---|---|
| CDSS positive | 49 | 123 | 172 |
| CDSS negative | 6 | 16 143 | 16 149 |
| Total | 55 | 16 266 | 16 321 |

Note: Precision/PPV = 28.5%, NPV = 99.9%, Recall/Sensitivity = 89.1%, Specificity = 99.2%.
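The Table 3 metrics, and the charts-needed-to-review figures cited in the Abstract and Discussion, follow directly from the 4 confusion-matrix counts. The short Python sketch below makes the arithmetic explicit; the function and key names are illustrative only.

```python
def screening_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Screening metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    return {
        "precision_ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "recall_sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        # With the CDSS, only flagged cases are reviewed: flagged / intercepted.
        "charts_per_error_with_cdss": (tp + fp) / tp,
        # Without it, every case would need review: all cases / all errors.
        "charts_per_error_without_cdss": total / (tp + fn),
    }


metrics = screening_metrics(tp=49, fp=123, fn=6, tn=16143)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

With the pilot counts this reproduces precision 28.5%, recall 89.1%, specificity 99.2%, and 3.5 versus 296.7 charts reviewed per error; NPV evaluates to about 99.96%.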

DISCUSSION

Sedation-type order error in endoscopy is a rare but important occurrence at our institution. Using an agile development approach, we developed a software-based CDSS with expert consensus-derived logic rules and NLP and implemented it in the endoscopy coordination workflow, where it reduced sedation order errors on the day of endoscopy.

These errors were a significant pain point at our institution. The pre-pilot workflow and the EHR endoscopy case request order panel represented the culmination of years of effort to remedy them. Residual errors persisted despite those efforts, and committing further personnel to manual review had previously been deemed impractical. Our CDSS illustrates how a simple informatics solution, designed with an iterative development strategy in close collaboration with clinical experts, technical experts, and stakeholders, can offer a novel solution to an old problem.

Error analysis

The CDSS had a recall of 89.1% and a precision of 28.5%. For each false negative, the cause was identified and addressed with a subsequent improvement to the algorithm. Of the 6 false negatives, 2 occurred because the relevant case was not listed among upcoming cases due to overly strict EHR query criteria. Two others occurred early in development, when the CDSS could process only structured data, because the anesthesia recommendation appeared in the free text of a historical endoscopy report. One case was missed because it was a last-minute add-on that the asynchronous CDSS did not evaluate in time. The final missed case occurred because the patient’s prior endoscopy was performed at another hospital and the associated report was unavailable.

There were several reasons for low overall precision during the pilot. The CDSS was deliberately biased toward recall over precision because it existed within a larger workflow with a subsequent review layer, much like a screening test followed by a confirmatory test. This bias was especially important given the low background error prevalence (0.4%). The goal of the CDSS was not to be fully autonomous but rather to create an enriched cohort that makes secondary human review practical. False positives fell into 4 main categories: 1) outlier cases, 2) workflow detection gaps, 3) logic rule gaps, and 4) provider differences. Outlier cases included patients who required anesthesia for extenuating circumstances (eg, a hypotensive patient with an acute GI bleed). These patients presumably would not need anesthesia for a standard outpatient procedure once the acute illness had resolved. Because we could not find a reliable way to discern the specific nuances of these cases, we continued to flag them; however, we included admission status in the report output, as these procedures were nearly always performed in an inpatient or emergency setting. Workflow detection gaps occurred early in the pilot, causing multiple false positives for cases that had already been reviewed. We addressed this by 1) importing the decisions of standard manual triage, 2) creating an index of CDSS-reviewed cases, and 3) suppressing duplicate alerts for cases within a 30-day window. Logic rule gaps caused false positives in a subgroup of patients who had required anesthesia for procedures but subsequently did well with moderate sedation; the negation rules described earlier decreased this type of false positive. Lastly, patient and provider preferences played a role in sedation decisions; in instances of uncertainty, the endoscopy triage nurse contacted the endoscopist to clarify. Major error analysis findings and the resulting changes are shown in Table 4.

Table 4.

Error analysis examples for the clinical decision support system (CDSS): rationale for error is listed in the first column with the subsequent change made in the second column

| Rationale | Subsequent change |
|---|---|
| Patient scheduled with colorectal surgeon; CDSS only evaluated cases scheduled with gastroenterologists. | Colorectal surgeons added to scheduled case search criteria. |
| Free-text recommendation for anesthesia support in future procedures found in prior endoscopy report, but no ability to process unstructured data from prior reports. | NLP capability added to detect free-text recommendations made in prior endoscopy reports. |
| Case not detected when case type had a custom name (as entered by ordering physician). | Scheduled case search criteria changed from inclusion-based (list of all procedure types) to exclusion-based (all procedures performed at specific locations, ignoring non-endoscopy cases). |
| Screening window for CDSS set 2–4 weeks into the future, but patient scheduled for procedure within 2 weeks. | Screening window changed to span from +1 day to 4 weeks into the future, creating a 2-week overlap period in which add-on cases have a higher likelihood of being detected. |
| Patient previously screened and flagged did not undergo the procedure and was rescheduled for a later date; flagged again even though the sedation determination had already been made. | Added indexing feature to CDSS to track patients/cases already reviewed and ignore duplicates. Built an auto-expire feature that removes patients from the exclusion list after a set period of 60 days. |
| Patient previously screened by existing manual screen also flagged by algorithm, causing duplication. | Added ability to detect whether a patient had been previously reviewed and to exclude them automatically. |
| Patient needed anesthesia support for inpatient procedure due to acute illness. | Added patient admission status (inpatient vs outpatient) to historical reporting to help identify possible outlier cases. |

Abbreviations: CDSS, clinical decision support system; NLP, natural language processing.
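The duplicate-suppression changes in Table 4 amount to a small index of already-reviewed patients with an expiry period. A minimal Python sketch follows; the class and method names are hypothetical. The expiry is a parameter because the text describes both a 30-day duplicate-suppression window and a 60-day auto-expiry period; the default below follows Table 4. Decisions imported from standard manual triage and reviews prompted by CDSS reports are recorded the same way.

```python
from datetime import date, timedelta
from typing import Dict


class ReviewedIndex:
    """Hypothetical index of patients whose sedation plan was already reviewed,
    whether by standard manual triage or via a CDSS report."""

    def __init__(self, expire_after_days: int = 60):
        self.expire_after = timedelta(days=expire_after_days)
        self._last_review: Dict[str, date] = {}

    def mark_reviewed(self, patient_id: str, review_date: date) -> None:
        self._last_review[patient_id] = review_date

    def should_alert(self, patient_id: str, today: date) -> bool:
        """True if the patient was never reviewed or the prior review expired."""
        last = self._last_review.get(patient_id)
        if last is None:
            return True
        if today - last >= self.expire_after:
            del self._last_review[patient_id]  # auto-expire the stale entry
            return True
        return False


index = ReviewedIndex(expire_after_days=60)
index.mark_reviewed("patient-A", date(2019, 1, 1))  # e.g. imported manual-triage decision
print(index.should_alert("patient-A", date(2019, 2, 1)))  # False: still suppressed
print(index.should_alert("patient-A", date(2019, 3, 2)))  # True: 60 days elapsed
```

Expiring entries rather than suppressing patients permanently lets a patient re-enter screening if circumstances change after the original sedation decision.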

Although we report precision for the entire 12-month pilot, CDSS performance improved with each iterative cycle. During the first 2 months of the pilot, recall was 81.8% (9/11) and precision was 28.1% (9/32); by the final 2 months, recall was 91.7% (11/12) and precision was 52.4% (11/21). These metrics remained insufficient for autonomous operation, but the gains steadily improved the efficiency of secondary human review.

CDSS design considerations

Even in the modern age of computerized physician order entry, order errors remain a persistent challenge. Studies of medication ordering errors have reported prevalences ranging widely, from 1.4% to 77.7%.5,7,8,12,13 To our knowledge, no studies have previously reported the rate of sedation-type order errors related to endoscopy. Although we found a low prevalence (< 0.4%) for this type of error at our institution, the downstream ramifications necessitated improvement. Through process mapping and requirement analysis, we identified the main barriers to catching sedation-type order errors: high endoscopy volume, density of EHR information, and low prevalence. Together, these made secondary manual review of all endoscopic sedation orders impractical and made the problem a good target for an informatics-based solution.

Process mapping was also important for recognizing how to integrate the CDSS into the existing live workflow. First, we determined that all CDSS recommendations must be validated by a qualified human before being acted on. There were 2 main rationales: 1) the decision branch point of sedation modality had significant downstream implications for both logistics and patient safety, and 2) we did not expect CDSS performance to be sufficiently reliable for independent use. The classification task was especially challenging given the low prevalence of sedation error in this population (< 0.4%). Further, because CDSS recommendations were validated by humans, we needed to balance precision and recall so that the volume of flagged cases remained feasible for human review. For the pilot overall, 3.5 patients were manually reviewed for every patient intercepted. Without the CDSS, 296.7 patients would have had to be manually reviewed for every patient intercepted (number of total cases divided by number of sedation-type order errors at time of scheduling). This critical boost in efficiency made human review practical and addressed the main workflow challenge identified during the pre-pilot period.

Because recommendations required secondary manual review, we determined that the system did not need to run in real time (synchronously) but could instead be scheduled at regular intervals. The working group determined that runs every 2 weeks with a 4-week prospective window were appropriate for the expected endoscopy volume at our institution. This asynchronous approach had 2 major advantages: 1) it significantly reduced the technical requirements of integration, and 2) it permitted reasonable lead time for secondary human review and case interception when appropriate. It also allowed easy integration into the preexisting workflow. We also considered a synchronous approach, for which the optimal trigger point would have been endoscopy scheduling. However, the endoscopy schedulers at our institution are not medically qualified to evaluate the appropriateness of the algorithm’s recommendations, making a synchronous approach impractical to implement.

Project development approach

The agile approach to project development is common in the modern software development industry and is gaining popularity in healthcare products.14,15 We found this approach especially advantageous for niche problems. As compared to a single development cycle strategy, iterative development cycles allowed constant improvement in performance while concurrently decreasing relative risk of failure. Additionally, by demonstrating results (intercepted errors) concurrent with ongoing development, we continued to garner support from leaders and key stakeholders.

The expert panel involvement was also critical to success. With expert input, we developed a relatively simple yet effective algorithm to drive the CDSS. This panel also guided planning of live workflow integration even in the early stages of development, which allowed seamless fast-tracking of the final solution into standard-of-care at our institution at the pilot’s conclusion.

Key components of our project approach include: 1) identification of a pain point, 2) process mapping the existing workflow to identify potential targets for an informatics solution, 3) assembly of an expert panel and working group to design a solution, 4) plan for integration of solution into workflow, 5) iterative development with concurrent pilot, and 6) concurrent performance analysis, testing, and improvement. This strategy can be generalized to other areas and projects. Within our group, this method is presently being used for a project aimed at developing a CDSS to improve quality of bowel preparations for colonoscopies.

Limitations

A major limitation of our system is its reliance on historical endoscopy data. Furthermore, we only had access to our institution’s endoscopy system. Post-pilot database query revealed that 41.8% of cases involved patients without a prior endoscopy in our database. However, the CDSS was specifically designed for gaps within our institutional workflow, and the combined mechanism (manual triage plus CDSS) assigned the correct sedation in all but a handful of cases. Historical data were the single best predictor of anesthesia need, which simplified the algorithm design.

Future plans for the system include expansion to endoscopy-naïve patients. We have already developed functionality to extract medical history, problem lists, medications, and labs from the EHR and have used them for other automated triaging projects. Direct application of these patient features to determine appropriate sedation, however, is difficult as no predictors have sufficiently high correlation to sedation requirements to be used independently in a decision algorithm. These factors are nuanced and complex, with interactions that require a multivariate prediction model. Development and validation of such a model was outside the scope of our pilot and would have delayed implementation significantly. Our group is currently working on a machine-learned model based on additional patient features to predict need for anesthesia in a separate research endeavor. Such a model, after validation, would be able to triage endoscopy-naïve patients. For this pilot, the simple model was able to start intercepting errors within 2 months of project inception.

Another limitation is that our CDSS only detects unidirectional errors: patients who are ordered for moderate sedation but require anesthesia. Triaging patients correctly to anesthesia care is challenging for most GI practices. Some in the GI community have advocated for anesthesia involvement in all cases, but others have argued that such a policy would incur medical waste when moderate sedation is sufficient. At our institution, there are not enough anesthesia resources to provide monitored anesthesia care for all patients undergoing endoscopy. Therefore, our CDSS was designed to detect the error type that causes workflow disruption and potential patient safety concerns.

Our project setting was a single academic medical center. The generalizability of the final product is limited by differences in workflows between different hospitals. The customizations that made the CDSS effective in our hospital would require adjustments to be deployed in another setting. However, we believe the overall development strategy and algorithm approach can be adopted for outside use. Additional algorithm details can be found in the Supplementary Appendix.

The comparison groups in the analysis were neither randomized nor propensity-score matched, and there were small but statistically significant differences between them in overall procedure-type composition. One explanation is that the pre-pilot period included the last 3 months of 2018 and could reflect seasonal differences in procedures; for example, the relatively higher percentage of colonoscopies in that period could reflect patients' desire to complete procedures before the end of the insurance year. Another possibility is that these differences reflect gradual shifts in practice patterns at our institution. Analyses to explore these possibilities were outside the scope of our study. The 4-month pre-pilot period was chosen for purely practical reasons: it began when we started systematic collection of order error data and ended at pilot launch. Nonetheless, the most important feature, the prevalence of sedation-type order errors at the time of scheduling, did not differ significantly between groups (0.39% vs 0.34%, P = .54) despite the nonrandomized, unmatched study design.
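One common way to check whether a background prevalence differs between two periods is a pooled two-proportion z-test. The sketch below is illustrative only; the text above does not state which test produced P = .54, and the counts in the usage line are hypothetical approximations back-calculated from the rounded percentages (about 25 of 6434 and 55 of 16 321 cases), not the study's actual counts.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # prevalence under the null of no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts approximating 0.39% of 6434 and 0.34% of 16 321 cases.
z, p = two_proportion_z_test(25, 6434, 55, 16321)
```

With these approximate counts the p-value is well above .05, consistent with no detectable difference in background prevalence. With event counts this small, an exact test (e.g., Fisher's) is a reasonable alternative to the normal approximation.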

Lastly, we recognize that there are numerous approaches to the problem we presented, and some may perform better than ours. Ultimately, our goal was practical: to develop and implement a solution that could benefit our patients quickly. We therefore chose a good, easily implemented system over an ideal but more difficult-to-implement one. We deployed our system and began benefiting patients within 2 months of identifying the workflow gaps. Improved structured data capture at the time of ordering, changes to the user interface, and a prediction model for endoscopy-naïve patients are all planned as future work.

CONCLUSION

A workflow-integrated, software-based CDSS with expert consensus-derived logic rules and NLP significantly reduced sedation-type order errors at our institution. From a low-prevalence population, the system produced an enriched cohort of at-risk patients for manual review, enabling sedation-type order errors to be intercepted before the procedure. Our experience demonstrates the effectiveness of strategic project planning that involves stakeholders, subject-matter experts, and end users early and follows an iterative design strategy. The end product was a customized, relatively simple, and effective tool tailored to the specific requirements and characteristics of our clinical practice environment. This approach is well suited to important problems that may be difficult to solve using traditional methods.
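The value of the enriched cohort can be expressed as the number of chart reviews needed per error found. Reviewing every chart costs about 1/prevalence reviews per error, whereas reviewing only flagged charts costs about 1/PPV, where PPV is the fraction of flagged charts that contain an error. Using the figures reported in the Abstract, 296.7 / 3.5 is roughly an 85-fold reduction in review burden. The sketch below shows this arithmetic; the function names and the example counts are hypothetical.

```python
import math


def reviews_per_error(charts_reviewed: int, errors_found: int) -> float:
    """Chart reviews needed to find one sedation-order error (charts / errors)."""
    if errors_found == 0:
        return math.inf  # no errors found: every review is pure overhead
    return charts_reviewed / errors_found


def fold_reduction(baseline: float, enriched: float) -> float:
    """How many times fewer reviews per error the enriched cohort needs."""
    return baseline / enriched


# Hypothetical example: reviewing every chart in a population with ~0.34% error
# prevalence versus reviewing only a rule-flagged subset.
all_charts = reviews_per_error(10_000, 34)  # about 294 reviews per error
flagged = reviews_per_error(100, 28)        # about 3.6 reviews per error
```

The trade-off is that charts outside the flagged cohort are not reviewed, so any errors the rules miss go undetected; the review burden falls only as fast as sensitivity allows.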

FUNDING

This work was supported in part by NIH grant T32 DK007533-35, a training grant from the National Institute of Diabetes and Digestive and Kidney Diseases.

AUTHOR CONTRIBUTIONS

All authors made substantial contributions to the conception and design of the work. LS was responsible for data acquisition. LS, AL, and AW were responsible for the data analysis and interpretation. LS and AL drafted the manuscript. All authors made critical revisions to the manuscript, gave final approval to the version to be published, and agreed to be accountable for all aspects of the work.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

ACKNOWLEDGMENTS

This project would not have been possible without the help of Irina Filina RN, CGRN, whose constant efforts considerably improve patient safety and outcomes in our endoscopy department. We would also like to thank Bob MacNeil from Brigham Health Information Systems, whose help allowed us to solve many of the technical challenges that arose during our project. Lastly, we would like to thank Dr. Jessica Hu for her repeated readings and edits of various drafts of this manuscript.

CONFLICT OF INTEREST STATEMENT

LS is a member of the Gastroenterology Steering Board for Epic Systems. AL is a consultant for the Abbott Medical Device Cybersecurity Council. The other authors have no competing interests to declare.

REFERENCES

- 1. Peery AF, Dellon ES, Lund J, et al. Burden of gastrointestinal disease in the United States: 2012 update. Gastroenterology 2012; 143 (5): 1179–87.e3.

- 2. Dossa F, Medeiros B, Keng C, et al. Propofol versus midazolam with or without short-acting opioids for sedation in colonoscopy: A systematic review and meta-analysis of safety, satisfaction, and efficiency outcomes. Gastrointest Endosc 2020; 91 (5): 1015–26.e7.

- 3. Amornyotin S. Sedation and monitoring for gastrointestinal endoscopy. World J Gastrointest Endosc 2013; 5 (2): 47–55.

- 4. Vélez-Díaz-Pallarés M, Pérez-Menéndez-Conde C, Bermejo-Vicedo T. Systematic review of computerized prescriber order entry and clinical decision support. Am J Health Syst Pharm 2018; 75 (23): 1909–21.

- 5. Wu X, Wu C, Zhang K, et al. Residents’ numeric inputting error in computerized physician order entry prescription. Int J Med Inform 2016; 88: 25–33.

- 6. Patterson BW, Pulia MS, Ravi S, et al. Scope and influence of electronic health record–integrated clinical decision support in the emergency department: a systematic review. Ann Emerg Med 2019; 74 (2): 285–96.

- 7. Korb-Savoldelli V, Boussadi A, Durieux P, et al. Prevalence of computerized physician order entry systems–related medication prescription errors: a systematic review. Int J Med Inform 2018; 111: 112–22.

- 8. Westbrook JI, Baysari MT, Li L, et al. The safety of electronic prescribing: manifestations, mechanisms, and rates of system-related errors associated with two commercial systems in hospitals. J Am Med Inform Assoc 2013; 20 (6): 1159–67.

- 9. Bizzo BC, Almeida RR, Michalski MH, et al. Artificial intelligence and clinical decision support for radiologists and referring providers. J Am Coll Radiol 2019; 16 (9): 1351–6.

- 10. Goehler A, Moore C, Manne-Goehler JM, et al. Clinical decision support for ordering CTA-PE studies in the emergency department—a pilot on feasibility and clinical impact in a Tertiary Medical Center. Acad Radiol 2019; 26 (8): 1077–83.

- 11. Westbrook JI, Gospodarevskaya E, Li L, et al. Cost-effectiveness analysis of a hospital electronic medication management system. J Am Med Inform Assoc 2015; 22 (4): 784–93.

- 12. Kadmon G, Pinchover M, Weissbach A, et al. Case not closed: prescription errors 12 years after computerized physician order entry implementation. J Pediatr 2017; 190: 236–40.e2.

- 13. Gates PJ, Meyerson SA, Baysari MT, et al. The prevalence of dose errors among paediatric patients in hospital wards with and without health information technology: a systematic review and meta-analysis. Drug Saf 2019; 42 (1): 13–25.

- 14. Giordanengo A, Øzturk P, Hansen AH, et al. Design and development of a context-aware knowledge-based module for identifying relevant information and information gaps in patients with type 1 diabetes self-collected health data. JMIR Diabetes 2018; 3 (3): e10431.

- 15. Kannan V, Basit MA, Bajaj P, et al. User stories as lightweight requirements for agile clinical decision support development. J Am Med Inform Assoc 2019; 26 (11): 1344–54.