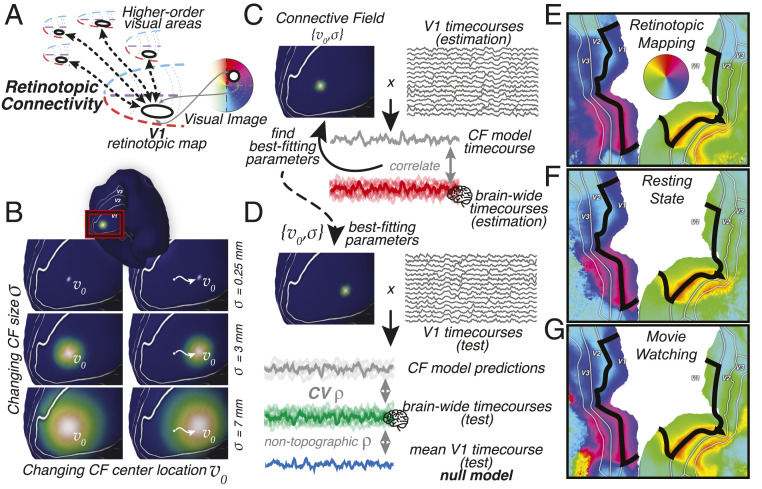

Fig. 1.

(A) Visual processing in higher-order brain regions reveals itself through spatially specific retinotopic connectivity with V1. Because V1 contains a map of visual space, retinotopic connectivity with V1 can be translated into representations of visual space. (B) Retinotopic connectivity is quantified by modeling responses throughout the brain as emanating from Gaussian connective fields (CFs) on the surface of V1. Example Gaussian CF model profiles with different size and location parameters are shown on the inflated surface. (C) Predictions are generated by weighting the ongoing BOLD signals in V1 with these CF kernels. Estimating CF parameters for all locations throughout the brain also captures significant variance outside the nominal visual system (non-V1 time courses). (D) Cross-validation procedure. The cross-validated (CV) prediction performance of the CF model is assessed on a left-out test dataset and is corrected for the performance of a nontopographic null model, the average V1 time course. (E–G) CF modeling results can be used to reconstruct the retinotopic structure of visual cortex. Polar angle preferences outside the black outline are reconstructed solely from their topographic connectivity with V1, inside the black outline. CF modeling was performed separately on data from retinotopic-mapping, resting-state, and movie-watching experiments. Visual field preferences are stable across cognitive states, as evidenced by the robust locations of polar angle reversals at the borders between the V2 and V3 field maps. Additional retinotopic structure visualizations are in SI Appendix, Figs. S1 and S2.
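Panels B–D describe how a CF prediction is built and scored. The sketch below illustrates that logic under our own assumptions; it is not the authors' pipeline. V1 is reduced to a one-dimensional row of vertices whose distances stand in for distances on the cortical surface, CF centre and size are fit by a simple grid search with a least-squares gain and offset, and the null-model correction is assumed to be the difference between the test-set R² of the CF model and that of the average V1 time course. All function names are hypothetical.

```python
import numpy as np

def gaussian_cf_kernel(dist, sigma):
    # Normalized Gaussian weights over V1 vertices; dist holds each vertex's
    # distance from the CF centre (a stand-in for cortical surface distance).
    w = np.exp(-dist ** 2 / (2.0 * sigma ** 2))
    return w / w.sum()

def design(pred):
    # Two-column design matrix: the raw prediction plus an intercept.
    return np.column_stack([pred, np.ones_like(pred)])

def r2(y, yhat):
    # Coefficient of determination of yhat as a prediction of y.
    return 1.0 - np.sum((y - yhat) ** 2) / np.sum((y - y.mean()) ** 2)

def fit_cf(v1_train, y_train, dist_matrix, sigmas):
    # Assumption: grid search over CF centre (any V1 vertex) and size on the
    # training run, with a least-squares gain and offset for each candidate.
    best = None
    for centre in range(dist_matrix.shape[0]):
        for sigma in sigmas:
            X = design(gaussian_cf_kernel(dist_matrix[centre], sigma) @ v1_train)
            beta, *_ = np.linalg.lstsq(X, y_train, rcond=None)
            score = r2(y_train, X @ beta)
            if best is None or score > best[0]:
                best = (score, centre, sigma, beta)
    return best[1:]

def cv_corrected_r2(v1_train, y_train, v1_test, y_test, dist_matrix, sigmas):
    centre, sigma, beta = fit_cf(v1_train, y_train, dist_matrix, sigmas)
    kernel = gaussian_cf_kernel(dist_matrix[centre], sigma)
    r2_cf = r2(y_test, design(kernel @ v1_test) @ beta)
    # Null model: the average V1 time course, scaled on the training run.
    null_beta, *_ = np.linalg.lstsq(design(v1_train.mean(axis=0)), y_train, rcond=None)
    r2_null = r2(y_test, design(v1_test.mean(axis=0)) @ null_beta)
    # Assumption: "corrected" is taken here as a simple R^2 difference.
    return r2_cf - r2_null, r2_cf, r2_null, centre, sigma

# Synthetic example: 40 V1 vertices on a line with independent noise signals,
# and a target time course generated from a CF centred on vertex 25, sigma = 3.
rng = np.random.default_rng(0)
pos = np.arange(40, dtype=float)
dist_matrix = np.abs(pos[:, None] - pos[None, :])
v1_train, v1_test = rng.standard_normal((40, 200)), rng.standard_normal((40, 200))
true_kernel = gaussian_cf_kernel(dist_matrix[25], 3.0)
y_train = 2.0 * true_kernel @ v1_train + 0.5 + 0.05 * rng.standard_normal(200)
y_test = 2.0 * true_kernel @ v1_test + 0.5 + 0.05 * rng.standard_normal(200)

corrected, r2_cf, r2_null, centre, sigma = cv_corrected_r2(
    v1_train, y_train, v1_test, y_test, dist_matrix, sigmas=[1.0, 2.0, 3.0, 4.0, 6.0])
print(f"centre={centre}, sigma={sigma}, corrected CV R2={corrected:.2f}")
```

Normalizing the kernel keeps the size parameter responsible only for spatial spread, while the fitted gain absorbs differences in BOLD amplitude between V1 and the target location. Because the average V1 time course contains no topographic information, any CV performance above it reflects spatially specific connectivity.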