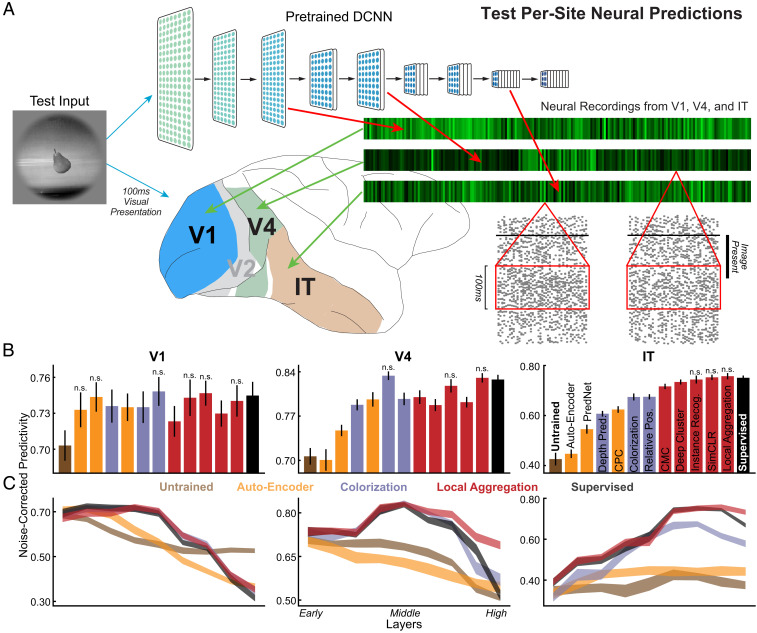

Fig. 2.

Quantifying similarity of unsupervised neural networks to visual cortex data. (A) After being trained with unsupervised objectives, networks were run on all stimuli for which neural responses were collected. Network unit activations from each convolutional layer were then used to predict the V1, V4, and IT neural responses with regularized linear regression (51). For each neuron, the Pearson correlation between the predicted responses and the recorded responses was computed on held-out validation images and then corrected by the noise ceiling of that neuron (Materials and Methods). The median of the noise-corrected correlations across neurons was then reported for each cortical brain area. (B) Neural predictivity of the most-predictive neural network layer. Error bars represent bootstrapped standard errors across neurons and model initializations (Materials and Methods). Predictivity of untrained and supervised categorization networks represents negative and positive controls, respectively. Statistical significance of the difference between each unsupervised method and the supervised model was computed through bootstrapping methods. Methods with neural predictivity comparable to the supervised model are labeled “n.s.”; methods without any annotation are significantly worse than the supervised model (SI Appendix, Fig. S5). (C) Neural predictivity for each brain area from all network layers, for several representative unsupervised networks, including AutoEncoder, colorization, and local aggregation.
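The procedure in panel A and the error bars in panel B can be sketched roughly as follows. This is a minimal illustration, not the authors' pipeline: it assumes closed-form ridge regression as the regularized linear regression, assumes the noise correction is a division of each neuron's correlation by a precomputed ceiling value, and bootstraps over neurons only (the caption also resamples model initializations). All function names, the `alpha` parameter, and the array layout (images by units, images by neurons) are hypothetical.

```python
import numpy as np


def ridge_predict(X_train, Y_train, X_test, alpha=1.0):
    """Fit ridge regression on centered data and predict held-out responses.

    Ridge is an assumed stand-in for the "regularized linear regression"
    named in the caption.
    """
    x_mean = X_train.mean(axis=0)
    y_mean = Y_train.mean(axis=0)
    Xc = X_train - x_mean
    Yc = Y_train - y_mean
    n_features = Xc.shape[1]
    # Closed-form solution: (X^T X + alpha I)^{-1} X^T Y
    W = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(n_features), Xc.T @ Yc)
    return (X_test - x_mean) @ W + y_mean


def pearson_per_neuron(pred, actual):
    """Pearson correlation between matching columns (one value per neuron)."""
    pc = pred - pred.mean(axis=0)
    ac = actual - actual.mean(axis=0)
    denom = np.sqrt((pc ** 2).sum(axis=0) * (ac ** 2).sum(axis=0))
    return (pc * ac).sum(axis=0) / denom


def neural_predictivity(features, responses, noise_ceiling,
                        train_idx, test_idx, alpha=1.0):
    """Median noise-corrected correlation across neurons for one layer.

    features:      (n_images, n_units) layer activations
    responses:     (n_images, n_neurons) recorded responses
    noise_ceiling: (n_neurons,) per-neuron ceiling, assumed to lie in (0, 1]
    """
    pred = ridge_predict(features[train_idx], responses[train_idx],
                         features[test_idx], alpha)
    r = pearson_per_neuron(pred, responses[test_idx])
    corrected = r / noise_ceiling  # assumed form of the noise correction
    return np.median(corrected), corrected


def bootstrap_se(per_neuron_scores, n_boot=1000, seed=0):
    """Standard error of the median, resampling neurons with replacement."""
    rng = np.random.default_rng(seed)
    n = len(per_neuron_scores)
    idx = rng.integers(0, n, size=(n_boot, n))
    medians = np.median(per_neuron_scores[idx], axis=1)
    return medians.std(ddof=1)
```

Under these assumptions, running `neural_predictivity` once per convolutional layer and taking the layer with the highest median would give the "most-predictive layer" value plotted in panel B, with `bootstrap_se` supplying an approximate error bar.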