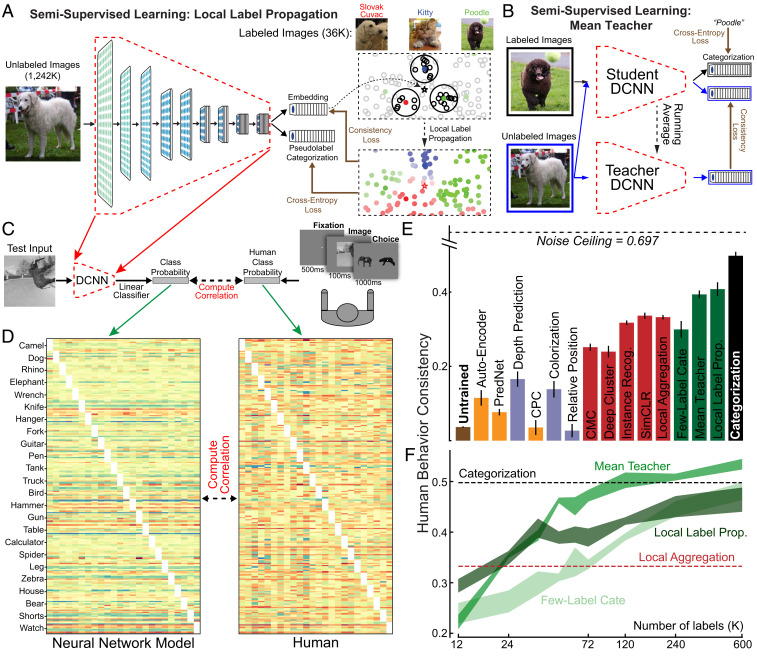

Fig. 4.

Behavioral consistency and semisupervised learning. (A) In the LLP method (64), DCNNs generated an embedding and a category prediction for each example. The embedding of an unlabeled input was used to infer its pseudolabel from its labeled neighbors (colored points), whose voting weights were determined by their distances from that embedding and by their local density (highlighted areas). The DCNNs were then optimized with per-example confidence weights (color brightness) so that their category predictions matched the pseudolabels, while each embedding was attracted toward embeddings sharing its pseudolabel and repelled from the others. (B) To measure behavioral consistency, we trained linear classifiers on each model's penultimate-layer features using a set of images from 24 classes (21, 49). The resulting image-by-category confusion matrix was compared with data from humans performing the same two-alternative forced-choice task: each trial began with a 500-ms fixation point, then presented the image for 100 ms, after which the subject chose between the true category and one distractor category, with both options shown for 1,000 ms (21, 49). We report the Pearson correlation corrected by the noise ceiling. (C) Example confusion matrices for human subjects and a model (an LLP model trained with 36,000 labels). Each category contributed 10 test images to the confusion matrices. (D) Behavioral consistency of DCNNs trained with different objectives. Green bars denote semisupervised models trained with 36,000 labels. “Few-Label” denotes a ResNet-18 trained on ImageNet with only 36,000 labeled images, the same number of labels used by the MT and LLP models. Error bars are standard deviations across three networks with different initializations. (E and F) Behavioral consistency (E) and categorization accuracy in percent (F) of semisupervised models trained with different numbers of labels.
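The neighbor-voting step in panel A can be illustrated as a weighted k-nearest-neighbor vote. This is a minimal plain-Python sketch, not the implementation of ref. 64: the cosine similarity, the temperature `tau`, the neighbor count `k`, and the density proxy (each labeled point's summed exponentiated similarity to its own nearest labeled points) are all assumptions, and the helper names are hypothetical. Each neighbor's vote grows with its similarity to the query embedding and shrinks with its local density; the confidence is the winning category's share of the total vote.

```python
import math


def cosine(u, v):
    """Cosine similarity between two nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def local_density(i, points, k, tau):
    """Hypothetical density proxy: summed exp-similarity of point i to its k nearest other points."""
    sims = sorted((cosine(points[i], points[j]) for j in range(len(points)) if j != i), reverse=True)
    return sum(math.exp(s / tau) for s in sims[:k])


def propagate_label(query, labeled, labels, k=3, tau=0.1, density_k=2):
    """Hypothetical helper: return (pseudolabel, confidence) for one unlabeled embedding."""
    densities = [local_density(i, labeled, density_k, tau) for i in range(len(labeled))]
    # Keep the k labeled points most similar to the query embedding.
    nearest = sorted(range(len(labeled)), key=lambda i: cosine(query, labeled[i]), reverse=True)[:k]
    votes = {}
    for i in nearest:
        # Closer neighbors get larger weights; neighbors in dense regions get smaller weights.
        weight = math.exp(cosine(query, labeled[i]) / tau) / densities[i]
        votes[labels[i]] = votes.get(labels[i], 0.0) + weight
    best = max(votes, key=votes.get)
    # Confidence is the fraction of the total vote won by the chosen category.
    return best, votes[best] / sum(votes.values())


# Toy embeddings for illustration only, not data from the study.
labeled = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = ["cat", "cat", "dog", "dog"]
print(propagate_label([1.0, 0.05], labeled, labels))
```

With these toy points, a query near the "cat" cluster is assigned "cat" with a confidence close to 1, because its one "dog" neighbor is far away and contributes only a tiny weight.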
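The training objective in panel A pairs a category term with an embedding term, both scaled by the example's confidence. The sketch below is a hypothetical single-example version that assumes L2-normalized embeddings, a memory of stored embeddings with their pseudolabels, and a temperature `tau`. The specific form (cross-entropy plus a softmax-ratio attraction/repulsion term) is a common contrastive-style choice and may differ from the exact loss used in ref. 64.

```python
import math


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def llp_style_loss(logits, pseudolabel, confidence, embedding, memory, memory_labels, tau=0.1):
    """Hypothetical confidence-weighted loss for one unlabeled example.

    logits: unnormalized category scores (pseudolabel indexes into this list).
    embedding, memory: L2-normalized embedding vectors (assumed).
    memory_labels: pseudolabels of the stored embeddings.
    """
    # Category term: cross-entropy of the softmax prediction against the pseudolabel.
    ce = log_sum_exp(logits) - logits[pseudolabel]
    # Temperature-scaled dot-product similarities to every stored embedding.
    sims = [sum(a * b for a, b in zip(embedding, m)) / tau for m in memory]
    same = [s for s, lab in zip(sims, memory_labels) if lab == pseudolabel]
    # Minimizing this term pulls the embedding toward same-pseudolabel points
    # and pushes it away from the others; it is zero if no same-label points exist.
    agg = (log_sum_exp(sims) - log_sum_exp(same)) if same else 0.0
    # The per-example confidence scales the whole loss, so uncertain pseudolabels count less.
    return confidence * (ce + agg)


# Toy values for illustration only.
memory = [[1.0, 0.0], [0.0, 1.0]]
memory_labels = [0, 1]
print(llp_style_loss([2.0, 0.0], 0, 0.9, [1.0, 0.0], memory, memory_labels))
print(llp_style_loss([2.0, 0.0], 0, 0.9, [0.0, 1.0], memory, memory_labels))
```

The second call yields a much larger loss than the first because its embedding sits on the other pseudolabel's stored point, which is the repulsion the caption describes.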
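Panel B's metric can be illustrated with a standard noise-ceiling correction: the raw Pearson correlation between model and human scores is divided by the square root of the human split-half reliability (Spearman-Brown corrected), optionally multiplied by the model's own reliability. This is one common form of the correction and an assumption here; refs. 21 and 49 give the exact procedure. The input lists are assumed to be flattened image-by-category confusion entries, with the human data split into two random halves of subjects, and the helper names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def spearman_brown(r_half):
    """Extrapolate a split-half correlation to full-data reliability."""
    return 2 * r_half / (1 + r_half)


def noise_corrected_consistency(model_scores, human_half_a, human_half_b, model_reliability=1.0):
    """Hypothetical helper: raw model-human correlation divided by the noise ceiling."""
    # Pool the two subject halves into one human estimate per confusion-matrix entry.
    human_scores = [(a + b) / 2 for a, b in zip(human_half_a, human_half_b)]
    raw = pearson(model_scores, human_scores)
    human_reliability = spearman_brown(pearson(human_half_a, human_half_b))
    # model_reliability = 1.0 treats the model's outputs as noise-free (assumption).
    return raw / math.sqrt(human_reliability * model_reliability)


# Toy numbers for illustration only, not data from the study.
toy_half_a = [0.9, 0.6, 0.8, 0.3, 0.7]
toy_half_b = [0.8, 0.7, 0.9, 0.4, 0.6]
toy_model = [0.85, 0.5, 0.9, 0.4, 0.6]
print(noise_corrected_consistency(toy_model, toy_half_a, toy_half_b))
```

Because the human reliability is below 1, the corrected value exceeds the raw correlation; dividing by the ceiling expresses consistency relative to how well the noisy human data could agree with itself.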