Abstract

With the emergence and rapid proliferation of social media platforms and social networking sites, recent years have witnessed a surge of misinformation spreading in our daily life. Drawing on a large-scale dataset which covers more than 1.4M posts and 18M comments from an online social media platform, we investigate the propagation of two distinct narratives–(i) conspiracy information, whose claims are generally unsubstantiated and thus referred as misinformation to some extent, and (ii) scientific information, whose origins are generally readily identifiable and verifiable. We find that conspiracy cascades tend to propagate in a multigenerational branching process whereas science cascades are more likely to grow in a breadth-first manner. Specifically, conspiracy information triggers larger cascades, involves more users and generations, persists longer, and is more viral and bursty than science information. Content analysis reveals that conspiracy cascades contain more negative words and emotional words which convey anger, fear, disgust, surprise and trust. We also find that conspiracy cascades are much more concerned with political and controversial topics. After applying machine learning models, we achieve an AUC score of nearly 90% in discriminating conspiracy from science narratives using the constructed features.

We further investigate user’s role during the growth of cascades. In contrast with previous assumption that misinformation is primarily driven by a small set of users, we find that conspiracy cascades are more likely to be controlled by a broader set of users than science cascades, imposing new challenges on the management of misinformation. Although political affinity is thought to affect the consumption of misinformation, there is very little evidence that political orientation of the information source plays a role during the propagation of conspiracy information; Instead, we find that conspiracy information from media outlets with left or right orientation triggers smaller cascades and is less viral than information from online social media platforms (e.g., Twitter and Imgur) whose political orientations are unclear. Our study provides complementing evidence to current misinformation research and has practical policy implications to stem the propagation and mitigate the influence of misinformation online.

Keywords: Misinformation, Conspiracy, Information cascades, Online community, Political affinity

Introduction

The emergence of digital technologies such as e-mail, online social networks and instant messages, has dramatically shifted the way we get and consume information and provides an unprecedented opportunity to novel investigations of the information aggregation in networks on a large scale [3, 10, 15, 29, 36, 40]. Although new social technologies are thought to foster the aggregation and consumption of news, they may also contribute to fueling the dissemination of rumors or misinformation in today’s society.

Misinformation research has attracted increasing attention in recent years, primarily under the political context such as the US presidential election [1, 7, 20, 23, 28] and partisanship [22, 37], but it also has arisen in a variety of topics such as extreme events [25, 26, 46], social bots [43, 47] and rumor detection [2, 13, 39, 44]. More recently, the growing surge of misinformation about COVID-19 disease even poses risks to global health.1 Misinformation is able to exacerbate distress during terrorist attack, alter public opinion, affect election outcome and create a climate of mistrust, as such it’s of prime significance to understand the propagation and social origins of it [12, 48].

As more and more people are reading news online [19, 34], online social media sites yield invaluable sources for probing the underlying mechanisms behind the formation and propagation of misinformation due to the ready availability of large-scale datasets [31]. Several valuable efforts have been made to the investigation of fake news or misinformation [7, 20, 48], but most of them have focused largely, if not exclusively, on user-based platforms such as Facebook or Twitter in the main analysis. While for interest- or community-based platforms such as StackExchange or Reddit, there is still an immense shortage of investigation on the circulation of misinformation on such platforms, especially for communities or interest groups where misinformed or unsubstantiated messages are chronically circulated. Moreover, user engagement, which measures the intensity of user involvement during the propagation of misinformation, has been largely overlooked in previous studies [1, 7, 20, 22, 23]. In addition, although people with different political orientations are inclined to consume specific kinds of misinformation [23], whether political orientation of the information source matters in the circulation of misinformation in particular communities is still poorly understood.

Leveraging data from two Reddit2 communities or subreddits–r/conspiracy and r/science, we conduct a large-scale quantitative analysis through extensive comparison between conspiracy and science discussion threads in respective community. In this work, we follow the practice in [12], where conspiracy theories are considered unsubstantiated due to their lack of verifiability while science narratives are generally identifiable and verifiable. Note that, we don’t claim that all conspiracy narratives in r/conspiracy are fake; Instead, we refer them as misinformation or low-credibility contents due to their nature of uncertainty and incitement [12]. For example, a lot of users in r/conspiracy doubt about the veracity of the Apollo moon landing project which is a widely used case by conspiracists. If these misinformed or unsubstantiated messages are proliferating over the Internet, it would be dangerous for the form of public opinion in the real world. On the contrary, r/science is a community to share and discuss scientific news and advances whose origins are generally identifiable and verifiable. For instance, many conspiracy theories claim that the COVID-19 coronavirus, which is causing the pandemic, is created in a lab, while scientific analysis would suggest that the coronavirus is not a purposefully manipulated virus. Also note that we focus on the possibility of verification of the information rather than the quality of the information. Therefore, the systematic comparison between conspiracy and science discussion cascades should be able to yield a clear understanding of the patterns that misinformation differs from substantiated information.

The present work

In this work, we first analyze the cascade dynamics of conspiracy and science threads in respective community. Generally, a discussion thread consists of a post as well as comments under the post arranged in a hierarchical way, and can be naturally mapped into a cascade tree with the post acting as the root and the hierarchical comments forming the branches and leaves in a sequential order. We mainly consider the differences between conspiracy and science cascades in terms of several crucial structural and temporal properties, including cascade size, depth, virality and lifetime. Our analysis suggests that conspiracy information tends to trigger larger cascades, get more individuals involved, propagate further, survive longer, and is more viral and bursty than science information. Our findings are consistent with previous studies [12, 48] in terms of these structural and temporal properties.

We also find that conspiracy cascades tend to circulate in a multigenerational branching manner, while science cascades are likely to grow in a breadth-first way where science cascades attract more users at each generation or depth compared with conspiracy cascades. In general, it takes conspiracy cascades less time to reach the same depth as science cascades, but it will cost much more time for them to grow into larger cascades (e.g., ≥ 20). It is also important to note that there are more science cases than conspiracy cases among the largest cascades (e.g., ≥ 1,000), which is not found in user-based platforms [12, 48].

After applying content analysis, we find that compared with science cascades, conspiracy cascades are much richer in sentiment and convey more emotions of anger, fear, disgust, surprise and trust in their contents. Some of the findings are also consistent with previous study [48]. Topic modeling further reveals that conspiracy narratives tend to pay more attention to political and controversial topics. In light of the disparity between conspiracy and science cascades in terms of dynamic, emotion and topic features, we implement a serial of classification tasks to distinguish conspiracy narratives from science ones. We achieve good classification performance–an AUC score of nearly 90%–using all three sets of features in ten random trials where 80% of the data are assigned as the training set while the rest 20% of the data as the test set.

To study the intensity of user engagement during the propagation of conspiracy or science narratives, two comment networks are constructed based on the explicit comment paths between users in each community. After applying percolation process on comment networks, we find that conspiracy comment network is less concentrated on focal nodes compared with science comment network, suggesting that conspiracy cascades are less likely to be driven by a few set of users than expected.

We further explore the relationship between political orientation and circulation of misinformation. Leveraging a fact-checking list of media outlets and the URLs embedded in the contents, we are able to assign conspiracy narratives into different political subgroups, such as left, left leaning, center, right leaning and right. However, there is very little evidence that political orientation contributes to the circulation of conspiracy information as the size, depth and virality of cascades from different political subgroups are either smaller than or nearly equal to the general case. Instead, we find that conspiracy information from online social media platforms like Twitter and Imgur generally induces larger cascades, propagates further and is more viral than the general.

Our work presents important findings complementing previous misinformation research and could offer valid insights for the fight with misinformation under current situation.

Related work

There are mainly four lines of research related to our current work:

Misinformation

Recent years have brought the term “misinformation” into broad circulation due to the prevalence of fake news in online social media platforms. The use of the term misinformation could vary drastically from the view of researchers from different backgrounds [42]. Here we follow the practice in [5, 12] where conspiracy narratives are treated as misinformation due to their lack of verifiability.

The circulation of misinformation over the Internet has great potential to endanger the democratic election, increase public distress during terror attacks, induce misallocation of resources after natural disasters and damage the credibility of regulatory agencies [4, 26, 28, 48]. Although there has been a surge of misinformation research recently, most of them either use small data samples [1, 23] or only focus on user-based platforms [7, 48]. As a complement, our study is based on two interest-based communities in Reddit, which are homes for sharing and discussing conspiracy and scientific narratives. The dataset is thought to cover comprehensive historical data of discussion threads in each community with a time span of more than ten years.

Information cascades

The growing availability of large scale digital traces, along with the development of computational techniques to analyze them, has fostered extensive empirical investigations on information cascades. Recently, there has been a surge of interests in terms of diverse information cascades, such as reshare of photos [10], retweet of posts [12, 29, 48], adoption of new products [3, 15] and formation of social groups [40]. For example, in [12] the successive sharing of news in Facebook is conceptualized as information cascade.

Discussion threads, which involve elaborated conversational interactions between users, can also be characterized as cascades [17, 18, 27]. Our study focuses on discussion threads, where the successive ‘comment-to’ relations indicate the information flows. From the view of network analysis, discussion threads can be naturally represented by tree structure, with nodes representing a post and the comments under it and edges representing comment-to actions [17, 27, 30]. We would apply network analysis in our study to depict several crucial structural properties from the constructed discussion cascades.

Text mining

Text mining provides technical support for further investigation of contents beyond merely structural or temporal properties of cascades. A number of studies have adopted the word-emotion association lexicon to make automatic evaluation of sentiments and emotions embedded in texts [8, 11, 41, 48]. In addition, topic modeling is another widely used method to quantify the underlying topic concerns that characterize a set of documents [6, 21, 35, 45, 48, 50]. For example, there are significant emotional gaps between true and false news [48], and users’ topic concerns are also shown to be helpful to predict their adoption behaviors [21]. In this study, we follow these practices to elicit sentiments, emotions and topics that are conveyed in the narratives, and explore to what extent these features help to discriminate conspiracy narratives from scientific ones.

Political affinity

Political affinity is thought to affect the consumption and spread of information. As documented in the literature, Trump supporters are likely to visit the most fake news websites [23], and people with the right political affinity are more likely to share fake news on Twitter than people on the left do so [20]. It also has been shown that traditional center and left leaning news spreaders have stronger influence on the activity of Clinton supporters than that of Trump supporters during the 2016 US presidential election [7]. These analyses have focused exclusively on the political affinity of news spreaders instead of the political orientation of the information source. Here in our study, we aim to investigate the role of the political orientation (e.g., left, center or right) of conspiracy narratives plays during the propagation of misinformed cascades.

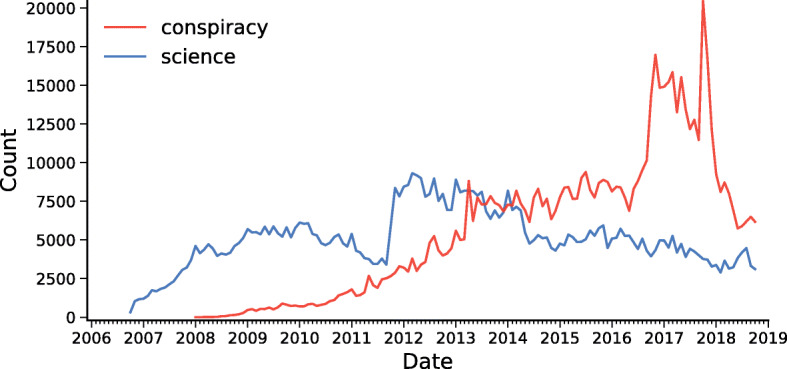

Data

We use data from Reddit,3 a social news and discussion website, which ranks in the top 20 websites in the world according to the traffic. The dataset covers more than 1.4M posts and 18M comments under two distinct online communities or subreddits (r/science and r/conspiracy) over a time span of more than ten years (until October 2018). Figure 1 shows the monthly cascade count for both conspiracy and science posts. Clearly, we see a surge of conspiracy cascades during the US presidential election (e.g., the 2016 presidential election), which may indicate that the prevalence of misinformation is likely to be catalyzed by political events.

Fig. 1.

Monthly cascade count (2006-2018)

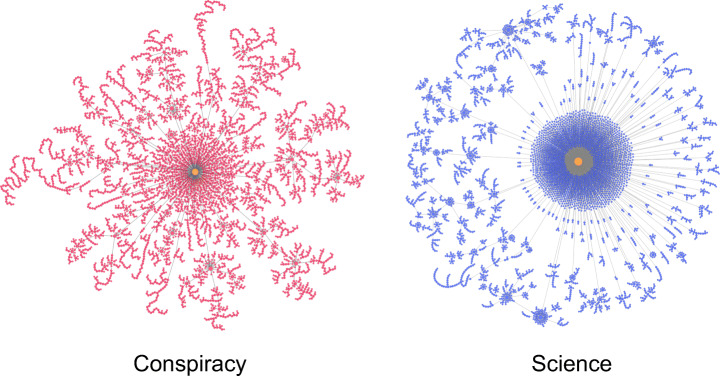

Figure 2 illustrates two examples of conspiracy and science cascades, where the source (i.e., the post) of each cascade is highlighted in orange and the edge indicates the comment path (e.g., means that B is a successor or child of A). Intuitively, conspiracy cascade tends to comprise a multi-step propagation process where any one node directly connects only a few others, while science cascade corresponds to a broadcast-dominated propagation process where a small set of nodes directly connects a large proportion of nodes. We will elaborate on this point in the next section.

Fig. 2.

Two cascade examples from conspiracy and science subreddits

Cascade dynamics

Primary indicators

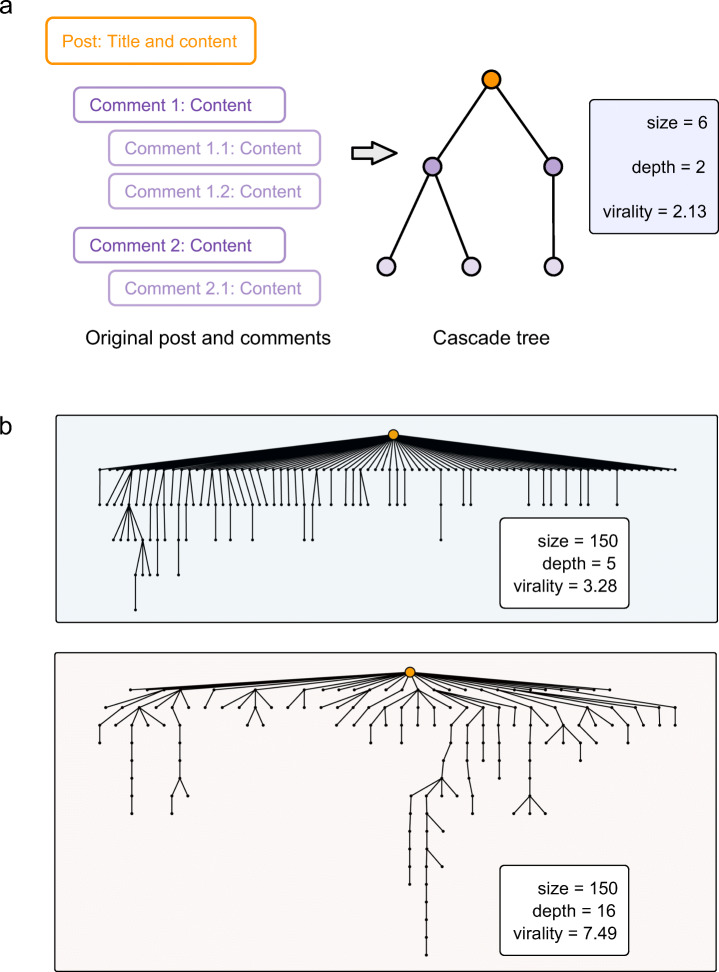

Figure 3a delineates the process of how a post (colored in orange) and the hierarchical comments (colored in purple) under it are transformed into a cascade tree, where the root of the tree is colored in orange. The primary indicators of the cascade tree that we are interested in are described as follows:

size: number of nodes in a cascade tree (including the root).

unique users: number of unique users involved in a cascade tree.

depth: maximum number of hops from the root of a cascade tree.

virality: the average path length between all pairs of nodes in a cascade tree (also known as “Wiener index” or “structural virality”) [14, 15].

lifetime: time span (in minutes) of the last comment under a post since the post is published.

burstiness: a measure which interpolates between comments that come in a periodic manner and those come in a bursty manner [16]. Formally, for a Poisson process, where each discrete signal records the moment when the event occurs, the interevent time, τ, is defined as the time difference between two consecutive events. The burstiness parameter is then defined as , where mτ is the mean of τ and στ is the standard deviation of τ.

Fig. 3.

Cascade tree. a Post and hierarchical comments to a cascade tree. b Two cascades with the same size but varying depth

These indicators form a set of crucial structural and temporal features that characterize a cascade. Note that, for cascade trees with only one node (i.e., the root), their depth, virality and lifetime are defined as 0.

According to the definition above, the example cascade in Fig. 3a has a size of 6, a depth of 2 and a virality measure of 2.13. Figure 3b further illustrates two example cascades from the data, which have the same size but varying depth and virality. The upper cascade comes from the science community while the lower cascade comes from the conspiracy community. Both of the cascade trees have 150 nodes, but most of the nodes are immediately or intermediately connected with the root (i.e., one or two hop away from the root) for the upper one while a large proportion of nodes are beyond one hop away from the root for the lower one. As expected, the virality of the lower cascade tree is much larger than that of the upper one (7.49 versus 3.28). In other words, the lower cascade is more viral than the upper cascade.

Cascade dynamics

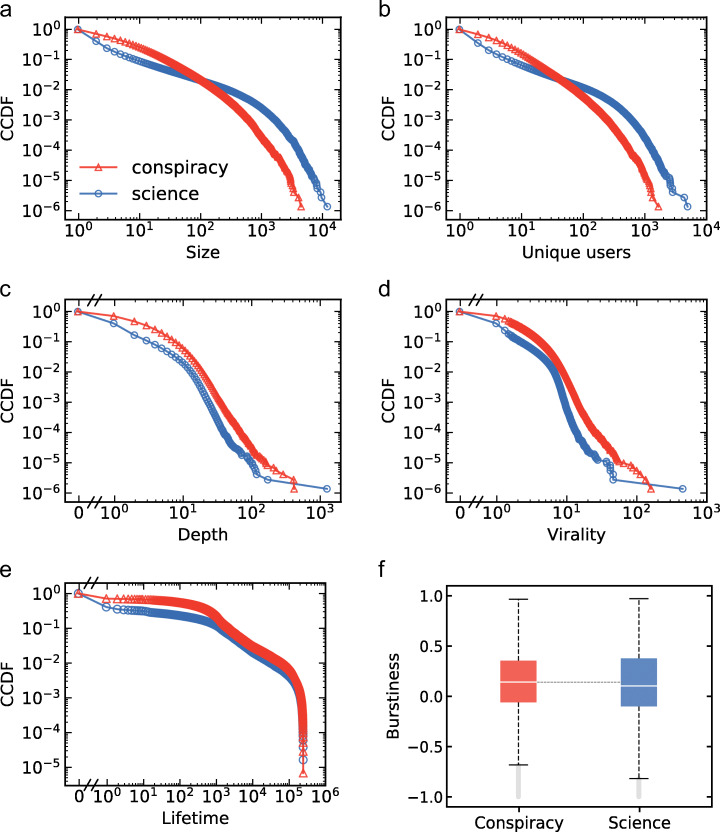

In light of the introduced structural and temporal indicators of a cascade, we analyze the dynamics of cascades induced by conspiracy and science information. To construct the cascade trees, we simply remove comments imposed by the Reddit bot–u/AutoModerator. Analysis reveals that, generally speaking, conspiracy information triggers larger cascades, gets more users involved, propagates ‘deeper’, persists longer, and is more viral and bursty than science information (Fig. 4a-f; for all Kolmogorov-Smirnov (K-S) tests;4 see also Table 2 in Appendix for details).

Fig. 4.

Cascade dynamics of conspiracy and science information. a CCDF (Complementary Cumulative Distribution Function) of cascade size.b CCDF of the number of unique users. c CCDF of cascade depth. d CCDF of virality. e CCDF of cascade lifetime (in minutes). f Burstiness of cascades

Table 2.

Data descriptions

| Subreddit | Count | Mean | Std | Min | 50% | Max |

|---|---|---|---|---|---|---|

| Cascade size (KS-test: D = 0.34323006611689794, ) | ||||||

| Conspiracy | 731882 | 0.776 | 0.487 | 1 | 3 | 4617 |

| Science | 734327 | 0.515 | 0.425 | 1 | 1 | 12412 |

| Unique users (KS-test: D = 0.3331162726782291, ) | ||||||

| Conspiracy | 731882 | 0.670 | 0.383 | 1 | 3 | 1698 |

| Science | 734327 | 0.469 | 0.348 | 1 | 1 | 5049 |

| Depth (KS-test: D = 0.3074821114225904, ) | ||||||

| Conspiracy | 731882 | 0.418 | 0.357 | 0 | 1 | 429 |

| Science | 734327 | 0.190 | 0.279 | 0 | 0 | 1293 |

| Virality (KS-test: D = 0.34323006611689794, ) | ||||||

| Conspiracy | 731882 | 0.369 | 0.285 | 0 | 1.333 | 163.156 |

| Science | 734327 | 0.176 | 0.239 | 0 | 0 | 462.819 |

| Lifetime (KS-test: D = 0.3767388086135375, ) | ||||||

| Conspiracy | 731882 | 1.872 | 1.410 | 0 | 189 | 259185 |

| Science | 734327 | 0.877 | 1.324 | 0 | 0 | 259195 |

| Burstiness (KS-test: D = 0.05736421439137, ) | ||||||

| Conspiracy | 364115 | 0.133 | 0.330 | -1.0 | 0.141 | 0.965 |

| Science | 133797 | 0.128 | 0.383 | -1.0 | 0.102 | 0.970 |

When calculating the mean values and standard deviations, quantities are log-transformed by log10(x + 1) (except for Burstiness) before the calculations

Specifically, nearly 60% of science posts receive no comment (i.e., cascade size is one), while only about 29% of conspiracy posts do so. More than 24% of conspiracy cascades grow into more than the size of 10, but only less than 9% of science cascades do so (Fig. 4a). However, we also find that only 0.035% of conspiracy cascades (roughly 1 out of every 3,000 cascades) have a size of more than 1000, while 0.264% of science cascades (roughly 1 out of every 400 cascades) do so (Fig. 4a). Similar patterns are also found in terms of number of unique users involved during the growth of cascades (Fig. 4b). Moreover, conspiracy information is found to propagate deeper (Fig. 4c) and be more viral (Fig. 4d) than science information. For example, more than 15% of conspiracy cascades go beyond a depth of 5, but less than 5% of science cascades do so. The majority of conspiracy cascades also have a longer lifetime than science cascades (Fig. 4e). We also find that conspiracy cascades are slightly more bursty than science cascades (Fig. 4f), suggesting that conspiracy cascades are formed in shorter timeframes than science cascades.

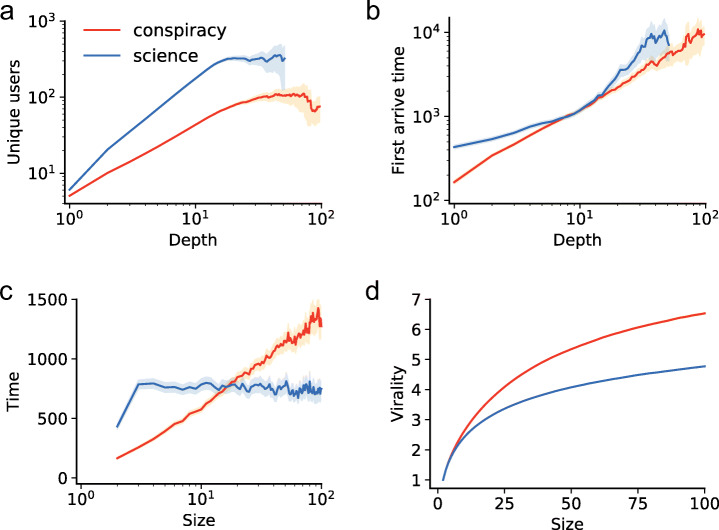

With a close look at the growth process of cascades, we find that when cascade depth is controlled, science cascades tend to reach more users than conspiracy cascades (Fig. 5a), which further corroborates that science cascades tend to grow in a breadth-first manner compared with conspiracy cascades. As expected, it takes less time for conspiracy cascades to reach the same depth as science cascades (Fig. 5b). When cascade size is controlled, we find mixed patterns of the dynamic growth of cascades: it takes conspiracy cascades less time to reach small sizes (e.g., size= 5) and much more time to reach relatively large sizes (e.g., size= 50) (Fig. 5c). More importantly, for the same cascade size, conspiracy cascades tend to be more viral than science cascades (Fig. 5d). That said, conspiracy cascades are more likely to grow in a multigenerational branching manner compared with science cascades.

Fig. 5.

Analysis of the growth of cascades. a Number of unique users reached at every depth. b-c The time (in minutes) it takes for conspiracy and science cascades to reach any b depth and c size. d The virality of cascades when conspiracy and science cascades reach any size. For ease of computation and visualization, cascade depth and size beyond 100 during the growth process are not shown. Shading areas indicate the 95% Confidence Intervals (CIs)

Content analysis

Contents embedded in the cascades are thought to provide additional ingredients to characterize the propagation of information. For instance, negative moods can stimulate online trolling behaviors [11]; emotional words are able to catalyze the diffusion of messages [8]; and political news is more likely to be retweeted than general news [48]. Here we probe into sentiments, emotions and topics conveyed in posts and comments of conspiracy and science cascades.

Sentiment and emotion analysis

To elicit the sentiments and emotions contained in posts and comments, we adopt a leading word-emotion association lexicon managed by National Research Council Canada (NRC) [32, 33]. This lexicon covers a manually annotated list of 14,182 words and their associations with two sentiments (positive and negative) and eight emotions (anger, anticipation, disgust, fear, joy, sadness, surprise and trust). Based on the lexicon, the distributions of each post and comment over the two sentimental and eight emotional dimensions are then calculated by the word frequency and further normalized by the content length. For example, if a ten-word post contains one positive word, three negative words, one word of anger, two words of fear and two words of trust, the acquired sentimental and emotional dictionaries are then depicted as {positive : 1/10,negative : 3/10} and {anger : 1/10,fear : 2/10,trust : 2/10}, respectively.

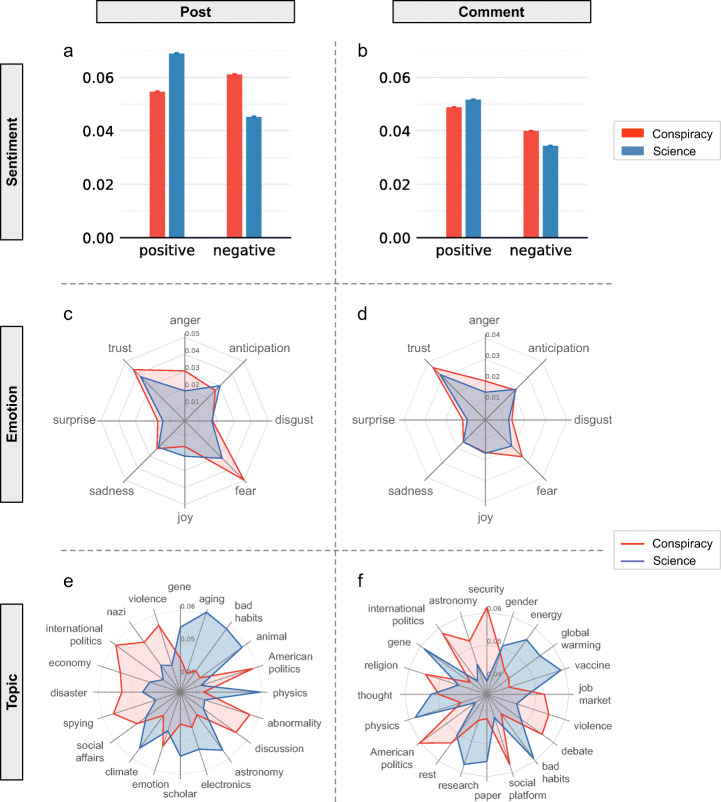

Sentiment

Figure 6a illustrates the sentiment distribution of conspiracy and science posts, and Fig. 6b shows the sentiment distribution of comments under these posts. As we can see, conspiracy posts contain far more negative words than science posts (K-S test = 0.165, ), where, on average, a 100-word conspiracy post contains 6.1 negative words while a 100-word science post contains only 4.5 negative words (Fig. 6a). As expected, conspiracy posts contain less positive words than science posts (K-S test = 0.077, ) (Fig. 6a). Similarly, conspiracy posts inspire more negative (K-S test = 0.054, ) and less positive (K-S test = 0.049, ) contents than science posts in their comments (Fig. 6b).

Fig. 6.

Sentiment, emotion and topic distributions. a-b Sentiment distributions in posts and comments. Error bars indicate standard error of the mean. c-d Emotion distributions in posts and comments. e-f Topic distributions in posts and comments

Emotion

The emotion distributions of posts and comments in conspiracy and science cascades over eight dimensions are shown in Fig. 6c and d. At the macro level, conspiracy cascades convey more emotional contents than science cascades in both posts and comments, despite the fact that the emotional gap (indicated by the difference of the shading areas) is larger in posts than in comments. At the micro level, conspiracy cascades consistently convey more emotional contents of anger, fear, disgust and surprise than science cascades in both posts and comments ( for all K-S tests). To our surprise, conspiracy cascades also contain more contents of trust than science cascades ( for all K-S tests). With a close look at the raw contents, we find that many conspiracy posts are apt to use phrases like “the truth about something” which appeal to the public and thereby would contribute to getting more traffics they need. In short, the higher level of emotions expressed in conspiracy posts and comments may inspire more people to fuel the circulation of conspiracy information than that of science information.

Topic analysis

To extract the topical concerns from contents, we adopt Latent Dirichlet Allocation (LDA) [6, 38], a widely used method for topic modeling in the literature [21, 35, 45, 48–50], in current study. We implement two topic models with both 20 topics by LDA, one for posts and another for comments. Note that, for ease of presentation we set the number of topics as 20, but other reasonable choices, such as 50 or 100, would present similar results. In practice, we first remove stop words, punctuations, URLs, and retain only top 10,000 words according to their tf-idf (term frequency-inverse document frequency) weights in posts or comments. The remaining corpora are then fed into LDA topic models. After that we obtain a probability distribution over 20 topics for each post or comment under the respective topic model.

Figure 6e and f illustrate the topical concerns of posts and comments in conspiracy and science cascades over 20 dimensions. As shown in the figure, conspiracy and science cascades occupy quite different topic spaces ( for all K-S tests in all paired comparisons between conspiracy and science cascades). For example, conspiracy posts are keen on topics like politics, disaster, violence and economy, while science posts mainly focus on socio-scientific related topics, such as gene, climate and physics (Fig. 6e). Similar patterns are also found in comments, as conspiracy comments are keen on topics like politics, security, violence and religion, while science comments still focus on socio-scientific related issues, including energy, global warming, vaccine and bad habits (Fig. 6f). The clear topic differences between conspiracy and science cascades also imply that topical concerns are of potential utility to distinguish conspiracy cascades from science ones.

Cascade classification

To validate the utility of the described features above, we consider a simple binary prediction task to determine whether a given post belongs to conspiracy or science category. We adopt Random Forest as the classifier due to its high performance in classification tasks. In practice, We use the implementation from the scikit-learn5 Python package with a forest of 200 trees.

Feature sets

There are mainly three kinds of features that we would like to consider:

dynamic: cascade dynamics, including cascade size, depth, virality, lifetime and number of unique users involved.

emotion: sentiments and emotions conveyed in the posts, including two sentiments (positive and negative) and eight emotions (anger, anticipation, disgust, fear, joy, sadness, surprise and trust).

topic: topics embedded in the posts over 20 dimensions.

For the three sets of features, there are seven combinations of them in total. For example, topic indicates that only topic features are used for the classification task, while all/topic indicates that all sets of features, except for topic features, are used for classification.

Classification results

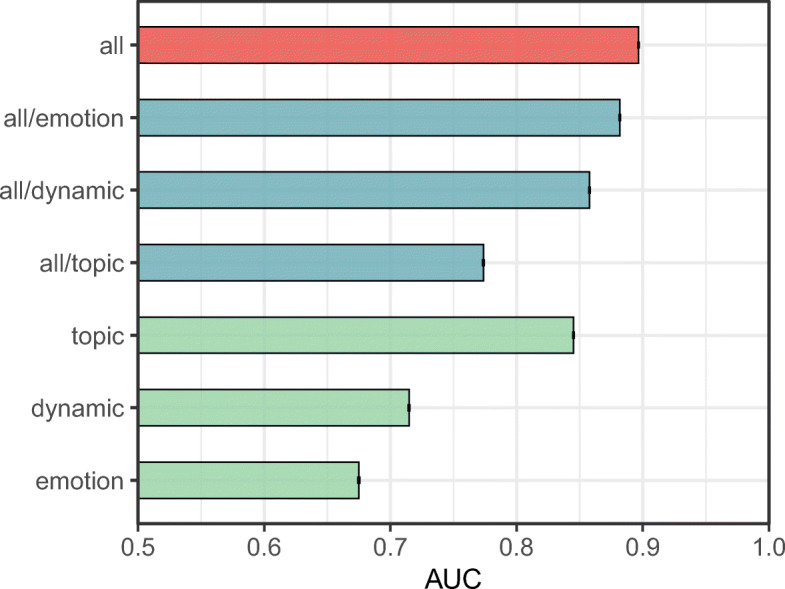

For the classification task, there are 731,882 conspiracy and 734,327 science posts in total. We randomly split the dataset into training (80%) and test (20%) sets. The random forest classifier is first trained on the training set and then tested on the test set. Figure 7 presents the prediction performance in terms of the AUC (area under the curve) score on the test set using any combination of feature sets as the predictors in ten random trials. Note that the baseline–random guessing–would obtain an AUC score of 0.5 in our setting. As such, our approach achieves very strong performance, with an AUC score of nearly 0.9 using all three sets of features.

Fig. 7.

Classification performance using the obtained features. Error bars indicate the standard errors of the average AUCs obtained from ten random training-test dataset splits

As we can see from the figure, although each feature set alone significantly outperforms random guessing in the prediction task, it’s the topic features that provide the most prominent predictive power (AUC= 0.845), followed by dynamic features (AUC= 0.715) and emotion features (AUC= 0.675). In addition, the combination of topic features with dynamic features achieves an AUC score of 0.882, and the combination of topic features with emotion features achieves an AUC score of 0.858. However, the combination of dynamic features with emotion features only achieves an AUC score of 0.774, which is even worse than the performance of topic features alone. This also implies that topical concerns could be the primary factors that discriminate conspiracy information from science information. Taken together, the classification results further favor the effectiveness of the proposed cascade features in depicting the differences between conspiracy and science narratives.

Community participation

In this section, we examine the engagement of users during the propagation of conspiracy and science narratives. We find that conspiracy cascades are less likely to be concentrated on focal users compared with science cascades.

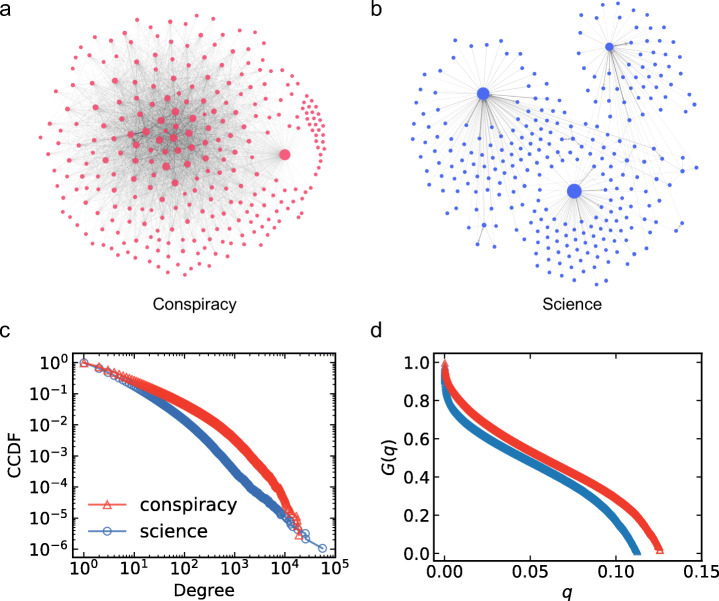

To do so, we first construct two comment networks–one for conspiracy community and another for science community–where if user A leaves a comment to user B, there will be a directed link from B to A. In practice, users whom are hard to be identified (e.g., users denoted as ‘deleted’) are neglected during the construction of comment networks. Figure 8a and b illustrate two subnetworks that are randomly drawn from conspiracy and science comment networks, respectively. Intuitively, conspiracy comment network is more densely connected than science comment network. The degree (note that degree equals to the sum of indegree and outdegree for nodes in directed networks) distributions of nodes in conspiracy and science comment networks are shown in Fig. 8c. As shown in the figure, nodes in the conspiracy comment network tend to have higher degrees than nodes in the science comment network, suggesting that users engaged in the circulation of conspiracy cascades are likely to interact with more other users than those of users in science cascades. More detailed descriptions about conspiracy and science comment networks can be found in Table 1.

Fig. 8.

Comment network. a Subnetwork extracted from conspiracy comment network. b Subnetwork extracted from science comment network. c Degree distributions of comment networks. d Relative size G(q) of the giant connected component as a function of the fraction of removed nodes q in comment networks

Table 1.

Comment network description

| Subreddit | #nodes | #edges | < k > | ||

|---|---|---|---|---|---|

| Conspiracy | 344,311 | 5,120,940 | 29.746 | 10,304 | 16,290 |

| Science | 922,189 | 4,754,843 | 10.312 | 7,689 | 55,491 |

To investigate to what extent the comment network is concentrated and the possible strategies for mitigating the propagation process, we implement a simple percolation process [9] on conspiracy and science comment networks respectively, where node with the largest degree is removed at each step until the network is disconnected or no large component exists. Figure 8d illustrates the relative size of the giant connected component (measured by size of the giant connected component divided by the original network size) as nodes are removed step by step during the percolation process. After the removal of less than 15% of nodes, both conspiracy and science comment networks are collapsed as the relative size of the giant connected component is less than 0.01 compared with the original network size. However, science comment network is much more fragmented than conspiracy comment network for the same fraction of node removal, which indicates that user engagement in science cascades is more concentrated than that of conspiracy cascades. In other words, conspiracy cascades are less likely to be driven by a few focal users compared with science cascades. This also hints that to prevent the circulation of conspiracy narratives on the platform, simply blocking some focal users or a small set of influencers may be less effective than the same procedure implemented for science narratives.

Political orientation

Political affinity is thought to play a role in the circulation of misinformation as people of different political orientations tend to consume specific kinds of misinformation [1, 7, 20, 23]. Here we examine whether the political orientation of the information source matters in the circulation of conspiracy narratives.

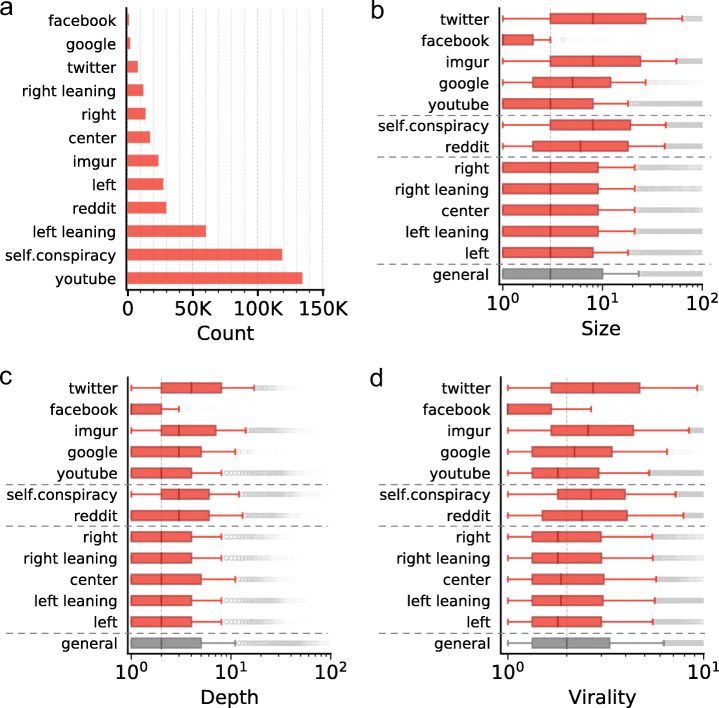

Most conspiracy posts contain url links in their contents, providing a feasible way to track where the information comes from. Based on the affiliated url links (if any) and a classification of media outlets curated by a fact-checking organization,6 we are able to assign posts to different political subgroups. An example list of media outlets and their political orientations is given in Table 3 in Appendix. Specifically, the information sources are sorted into several subgroups in three main categories: (i) social media sites, including Twitter, Facebook, Imgur, Google and Youtube; (ii) media outlets, including media outlets with left (e.g., MSNBC), left leaning (e.g., the New York Times), center (e.g., Reuters), right leaning (e.g., the Wall Street Journal) and right (e.g., Fox News) political orientations; (iii) homegrown news, such as news without a url or from other subreddits or communties in Reddit.

Table 3.

Media outlets and their political orientations

| Domain | N | Domain | N | Domain | N | Domain | N |

|---|---|---|---|---|---|---|---|

| Left | Left leaning | Right leaning | Right | ||||

| huffingtonpost.com | 2,577 | theguardian.com | 5,178 | telegraph.co.uk | 2,031 | breitbart.com | 2,341 |

| cnn.com | 2,175 | nytimes.com | 4,117 | wikileaks.org | 1,393 | foxnews.com | 2,038 |

| alternet.org | 1,684 | washingtonpost.com | 3,977 | nypost.com | 1,008 | dailycaller.com | 1,379 |

| rawstory.com | 1,391 | independent.co.uk | 2,519 | washingtontimes.com | 988 | wnd.com | 821 |

| salon.com | 1,329 | news.yahoo.com | 1,849 | russia-insider.com | 885 | washingtonexaminer.com | 702 |

| wsws.org | 1,303 | businessinsider.com | 1,839 | forbes.com | 770 | express.co.uk | 585 |

| commondreams.org | 1,225 | bloomberg.com | 1,676 | reason.com | 492 | theblaze.com | 468 |

| counterpunch.org | 1,122 | thehill.com | 1,615 | news.antiwar.com | 452 | thenewamerican.com | 467 |

| thedailybeast.com | 1,117 | bbc.co.uk | 1,597 | wsj.com | 425 | freebeacon.com | 459 |

| democracynow.org | 988 | bbc.com | 1,485 | original.antiwar.com | 386 | thesun.co.uk | 309 |

We show the top 10 domain names under each category and the corresponding number (N) of posts found in the data. For space constrains, only categories of left, left leaning, right leaning and right are shown

We restrict our analysis to conspiracy posts that either contain a url pointing to domains of the defined subgroups or have no url in the content. Figure 9a illustrates the amounts of posts from different subgroups, where self.conspiracy indicates posts without any url inside. We can see that left and left leaning media outlets are more prevalent than right and right leaning media outlets in the conspiracy community. We further compare the cascade size, depth and virality for different subgroups (Fig. 9b-d). For ease of visualization, only cascades whose depth and virality are greater than or equal to 1 are considered in the analysis of cascade depth and virality (Fig. 9c-d), but this doesn’t alter the results as the conclusions still hold true even after all cascades are included in the analysis. For ease of comparison, we also show the corresponding quantities for the general case, where cascade size, depth and virality for the complete conspiracy cascades are shown in grey and denoted as general in Fig. 9b-d.

Fig. 9.

Political orientation. a Cascade count by subgroups. b Cascade size by subgroups. c Cascade depth by subgroups. d Cascade virality by subgroups. We show the box plot of cascade size, depth and virality in b-d, where the line inside each box indicates the median value and the grey circles indicate the outliers of the corresponding data. For ease of comparison, cascade size, depth and virality of all conspiracy cascades are shown in grey (denoted as general), and the median values are shown in vertical dashed lines in b-d

As shown in Fig. 9b, the size of cascades from different political subgroups, such as left, left leaning, right and right leaning, is nearly the same with the general case, indicating that political orientation has very few effects in driving the ultimate cascade size. Instead, we find that posts from online social media sites like Twitter and Imgur trigger larger cascades than the general, with the exceptions of Facebook and Youtube. Similar patterns are also found in terms of cascade depth (Fig. 9c). We also find that cascades from different political subgroups are slightly less viral than the general case, but cascades from online social media sites are generally more viral than other cascades (Fig. 9d).

Taken together, we find very little evidence that political orientation of the information source contributes to the circulation of conspiracy narratives in current study. Instead, we find that posts from social media sites with unclear political orientations generally trigger larger cascades and are more viral than the general.

Discussion

The over-proliferation of misinformation online could spark “digital wildfires” in our hyperconnected world [24] and has triggered heated public debate in the aftermath of the 2016 US presidential election [1, 7, 20]. Our study presents a large-scale quantitative analysis toward the understanding of the formation and growth of discussion cascades in online communities. Specifically, we analyze the cascade dynamics and contents of two distinct narratives: conspiracy theories, whose claims are generally unsubstantiated, and scientific information, whose origins are largely verifiable. The results reveal systematic differences between conspiracy and science narratives in terms of cascade dynamics and contents. Through a serial of classification tasks, we show that topic concerns act as the primary factors that discriminate conspiracy narratives from science ones, followed by dynamic and emotion features. After the implementation of a simple percolation process on comment networks, we find that the circulation of scientific information, rather than conspiracy theories, relies more on focal users. With a close look at the origins of conspiracy narratives, we also find that, generally speaking, posts from social media sites with unclear political orientations, instead of posts from left- or right-wing media outlets, trigger larger cascades and are more viral than the general.

Our study has practical implications for current social media and misinformation research. First, as unsubstantiated news tends to focus on political and violent topics, it’s anticipated that future regulations on misinformation should pay close attention to such areas. Second, as we have shown in the main text, conspiracy narratives are less likely to be driven by focal users compared with scientific information, implying that, to prevent the dissemination of misinformation, simply removing or blocking some participants during the diffusion process may be less effective than expected. Third, as news from social media sites generally triggers larger cascades and is more viral than the general, it’s worth pointing out that social media sites could be important sources of misinformation, thereby highlighting the urgent needs of proper regulations to suppress or mitigate the fabrication and dissemination of misinformation on social media sites.

The present work has several limitations as well. Our results are the outputs of one study conducted on two representative communities from Reddit, but additional studies are urgently needed to validate and generalize our findings in other kinds of online communities or social domains (such as [12, 48]). Our study is based on descriptive and statistical analysis of observational data, but confounds in the data may more or less skew the results. For instance, norms and incentives for how posts are submitted in these communities may be different, which could also influence how information propagates in specific communities. As such, future studies may follow rigorous causal inference approaches [4] (e.g., controlled experiments) to probe the social factors that catalyze the diffusion of misinformation and elicit causal effects of misinformation on various social outcomes like distress and elections. In this comparative study, we have focused on the possibility of verification–instead of the quality–of conspiracy and science information. Future studies may take the quality of information source into consideration. In addition, current study doesn’t completely rule out the the role that social bots play in the circulation of information. But to what extent is social diffusion vulnerable to social bots is still an open and important question, and needs more comprehensive explorations and discussions in the future.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61773255 and 61873167), Hong Kong RGC (GRF 11505119) and City University of Hong Kong (CCR 9360120 and HKIDS 9360163). The authors would like to thank Tai-Quan “Winson” Peng for critical reading of the early draft.

Appendix

Footnotes

The raw data we used in this study are acquired from and publicly available at https://files.pushshift.io/reddit.

Kolmogorov-Smirnov test is abbreviated as K-S test hereafter.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Yafei Zhang, Email: yflyzhang@gmail.com.

Lin Wang, Email: wanglin@sjtu.edu.cn.

Jonathan J. H. Zhu, Email: j.zhu@cityu.edu.hk

Xiaofan Wang, Email: xfwang@sjtu.edu.cn.

References

- 1.Allcott H, Gentzkow M. Social media and fake news in the 2016 election. J. Econ. Perspect. 2017;31(2):211–36. doi: 10.1257/jep.31.2.211. [DOI] [Google Scholar]

- 2.Almaatouq A, Shmueli E, Nouh M, Alabdulkareem A, Singh VK, Alsaleh M, Alarifi A, Alfaris A, et al. If it looks like a spammer and behaves like a spammer, it must be a spammer: analysis and detection of microblogging spam accounts. Int. J. Inf. Secur. 2016;15(5):475–491. doi: 10.1007/s10207-016-0321-5. [DOI] [Google Scholar]

- 3.Anderson, A., Huttenlocher, D., Kleinberg, J., Leskovec, J., Tiwari, M.: Global diffusion via cascading invitations: Structure, growth, and homophily. In: Proceedings of the 24th International Conference on World Wide Web. ACM, pp. 66–76 (2015)

- 4.Aral S, Eckles D. Protecting elections from social media manipulation. Science. 2019;365(6456):858–861. doi: 10.1126/science.aaw8243. [DOI] [PubMed] [Google Scholar]

- 5.Bessi A, Coletto M, Davidescu GA, Scala A, Caldarelli G, Quattrociocchi W. Science vs conspiracy: Collective narratives in the age of misinformation. PloS ONE. 2015;10(2):e0118093. doi: 10.1371/journal.pone.0118093. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Blei DM, Ng AY, Jordan MI. Latent dirichlet allocation. J. Mach. Learn. Res. 2003;3(Jan):993–1022. [Google Scholar]

- 7.Bovet A, Makse HA. Influence of fake news in Twitter during the 2016 US presidential election. Nat. Commun. 2019;10(1):7. doi: 10.1038/s41467-018-07761-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Brady WJ, Wills JA, Jost JT, Tucker JA, Van Bavel JJ. Emotion shapes the diffusion of moralized content in social networks. Proc. Natl. Acad. Sci. 2017;114(28):7313–7318. doi: 10.1073/pnas.1618923114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Callaway DS, Newman ME, Strogatz SH, Watts DJ. Network robustness and fragility: Percolation on random graphs. Phys. Rev. Lett. 2000;85(25):5468. doi: 10.1103/PhysRevLett.85.5468. [DOI] [PubMed] [Google Scholar]

- 10.Cheng, J., Adamic, L., Dow, P.A., Kleinberg, J.M., Leskovec, J.: Can cascades be predicted?. In: Proceedings of the 23rd International Conference on World Wide Web. ACM, pp 925–936 (2014)

- 11.Cheng, J., Bernstein, M., Danescu-Niculescu-Mizil, C., Leskovec, J.: Anyone can become a troll: Causes of trolling behavior in online discussions. In: Proceedings of the 2017 ACM conference on Computer Supported Cooperative Work & Social Computing. ACM, pp 1217–1230 (2017) [DOI] [PMC free article] [PubMed]

- 12.Del Vicario M, Bessi A, Zollo F, Petroni F, Scala A, Caldarelli G, Stanley HE, Quattrociocchi W. The spreading of misinformation online. Proc. Natl. Acad. Sci. 2016;113(3):554–559. doi: 10.1073/pnas.1517441113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Friggeri, A., Adamic, L., Eckles, D., Cheng, J.: Rumor cascades. In: Proceedings of the International AAAI Conference on Web and Social Media. Association for the Advancement of Artificial Intelligence, vol. 8, pp 101–110 (2014)

- 14.Goel S, Anderson A, Hofman J, Watts DJ. The structural virality of online diffusion. Manag. Sci. 2015;62(1):180–196. [Google Scholar]

- 15.Goel, S., Watts, D.J., Goldstein, D.G.: The structure of online diffusion networks. In: Proceedings of the 13th ACM conference on Electronic Commerce. ACM, pp 623–638 (2012)

- 16.Goh KI, Barabási AL. Burstiness and memory in complex systems. EPL Europhys. Lett. 2008;81(4):48002. doi: 10.1209/0295-5075/81/48002. [DOI] [Google Scholar]

- 17.Gómez, V., Kappen, H.J., Kaltenbrunner, A.: Modeling the structure and evolution of discussion cascades. In: Proceedings of the 22nd ACM conference on Hypertext and Hypermedia, pp 181–190 (2011)

- 18.Gómez V, Kappen HJ, Litvak N, Kaltenbrunner A. A likelihood-based framework for the analysis of discussion threads. World Wide Web. 2013;16(5-6):645–675. doi: 10.1007/s11280-012-0162-8. [DOI] [Google Scholar]

- 19.Gottfried, J., Shearer, E.: Americans’ online news use is closing in on TV news use, Pew Res. Cent. https://www.pewresearch.org/fact-tank/2017/09/07/americans-online-news-use-vs-tv-news-use/ (2017)

- 20.Grinberg N, Joseph K, Friedland L, Swire-Thompson B, Lazer D. Fake news on Twitter during the 2016 US presidential election. Science. 2019;363(6425):374–378. doi: 10.1126/science.aau2706. [DOI] [PubMed] [Google Scholar]

- 21.Guan, L., Zhang, Y., Zhu, J.: Segmenting and characterizing adopters of e-books and paper books based on Amazon book reviews. In: Chinese National Conference on Social Media Processing. Springer, pp 85–97 (2016)

- 22.Guess A, Nagler J, Tucker J. Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Sci. Adv. 2019;5(1):eaau4586. doi: 10.1126/sciadv.aau4586. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Guess A, Nyhan B, Reifler J. Selective exposure to misinformation: Evidence from the consumption of fake news during the 2016 US presidential campaign. Eur. Res. Counc. 2018;9(3):4. [Google Scholar]

- 24.Howell L, et al. Digital wildfires in a hyperconnected world. World Econ. Forum Rep. 2013;3:15–94. [Google Scholar]

- 25.Huang, Y.L., Starbird, K., Orand, M., Stanek, S.A., Pedersen, H.T.: Connected through crisis: Emotional proximity and the spread of misinformation online. In: Proceedings of the 18th ACM conference on Computer Supported Cooperative Work & Social Computing. ACM, pp 969–980 (2015)

- 26.Jones NM, Thompson RR, Schetter CD, Silver RC. Distress and rumor exposure on social media during a campus lockdown. Proc. Natl. Acad. Sci. 2017;114(44):11,663–11,668. doi: 10.1073/pnas.1708518114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Kumar, R., Mahdian, M., McGlohon, M.: Dynamics of conversations. In: Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 553–562 (2010)

- 28.Lazer DM, Baum MA, Benkler Y, Berinsky AJ, Greenhill KM, Menczer F, Metzger MJ, Nyhan B, Pennycook G, Rothschild D, et al. The science of fake news. Science. 2018;359(6380):1094–1096. doi: 10.1126/science.aao2998. [DOI] [PubMed] [Google Scholar]

- 29.Liang H. Broadcast versus viral spreading: the structure of diffusion cascades and selective sharing on social media. J. Commun. 2018;68(3):525–546. doi: 10.1093/joc/jqy006. [DOI] [Google Scholar]

- 30.Medvedev AN, Delvenne JC, Lambiotte R. Modelling structure and predicting dynamics of discussion threads in online boards. J. Compl. Netw. 2019;7(1):67–82. doi: 10.1093/comnet/cny010. [DOI] [Google Scholar]

- 31.Mocanu D, Rossi L, Zhang Q, Karsai M, Quattrociocchi W. Collective attention in the age of (mis) information. Comput. Hum. Behav. 2015;51:1198–1204. doi: 10.1016/j.chb.2015.01.024. [DOI] [Google Scholar]

- 32.Mohammad, S.M., Turney, P.D.: Emotions evoked by common words and phrases: Using mechanical turk to create an emotion lexicon. In: Proceedings of the NAACL HLT 2010 workshop on computational approaches to analysis and generation of emotion in text. Association for Computational Linguistics, pp 26–34 (2010)

- 33.Mohammad SM, Turney PD. Crowdsourcing a word–emotion association lexicon. Comput. Intell. 2013;29(3):436–465. doi: 10.1111/j.1467-8640.2012.00460.x. [DOI] [Google Scholar]

- 34.Newman, N., Fletcher, R., Kalogeropoulos, A., Levy, D., Nielsen, R.K: Reuters institute digital news report, pp 2017 (2017)

- 35.Park PS, Blumenstock JE, W. MM. The strength of long-range ties in population-scale social networks. Science. 2018;362(6421):1410–1413. doi: 10.1126/science.aau9735. [DOI] [PubMed] [Google Scholar]

- 36.Pei S, Muchnik L, Tang S, Zheng Z, Makse HA. Exploring the complex pattern of information spreading in online blog communities. PloS ONE. 2015;10(5):e0126,894. doi: 10.1371/journal.pone.0126894. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Pennycook G, Rand DG. Fighting misinformation on social media using crowdsourced judgments of news source quality. Proc. Natl. Acad. Sci. 2019;116(7):2521–2526. doi: 10.1073/pnas.1806781116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Phan, X.H., Nguyen, C.T.: GibbsLDA++: A C/C++ implementation of Latent Dirichlet Allocation (LDA). Tech Rep (2007)

- 39.Qazvinian, V., Rosengren, E., Radev, D.R., Mei, Q.: Rumor has it: Identifying misinformation in microblogs. In: Proceedings of the conference on Empirical Methods in Natural Language Processing. Association for Computational Linguistics, pp 1589–1599 (2011)

- 40.Qiu, J., Li, Y., Tang, J., Lu, Z., Ye, H., Chen, B., Yang, Q., Hopcroft, J.E.: The lifecycle and cascade of Wechat social messaging groups. In: Proceedings of the 25th International Conference on World Wide Web. ACM, pp 311–320 (2016)

- 41.Romero, D.M., Uzzi, B., Kleinberg, J.: Social networks under stress. In: Proceedings of the 25th International Conference on World Wide Web. ACM, pp 9–20 (2016)

- 42.Ruths D. The misinformation machine. Science. 2019;363(6425):348–348. doi: 10.1126/science.aaw1315. [DOI] [PubMed] [Google Scholar]

- 43.Shao C, Ciampaglia GL, Varol O, Yang KC, Flammini A, Menczer F. The spread of low-credibility content by social bots. Nat. Commun. 2018;9(1):4787. doi: 10.1038/s41467-018-06930-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Shu K, Sliva A, Wang S, Tang J, Liu H. Fake news detection on social media: A data mining perspective. ACM SIGKDD Explor. Newsl. 2017;19(1):22–36. doi: 10.1145/3137597.3137600. [DOI] [Google Scholar]

- 45.Singer, P., Lemmerich, F., West, R., Zia, L., Wulczyn, E., Strohmaier, M., Leskovec, J.: Why we read Wikipedia. In: Proceedings of the 26th International Conference on World Wide Web. ACM, pp 1591–1600 (2017)

- 46.Starbird, K., Maddock, J., Orand, M., Achterman, P., Mason, R.M.: Rumors, falseflags, and digital vigilantes: Misinformation on Twitter after the 2013 Boston Marathonbombing. In: iConference 2014 Proceedings, pp. 654–662. iSchools (2014)

- 47.Stella M, Ferrara E, De Domenico M. Bots increase exposure to negative and inflammatory content in online social systems. Proc. Natl. Acad. Sci. 2018;115(49):12,435–12,440. doi: 10.1073/pnas.1803470115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Vosoughi S, Roy D, Aral S. The spread of true and false news online. Science. 2018;359(6380):1146–1151. doi: 10.1126/science.aap9559. [DOI] [PubMed] [Google Scholar]

- 49.Way, S.F., Larremore, D.B., Clauset, A.: Gender, productivity, and prestige in computer science faculty hiring networks. In: Proceedings of the 25th International Conference on World Wide Web. ACM, pp. 1169–1179 (2016)

- 50.Zhang, Y., Guan, L., Chen, H., Zhu, J.: Using text mining to measure diffusion of innovation. In: The 67th Annual Conference of the International Communication Association: Interventions: Communication Research and Practice (2017)