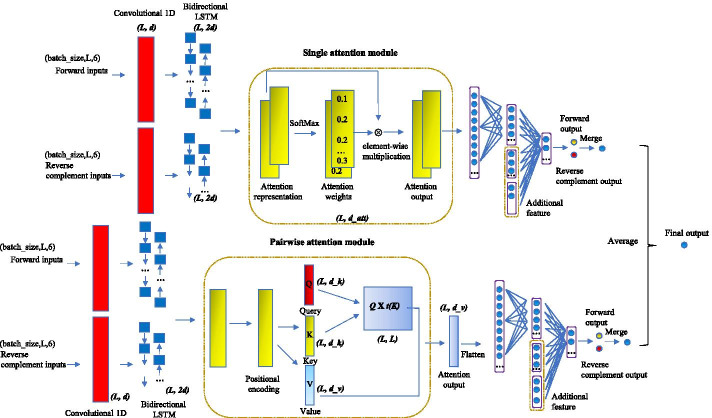

Fig. 1.

General framework of the two attention modules of DeepGRN, shown as a diagram of the deep neural network architecture. Convolutional and bidirectional LSTM layers take both forward and reverse-complement features as inputs. In the single attention module, attention weights are computed from the hidden outputs of the LSTM and are used to generate the weighted representation through element-wise multiplication. In the pairwise attention module, three components, Q (query), K (key), and V (value), are computed from the LSTM output. The product of Q and the transpose of K is used to calculate the attention weights for each position of V, and the product of V and these attention scores is the output of the pairwise attention module. Outputs from the attention layers are flattened and fused with non-sequential features (genomic annotation and gene expression). The final score is computed through dense layers with sigmoid activation, followed by merging of the forward and reverse-complement inputs. The dimensions of each layer are shown beside each component.
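
The two attention modules described in the caption can be illustrated with a minimal NumPy sketch. This is not the DeepGRN implementation. The function names, the scoring vector `w`, the projection matrices `w_q`, `w_k`, `w_v`, the softmax normalization, and the scaling by the square root of the key dimension are all assumptions made for illustration; the caption only states that weights are computed from the LSTM output and applied by multiplication.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def single_attention(h, w):
    """Single attention over LSTM hidden outputs.

    h: (T, d) hidden outputs, one row per sequence position.
    w: (d,) hypothetical scoring vector (assumption, not from the paper).
    Returns the reweighted representation (T, d) and the weights (T,).
    """
    scores = h @ w                    # one scalar score per position
    weights = softmax(scores)         # assumed normalization over positions
    weighted = h * weights[:, None]   # element-wise multiplication
    return weighted, weights


def pairwise_attention(h, w_q, w_k, w_v):
    """Pairwise attention built from Q, K, and V.

    h: (T, d) hidden outputs; w_q, w_k, w_v: (d, d_a) hypothetical projections.
    Returns the attended output (T, d_a) and the score matrix (T, T).
    """
    q, k, v = h @ w_q, h @ w_k, h @ w_v
    # Product of Q and the transpose of K; scaling and softmax are assumptions.
    scores = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)
    return scores @ v, scores         # product of attention scores and V


# Toy usage with random values standing in for LSTM outputs.
rng = np.random.default_rng(0)
h = rng.normal(size=(5, 4))           # T = 5 positions, d = 4 hidden units
single_out, single_w = single_attention(h, rng.normal(size=4))
pair_out, pair_scores = pairwise_attention(
    h, *(rng.normal(size=(4, 3)) for _ in range(3))
)
flat = np.concatenate([single_out.ravel(), pair_out.ravel()])  # flattened outputs
```

In this sketch the single attention module yields one weight per sequence position, whereas the pairwise module yields a T × T matrix relating every position to every other position. The flattened vector `flat` only illustrates the flattening step and does not imply that DeepGRN combines both modules in one model; in the figure, the flattened attention output is fused with the non-sequential features before the dense layers.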