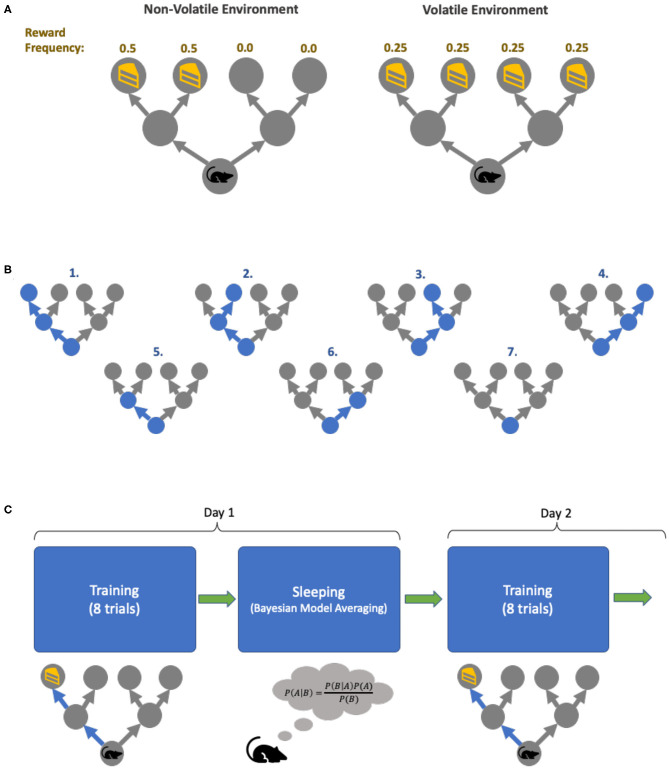

Figure 3.

Simulation task set-up. (A) The two environments in which the agents are trained. In the non-volatile environment (left), the reward always appears at a final location to the left of the initial location, with each of these locations rewarded equally often. In the volatile environment (right), the reward appears at all four final locations with equal frequency. (B) The agent's policies. In our simulation, each agent has seven policies it can pursue: policies 1–4 take the agent to one of the four final locations, policies 5–6 take the agent to one of the two intermediate locations and keep it there, and policy 7 keeps the agent at its initial location for the entire trial. (C) The training cycle. Each day, each agent is trained for 8 trials in its respective environment; between days, the agent goes to “sleep” and performs Bayesian model averaging to find better policy concentrations. This process is repeated for 32 days.
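The set-up in panels A–C can be summarized in a short Python sketch. It encodes only what the caption states: the reward distribution over the four final locations in each environment, where each of the seven policies ends a trial, and the 32-day schedule of 8 trials per day with sleep between days. The location names, the mapping of policies 1–4 to particular final locations, and the even split of reward between the two left-hand final locations are assumptions made for illustration. The sleep step is only a marker, because the caption does not specify the Bayesian model averaging update.

```python
N_DAYS = 32
TRIALS_PER_DAY = 8

# Hypothetical location labels: four final locations (assumed two per side),
# two intermediate locations, and the initial location.
FINALS = ["left-1", "left-2", "right-1", "right-2"]
INTERMEDIATES = ["left-mid", "right-mid"]
INITIAL = "start"

# Probability that the reward appears at each final location.
# Assumption: the non-volatile environment splits reward evenly
# between the two left-hand final locations.
REWARD_PROBS = {
    "non-volatile": {"left-1": 0.5, "left-2": 0.5, "right-1": 0.0, "right-2": 0.0},
    "volatile": {loc: 0.25 for loc in FINALS},
}

# Policies 1-7, identified by where the agent ends the trial:
# 1-4 go to a final location, 5-6 stay at an intermediate location,
# and 7 never leaves the initial location.
POLICY_END = {i + 1: loc for i, loc in enumerate(FINALS + INTERMEDIATES + [INITIAL])}


def expected_reward(env: str, policy: int) -> float:
    """Chance that the policy's end location holds the reward in `env`.

    Non-final end locations (policies 5-7) never hold the reward.
    """
    return REWARD_PROBS[env].get(POLICY_END[policy], 0.0)


def schedule(n_days: int = N_DAYS, trials_per_day: int = TRIALS_PER_DAY):
    """Yield ("trial", day, trial) events, with a ("sleep", day, None) marker
    between consecutive days (read literally from "in between days")."""
    for day in range(1, n_days + 1):
        for trial in range(1, trials_per_day + 1):
            yield ("trial", day, trial)
        if day < n_days:
            # Placeholder: the sleep-phase Bayesian model averaging over
            # policy concentrations would run here.
            yield ("sleep", day, None)


for p in range(1, 8):
    print(p, POLICY_END[p], expected_reward("non-volatile", p), expected_reward("volatile", p))
```

Under these assumptions, an agent in the non-volatile environment can earn reward only through the two left-hand policies, whereas in the volatile environment every final-location policy is rewarded equally often, which is the contrast panel A is meant to set up.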