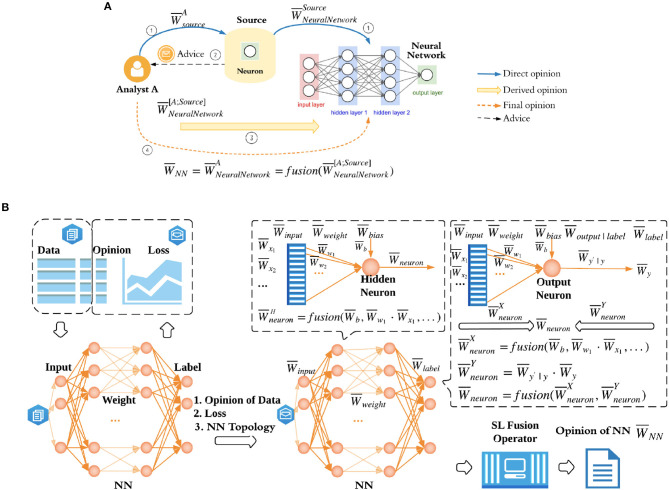

Figure 1.

DeepTrust: subjective trust network formulation for multi-layered NNs and NN opinion evaluation. (A) Subjective trust network formulation for a multi-layered NN. To quantify the opinion of a network, i.e., a human observer's opinion of a particular neural network, the observer acts as an analyst and relies on sources, in this case the neurons in the network, which hold direct opinions of the neural network. The two inputs are analyst A's opinion of a source and that source's opinion of the neural network; the derived opinion of the neural network is then calculated from these two opinions. (B) NN opinion evaluation. A dataset in DeepTrust contains Data, i.e., features and labels as in a normal dataset, together with an Opinion on each data point. If a data point does not convey information the way other data points do, for example because one of its features is noisy or its label is vague, we consider it uncertain, and it therefore introduces uncertainty into the dataset. Rather than assigning separate opinions to each feature and label, we assign a single opinion to the whole data point; the reason is described in Section 4.3. Given the NN topology, the opinion of the data, and the training loss, DeepTrust can calculate the trust of the NN. Note that the trust of hidden neurons and the trust of output neurons are quantified differently, as shown in this figure. Each neuron in the output layer is a source that provides advice to the analyst, so that the analyst can derive its own opinion of the NN. Opinion notation is abbreviated in the figure for simplicity. The detailed computation and explanation are given in Section 4. After the trust of all output neurons is evaluated, the SL fusion operator described in Section 3.4 is used to generate the final opinion of the neural network.
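As a rough illustration of the quantities named in this caption, the following Python sketch models a binomial subjective logic opinion, a data point that carries a single opinion, and the two steps of deriving and fusing opinions. It is not the DeepTrust implementation. The names `Opinion`, `DataPoint`, `discount`, and `average_fuse` are hypothetical, and the sketch assumes probability-sensitive trust discounting and averaging fusion as commonly defined in the subjective logic literature; the operators actually used in Sections 3.4 and 4 may differ.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Opinion:
    """Binomial opinion: belief + disbelief + uncertainty must equal 1."""
    belief: float
    disbelief: float
    uncertainty: float
    base_rate: float = 0.5  # assumed default prior

    def __post_init__(self) -> None:
        total = self.belief + self.disbelief + self.uncertainty
        if abs(total - 1.0) > 1e-9:
            raise ValueError("belief + disbelief + uncertainty must equal 1")

    def projected_probability(self) -> float:
        # Belief plus the share of uncertainty assigned by the base rate.
        return self.belief + self.base_rate * self.uncertainty


@dataclass(frozen=True)
class DataPoint:
    """Features and label as in a normal dataset, plus ONE opinion
    for the whole data point (hypothetical container)."""
    features: Sequence[float]
    label: int
    opinion: Opinion


def discount(a_on_x: Opinion, x_on_n: Opinion) -> Opinion:
    """Analyst A's derived opinion of N through source X.

    Assumption: probability-sensitive trust discounting, where the
    source's belief and disbelief are scaled by A's projected trust in X.
    """
    p = a_on_x.projected_probability()
    belief = p * x_on_n.belief
    disbelief = p * x_on_n.disbelief
    uncertainty = 1.0 - p * (x_on_n.belief + x_on_n.disbelief)
    return Opinion(belief, disbelief, uncertainty, x_on_n.base_rate)


def average_fuse(o1: Opinion, o2: Opinion) -> Opinion:
    """Combine two opinions of the same target (assumed: averaging fusion)."""
    base_rate = (o1.base_rate + o2.base_rate) / 2.0
    u_sum = o1.uncertainty + o2.uncertainty
    if u_sum == 0.0:
        # Both opinions are dogmatic: take the plain average.
        return Opinion((o1.belief + o2.belief) / 2.0,
                       (o1.disbelief + o2.disbelief) / 2.0,
                       0.0, base_rate)
    belief = (o1.belief * o2.uncertainty + o2.belief * o1.uncertainty) / u_sum
    disbelief = (o1.disbelief * o2.uncertainty
                 + o2.disbelief * o1.uncertainty) / u_sum
    uncertainty = 2.0 * o1.uncertainty * o2.uncertainty / u_sum
    return Opinion(belief, disbelief, uncertainty, base_rate)


# A noisy data point is given a more uncertain opinion than a clean one.
clean = DataPoint([0.2, 0.7], 1, Opinion(0.9, 0.0, 0.1))
noisy = DataPoint([0.2, 9.9], 1, Opinion(0.3, 0.0, 0.7))

# Panel (A): derive A's opinion of the network through one source neuron.
derived = discount(Opinion(0.8, 0.1, 0.1), Opinion(0.6, 0.2, 0.2))

# Final step of panel (B): fuse the opinions held by two output neurons.
fused = average_fuse(Opinion(0.6, 0.2, 0.2), Opinion(0.4, 0.2, 0.4))
```

Attaching the opinion to `DataPoint` rather than to individual features mirrors the caption's choice of a single opinion per data point. With more than two output neurons, the fusion step would need a multi-source form of the operator, which this sketch does not cover.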