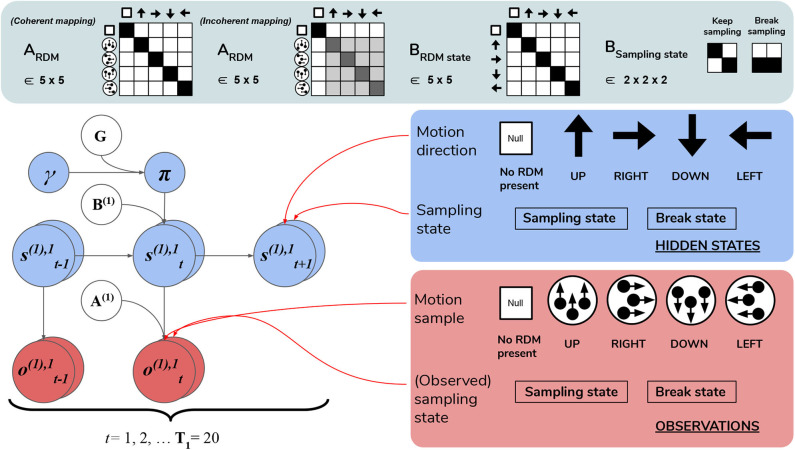

Figure 6.

Level 1 MDP. Level 1 of the hierarchical POMDP for scene construction (see Section 4.2.1 for details). Level 1 directly interfaces with stochastic motion observations generated by the environment. At this level hidden states correspond to: (1) the true motion direction $s^{(1),1}$ underlying visual observations at the currently fixated region of the visual array and (2) the sampling state $s^{(1),2}$, an aspect of the environment that can be changed via actions, i.e., selections of the appropriate state transition, as encoded in the B matrix. The first hidden state factor $s^{(1),1}$ can either correspond to a state with no motion signal (“Null,” when there is no RDM or a categorization decision is being made) or assume one of four discrete values corresponding to the four cardinal motion directions. At each time step of the generative process, the current state of the RDM stimulus $s^{(1),1}$ is probabilistically mapped to a motion observation via the first-factor likelihood $A^{(1),1}$ (shown in the top panel as $A_{RDM}$). The entropy of the columns of this mapping can be used to parameterize the coherence of the RDM stimulus, such that the true motion states $s^{(1),1}$ cause motion observations $o^{(1),1}$ with varying degrees of fidelity. This is demonstrated by two exemplary $A_{RDM}$ matrices in the top panel (these correspond to $A^{(1),1}$): the left-most matrix shows a noiseless, “coherent” mapping, analogous to an RDM in which all dots move in the direction given by the true hidden state; the matrix to its right corresponds to an incoherent RDM, where instantaneous motion observations may assume directions that differ from the true motion direction state, with the frequency of this deviation encoded by the probabilities stored in the corresponding column of $A_{RDM}$.
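The link between column entropy and RDM coherence can be illustrated with a minimal sketch. It is not the implementation used for the figure. The function `rdm_likelihood`, the softmax `precision` parameter, the ordering of the direction labels, and the choice to keep the “Null” column deterministic are all assumptions made for illustration only.

```python
import numpy as np

# Hypothetical labels: one "Null" state plus four cardinal directions.
STATES = ["Null", "UP", "RIGHT", "DOWN", "LEFT"]

def rdm_likelihood(precision):
    """Column-stochastic likelihood A[o, s] = P(o | s).

    Assumption: noise is spread only over the four direction columns via a
    softmax of a scaled identity; the Null column is kept deterministic.
    """
    n = len(STATES)
    A = np.zeros((n, n))
    A[0, 0] = 1.0  # Null state -> Null observation (assumed)
    logits = precision * np.eye(n - 1)
    expl = np.exp(logits - logits.max(axis=0, keepdims=True))
    A[1:, 1:] = expl / expl.sum(axis=0, keepdims=True)
    return A

def column_entropy(A):
    """Shannon entropy (in nats) of each column of A."""
    return -(A * np.log(A + 1e-16)).sum(axis=0)

A_coherent = rdm_likelihood(precision=50.0)   # close to an identity mapping
A_incoherent = rdm_likelihood(precision=1.0)  # noisy mapping

# Sample one motion observation given that the true direction is "UP".
rng = np.random.default_rng(0)
true_state = STATES.index("UP")
obs_index = rng.choice(len(STATES), p=A_incoherent[:, true_state])
```

Under these assumptions, lowering `precision` raises the entropy of each direction column, so sampled observations deviate from the true direction more often.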
The motion direction state does not change in the course of a trial (see the identity matrix shown in the top panel as $B_{RDM}$, which simply maps the hidden state to itself at each subsequent time step); this is true of both the generative model and the generative process. The second hidden state factor $s^{(1),2}$ encodes the current “sampling state” of the agent; there are two levels under this factor: “Keep-sampling” or “Break-sampling.” This sampling state (a factor of the generative process) is directly represented as a control state in the generative model; namely, the agent can change it by sampling actions (B-matrix transitions) from the posterior beliefs about policies. The agent believes that the “Break-sampling” state is a sink in the transition dynamics, such that once it is entered, it cannot be exited (see the right-most matrix of the transition likelihood $B_{\text{Sampling state}}$). Entering the “Break-sampling” state terminates the POMDP at Level 1. The “Keep-sampling” state enables the continued generation of motion observations as samples from the likelihood mapping $A^{(1),1}$. $A^{(1),2}$ (the “proprioceptive” likelihood, not shown for clarity) deterministically maps the current sampling state $s^{(1),2}$ to an observation $o^{(1),2}$ thereof (bottom row of lower right panel), so that the agent always observes unambiguously which sampling state it is in.
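The transition structure described in this caption can be sketched as follows. This is an illustrative reconstruction, not the code behind the figure. The array layout `[next_state, current_state, action]`, the index constants, and the `rollout` helper are hypothetical choices.

```python
import numpy as np

# Hypothetical indices, used for both sampling states and actions.
KEEP, BREAK = 0, 1

# Motion direction never changes within a trial: identity transitions
# over the five states (Null plus four directions).
B_rdm = np.eye(5)

# Controllable sampling-state transitions, B[next_state, current_state, action].
B_sampling = np.zeros((2, 2, 2))
B_sampling[:, :, KEEP] = np.eye(2)  # "keep" action leaves the state unchanged
B_sampling[BREAK, :, BREAK] = 1.0   # "break" action always leads to Break-sampling

# Proprioceptive likelihood: the sampling state is observed without ambiguity.
A_proprio = np.eye(2)

def rollout(actions, start=KEEP):
    """Apply a sequence of actions and return the observed sampling states.

    The loop deliberately continues after Break-sampling is entered, only to
    show that the state acts as a sink; in the caption's description, entering
    it terminates the Level 1 POMDP.
    """
    state = start
    observed = []
    for action in actions:
        state = int(np.argmax(B_sampling[:, state, action]))
        observed.append(int(np.argmax(A_proprio[:, state])))
    return observed

trajectory = rollout([KEEP, BREAK, KEEP])  # -> [0, 1, 1]
```

In this sketch the final “keep” action has no effect because the Break-sampling column of the “keep” transition matrix maps that state back to itself.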