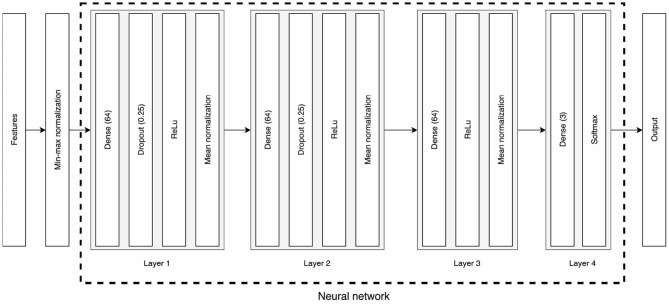

Figure 2.

Architecture of the classifiers. Features are min-max normalized before being fed to the neural network. Each of the first three layers is a 64-neuron dense layer with a ReLU activation and either batch or stratified normalization; dropout is applied to the first two of these layers. The last layer is a 3-neuron dense layer that outputs the class prediction through a softmax function.
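The forward pass described in the caption can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the order of operations within each block (dense, normalization, ReLU, dropout), the dropout rate of 0.5, the He-style weight initialization, and the input size of 10 features are all assumptions, and the names `init_params` and `forward` are hypothetical. Only the batch-normalization variant is shown, without learned scale and shift; the stratified variant is not sketched here.

```python
import numpy as np

def min_max_normalize(x, eps=1e-8):
    """Rescale each feature column to the range [0, 1]."""
    lo = x.min(axis=0, keepdims=True)
    hi = x.max(axis=0, keepdims=True)
    return (x - lo) / (hi - lo + eps)

def batch_norm(h, eps=1e-5):
    """Standardize each unit over the batch (no learned scale or shift)."""
    mean = h.mean(axis=0, keepdims=True)
    var = h.var(axis=0, keepdims=True)
    return (h - mean) / np.sqrt(var + eps)

def softmax(z):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def init_params(n_features, rng, hidden=64, n_classes=3):
    """Three 64-unit dense layers followed by a 3-unit output layer."""
    sizes = [n_features, hidden, hidden, hidden, n_classes]
    # He-style initialization is an assumption, not taken from the caption.
    return [(rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(x, params, rng=None, drop_rate=0.5):
    """Return class probabilities; dropout is active only when rng is given."""
    h = min_max_normalize(x)
    for i, (w, b) in enumerate(params[:-1]):
        h = h @ w + b               # dense layer
        h = batch_norm(h)           # assumed to precede the activation
        h = np.maximum(h, 0.0)      # ReLU
        if rng is not None and i < 2:
            # Inverted dropout on the first two hidden layers only.
            keep = rng.random(h.shape) >= drop_rate
            h = h * keep / (1.0 - drop_rate)
    w, b = params[-1]
    return softmax(h @ w + b)       # 3-way class probabilities

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 10))        # 8 samples, 10 features (illustrative)
params = init_params(n_features=10, rng=rng)
probs = forward(x, params)          # evaluation mode: no dropout
```

Passing a generator, as in `forward(x, params, rng=rng)`, switches dropout on for the first two hidden layers, which mimics training-time behavior; omitting it gives the deterministic evaluation-time output.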