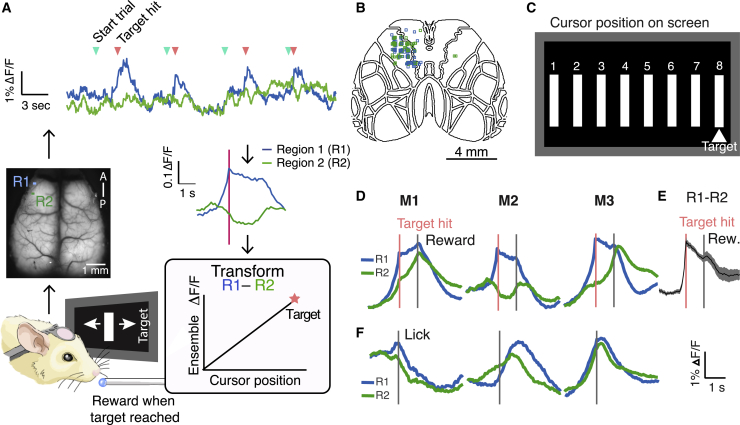

Figure 1.

A wide-field-imaging-based brain-machine interface

(A) Task schematic. Clockwise, starting from the illustration of the mouse: wide-field signals were imaged from head-fixed animals in real time and transformed into the position of a visual cursor. Two small regions (R1 and R2) were used to control the cursor; activity recorded from these areas was fed into a decoder in which their contributions opposed one another. Example ΔF/F traces for the 2 regions are shown at top, with blue arrows denoting trial starts and pink arrows denoting target hits. Activity averaged around hits is shown for R1 and R2 for 1 example animal on 1 day. Animals had to increase activity in R1 relative to R2 to bring the cursor to a rewarded position at the center of their visual field, at which point they could collect a reward after a 1-s delay.

(B) Positions of the control ROIs (R1 in blue, R2 in green) for all 7 animals over the course of training (an average of 15 days per animal; 104 pairs in total), superimposed on the Allen Brain Atlas.

(C) Feedback schematic: the cursor could occupy 1 of 8 possible positions on screen; position 8, the target, was rewarded.

(D) ΔF/F in the control regions aligned to target hits for 3 example animals on 1 day of training, illustrating the different strategies animals used to obtain reward. The pink line indicates the time of the target hit and the gray line indicates reward delivery.

(E) Activity in R1 minus activity in R2 (R1 − R2), averaged around target hits for all mice on 1 day of training (n = 7 mice; shading represents SEM).

(F) Animals could not control the cursor using licking alone. ΔF/F aligned to lick bouts during spontaneous activity for the same 3 example animals on 1 day of training shows that licking alone did not produce the differential activation of R1 and R2. The gray line indicates the time of the lick.
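The caption describes the decoder only qualitatively. The sketch below is a hypothetical illustration, not the authors' implementation. It assumes the cursor is driven by the difference ΔF/F(R1) − ΔF/F(R2), clamped between hypothetical calibration bounds `lo` and `hi` and split into 8 equal-width bins, and that ΔF/F is computed against a fixed baseline `f0`. It also sketches a hit-triggered average with an across-animal SEM, of the kind plotted in panel E. All function names and parameters are illustrative assumptions.

```python
import math
import statistics


def delta_f_over_f(trace, f0):
    """ΔF/F = (F - f0) / f0 against a fixed baseline f0 (assumed; baseline choice is not specified)."""
    return [(f - f0) / f0 for f in trace]


def cursor_position(dff_r1, dff_r2, lo, hi, n_positions=8):
    """Hypothetical decoder: map the opposed signal R1 - R2 onto positions 1..n_positions.

    lo and hi are assumed calibration bounds. The difference is clamped to
    [lo, hi] and split into n_positions equal-width bins, so values at or
    above hi land on the last position (the rewarded target in panel C).
    """
    diff = dff_r1 - dff_r2
    frac = (diff - lo) / (hi - lo)
    frac = min(max(frac, 0.0), 1.0)  # clamp to [0, 1]
    return 1 + min(int(frac * n_positions), n_positions - 1)


def event_triggered_average(trace, event_idx, pre, post):
    """Average trace over windows [i - pre, i + post] around each event index i.

    Events whose window would run past either end of the trace are skipped.
    """
    windows = [trace[i - pre:i + post + 1] for i in event_idx
               if i - pre >= 0 and i + post < len(trace)]
    if not windows:
        raise ValueError("no event has a complete window")
    return [sum(col) / len(col) for col in zip(*windows)]


def mean_and_sem(per_animal):
    """Per-timepoint mean and SEM across animals (one equal-length row per animal)."""
    n = len(per_animal)
    means, sems = [], []
    for col in zip(*per_animal):
        means.append(statistics.fmean(col))
        sems.append(statistics.stdev(col) / math.sqrt(n))  # sample SD / sqrt(n)
    return means, sems
```

For example, with `lo=-1.0` and `hi=1.0`, `cursor_position(0.0, 0.0, -1.0, 1.0)` returns 5 (the bin just above the midpoint), and any difference at or above `hi` returns 8. Equal-width binning is only one plausible choice; a real decoder might instead use nonlinear or adaptively recalibrated bins.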