Abstract

This paper proposes a method for deriving interpretable common factors based on canonical correlation analysis applied to the vectors of common factors and manifest variables in the factor analysis model. First, an entropy-based method for measuring factor contributions is reviewed. Second, the entropy-based contribution measure of the common-factor vector is decomposed into those of canonical common factors, and it is also shown that the importance order of factors is that of their canonical correlation coefficients. Third, the method is applied to derive interpretable common factors. Numerical examples are provided to demonstrate the usefulness of the present approach.

Keywords: canonical factor analysis, canonical common factor, entropy, factor contribution

1. Introduction

In factor analysis, extracting interpretable factors is important for practical data analysis. To this end, methods for factor rotation have been studied, e.g., varimax [1] and orthomax [2] for orthogonal rotations and oblimin [3] and orthoblique [4] for oblique rotations. The basic idea of factor rotation derives from Thurstone's criteria for simple structures of factor analysis models [5], and rotation methods are constructed to maximize the variation of the squared factor loadings so as to derive simple structures. Let $X_i$ $(i = 1, \ldots, p)$ be manifest variables, let $\xi_j$ $(j = 1, \ldots, m)$ be latent variables (common factors), let $\varepsilon_i$ be unique factors related to $X_i$, and finally, let $\lambda_{ij}$ be factor loadings, i.e., the weights of common factors $\xi_j$ in explaining $X_i$. Then, the factor analysis model is given as follows:

$$X_i = \sum_{j=1}^{m} \lambda_{ij}\xi_j + \varepsilon_i, \quad i = 1, \ldots, p, \tag{1}$$

where $E(X_i) = E(\varepsilon_i) = 0$, $E(\xi_j) = 0$, $\mathrm{Var}(\xi_j) = 1$, $\mathrm{Cov}(\xi_j, \varepsilon_i) = 0$, $\mathrm{Var}(\varepsilon_i) = \omega_i^2 > 0$, and $\mathrm{Cov}(\varepsilon_k, \varepsilon_l) = 0$ for $k \neq l$.

To derive simple structures, the varimax method, for example, maximizes the following variation function of the squared factor loadings with respect to the factor loadings:

$$V = \sum_{j=1}^{m}\sum_{i=1}^{p}\left(\lambda_{ij}^2 - \overline{\lambda_j^2}\right)^2, \tag{2}$$

where $\overline{\lambda_j^2} = \frac{1}{p}\sum_{i=1}^{p}\lambda_{ij}^2$. In this sense, the basic factor rotation methods can be viewed as tools for exploratory analysis of multidimensional common-factor spaces. Factors are interpreted according to the manifest variables that have large weights on them. As far as we know, no novel methods for factor rotation have been investigated apart from methods similar to the basic ones above. In real data analyses, manifest variables are usually classified in advance into groups of variables that may share common factors and concepts. For example, suppose we have a test battery including the following five subjects: Japanese, English, Social Science, Mathematics, and Natural Science. It is then reasonable to classify the five subjects into two groups, {Japanese, English, Social Science} and {Mathematics, Natural Science}. In such cases, it is meaningful to determine common factors related to the two groups of manifest variables. For this objective, it is useful to develop a novel method that derives the common factors on the basis of a factor contribution measure. In conventional methods of factor rotation, by contrast, the criterion is not related to factor contribution; variation function (2) for the varimax method is one example.
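To make the rotation criterion discussed above concrete, the following minimal sketch evaluates a raw (unnormalized) varimax-type criterion, taken here to be the sum over factors of the squared deviations of the squared loadings from their column mean. The function names `varimax_criterion` and `rotate` and the two-variable loading matrix are illustrative assumptions, not part of the original analysis.

```python
import numpy as np

def varimax_criterion(loadings):
    """Sum over factors of squared deviations of squared loadings from their column mean."""
    sq = np.asarray(loadings, dtype=float) ** 2
    return float(((sq - sq.mean(axis=0)) ** 2).sum())

def rotate(loadings, angle):
    """Apply an orthogonal rotation by `angle` radians to a two-factor loading matrix."""
    c, s = np.cos(angle), np.sin(angle)
    return np.asarray(loadings, dtype=float) @ np.array([[c, -s], [s, c]])

# Hypothetical loadings with a perfect simple structure: each variable loads on one factor.
simple = np.array([[1.0, 0.0], [0.0, 1.0]])
# After a 45-degree rotation every variable loads equally on both factors.
mixed = rotate(simple, np.pi / 4)

v_simple = varimax_criterion(simple)
v_mixed = varimax_criterion(mixed)
print(v_simple, v_mixed)
```

The criterion is largest for the simple-structure loadings and vanishes when all squared loadings are equal. It depends only on the pattern of the loadings, not on how much each factor contributes to the manifest variables.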

An entropy-based method for measuring factor contribution was proposed by Eshima et al. [6]; the method measures factor contributions to manifest variable vectors and decomposes them into those of manifest subvectors and individual manifest variables. By using the method, we can derive important common factors related to the manifest subvectors and the manifest variables. The aim of the present paper is to propose a new entropy-based method for deriving simple structures, that is, for extracting common factors that are easy to interpret. In Section 2, the entropy-based method for measuring factor contribution [6] is reviewed so that its properties can be applied to deriving simple structures in factor analysis models. Section 3 discusses canonical correlation analysis between common factors and manifest variables, and the contributions of common factors to the manifest variables are decomposed into components related to the extracted pairs of canonical variables. A numerical example is given to demonstrate the approach. In Section 4, canonical correlation analysis is applied to obtain common factors that are easy to interpret, and the contributions of the extracted factors are measured. Numerical examples are given to illustrate the present approach. Finally, Section 5 provides a discussion and conclusions.

2. Entropy-Based Method for Measuring Factor Contributions

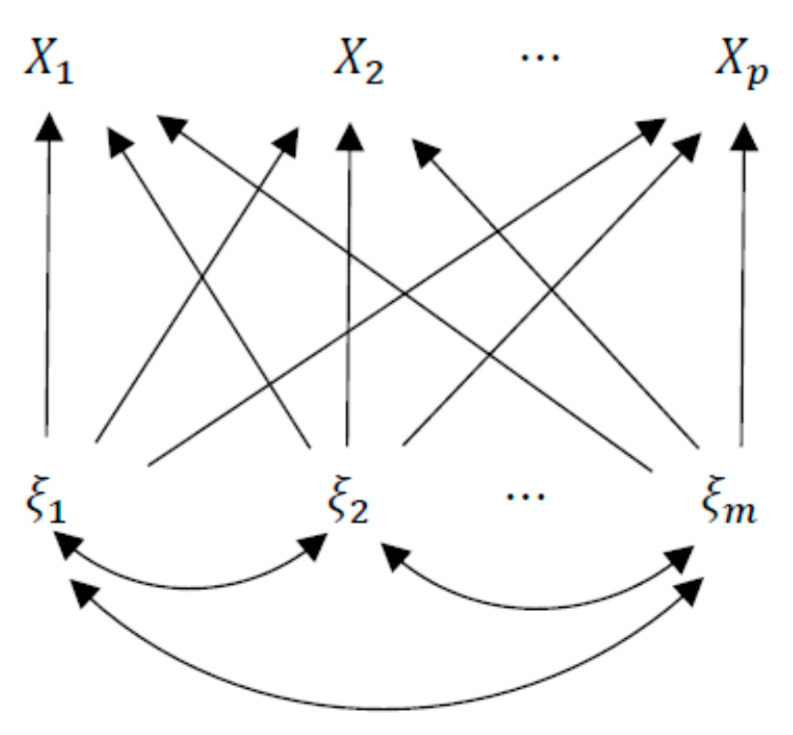

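First, in order to derive factor contributions, factor analysis model (1) with error terms $\varepsilon_i$, which are normally distributed with mean 0 and variance $\omega_i^2$, is discussed in the framework of generalized linear models (GLMs) [7]. A general path diagram among manifest variables $X_i$ and common factors $\xi_j$ in the factor analysis model is illustrated in Figure 1. The conditional density functions of manifest variables $X_i$, given the factors $\xi = (\xi_1, \ldots, \xi_m)^T$, are expressed as follows:

$$f_i(x_i \mid \xi) = \frac{1}{\sqrt{2\pi\omega_i^2}} \exp\left(-\frac{\left(x_i - \sum_{j=1}^{m}\lambda_{ij}\xi_j\right)^2}{2\omega_i^2}\right), \quad i = 1, \ldots, p.$$

Let $\theta_i = \sum_{j=1}^{m}\lambda_{ij}\xi_j$ and $d(x_i, \omega_i^2) = -\frac{x_i^2}{2\omega_i^2} - \log\sqrt{2\pi\omega_i^2}$. Then, the above density function is described in a GLM framework as

$$f_i(x_i \mid \xi) = \exp\left(\frac{x_i\theta_i - \frac{1}{2}\theta_i^2}{\omega_i^2} + d(x_i, \omega_i^2)\right). \tag{3}$$

According to the local independence of the manifest variables in factor analysis model (1), the conditional density function of $X = (X_1, \ldots, X_p)^T$ given $\xi$ is expressed as

$$f(x \mid \xi) = \prod_{i=1}^{p} f_i(x_i \mid \xi) = \exp\left(\sum_{i=1}^{p}\frac{x_i\theta_i - \frac{1}{2}\theta_i^2}{\omega_i^2} + \sum_{i=1}^{p} d(x_i, \omega_i^2)\right). \tag{4}$$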

Figure 1. Path diagram of a general factor analysis model.

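Let $g(\xi)$ be the joint density function of common-factor vector $\xi$; let $f_i(x_i)$ and $f(x)$ be the marginal density functions of $X_i$ and $X$; and let us set

$$KL(X_i, \xi) = \iint f_i(x_i \mid \xi)\, g(\xi) \log\frac{f_i(x_i \mid \xi)}{f_i(x_i)}\, dx_i\, d\xi + \iint f_i(x_i)\, g(\xi) \log\frac{f_i(x_i)}{f_i(x_i \mid \xi)}\, dx_i\, d\xi, \tag{5}$$

$$KL(X, \xi) = \iint f(x \mid \xi)\, g(\xi) \log\frac{f(x \mid \xi)}{f(x)}\, dx\, d\xi + \iint f(x)\, g(\xi) \log\frac{f(x)}{f(x \mid \xi)}\, dx\, d\xi, \tag{6}$$

where “KL” stands for “Kullback–Leibler information” [8]. From (3) and (4), we have

$$KL(X_i, \xi) = \frac{\mathrm{Cov}(X_i, \theta_i)}{\omega_i^2}, \quad i = 1, \ldots, p, \tag{7}$$

$$KL(X, \xi) = \sum_{i=1}^{p} \frac{\mathrm{Cov}(X_i, \theta_i)}{\omega_i^2}, \tag{8}$$

where $\theta_i = \sum_{j=1}^{m}\lambda_{ij}\xi_j$ is the predictor of $X_i$ and $\omega_i^2 = \mathrm{Var}(\varepsilon_i)$. The quantities (7) and (8) are interpreted as the signal-to-noise ratios for dependent variables $X_i$ and predictors $\theta_i$, and as the signal-to-noise ratio for dependent-variable vector $X$ and common-factor vector $\xi$, respectively.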
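As a small numerical illustration of these signal-to-noise ratios, the sketch below computes, for each manifest variable, the variance of its linear predictor divided by its unique variance, and then sums the ratios over variables. It borrows the loadings and uniquenesses reported later in Table 1 and assumes orthogonal factors unless a factor correlation matrix is supplied. The function name `signal_to_noise` is a hypothetical helper.

```python
import numpy as np

# Loadings and uniquenesses as reported in Table 1 (rows: five manifest variables,
# columns: two orthogonal initial factors).
LOADINGS = np.array([[0.60, 0.39], [0.75, 0.24], [0.65, 0.00], [0.32, 0.59], [0.00, 0.92]])
UNIQUENESS = np.array([0.50, 0.38, 0.58, 0.55, 0.16])

def signal_to_noise(loadings, uniqueness, factor_corr=None):
    """Per-variable ratio Var(predictor) / unique variance for a linear factor model."""
    loadings = np.asarray(loadings, dtype=float)
    if factor_corr is None:  # assumption: orthogonal factors with unit variance
        factor_corr = np.eye(loadings.shape[1])
    # Diagonal of L Phi L^T, i.e. the variance of each linear predictor.
    signal = np.einsum("ij,jk,ik->i", loadings, factor_corr, loadings)
    return signal / np.asarray(uniqueness, dtype=float)

kl_each = signal_to_noise(LOADINGS, UNIQUENESS)   # one ratio per manifest variable
kl_total = kl_each.sum()                          # ratio for the whole manifest vector
print(np.round(kl_each, 3), round(kl_total, 3))
```

Because the unique factors are uncorrelated with the common factors, the covariance between a manifest variable and its predictor equals the predictor's variance, which is why only the loadings and the factor correlation matrix enter the numerator.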

From (7) and (8), the following theorem can be derived [6]:

Theorem 1.

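In factor analysis model (1), let $X = (X_1, \ldots, X_p)^T$ and $\xi = (\xi_1, \ldots, \xi_m)^T$. Then,

$$KL(X, \xi) = \sum_{i=1}^{p} KL(X_i, \xi).$$

Consistently, the following theorem, which is an extended version of Corollary 1 in [6], can also be obtained:

Theorem 2.

Let manifest variable subvectors $X^{(1)}, \ldots, X^{(A)}$ be any decomposition of manifest variable vector $X$. Then,

$$KL(X, \xi) = \sum_{a=1}^{A} KL\left(X^{(a)}, \xi\right). \tag{9}$$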

Following Eshima et al. [6], the contribution of factor vector to manifest variable vector is thus defined as

so that, in Theorem 2, the contributions of factor vector to manifest variable vectors are defined by

Let be subvectors of all variables except from , i.e.,

and let and be the conditional Kullback–Leibler information as defined in (5) and (6). The contributions of common factors are defined by

Remark 1.

Information and can be expressed by using the conditional covariances . For example,

Finally, the following decomposition of holds for orthogonal factors ([6], Theorem 3):

Theorem 3.

If the common factors are mutually independent, it follows that

The entropy coefficient of determination (ECD) [9] between and is defined by

so that the total relative contribution of factor vector to manifest variable vector in entropy can be defined as

while, for a single factor , two relative contribution ratios can be defined:

(see [6] for details).

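Second, factor analysis model (1) in a general case is discussed. Let $\Sigma$ be the variance–covariance matrix of manifest variable vector $X = (X_1, \ldots, X_p)^T$; let $\Omega$ be the variance–covariance matrix of unique factor vector $\varepsilon = (\varepsilon_1, \ldots, \varepsilon_p)^T$; let $\Lambda = (\lambda_{ij})$ be the $p \times m$ factor loading matrix; and let $\Phi$ be the correlation matrix of common-factor vector $\xi = (\xi_1, \ldots, \xi_m)^T$. Then, model (1) can be expressed as

$$X = \Lambda\xi + \varepsilon,$$

and we have

$$\Sigma = \Lambda\Phi\Lambda^T + \Omega.$$

Now, the above discussion is extended to a general factor analysis model (1) with the following variance–covariance matrix of $X$ and $\xi$:

$$\mathrm{Var}\begin{pmatrix} X \\ \xi \end{pmatrix} = \begin{pmatrix} \Lambda\Phi\Lambda^T + \Omega & \Lambda\Phi \\ \Phi\Lambda^T & \Phi \end{pmatrix}. \tag{10}$$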

Let be the predictor vector of manifest variable vector . Then, the contribution of common-factor vector to manifest variable vector is defined by the following generalized signal-to-noise ratio:

| (11) |

where is the cofactor matrix of . The signal is and the noise , and both are positive. Hence, the above quantity is defined as the explained entropy with the factor analysis model, and the same notation as above is used, having to do with the Kullback–Leibler information for the factor analysis model with normal distribution errors (4). Similarly, in the general model, as in (9), signal-to-noise ratio (11) is decomposed into

so the above theorems hold true as well. Thus, the results mentioned above are applicable to factor analysis models with error terms with non-normal distributions.

3. Canonical Factor Analysis

In order to derive interpretable factors from the common-factor space, we propose taking advantage of the results of canonical correlation analysis applied to manifest variables and common factors. This approach can be referred to as “canonical factor analysis” [10]. In the factor analysis model (1), the variance–covariance matrix of and is given by (10). Then, we have the following theorem:

Theorem 4.

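For canonical correlation coefficients $\rho_i$ $(i = 1, \ldots, m)$ between $X$ and $\xi$ in factor analysis model (1) with (10), it follows that

$$KL(X, \xi) = \sum_{i=1}^{m} \frac{\rho_i^2}{1 - \rho_i^2}.$$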

Proof.

Let , , and be , , and matrices, respectively; let , , and . It is assumed that are the pairs of canonical variables with correlation coefficients ; that matrices and are nonsingular; and that and are statistically independent. Since all pairs of canonical variables and are mutually independent, we have

From Theorem 2, it follows that

This completes the proof. □

In the proof of the above theorem, we have

| (12) |

It implies that

Theorem 4 shows that the contribution of common-factor vector to manifest variable vector is decomposed into those of canonical common factors , i.e.,

Let us assume

| (13) |

According to the entropy-based criterion in Theorem 4, the order of importance of canonical common factors is that of canonical correlation coefficients. The interpretation of factors can be made with the corresponding manifest canonical variables and the factor loading matrix of canonical common factors . For the canonical common factors, the factor loading matrix can be obtained as . We refer to the canonical correlation analysis in Theorem 4 as canonical factor analysis [10].

Theorem 5.

In factor analysis model (1), for any and nonsingular matrices and , the canonical factor analysis between manifest variable vector and common-factor vector is invariant.

Proof.

Since the variance–covariance matrix of and is given by

the theorem follows. □
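The invariance stated in Theorem 5 can be checked numerically. The sketch below is an illustration under stated assumptions: the loading matrix, unique variances, and transformation matrix `T` are arbitrary hypothetical values, and only a transformation of the common-factor vector is tried. It computes the squared canonical correlations between the manifest vector and the factor vector before and after replacing the factors by a nonsingular linear transformation of themselves.

```python
import numpy as np

def squared_canonical_correlations(loadings, factor_cov, unique_var):
    """Squared canonical correlations between X and xi when Var(X) = L Phi L^T + Omega."""
    L = np.asarray(loadings, dtype=float)
    Phi = np.asarray(factor_cov, dtype=float)
    Sigma = L @ Phi @ L.T + np.diag(unique_var)
    # Eigenvalues of Var(xi)^{-1} Cov(xi, X) Var(X)^{-1} Cov(X, xi) = L^T Sigma^{-1} L Phi.
    eig = np.linalg.eigvals(L.T @ np.linalg.solve(Sigma, L @ Phi))
    return np.sort(eig.real)[::-1]

rng = np.random.default_rng(0)
L = rng.uniform(0.2, 0.8, size=(5, 2))     # hypothetical loading matrix
omega2 = rng.uniform(0.3, 0.6, size=5)     # hypothetical unique variances
Phi = np.eye(2)                            # orthogonal initial factors

# Replace xi by T xi: the loadings become L T^{-1} and the factor covariance T Phi T^T.
T = np.array([[1.0, 0.5], [-0.3, 2.0]])
L_new = L @ np.linalg.inv(T)
Phi_new = T @ Phi @ T.T

rho2_before = squared_canonical_correlations(L, Phi, omega2)
rho2_after = squared_canonical_correlations(L_new, Phi_new, omega2)
print(rho2_before, rho2_after)
```

The two sets of squared canonical correlations agree up to rounding error, because the transformation leaves the covariance matrix of the manifest variables unchanged and turns the eigenproblem into a similar one.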

Notice that we also have

From the above theorem, the results of the canonical factor analysis do not depend on the initial common factors in factor analysis model (1). For factor analysis model (1), it follows that

implying that

where are the multiple correlation coefficients between manifest variables and factor vector .

Numerical Example 1

Table 1 shows the results of orthogonal factor analysis (varimax method by S-PLUS ver. 8.2) as reported in [6]; the same example is used here to demonstrate the canonical factor analysis mentioned above. In Table 1, manifest variables $X_1$, $X_2$, and $X_3$ are scores in some subjects in the liberal arts, while variables $X_4$ and $X_5$ are those in the sciences. We refer to the factors $\xi_1$ and $\xi_2$ as the initial common factors. In this example, from Table 1, the variance–covariance matrices in (10) are given as follows:

where covariance matrix is given in Table 1.

Table 1. Factor loadings of orthogonal (varimax) factor analysis.

| | $X_1$ | $X_2$ | $X_3$ | $X_4$ | $X_5$ |
|---|---|---|---|---|---|
| $\xi_1$ | 0.60 | 0.75 | 0.65 | 0.32 | 0.00 |
| $\xi_2$ | 0.39 | 0.24 | 0.00 | 0.59 | 0.92 |
| uniqueness | 0.50 | 0.38 | 0.58 | 0.55 | 0.16 |

Uniqueness is the proportion of unique factor related to manifest variable $X_i$.

From the above matrices, to obtain the pairs of canonical variables, linear transformation matrices and in Theorem 4 are as follows:

and

By the above matrices, we have the following pairs of canonical variables and their squared canonical correlation coefficients :

According to the above canonical variables, the factor loading for canonical factors is calculated with the initial loading matrix and the rotation matrix , and we have

From the above results, the first canonical factor can be viewed as a general common ability (factor) to solve all five subjects. The second factor can be regarded as a factor related to subjects in the liberal arts, which is independent of the first canonical factor. In the canonical correlation analysis, the contributions of canonical factors are calculated. Since the multiple correlation coefficient between and is and that between and is , we have

Let . From the above results, we have

From this, of the variation of manifest random vector in entropy is explained by the common latent factors . The contribution ratios of canonical common factors are calculated as follows:

The contribution of the first canonical factor is about 2.6 times greater than that of the second one.
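The figures in this example can be approximated directly from Table 1. The sketch below rests on an assumption: for orthogonal initial factors, each contribution $\rho^2/(1-\rho^2)$ equals an eigenvalue of $\Lambda^T\Omega^{-1}\Lambda$, which follows from the Woodbury identity applied to $\Sigma = \Lambda\Lambda^T + \Omega$. It computes the contributions of the two canonical factors and cross-checks their sum against the multiple correlation coefficients. Variable names are illustrative, and the rounded loadings in Table 1 limit the attainable precision.

```python
import numpy as np

# Table 1: varimax loadings (rows: five manifest variables, columns: two initial factors)
# and uniquenesses.
LOADINGS = np.array([[0.60, 0.39], [0.75, 0.24], [0.65, 0.00], [0.32, 0.59], [0.00, 0.92]])
UNIQUENESS = np.array([0.50, 0.38, 0.58, 0.55, 0.16])

# Assumption: orthogonal factors, so rho^2 / (1 - rho^2) is an eigenvalue of L^T Omega^{-1} L.
M = LOADINGS.T @ (LOADINGS / UNIQUENESS[:, None])
contrib = np.sort(np.linalg.eigvalsh(M))[::-1]   # contributions of the canonical factors
rho2 = contrib / (1.0 + contrib)                 # squared canonical correlations
ratio = contrib[0] / contrib[1]

# Cross-check: squared multiple correlation of each manifest variable with the factor vector.
communality = (LOADINGS ** 2).sum(axis=1)
R2 = communality / (communality + UNIQUENESS)
total_from_R = (R2 / (1.0 - R2)).sum()

print(np.round(contrib, 2), np.round(rho2, 3), round(ratio, 2))
```

With these rounded inputs the ratio of the two contributions comes out near 2.6, in line with the statement above, and the sum of the canonical contributions coincides with the sum of $R_i^2/(1-R_i^2)$ over the manifest variables.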

4. Deriving Important Common Factors Based on Decomposition of Manifest Variables into Subsets

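From (9) in Theorem 2, $KL(X, \xi)$ is decomposed into those for manifest variable subvectors $X^{(a)}$, $a = 1, \ldots, A$. Thus, we have the following theorem:

Theorem 6.

Let manifest variable vector $X$ be decomposed into subvectors $X^{(a)}$, $a = 1, \ldots, A$. Let $\rho_i^{(a)}$, $i = 1, \ldots, m_a$, be the canonical correlation coefficients between manifest variable subvector $X^{(a)}$ and common-factor vector $\xi$ in the factor analysis model (1), where $m_a = \min(p_a, m)$ and $p_a$ is the dimension of $X^{(a)}$. Then, $KL(X, \xi)$ is decomposed into canonical components as follows:

$$KL(X, \xi) = \sum_{a=1}^{A}\sum_{i=1}^{m_a} \frac{\left(\rho_i^{(a)}\right)^2}{1 - \left(\rho_i^{(a)}\right)^2}.$$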

Proof.

For manifest variable vector and common-factor vector , applying canonical correlation analysis, we have pairs of canonical variables with squared canonical correlation coefficients . Then, applying Theorem 4 to it follows that

From Theorem 2, the theorem follows. □

Remark 2.

As shown in the above theorem, the following relations hold:

In this sense,

To derive important common factors, the above theorem can be used. In many data sets analyzed by factor analysis, manifest variables can be classified into subsets that share common concepts (factors) to be measured. For example, in the data used for Table 1, it is meaningful to classify the five variables into two subsets $X^{(1)} = (X_1, X_2, X_3)^T$ and $X^{(2)} = (X_4, X_5)^T$, where the first subset is related to the liberal arts and the second one is related to the sciences. From $X^{(1)}$ and $X^{(2)}$, it is possible to derive the latent ability for the liberal arts and that for the sciences, respectively.

4.1. Numerical Example 1 (Continued)

For and , two sets of canonical variables are obtained, respectively, as follows:

According to the above canonical variables, we have the following factor contributions:

From the above results, canonical factors and can be interpreted as general common factors for the liberal arts and for the sciences, respectively. By using the factors, the factor loadings are given in Table 2. In this case, Table 2 is similar to Table 1; however, the factor analysis model is oblique and the correlation coefficient between and is 0.374. The contributions of the factors to manifest variable vector are calculated as follows:

In this case, factors and are correlated, so it follows that

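Table 2. Factor loadings by using the canonical common factors for the liberal arts and for the sciences.

| | $X_1$ | $X_2$ | $X_3$ | $X_4$ | $X_5$ |
|---|---|---|---|---|---|
| Liberal arts factor | 0.62 | 0.80 | 0.70 | 0.31 | |
| Sciences factor | 0.19 | | | 0.49 | 0.94 |
| uniqueness | 0.50 | 0.38 | 0.58 | 0.55 | 0.16 |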
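The construction behind Table 2 can be sketched as follows, under stated assumptions. The first three variables of Table 1 are taken as the liberal arts subset and the last two as the sciences subset. For each subset, the first canonical factor is obtained as the leading eigenvector of $\Lambda_a^T\Omega_a^{-1}\Lambda_a$, which is valid for orthogonal initial factors. The model is then re-expressed in terms of the two resulting oblique factors. The helper `first_canonical_direction` and the sign convention are illustrative choices, and the rounded inputs mean the output only approximates the reported values.

```python
import numpy as np

LOADINGS = np.array([[0.60, 0.39], [0.75, 0.24], [0.65, 0.00], [0.32, 0.59], [0.00, 0.92]])
UNIQUENESS = np.array([0.50, 0.38, 0.58, 0.55, 0.16])
SUBSETS = {"liberal arts": [0, 1, 2], "sciences": [3, 4]}  # row indices into Table 1

def first_canonical_direction(loadings, uniqueness):
    """Unit weight vector w (so that w^T xi is the first canonical factor) and its contribution."""
    M = loadings.T @ (loadings / uniqueness[:, None])
    values, vectors = np.linalg.eigh(M)      # eigenvalues in ascending order
    w = vectors[:, -1]
    if (loadings @ w).sum() < 0:             # fix the arbitrary sign of the eigenvector
        w = -w
    return w, values[-1]

directions, contributions = [], {}
for name, idx in SUBSETS.items():
    w, c = first_canonical_direction(LOADINGS[idx], UNIQUENESS[idx])
    directions.append(w)
    contributions[name] = c

T = np.vstack(directions)                    # new factors: eta = T xi
factor_corr = T @ T.T                        # Var(xi) = I, hence Var(eta) = T T^T
oblique_loadings = LOADINGS @ np.linalg.inv(T)

print(np.round(factor_corr[0, 1], 3))
print(np.round(oblique_loadings, 2))
```

The correlation between the two derived factors comes out close to the reported 0.374, and the positive loadings agree with those listed in Table 2 to about two decimals.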

4.2. Numerical Example 2

Table 3 shows the results of the maximum likelihood factor analysis (orthogonal) for six scores ([11], pp. 61–65); such results are treated as the initial estimates in the present analysis. In this example, variables are classified into the following three groups: variable is related to the Spearman’s factor; variables , , and account for problem-solving ability; and variables and are associated with verbal ability [11]; however, it is difficult to explain the three factors by using Table 3. In this example, the present approach is employed for deriving the three factors. From (10) and Table 3, the correlation matrix of the manifest variables is given as follows:

Let , let , and let . Canonical correlation analysis is carried out for , , and , and we have the following canonical variables, respectively:

The contributions of canonical factors are calculated as follows:

The common factor can be interpreted as the Spearman’s factor (general intelligence) and canonical common factors and can be interpreted as problem-solving ability and verbal ability, respectively. The correlation coefficients between the three factors are given by

The contributions of the above three factors to manifest variable vector are computed as follows:

The common-factor space is two-dimensional, and the factor loadings with common factors and are calculated as in Table 4. The table shows a clear interpretation of the common factors. Thus, the present method is effective for deriving interpretable factors in situations such as that of this example. The expressions of the factor analysis model can also be given by factor vectors and , respectively. The present method is applicable for any subsets of manifest variables.

Table 3. The initial maximum likelihood estimates of factor loadings (varimax).

| | $X_1$ | $X_2$ | $X_3$ | $X_4$ | $X_5$ | $X_6$ |
|---|---|---|---|---|---|---|
| $\xi_1$ | 0.64 | 0.34 | 0.46 | 0.25 | 0.97 | 0.82 |
| $\xi_2$ | 0.37 | 0.54 | 0.76 | 0.41 | | |
| uniqueness | 0.45 | 0.59 | 0.21 | 0.77 | 0.04 | 0.33 |

Table 4.

The factor loadings with common factors and .

| 0.49 | 0.63 | 0.89 | 0.48 | 0.07 | ||

| 0.39 | 0.01 | 0.00 | 0.00 | 0.98 | ||

| uniqueness | 0.45 | 0.59 | 0.21 | 0.77 | 0.04 | 0.33 |

5. Discussion

To find interpretable common factors in factor analysis models, methods of factor rotation are often used. These methods maximize variation functions of the squared factor loadings and yield orthogonal or oblique factors. The factors derived by the conventional methods may be interpretable; however, it may be more useful to detect interpretable common factors on the basis of a factor contribution measure, i.e., the importance of common factors. The entropy-based method for measuring factor contribution [6] measures the contribution of the common-factor vector to the manifest variable vector, and this contribution can be decomposed into those of single manifest variables (Theorem 1) and into those of manifest variable subvectors (Theorem 2). A characterization in the case of orthogonal factors can also be given (Theorem 3). The paper shows that the most important common factor with respect to entropy can be identified by canonical correlation analysis between the factor vector and the manifest variable vector (Theorem 4): the contribution of the common-factor vector to the manifest variable vector is decomposed into those of canonical factors, and the order of the canonical correlation coefficients is that of the factor contributions. In most multivariate data, manifest variables can be naturally classified into subsets according to common concepts, as in Examples 1 and 2. By using Theorems 2 and 5, canonical correlation analysis can also be applied to derive canonical common factors from subsets of manifest variables and the initial common-factor vector (Theorem 6). According to the analysis, interpretable common factors can be obtained easily, as demonstrated in Examples 1 and 2. In Example 1, Tables 1 and 2 have similar factor patterns; however, the derived factors in Table 1 are orthogonal and those in Table 2 are oblique. In Example 2, it may be difficult to interpret the factors in Table 3 produced by the varimax method, whereas Table 4, obtained by the present method, can be interpreted clearly. Finally, according to Theorem 5, the present method produces results that are invariant with respect to linear transformations of common factors, so that the method is independent of the initial common factors. To our knowledge, the present method is the first one to derive interpretable factors based on a factor contribution measure, and the interpretable factors can be obtained easily through canonical correlation analysis between manifest variable subvectors and the factor vectors.

Acknowledgments

The authors would like to thank the three referees for their useful comments and suggestions for improving the first version of this paper.

Author Contributions

Conceptualization, N.E.; methodology, N.E., C.G.B., M.T., T.K.; formal analysis, N.E.; writing—original draft preparation, N.E.; writing—review and editing, C.G.B.; funding acquisition, T.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Grant-in-aid for Scientific Research 18K11200, Ministry of Education, Culture, Sports, Science, and Technology of Japan.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.


References

- 1. Kaiser H.F. The varimax criterion for analytic rotation in factor analysis. Psychometrika. 1958;23:187–200. doi: 10.1007/BF02289233.

- 2. Ten Berge J.M.F. A joint treatment of VARIMAX rotation and the problem of diagonalizing symmetric matrices simultaneously in the least-squares sense. Psychometrika. 1984;49:347–358. doi: 10.1007/BF02306025.

- 3. Jennrich R.I., Sampson P.F. Rotation for simple loadings. Psychometrika. 1966;31:313–323. doi: 10.1007/BF02289465.

- 4. Harris C.W., Kaiser H.F. Oblique factor analytic solutions by orthogonal transformation. Psychometrika. 1964;29:347–362. doi: 10.1007/BF02289601.

- 5. Thurstone L.L. The Vectors of Mind: Multiple-Factor Analysis for the Isolation of Primary Traits. University of Chicago Press; Chicago, IL, USA: 1935.

- 6. Eshima N., Tabata M., Borroni C.G. An entropy-based approach for measuring factor contributions in factor analysis models. Entropy. 2018;20:634. doi: 10.3390/e20090634.

- 7. Nelder J.A., Wedderburn R.W.M. Generalized linear models. J. R. Stat. Soc. A. 1972;135:370–384. doi: 10.2307/2344614.

- 8. Kullback S., Leibler R.A. On information and sufficiency. Ann. Math. Stat. 1951;22:79–86. doi: 10.1214/aoms/1177729694.

- 9. Eshima N., Tabata M. Entropy coefficient of determination for generalized linear models. Comput. Stat. Data Anal. 2010;54:1381–1389. doi: 10.1016/j.csda.2009.12.003.

- 10. Rao C.R. Estimation and tests of significance in factor analysis. Psychometrika. 1955;20:93–111. doi: 10.1007/BF02288983.

- 11. Bartholomew D.J. Latent Variable Models and Factor Analysis. Oxford University Press; New York, NY, USA: 1987.
