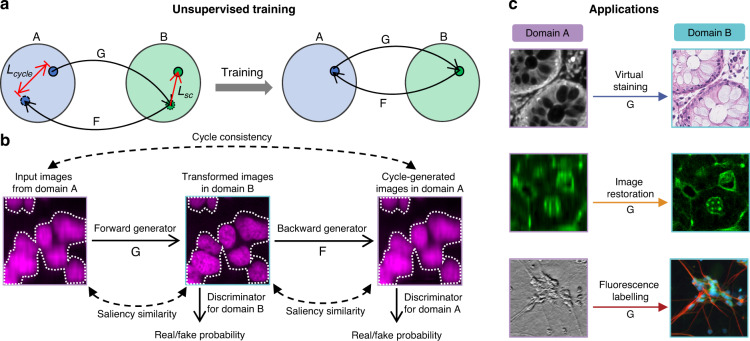

Fig. 1. Principle of UTOM.

**a** Two image sets, A and B, represent two image domains. No paired training data are required in these two sets. A forward GAN, G, and a backward GAN, F, are trained simultaneously, each to learn one direction of a pair of opposite mappings. A cycle-consistency constraint ($L_{\text{cycle}}$) and a saliency constraint ($L_{\text{sc}}$) are enforced to guarantee invertibility and fidelity, respectively. After proper training, reversible mappings between the two domains are learned and stored in the network parameters. **b** The unfolded flowchart of UTOM. Input images from domain A are first transformed into the modality of domain B by the forward generator and then mapped back to domain A by the backward generator. Two discriminators are trained simultaneously to evaluate the quality of the mappings by estimating the probability that the transformed images were obtained from the training data rather than from the generators. The cycle-consistency constraint is imposed to make the cycle-generated images as close to the input images as possible. The saliency constraint enforces the similarity of the image saliency (annotated by white dotted lines) before and after each mapping. **c** Once UTOM converges, the forward generator can be loaded to perform domain-transformation tasks such as virtual staining, image restoration, and fluorescence labeling.
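The distinct roles of the two constraints can be illustrated with a toy sketch. It is not the UTOM implementation: the generators are replaced by hypothetical closed-form pixel mappings (`g_good`, `f_good`, `g_bad`, `f_bad`), and saliency is assumed to be a soft intensity threshold (`soft_mask`), which the caption does not specify. The point is only that a mapping can be perfectly invertible, and so satisfy cycle consistency, while still failing to preserve image content, which is what a saliency term penalizes.

```python
import math

def soft_mask(pixels, threshold, steepness=50.0):
    # Assumed saliency operator: a sigmoid-smoothed intensity threshold.
    return [1.0 / (1.0 + math.exp(-steepness * (p - threshold))) for p in pixels]

def mean_abs_diff(xs, ys):
    # Mean absolute (L1) difference between two equally sized pixel lists.
    return sum(abs(x - y) for x, y in zip(xs, ys)) / len(xs)

def cycle_loss(x, forward, backward):
    # Distance between the input and its round trip A -> B -> A.
    return mean_abs_diff(x, backward(forward(x)))

def saliency_loss(x, mapping, thr_in, thr_out):
    # Distance between the saliency masks before and after one mapping.
    return mean_abs_diff(soft_mask(x, thr_in), soft_mask(mapping(x), thr_out))

# Toy flattened image from domain A: dark background with a bright object.
image_a = [0.1] * 12 + [0.9] * 4

# Hypothetical content-preserving pair: an affine intensity change and its inverse.
def g_good(px):
    return [0.5 * p + 0.1 for p in px]

def f_good(px):
    return [(p - 0.1) / 0.5 for p in px]

# Hypothetical content-distorting pair: contrast inversion, which is its own inverse.
def g_bad(px):
    return [1.0 - p for p in px]

f_bad = g_bad

cycle_good = cycle_loss(image_a, g_good, f_good)
cycle_bad = cycle_loss(image_a, g_bad, f_bad)

# Thresholds are chosen per domain; 0.35 is the image of 0.5 under g_good.
sal_good = saliency_loss(image_a, g_good, thr_in=0.5, thr_out=0.35)
sal_bad = saliency_loss(image_a, g_bad, thr_in=0.5, thr_out=0.5)

print("cycle loss (good, bad):", cycle_good, cycle_bad)
print("saliency loss (good, bad):", sal_good, sal_bad)
```

Both pairs give a cycle loss of essentially zero, so cycle consistency alone cannot tell them apart. Only the saliency term separates the content-preserving mapping (small loss) from the inverted one (loss close to 1). In an actual training setup these terms would be added to the adversarial losses of the two discriminators with weighting factors that are not given in the caption.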