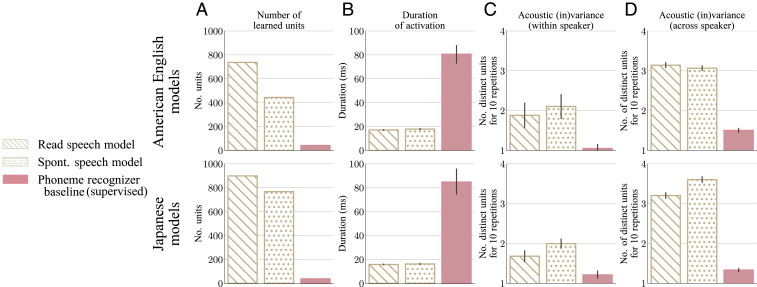

Fig. 4.

Diagnostic test results for our four unsupervised Gaussian mixture models (in beige) and phoneme-recognizer baselines trained with explicit supervision (in pink). (Upper) American English native models. (Lower) Japanese native models. Models are tested on read speech in their native language. (A) Number of units learned by the models. Gaussian mixtures discover 10 to 20 times more categories than there are phonemes in the training language, exceeding any reasonable count for phonetic categories. (B) Average duration of activation of the learned units. The average duration of activation of each unit is computed, and the average and SD of the resulting distribution over units are shown. Learned Gaussian units are activated, on average, for about a quarter of the duration of a phoneme. They are, thus, much too “short” to correspond to phonetic categories. (C) Average number of distinct representations for the central frame of the central phone across 10 repetitions of the same word by the same speaker, corrected for possible misalignment. The number of distinct representations is computed for each word type with sufficient repetitions in the test set, and the average and SD of the resulting distribution over word types are shown. The phoneme-recognizer baseline reliably identifies the 10 tokens as exemplars from a common phonetic category, whereas our Gaussian mixture models typically maintain on the order of two distinct representations, indicating representations too fine-grained to be phonetic categories. (D) As in C, but with repetitions of the same word by 10 speakers, showing that the learned Gaussian units are not speaker-independent. Spont., spontaneous.
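The statistics in panels B and C can be illustrated with a minimal sketch. It is not the code used to produce the figure. It assumes that each frame is represented by a single integer unit label (for instance, the most probable Gaussian), that frames are spaced 10 ms apart, and it omits the misalignment correction mentioned for panel C. All function names and the `FRAME_SHIFT_MS` constant are hypothetical.

```python
from itertools import groupby
from statistics import mean, pstdev

FRAME_SHIFT_MS = 10.0  # assumed frame spacing; not stated in the caption


def activation_durations(frame_labels, frame_shift_ms=FRAME_SHIFT_MS):
    """Map each unit to the durations (in ms) of its contiguous activations."""
    durations = {}
    # groupby yields one group per run of consecutive identical labels.
    for unit, run in groupby(frame_labels):
        run_length = sum(1 for _ in run)
        durations.setdefault(unit, []).append(run_length * frame_shift_ms)
    return durations


def duration_summary(frame_labels, frame_shift_ms=FRAME_SHIFT_MS):
    """Panel B style summary: mean and SD, over units, of per-unit mean duration."""
    per_unit = [mean(d) for d in activation_durations(frame_labels, frame_shift_ms).values()]
    return mean(per_unit), pstdev(per_unit)


def distinct_representations(central_frame_labels):
    """Panel C/D style count: distinct unit labels across repetitions of a word."""
    return len(set(central_frame_labels))


# Toy usage with made-up labels (not data from the study).
labels = [3, 3, 3, 7, 7, 3, 5]
print(activation_durations(labels))
print(duration_summary(labels))
print(distinct_representations([4, 4, 9, 4, 4, 9, 4, 4, 4, 4]))
```

Here the SD is the population SD over units; whether the figure uses the population or sample estimator is not specified in the caption.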