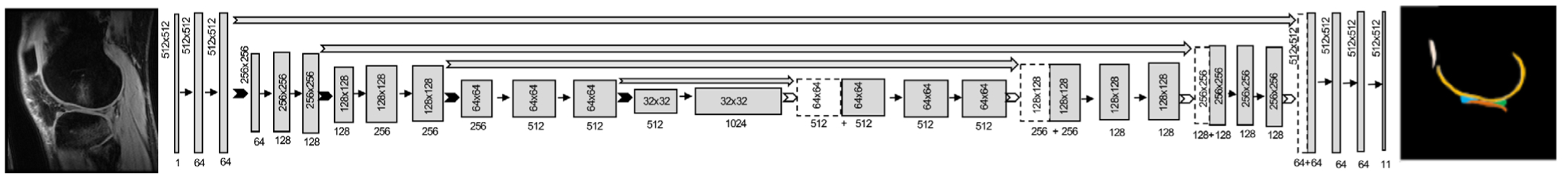

FIGURE 10:

The architecture of the 2D U-Net encoder-decoder convolutional neural network. In the encoder, an input image of 512 × 512 pixels is successively downsampled (dimensions in gray box) by 2 × 2 max pooling layers, while the number of feature maps (dimensions under gray box) increases. In the decoder, the feature maps are correspondingly upsampled by transposed convolutions to produce a segmentation mask with the same dimensions as the input image, with labels for multiple tissues in the knee.
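The shape bookkeeping described in the caption can be traced with the minimal Python sketch below. It follows each level's spatial size and feature-map count through the encoder and decoder. The depth (4 pooling steps), base width (64 feature maps), number of output classes (5), and the use of size-preserving padded convolutions are hypothetical placeholders chosen in the style of the original U-Net. They are not values read from Figure 10.

```python
def unet_shapes(input_size=512, depth=4, base_features=64, num_classes=5):
    """Trace (spatial size, feature maps) through a 2D U-Net.

    Hypothetical assumptions (not taken from Figure 10): padded convolutions
    that preserve spatial size, 2x2 max pooling with stride 2, feature maps
    doubling at each encoder level, 2x2 stride-2 transposed convolutions in
    the decoder, and num_classes tissue labels (placeholder value).
    """
    encoder = []
    size, channels = input_size, base_features
    for level in range(depth + 1):
        encoder.append((size, channels))
        if level < depth:
            # 2x2 max pooling with stride 2 halves each spatial dimension
            size //= 2
            # The next encoder level's convolutions double the feature maps
            channels *= 2

    decoder = []
    for _ in range(depth):
        # A 2x2 stride-2 transposed convolution doubles each spatial dimension
        size *= 2
        # Each decoder level ends with half the feature maps of the level below
        channels //= 2
        decoder.append((size, channels))

    # A final 1x1 convolution maps features to per-pixel class scores,
    # so the mask has the same spatial size as the input image
    output = (size, num_classes)
    return encoder, decoder, output


if __name__ == "__main__":
    enc, dec, out = unet_shapes()
    print("encoder:", enc)
    print("decoder:", dec)
    print("output:", out)
```

Under these assumptions, the 512 × 512 input shrinks to 32 × 32 with 1024 feature maps at the bottleneck, and the decoder restores it to a 512 × 512 mask. Changing `depth` or `base_features` shows how the same pattern scales.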