Abstract

The Covid-19 pandemic has fostered a pervasive use of facemasks all around the world. While they help in preventing infection, there are concerns related to the possible impact of facemasks on social communication. The present study investigates how emotion recognition, trust attribution and re-identification of faces differ when faces are seen without a mask, with a standard medical facemask, and with a transparent facemask restoring visual access to the mouth region. Our results show that, in contrast to standard medical facemasks, transparent masks significantly spare the capability to recognize emotional expressions. Moreover, transparent masks spare the capability to infer trustworthiness from faces with respect to standard medical facemasks which, in turn, dampen the perceived untrustworthiness of faces. Remarkably, while transparent masks (unlike standard masks) do not impair emotion recognition and trust attribution, they seemingly do impair the subsequent re-identification of the same, unmasked, face (like standard masks). Taken together, this evidence supports a dissociation between mechanisms sustaining emotion and identity processing. This study represents a pivotal step in the much-needed analysis of face reading when the lower portion of the face is occluded by a facemask.

Subject terms: Psychology, Human behaviour, Emotion, Social behaviour

Introduction

Once a rarity outside healthcare, by mid-2020 facemasks had become a pervasive feature in the everyday lives of many citizens, and some local authorities made their use compulsory in many circumstances. Pervasive mask-wearing turns out to have, however, two problematic side-effects. First, by making the mouth invisible, facemasks potentially inhibit the capability to perceive a great deal of social information of the utmost relevance for everyday interactions across several social contexts. To begin with, the mask can interfere with the recognition of its bearer’s emotional state. Moreover, since affective displays are also thought to affect first impressions of trustworthiness1, facemasks may also alter the perceived trustworthiness of unknown mask-bearers. By making emotional displays harder to interpret, facemasks may also compromise facial mimicry and behavioral synchrony which, in turn, boost social bonds, empathy and playful interactions2–7. The social information loss is even more dramatic in people with hearing deficits, since facemasks impair lip-reading and (to a lesser extent) sign language, which often relies on mouth movements among other things. Second, but equally important, facemasks may jeopardize not only the recognition of emotions and trustworthiness but also the re-identification of a previously observed (masked) face. Incidentally, this is the reason why criminals routinely use facemasks.

The impact of facemasks on emotion recognition, trustworthiness and face identity, however, is not necessarily of the same degree. The most prominent theory on face perception suggests that the recognition of emotional expressions and face identity are distinct perceptual processes encoded by independent psychological8 and neural9,10 mechanisms, with emotions and other social attributes heavily reliant on highly mobile facial regions, and facial identity mainly based upon invariant, static traits of the face. More specifically, as concerns emotion recognition and trust attribution, several experimental studies investigated the amount and type of social information conveyed by specific regions of the face, revealing that the mouth is pivotal in recognizing emotions, especially happiness11–14. Similarly, faces are judged as more trustworthy when the contrast of the mouth (and eye) regions is increased by means of experimental manipulations15. While this may suggest that facemasks could impair emotion recognition and trust attribution, by the time we ran this experiment almost every existing study had employed explicit experimental manipulations on the mouth (and other facial regions) rather than ecological stimuli such as actual facemasks. As for identity recognition, in contrast, recent findings suggest that the recognition of faces is not necessarily related to internal features16. A recent study shows that a mix of internal and external features seems to weigh more in the recognition of both familiar and unfamiliar faces17. The same study shows that the mouth seems to be the least relevant feature. Identity recognition is known to be based on configural processing that gets easily disrupted when the face percept is presented upside-down18 or its integrity is compromised due to other experimental manipulations19. How deeply the emotion-identity dissociation runs, and whether it depends upon different visual information and neural pathways, is still a matter of contention20,21.
Only very recently, some studies on face perception in presence of surgical facemasks highlighted the possible impact of facemasks on emotion recognition, trustworthiness and identity22–24. However, to the best of our knowledge, no study has tested whether these processes are differently impaired by different mask types, e.g. comparing standard facemasks and masks with a transparent window that uncovers the mouth region.

On a practical note, it is worth stressing that the social costs of using facemasks should not be considered as a reason against their adoption. Rather, a deeper understanding of the mechanisms underpinning the processing of emotion, trustworthiness and identity might lead—from an applicative perspective—to the development of new methods to mitigate the loss of social information and, at the same time, to maximize socio-sanitary benefits.

In the present study we recruited a cohort of 122 participants (47 females; age = 33 ± 8) who performed an online test to investigate to what extent (a) emotion recognition, (b) trust attribution and (c) re-identification are modulated by the presence of a standard mask occluding the entire mouth region, or a transparent mask restoring visual access to the mouth region. For these tasks, we used 48 different stimuli from the Karolinska Directed Emotional Faces (KDEF)25,26 and the Chicago Face Database (CFD)27 (see Experimental stimuli section). Items from KDEF were used for the emotion recognition and trust attribution tasks, while stimuli from CFD were employed for the trust attribution and re-identification tasks. Given that emotion and identity recognition rely on distinct processes, our expectation was that, by restoring (to a large extent) visual access to the mouth region, transparent masks would facilitate emotion and trustworthiness judgments, but not necessarily the re-identification of faces.

Results

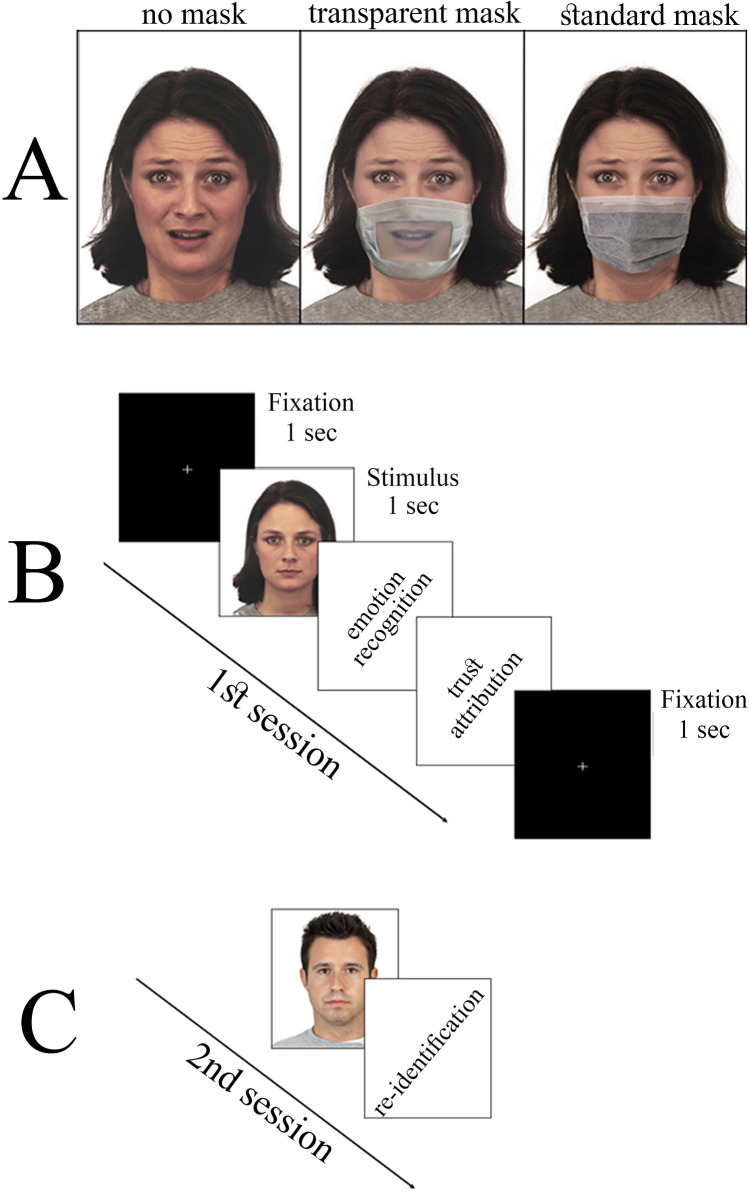

The present study was composed of two sessions. The first session was aimed at evaluating emotion recognition and trust attribution during the observation of faces posing emotional expressions (Fear, Sadness, Happiness and Neutral). Emotional expressions were posed while wearing either (1) standard masks occluding the entire mouth region (SM), (2) transparent masks restoring visual access to the mouth region (TM), or (3) no masks (NM; see Fig. 1A). For each picture, participants were requested (a) to recognize the posed emotion, selecting between 4 options (Fear, Sadness, Happiness and Neutral), and (b) to rate the perceived trustworthiness on a 6-point Likert scale (Fig. 1B). We used a mixed experimental design. Due to the constraints of an online procedure, the presence (and type) of facemask was manipulated between subjects: each participant performed only one of the three conditions (i.e., they saw only faces with standard masks, only faces with transparent masks, or only unmasked faces). Emotion, on the other hand, was manipulated within subjects: in order to assess differences between emotions, each participant evaluated all four emotions for each of the selected faces. The second session consisted of a “re-identification task” aimed at investigating the impact of SM and TM on the re-identification of face identity. Pictures of unmasked faces were presented to participants, some of which had already been presented during the first session, and participants were required to judge whether or not they had seen each face before (Fig. 1C).

Figure 1.

Experimental paradigm. (A). Stimuli consisted of faces from the Karolinska Directed Emotional Faces database (KDEF) and from a subset of the Chicago Face Database (CFD) validated for Italian subjects26, presented in unmasked fashion (NM, left panel), or masked with either transparent (TM, central panel) or standard (SM, right panel) medical facemasks. (B). In the first experimental session, participants were presented with KDEF and CFD stimuli shown in NM, TM or SM condition. After each presentation, they were required to indicate the posed emotion, selecting between 4 options (Fear, Sadness, Happiness and Neutral) and, subsequently, to rate the perceived trustworthiness on a 6-point Likert scale. (C). In the second experimental session, participants were presented with pictures of unmasked faces from the CFD database. One third (4/12) of the presented stimuli had also been presented in the first session in one of the three Conditions (SM, TM, NM). For each picture, participants were required to judge whether or not they had seen the face before.

Emotion recognition in masked and unmasked faces

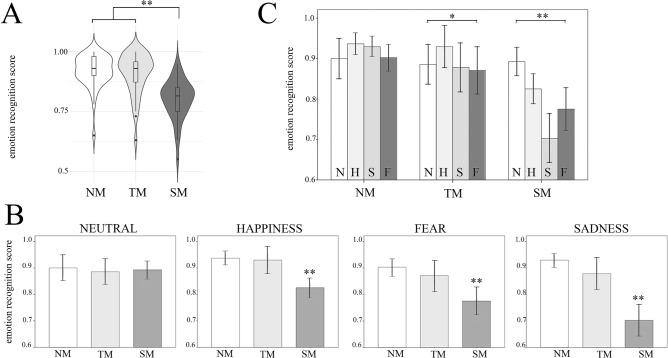

Results concerning the emotion recognition task were based on the scores given by each participant to the Karolinska Directed Emotional Faces (KDEF)25,26 stimuli (n = 40), posing Happiness, Sadness, Fear or Neutral expressions. We found a significant main effect of Condition (TM, SM, NM), indicating that the presence/type of mask affected the ability to recognize the posed emotions (χ2(2, N = 122) = 40.53, p < 0.001, pMC < 0.001, 99%CI [0.000, 0.001], ε2 = 0.335). Post-hoc analysis (Mann–Whitney) showed that recognition was significantly worse in SM (N = 40, Mdn = 0.81, 95%CI [0.79, 0.83]) than in both NM (N = 41, Mdn = 0.93, 95%CI [0.93, 0.93]; U = 179, p < 0.001, r = 0.67) and TM (N = 41, Mdn = 0.93, 95%CI [0.93, 0.93]; U = 303, p < 0.001, r = 0.54). In contrast, no difference was found between NM and TM (U = 802, p = 0.722, r = 0.03), suggesting that accuracy in TM was comparable to that in NM, and that accuracy in SM was significantly lower than in the other conditions (see Fig. 2A).

Figure 2.

Emotion recognition task. (A). Violin plots depicting the accuracy of the emotion recognition task, regardless of the posed emotion. Results show a significant main effect of Condition (TM, SM, NM). Emotion recognition in SM was significantly worse than both NM and TM, while no difference was found between NM and TM. (B). The figure illustrates, for each emotion, the impact of the presence/type of mask. Significant effects were found for Happiness, Sadness and Fear, with a significant drop for SM with respect to both TM and NM. No effect was found for the Neutral faces. (C). The figure illustrates, for each condition, which emotions were more affected by the presence/type of mask. Results show a mild but significant main effect of Emotion in TM and a stronger effect in SM, but no effect in NM. See Table 1 for post-hoc results. Error bars indicate confidence intervals. Horizontal bars indicate significant results (*p < .01; ** p < .001). Abbreviations: N: neutral, H: happiness, S: sadness, F: fear.

Since facemasks could have a different impact on the four Emotions (Fear, Sadness, Happiness and Neutral), we ran four Kruskal–Wallis tests to verify whether each emotion was significantly affected by the presence of the mask. We found a significant main effect of Condition (NM, SM, TM) for Happiness, Sadness and Fear (H: χ2(2, N = 122) = 28.69, p < 0.001, ε2 = 0.237; S: χ2(2, N = 122) = 37.52, p < 0.001, ε2 = 0.310; and F: χ2(2, N = 122) = 19.64, p < 0.001, ε2 = 0.162). In contrast, no effect was found for the Neutral faces (N: χ2(2, N = 122) = 1.11, p = 0.573, ε2 = 0.009) (Fig. 2B). As concerns Happiness, Sadness and Fear, subsequent post-hoc analysis showed a significant drop for SM with respect to both TM and NM (p < 0.001; Mann–Whitney tests).
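The effect sizes reported with the Kruskal–Wallis tests in this subsection can be checked by hand. The sketch below assumes that ε2 was obtained with the common formula ε² = H / (N − 1), where H is the Kruskal–Wallis statistic (reported here as χ2) and N the total sample size. The source does not state which formula was used, so this is an assumption, although it reproduces the reported values to three decimals. The function name `epsilon_squared` is ours.

```python
def epsilon_squared(h_statistic: float, n_total: int) -> float:
    """Epsilon-squared for a Kruskal-Wallis test, assuming eps^2 = H / (N - 1)."""
    if n_total < 2:
        raise ValueError("n_total must be at least 2")
    return h_statistic / (n_total - 1)


# (H statistic, reported epsilon-squared), copied from the text; N = 122 throughout.
REPORTED = {
    "overall accuracy": (40.53, 0.335),
    "Happiness": (28.69, 0.237),
    "Sadness": (37.52, 0.310),
    "Fear": (19.64, 0.162),
    "Neutral": (1.11, 0.009),
}

for label, (h_value, reported_eps) in REPORTED.items():
    computed = epsilon_squared(h_value, 122)
    print(f"{label}: computed {computed:.3f}, reported {reported_eps:.3f}")
```

Under this assumption, the three emotional expressions show effect sizes between roughly 0.16 and 0.31, whereas the Neutral expression is close to zero.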

To further investigate which emotions were more or less affected by the presence/type of mask, we ran three Friedman tests on the emotion recognition scores, one for each Condition. Coherently with our hypotheses, the test for NM failed to show a main effect of Emotion (F, S, H, N: χ2(3, N = 41) = 3.81, p = 0.282, W = 0.031), suggesting that participants recognized all the unmasked expressions to the same degree (Fig. 2C). In contrast, in both TM and SM, we found a significant effect of Emotion (F, S, H, N) (TM, χ2(3, N = 41) = 10.36, p = 0.016, W = 0.084; SM, χ2(3, N = 40) = 23.22, p < 0.001, W = 0.193). More specifically, as concerns TM, the ability to correctly recognize emotions was significantly better preserved in the case of Happiness, compared to Neutral (p < 0.05), Sadness (p < 0.05) and Fear (p < 0.01) expressions. As concerns SM, in contrast, the recognition of the Neutral expression was significantly better preserved than that of all emotional expressions (p < 0.05 for H and p < 0.005 for S and F), and Sadness was the most affected expression (p < 0.001 for N and H; p < 0.05 for F; see Fig. 2C and Table 1 for the whole pattern and the post-hoc analyses).
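The W values reported with the Friedman tests above can be related to the χ2 statistics through Kendall's coefficient of concordance. The sketch below assumes the standard relation W = χ² / (N (k − 1)), with k = 4 expressions; the source does not spell out the formula, so this is an assumption. For SM we take N = 40, the SM group size given elsewhere in the Results, since that value is the one consistent with the reported W. The function name `kendalls_w` is ours.

```python
def kendalls_w(chi_square: float, n_subjects: int, k_conditions: int) -> float:
    """Kendall's W from a Friedman chi-square, assuming W = chi2 / (N * (k - 1))."""
    if n_subjects < 1 or k_conditions < 2:
        raise ValueError("need at least 1 subject and 2 conditions")
    return chi_square / (n_subjects * (k_conditions - 1))


# (Friedman chi-square, group size, reported W), copied from the text.
EMOTION_RECOGNITION = {
    "NM": (3.81, 41, 0.031),
    "TM": (10.36, 41, 0.084),
    "SM": (23.22, 40, 0.193),  # N = 40 is the SM group size (assumption, see lead-in)
}

for condition, (chi2, n, reported_w) in EMOTION_RECOGNITION.items():
    computed = kendalls_w(chi2, n, k_conditions=4)
    print(f"{condition}: computed W = {computed:.4f}, reported W = {reported_w:.3f}")
```

Read this way, W grows from near zero without a mask to about 0.19 with a standard mask, which matches the verbal description of a mild effect in TM and a stronger one in SM.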

Table 1.

The table illustrates, for each condition, the results of the post-hoc analyses (following the Friedman tests) comparing pairs of posed expressions in both the “Emotion recognition task” (upper panel) and the “Trust attribution task” (lower panel).

| Task | Condition | Expression | Neutral | Happiness | Sadness | Fear |
|---|---|---|---|---|---|---|
| Emotion recognition | No Mask | N | – | −1.24 | −0.93 | −0.08 |
| | | H | 1.24 | – | 0.36 | 1.67 |
| | | S | 0.93 | −0.36 | – | 1.39 |
| | | F | 0.08 | −1.67 | −1.39 | – |
| | Transparent | N | – | −2.3* | 0.45 | 1.01 |
| | | H | 2.3* | – | 2.41* | 2.72* |
| | | S | −0.45 | −2.41* | – | 0.51 |
| | | F | −1.01 | −2.72* | −0.51 | – |
| | Standard | N | – | 2.37* | 4.21* | 2.99* |
| | | H | −2.37* | – | 3.43* | 1.69 |
| | | S | −4.21* | −3.43* | – | −2.29* |
| | | F | −2.99* | −1.69 | 2.29* | – |
| Trust attribution | No Mask | N | – | −3.64* | 0.79 | 1.78 |
| | | H | 3.64* | – | 3.41* | 4.02* |
| | | S | −0.79 | −3.41* | – | 2.33* |
| | | F | −1.78 | −4.02* | −2.33* | – |
| | Transparent | N | – | −4.01* | 0.03 | 1.58 |
| | | H | 4.01* | – | 3.35* | 4.13* |
| | | S | −0.03 | −3.35* | – | 1.95* |
| | | F | −1.58 | −4.13* | −1.95* | – |
| | Standard | N | – | −5.15* | −0.88 | 0.15 |
| | | H | 5.15* | – | 4.65* | 4.77* |
| | | S | 0.88 | −4.65* | – | 1.64 |
| | | F | −0.15 | −4.77* | −1.64 | – |

Reported values indicate the Z statistic of the Wilcoxon signed-rank test post hoc comparisons (Bonferroni corrected). Asterisks signal significant results.

The direction of errors was analyzed with a chi-square test comparing, for each Emotion (N, H, S, F), the actual responses with the corresponding expected values; it showed a significant effect in all conditions (p < 0.0001). Emotions whose real values were significantly higher than the expected ones (i.e. whose chi-square value exceeds the average value for that emotion) were the following. In both SM and TM, Neutral expressions were mistaken for Sad expressions. In addition, in TM both negative emotions (Sadness and Fear) were mistaken for Neutral expressions. SM, in contrast, showed a different trend, with the two negative emotions (Sadness and Fear) mistaken for each other. In addition, Happiness was frequently mistaken for Neutral (see Table 2).

Table 2.

The table indicates the direction of errors in the emotion categorization task.

| Condition | Response | Neutral | Happiness | Sadness | Fear |
|---|---|---|---|---|---|
| No Mask | N | 369 (0.1) | 17 (2.8) | 11 (0.0) | 19 (5.2) |
| | H | 2 (7.7) | 384 (0.2) | 3 (6.1) | 1 (9.4) |
| | S | 36 (53.7)* | 8 (1.0) | 381 (0.1) | 20 (6.6) |
| | F | 3 (6.1) | 1 (9.4) | 15 (1.2) | 370 (0.1) |
| Transparent | N | 363 (0.0) | 18 (0.6) | 32 (19.6)* | 29 (13.3)* |
| | H | 9 (2.3) | 381 (0.7) | 0 (14.9) | 2 (11.2) |
| | S | 34 (24.4)* | 8 (3.2) | 360 (0.1) | 22 (3.4) |
| | F | 4 (8.0) | 3 (9.5) | 18 (0.6) | 357 (0.2) |
| Standard | N | 357 (4.4) | 61 (43.5)* | 38 (4.6) | 32 (1.0) |
| | H | 3 (21.2) | 330 (0.3) | 4 (19.4) | 7 (14.7) |
| | S | 29 (0.2) | 7 (14.7) | 281 (4.6) | 51 (21.8)* |
| | F | 11 (9.3) | 2 (23.0) | 77 (93.8)* | 310 (0.3) |

For each condition, columns indicate the presented emotions (n = 410 trials for NM and TM, and n = 400 trials for SM). Rows illustrate the responses given by participants, and the chi-square value calculated by comparing each cell with the corresponding expected value (χ2 in parentheses). Cells in bold indicate cells whose individual chi-square value exceeds the average value in that condition. Asterisks indicate the comparisons where the real value was significantly higher than the expected one.
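The per-cell chi-square values in Table 2 can be illustrated with a short sketch. The source does not say how the expected values were derived. The code below assumes, purely as an inference from the published numbers, that the expected count for a correct response is the mean of the four diagonal cells of a condition and the expected count for an error is the mean of its twelve off-diagonal cells. With that assumption the contribution (O − E)² / E reproduces the parenthesized values of the No Mask block. The function name `cell_chi_square` is ours.

```python
# Response counts for the No Mask block, copied from Table 2.
# Rows are the responses given (N, H, S, F); columns are the emotions presented.
LABELS = ["N", "H", "S", "F"]
NO_MASK = [
    [369, 17, 11, 19],
    [2, 384, 3, 1],
    [36, 8, 381, 20],
    [3, 1, 15, 370],
]


def cell_chi_square(counts):
    """Return (O - E)^2 / E per cell.

    Assumption (not stated in the source): E is the mean correct count for
    diagonal cells and the mean error count for off-diagonal cells.
    """
    size = len(counts)
    hits = [counts[i][i] for i in range(size)]
    errors = [counts[i][j] for i in range(size) for j in range(size) if i != j]
    expected_hit = sum(hits) / len(hits)
    expected_error = sum(errors) / len(errors)
    result = []
    for i in range(size):
        row = []
        for j in range(size):
            expected = expected_hit if i == j else expected_error
            row.append((counts[i][j] - expected) ** 2 / expected)
        result.append(row)
    return result


contributions = cell_chi_square(NO_MASK)
for label, row in zip(LABELS, contributions):
    print(label, [round(value, 1) for value in row])
```

In this block the largest contribution belongs to the cell where a Neutral face was labeled as Sad (36 responses), which is the cell marked with an asterisk in the No Mask rows.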

Trust attribution to masked and unmasked faces

The effect of masks on trustworthiness has been studied by means of two distinct analyses, targeting stimuli of the Chicago Face Database (CFD)27 validated for trustworthiness (n = 8), and KDEF stimuli posing emotional expressions (n = 40), respectively.

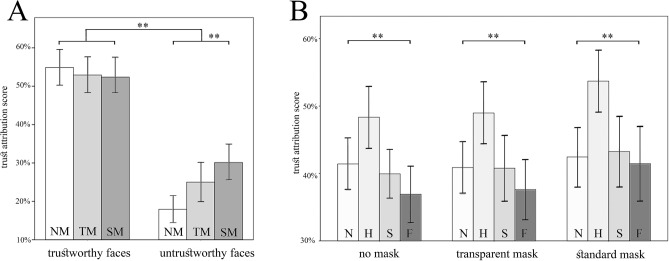

The first analysis investigated to what extent the ratings of trustworthiness attributed to the CFD pictures are influenced by the presence/type of mask. A Kruskal–Wallis test applied to all CFD stimuli across the three Conditions (NM, SM, TM) showed no effect (χ2(2, N = 122) = 3.64, p = 0.161, ε2 = 0.030). We then subdivided the stimuli into two sets, i.e. untrustworthy and trustworthy faces, in accord with previous results29,30, confirming that the untrustworthy stimuli obtained significantly lower scores (Z = −9.23, p < 0.001, r = 0.836; Wilcoxon signed-rank test). The same analysis applied to the two sets of stimuli showed that, while no significant effect was obtained for the faces rated as trustworthy (χ2(2, N = 122) = 0.52, p = 0.770, ε2 = 0.004), the untrustworthy faces showed a significant effect of Condition (NM, SM, TM) (χ2(2, N = 122) = 13.16, p = 0.001, pMC = 0.002, 99%CI [0.000, 0.004], ε2 = 0.109) (Fig. 3A). Mann–Whitney post-hoc analysis showed a significant increase of the trust scores assigned to the untrustworthy faces in the SM condition (Mdn = 30%, 95%CI [27.5, 30]) compared to the NM condition (Mdn = 20%, 95%CI [15, 25]) (U = 429, p < 0.001, r = 0.41) and, albeit not reaching significance, to the TM one (Mdn = 20%, 95%CI [20, 30]) (U = 636, p = 0.057, r = 0.21), indicating that untrustworthy faces are rated as “less untrustworthy” when wearing TM and, even more so, when wearing SM. The same procedure applied to the KDEF stimuli gave no significant results (χ2(2, N = 122) = 2.32, p = 0.313, ε2 = 0.019).
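The effect size r reported for the Wilcoxon signed-rank comparison of trustworthy and untrustworthy faces can be recovered from the Z statistic. The sketch below assumes the usual conversion r = |Z| / √N with N = 122 participants; the source does not state the formula, so this is an assumption, but it yields the reported value. The function name `r_from_z` is ours.

```python
import math


def r_from_z(z_statistic: float, n_observations: int) -> float:
    """Effect size r for a rank-based test, assuming r = |Z| / sqrt(N)."""
    if n_observations < 1:
        raise ValueError("n_observations must be positive")
    return abs(z_statistic) / math.sqrt(n_observations)


# Z and N as reported for the trustworthy vs. untrustworthy comparison.
effect_size = r_from_z(-9.23, 122)
print(f"r = {effect_size:.3f}")
```

The same conversion cannot be checked for the Mann–Whitney comparisons in this section, because only U and r are reported there, not Z.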

Figure 3.

Trust attribution task. (A). Results for trustworthy and untrustworthy CFD faces, showing a significantly lower score for untrustworthy faces. Stimuli rated as untrustworthy show a significant effect of Condition. See Table 1 for post-hoc results. (B). A main effect of Emotion was observed in each of the three conditions (NM, TM, SM) of the KDEF stimuli, with Happiness obtaining the highest scores. See Table 1 for post-hoc results. All conventions as in Fig. 2.

The second analysis investigated the effect of different emotions on trust attribution across the three conditions (NM, SM, TM) by analyzing the trust scores obtained by KDEF stimuli. A Friedman test applied to the three Conditions separately showed a main effect of Emotion in all Conditions (NM, χ2(3, N = 41) = 16.32, p = 0.001, W = 0.133; TM, χ2(3, N = 41) = 24.46, p < 0.001, W = 0.199; SM, χ2(3, N = 40) = 45.72, p < 0.001, W = 0.381) (Fig. 3B). In particular, Wilcoxon signed-rank tests for multiple comparisons showed that, in all conditions, Happy faces obtained a higher degree of trust with respect to both negative (Fear, Sadness) and Neutral expressions (p < 0.001). In addition, we found that Sad expressions were scored as more trustworthy than Fearful ones in NM (p < 0.05), and a similar trend was also observed in TM (p = 0.051; see Table 1).

Re-identification of masked and unmasked faces

The re-identification task was aimed at investigating the capability to correctly re-identify unmasked faces previously observed in masked (TM, SM) or unmasked (NM) fashion. To each participant, we presented pictures of unmasked faces (n = 12), some of which (n = 4) had already been presented in one of the three Conditions (SM, TM, NM) during the first session. For each picture, participants were required to judge whether or not they had seen the face before (Fig. 1C).

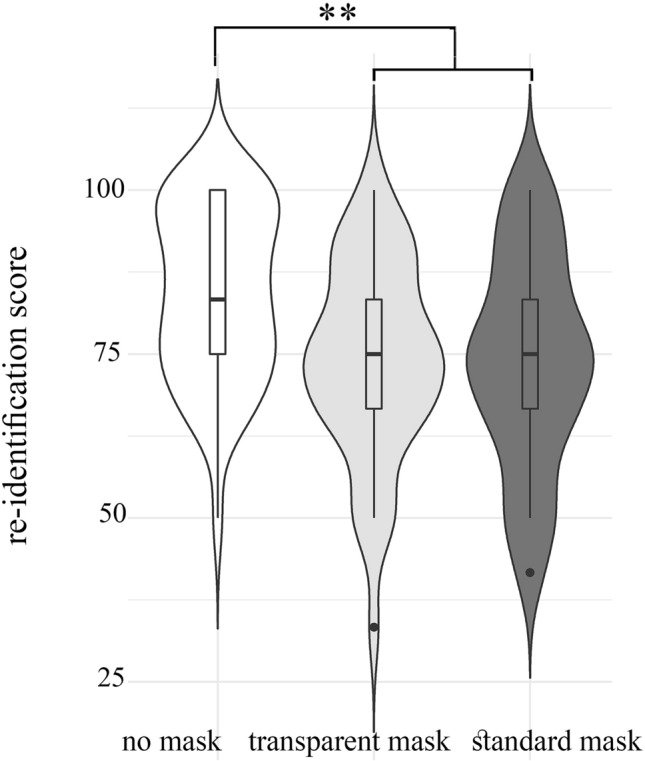

Results showed a significant effect of Condition (χ2(2, N = 122) = 11.64, p = 0.003, pMC = 0.003, 99%CI [0.001, 0.006], ε2 = 0.096, CV < 20%), suggesting that the presence/type of mask affects the subsequent re-identification of that face, presented in an unmasked fashion (Fig. 4). Post-hoc analysis (Mann–Whitney test) showed that, as expected, unmasked faces were identified significantly better when previously presented without masks (NM; Mdn = 83.33%, 95%CI [83.33, 83.33]) than when previously presented with either TM (Mdn = 75%, 95%CI [66.67, 83.33]) (U = 520, p = 0.003, r = 0.33) or SM (Mdn = 75%, 95%CI [66.67, 75]) (U = 520, p = 0.004, r = 0.32). More interestingly, no statistically significant difference was found between the SM and TM conditions (U = 812, p = 0.943, r = 0.08), indicating that both masks equally impair the subsequent re-identification of that face. Moreover, to take possible random fluctuations into account, we first checked for any response bias (e.g., participants who could have always responded “yes” or “no”), finding no outliers using a 1.5 × IQR criterion. We then repeated the Kruskal–Wallis test on the re-identification task considering previously seen faces and unseen faces separately. To do so, the recall scores were recoded so that, for previously seen faces, 1 point was assigned for each correctly identified face and 0 points for each error (i.e., “No, I’ve never seen this face”). Conversely, for previously unseen faces, 1 point was assigned to the right answer (i.e., “No, I’ve never seen this face”) and 0 points for each error (i.e., “Yes, I’ve already seen this face”). The effect was significant for previously seen faces (χ2(2, N = 122) = 6.68, p = 0.029, ε2 = 0.055) but not for unseen faces (χ2(2, N = 122) = 5.60, p = 0.069, ε2 = 0.046).
This additional result appears reasonable: subjects significantly failed to recognize already seen faces when these had been covered by a mask, but they were unlikely to report having seen a previously unseen face.
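The recoding described above, with one point for each correctly recognized seen face and one point for each correctly rejected unseen face, can be sketched as follows. This is a minimal illustration under our own assumptions: the function name `score_reidentification`, the boolean encoding of the answers, and the example participant are hypothetical and not taken from the study materials. The overall score is assumed to be the proportion of correct answers over the 12 faces, which is consistent with reported medians such as 83.33% (10/12) and 75% (9/12).

```python
from typing import Sequence, Tuple


def score_reidentification(
    previously_seen: Sequence[bool], said_seen: Sequence[bool]
) -> Tuple[float, float, float]:
    """Return (seen-face accuracy, unseen-face accuracy, overall accuracy).

    previously_seen[i] is True if face i was shown in the first session;
    said_seen[i] is True if the participant answered "Yes, I've already seen this face".
    """
    if len(previously_seen) != len(said_seen):
        raise ValueError("both sequences must have the same length")
    n_seen = sum(1 for seen in previously_seen if seen)
    n_unseen = len(previously_seen) - n_seen
    if n_seen == 0 or n_unseen == 0:
        raise ValueError("need at least one seen and one unseen face")
    # 1 point for each seen face answered "yes", 1 point for each unseen face answered "no".
    hits = sum(1 for seen, resp in zip(previously_seen, said_seen) if seen and resp)
    rejections = sum(
        1 for seen, resp in zip(previously_seen, said_seen) if not seen and not resp
    )
    overall = (hits + rejections) / len(previously_seen)
    return hits / n_seen, rejections / n_unseen, overall


# Hypothetical participant: 12 unmasked faces, the first 4 shown in session 1.
shown_before = [True] * 4 + [False] * 8
answers = [True, True, True, False] + [True] + [False] * 7
seen_acc, unseen_acc, overall_acc = score_reidentification(shown_before, answers)
print(f"seen: {seen_acc:.2%}, unseen: {unseen_acc:.2%}, overall: {overall_acc:.2%}")
```

Keeping the two accuracies separate mirrors the follow-up analysis, in which the effect of Condition was tested on seen and unseen faces independently.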

Figure 4.

Re-identification task. The analysis of the correct re-identification of unmasked faces previously observed in masked (TM, SM) or unmasked (NM) fashion shows a significant effect of Condition, indicating that the presence/type of mask affects the subsequent re-identification of that face, presented in an unmasked fashion. Boxplots within each violin represent interquartile ranges (IQRs). Black horizontal lines indicate medians and black points are outliers. All conventions as in Fig. 2.

Lastly, all previous analyses were controlled for gender, age, residence area, and level of education. None of these factors showed significant differences (all ps = NS). Moreover, when we checked the experiment duration in the whole sample (Mdn = 583 s, 95% CI [554, 608]), we did not find any alteration in response patterns (outliers), even when we took into account the features of the stimuli (i.e., trustworthy/untrustworthy; unseen/seen faces), suggesting that our manipulations did not affect the response strategies.

Discussion

In the present study we tested to what extent observing an individual wearing a standard (SM) or a transparent facemask (TM), rather than no mask (NM), alters emotion recognition and trust attribution, as well as incidental episodic memory of previously observed faces. We found that, as expected, standard masks (a) interfere with emotion recognition and trust attribution, and (b) make it harder to re-identify an already encountered face. More interestingly, we found that transparent masks (c) exert minimal to no effect on emotion recognition and trust attribution, but (d) complicate re-identification as much as standard masks. In the following sub-sections we briefly discuss each of these aspects along with some possible implications.

Emotion recognition and facemasks

Observing emotional expressions in individuals wearing different types of masks alters the observer’s processing of emotion in a different manner. In particular, while standard masks impair the detection of facial displays, transparent masks—which restore visual access to the mouth region—have virtually no effects on emotion recognition, leading to results that are comparable to those obtained when the face is fully visible. Of note, this effect was particularly strong in the case of the three emotional expressions, but virtually absent in the case of the neutral expression, which was indeed correctly recognized in all conditions.

The evidence that transparent masks do not impair the recognition of emotions suggests that emotional displays are largely detected on the basis of specific individual details, and the mouth in particular, rather than on a holistic processing of the whole face. This hypothesis is in line with a large body of data highlighting the role of the mouth region in the recognition of many emotional expressions, and in particular happiness13,28–30. It thus suggests that transparent masks provide a workable alternative to standard masks to face the Covid-19 emergency and, at the same time, to allow individuals to share emotions and to convey face-mediated social intentions and non-verbal communication in a standard fashion.

As concerns the standard masks, we observed an overall impairment in emotion recognition, as reported in a previous study22. However, the obstruction of information from the mouth region does not have the same impact on all four emotions. Not only does the standard mask spare the detection of the neutral expression, it also has a particular impact on the recognition of sadness. Indeed, the mean accuracy drop for sadness recognition (from 93% in NM to 70% in SM) is more than twice that of happiness recognition (from 94% in NM to 83% in SM). This is particularly intriguing, because the mouth is known to be particularly relevant for detecting happiness over other negative emotions31. However, this may be due to a ceiling effect in the NM condition. Moreover, that happiness is easier to recognize than other emotions is shown both by classical literature on emotion recognition in normal conditions32–34 and by recent literature that makes recognition harder by adding visual noise11,35. Hence, the addition of a (standard) mask might simply have “unmasked” the higher difficulty of recognizing sadness as compared to happiness. It has been speculated that this higher performance might depend on the fact that, within our stimuli, happiness is the only positive emotion, among the classical six basic emotions36. In fact, Table 2 reveals that in the standard mask condition sad faces were often mistaken for fearful faces, and vice versa. Moreover, while in some cases participants confused either positive (happy) or negative (fearful or sad) expressions with neutral ones, they almost never reversed the valence polarity of expressions (i.e., they seldom confused positive and negative faces; see also Fig. 5 in Carbon’s study22).
It is thus likely that, while full facial information facilitates the recognition of a specific emotion category, less facial information (from the upper part) is sufficient to correctly assess the valence of some facial expression, as if valence is redundantly expressed by several facial features. Indeed, the view that the recognition of valence from facial expression (that Russell dubbed “minimal universality”36) is more fundamental than that of specific emotion categories seems supported both on the ground of developmental psychology37, and by recent cross-cultural studies38. Further research may directly address the hypothesis that minimal universality in emotion recognition “pierces the mask”.

Another interesting finding is that, consistently with a previous study22, masks make no difference in identifying a face as neutral rather than emotional. While people do seem to treat “neutral face” as a proper category39, the emotional meaning of neutral faces may be influenced by the context40. However, previous literature strongly suggests that the emotional neutrality of a face can be easily decoded from the eyes41,42. On a more practical side, the data suggest that transparent masks almost entirely avoid the “emotional screening” effect of standard masks. Indeed, the accuracy of emotion recognition of faces wearing transparent masks is almost comparable to that obtained with unmasked faces, and significantly better than that obtained with faces wearing standard masks, for all emotions.

Trustworthiness and facemasks

The effect of masks on trustworthiness has been studied by means of two distinct analyses. First, we established to what extent the ratings of trustworthiness attributed to the CFD pictures—where scores for trustworthiness of unmasked faces are validated—are influenced by the presence/type of mask. While the perceived trustworthiness of faces validated as “trustworthy” in the CFD remains stable between NM, TM and SM conditions, things go differently once we consider those faces that, according to the CFD, are “untrustworthy”. In other words, the low trust judgments on untrustworthy faces in the “no mask” condition are consistent with those of the CFD, but their scores are less negative in faces wearing transparent masks, and even less so in faces wearing standard masks—albeit they never reach the score of trustworthy faces. In a sense, it looks like “untrustworthiness” gets screened by masks.

The second analysis, aimed at exploring the link between emotions and trustworthiness, was performed on the results obtained from the presentation of the KDEF stimuli where, however, validated scores for trustworthiness are not available. Here, we found that the posed emotional expression significantly affects the degree of attributed trust, with sad expressions rated as more trustworthy than fearful ones in both NM and TM conditions, but similar in the SM condition. Such assimilation of transparent masks and unmasked faces is in line with our previous observation that these conditions are perceived as very similar, with respect to the standard masks (see above). The fact that sadness has a lower impact on trust than other emotions with negative valence, e.g. fear and anger, is already documented in the literature43,44: a possible explanation considers that sadness is not necessarily directed at someone, whereas both fear and anger typically have a specific target. If that target is the trustor, then this gives a good reason not to trust that trustee, since it would be risky to rely on someone who is scared of us or mad at us. But even if the emotional target is perceived as external to the trust relationship, it may elicit the assumption that the trustee’s attention is directed elsewhere, which in turn makes him/her not particularly trustworthy. These reasons against trust are absent with respect to sad trustees. Moreover, a sad face is often perceived as particularly vulnerable. In turn, a vulnerable/weak person might be seen as unlikely to defect or backstab the observer, and thus more deserving of trust.

Happy faces, in contrast, lead to higher degrees of assigned trust over both neutral and negative (sadness and fear) expressions in all conditions, that is, regardless of the presence/type of mask. The high scores in trustworthiness obtained by happy faces can be easily explained by the intrinsic affiliative and approach-oriented nature of smile and laughter3,45–48 and by the evidence that observing such expressions induces automatic facial mimicry and emotional contagion49,50. Note also that observing happy expressions boosts the activation of the same emotional regions controlling the production of the same positive expression, namely a “mirror mechanism” for laughter and smiling45,49,51. The high scores obtained by happy expressions can also be explained from a dimensional standpoint and, in particular, from the hypothesis that trust attribution depends on a combination of valence and dominance52. Following this view, valence and dominance are established through a process of overgeneralization of their similarity with emotional expressions and with cues that signal physical strength, respectively53. In a slogan: the more a face expresses willingness to harm and physical strength, the less trust we attribute to its bearer. Indeed, the trust scores assigned to the emotional and neutral facial stimuli drawn from the KDEF suggest a positive correlation between valence and trustworthiness, with faces expressing happiness rated as more trustworthy than those expressing negative emotions (fear or sadness) or no emotion. However, it is likely that valence alone is not enough: indeed, while sad and fearful faces both express negative emotions, the former are judged as slightly more reliable than the latter in both unmasked faces and transparent masks. This trend vanishes in the case of standard masks, possibly because participants in that condition had more trouble distinguishing between sad and fearful faces.

If trust is based on both valence and dominance, which dimension drives this screen-off? Tentative as it may be, the evidence about emotion recognition is at least suggestive that valence perception is not screened by facemasks. Moreover, the trust judgments of emotional faces discussed above reveal the same pattern of positive correlation between valence and trustworthiness across all three conditions. It is then reasonable to assume that masks screen untrustworthiness by partially obstructing cues relevant for dominance estimation. These findings are in line with a positive correlation between perceived dominance and facial width-to-height ratio (fWHR)54. Since the width of the face is measured on the basis of the distance between the cheekbones, which are partially covered by the masks, it is possible that the untrustworthiness-screening effect of masks is mediated by the obstruction of the zygomaticus region, which yields dominance-related cues.

Intriguingly, happy faces turned out to be perceived as significantly more trustworthy in the standard mask than in either the no mask or the transparent mask condition. Prima facie, this seems at odds with the fact that happy faces are less often recognized as such when covered by a standard mask. While we cannot formulate any definitive explanation for this puzzling finding, three observations are in order. First, we know that some affective properties of happy faces are perceived subconsciously55, and that subconscious perception is sufficient to elicit emotional contagion56. As such, it is possible that the positivity of these faces was unavailable during a conscious categorization task, but still managed to elicit a contagion subconsciously scaffolding trustworthiness judgment. Second, note that the higher trustworthiness observed in the standard mask condition is not limited to the case of happy faces, but is pervasive in each emotion condition. This seems in line with recent findings suggesting that masked faces are perceived on average as more trustworthy than unmasked faces23. We speculate that, as the study was conducted during the pandemic, facemasks are taken as a proxy of social compliance and caring. This effect does not occur for transparent facemasks, possibly because they are not perceived as a medical tool to counter the pandemic. Third, facemasks may alter the estimated distance between cheekbones, possibly inflating the perceived fWHR in our stimuli. As mentioned above, a larger fWHR may lead to higher dominance scores; and higher dominance usually implies higher trustworthiness when paired with positive valence, as in the case of happy faces.

Re-identification and facemasks

The analysis performed on the re-identification task showed a significant drop in accuracy when faces were first seen with a mask, as compared to when they were first seen without masks. As expected, individuals previously presented without masks were re-identified better than unmasked individuals previously presented with a mask. This is likely explained by the fact that encoding and retrieval were matched in the unmasked but not in the masked conditions. More interesting, in contrast, is the lack of difference between the performances obtained with transparent and standard masks. Indeed, while the translucent window of transparent masks prevents the impairment in emotion recognition that characterizes standard masks, and only slightly influences untrustworthiness judgments, when it comes to identity recognition transparent masks are as problematic as standard ones, suggesting that the latter task relies on a different process. How can such a dissociation be explained?

Previous studies demonstrate that the recognition of unfamiliar faces (such as those tested in the present study) largely depends on the visibility of external (as opposed to internal) features of the face. However, a recent study verified that the recognition of both familiar and unfamiliar faces is similarly impaired when standard masks are worn. That very same study urged the development of “transparent face coverings that can reduce the spread of disease, while still allowing the identification of the individual underneath”24. Based on our results, we predict that transparent masks like those we employed in this study (i.e. that only uncover the mouth region) will not meet this goal. Indeed, since the mouth size has been shown to be the least relevant feature for identifying both familiar and unfamiliar faces17, and given that transparent masks only uncover the mouth region, while the external features of the face (e.g. the chin) remain covered, this may explain why they fail to mitigate the accuracy drop observed in the case of standard masks. It is important to bear in mind that what we are referring to constitutes a peculiar kind of re-identification, namely the incidental recognition of a previously observed, unfamiliar, face. This task should not be confused with other types of face identity-processing tasks, such as those related to visual memorization (e.g. the explicit recall to memory of a face), or to semantic processing (e.g. the explicit recognition of an individual’s name and identity). Indeed, participants were never told to memorize faces nor informed about the re-identification task that followed in the second session. In addition, all faces were unfamiliar. We opted for this methodology for the sake of a more ecological design, as incidental re-identification is very similar to what happens in our daily social contacts. Nonetheless, it would be interesting in future studies to investigate the impact of the facemask on intentionally learned or familiar faces.

Barring future studies showing that standard and transparent facemasks exert a different impact on familiar faces, this dissociation between emotion and re-identification seems in line with dual-route models of face perception positing that facial identity and emotional expressions are processed by separate cognitive mechanisms, triggered by distinct visual features8,20,57. It is widely accepted that, in normal conditions and in healthy observers, the process of identity recognition relies on the processing of the whole face rather than focusing on individual parts19,20. In contrast, emotion recognition is largely based on specific information from the mouth, or the eye, region, depending on which emotion is expressed.

Neuroscientific dual-route models of face perception suggest that emotion and identity recognition are processed by two different sectors of the temporal cortex10,57. Emotion recognition, relying on the identification of the changeable, and dynamic, aspects of the face, is processed in the “dorsal stream” for faces encompassing the visual motion area MT and STS areas. Face identity, in contrast, mainly relies on those aspects of the face structure that are invariant across changes (static), and is processed in the “ventral stream” for faces in the inferotemporal region58,59. On the basis of this perspective, we speculate that, during the presentation of the stimuli, the dorsal stream was minimally affected by the transparent mask, since the mouth was fully visible. In contrast, the reduced capability to re-identify previously observed (masked) faces is telling of a more dramatic impairment of the ventral stream. The reduced functioning of the ventral stream can be accounted for by two alternative explanations. First, the capability to recognize the actor’s identity could depend on a holistic processing, which is compromised by both types of masks. While this interpretation is in line with previous hypotheses on identity recognition, one could expect that the disruption, via masking, of the holistic processing of the face should lead to a much more pronounced reduction in accuracy than the one observed in our study. An alternative, and more tempting, interpretation is that the capability to re-identify the actor relies on different types of information, not limited to the mouth/eyes regions, but also relying on cues of the lower half of the face, such as contrast reduction of the jaw and cheeks, small freckles and wrinkles, which are screened by both types of masks. To disentangle these alternative hypotheses, further studies may compare the effect of semi-transparent vs. fully transparent masks, to investigate whether the latter are able to recover not only the capability to recognize emotions and trustworthiness, but also to better re-identify previously observed (masked) individuals.

Given the dissociation between dynamic and static features encoded by the dorsal and ventral streams respectively, one could argue that such a model cannot account for our results, since all our stimuli were static. However, although the dorsal stream for faces is indeed typically triggered by dynamic facial expressions, Furl and colleagues58 demonstrated that the presentation of static emotional expressions (such as the ones used in our study) activated the same STS sectors typically activated by dynamic expressions, hypothesizing that static emotional expressions determine an “implied motion”, hence activating the same neuronal population encoding dynamic expressions.

Implications

To the best of our knowledge, this study represents the first systematic enquiry concerning social readouts from faces with standard and transparent masks. Many more analyses will be needed to get a full grasp of the complex, often context-mediated, interaction between various types of masks and social information based on face perception. The present study could be fruitfully complemented by further within-subject designs. Moreover, while for the sake of simplicity we have treated masks only as if they subtract social information by obstructing the face, it is likely that they also add social information of some sort. Fischer and colleagues29 demonstrate that the emotional meaning ascribed to women’s faces covered by a digital manipulation slightly differs from that of the same faces covered by a Niqab (a traditional Muslim veil). Closer to the object investigated here, i.e. the medical facemask, social sciences such as anthropology60 and semiotics61 offer precious insights about how its meaning may change across cultures and across times. Nevertheless, we think that some tentative implications may be legitimately drawn from our data.

First, we have seen that standard, but not transparent, masks compromise the capability to recognize the emotion (albeit probably not the valence) on the basis of facial cues. Being able to see one’s facial movements is not only useful for the sake of knowing mental states. As mentioned above, emotional decoding is likely to involve facial mimicry, which, besides its role in emotion recognition, is also thought to play a role in fostering empathy2–7. These expectations seem supported by a study conducted in Hong Kong after the SARS pandemic62, reporting that primary care doctors visiting patients with a medical facemask were perceived on average as less empathic, especially when subjects had been patients of the same doctor for a long time. It is thus safe to assume that the possible benefits of transparent masks extend beyond enabling verbal communication with sign language, which originally inspired their design, by also favoring empathy mediated by facial mimicry. Consequently, as the social impairments brought about by facemasks partially explain why some people refuse to employ them, by partially re-enabling social communication transparent masks could mitigate the skepticism toward wearing them. Moreover, as it has been shown that empathy is pivotal in promoting compliant behaviors toward physical distancing and mask wearing63, by restoring the emotional display that scaffolds empathy, transparent facemasks may indirectly promote the diffusion of mask wearing itself.

However, we should refrain from the simplistic conclusion that transparent masks are always preferable to standard ones. Recall that facial first impressions profoundly affect observers’ behavior, often by perpetuating prejudices64,65. For instance, it has been recently shown that, during the triages aimed at establishing the severity and hence the priority of patients in the emergency unit of a hospital, the perceived untrustworthiness of faces predicted less severe categorization66. As this outcome is likely to embed some inequalities, and given our finding that masks reduce perceived untrustworthiness, it would be interesting to investigate whether masked patients would have received a fairer treatment.

A final implication is that masked faces are harder to recognize, even if their mouth region is observable. Trivial as it may seem, further investigating this matter will prove paramount in contexts such as forensics. Indeed, as the face is the most visible hallmark of personal identity, it is not by chance that in many countries the law forbids covering the face without necessity in public spaces.

Materials and methods

Participants

The experiment was an on-line test (see below) carried out on 122 Italian native speakers (47 females; age = 33 ± 8), recruited by means of different social media platforms. Before the experiment, participants provided some basic demographic information (available upon request to the corresponding author), read the main instructions, and provided informed consent. By accessing a single non-reusable link, each participant could run the experiment directly from home on their laptops, smartphones, or tablets. An anti-ballot-box-stuffing option was employed in order to avoid multiple participations from the same device. The whole procedure was approved by the Institutional Review Board (IRB) of Sapienza University of Rome (ID 0001261 - 31.07.2020). All methods were carried out in accordance with relevant guidelines and regulations.

Experimental procedure

The experiment consisted of an on-line test (Qualtrics.com) composed of two distinct sessions. The first session (“emotion recognition and trust attribution”) was aimed at evaluating the impact of SM and TM on emotion and trust attribution. The second session (“re-identification task”) was aimed at investigating the impact of SM and TM on the capability to re-identify face identity. The entire study lasted 10 ± 4 min. Response times for each task and condition gave no significant results and were discarded from further analyses (Emotion Recognition: Mdn = 1.71 s, 95% C.I. [1.63, 1.81]; Trust Attribution: Mdn = 1.61 s, 95% C.I. [1.52, 1.70]; Recall: Mdn = 2.11 s, 95% C.I. [1.92, 2.23]).

Emotion recognition and trust attribution

During the first session participants were asked to observe a randomized series of 48 faces clustered in 4 blocks, presented under one of the three following conditions: (a) unmasked faces (NM); (b) faces wearing a SM occluding the mouth region and (c) faces wearing a TM restoring the mouth region (see below for details). Each trial started with a 1 s fixation cross, followed by a 1 s presentation of a face in the NM, SM or TM condition. Regardless of the presence/type of mask, all faces posed one of the following expressions: fearful (F), sad (S), happy (H) or neutral (N). At the end of the stimulus presentation, two questions appeared on the screen. The first question (for emotion recognition) asked participants “which emotion was expressed by the face”, and participants were requested to identify the posed expression among four different options (F, S, H, N). In order to avoid any “parachute effect”, namely that undecided subjects selected the only answer implying no emotion, we characterized this option by calling it “neutral expression” instead of “none” or “no emotion”. Subsequently, in a different screen, a second question (for trust attribution) asked “to what extent would you trust this person?”, and participants were asked to rate the perceived trustworthiness on a 6-point Likert scale. After the second answer was given, a new trial began. For each participant, the session consisted of 48 trials, and only one of the three conditions (NM, TM, SM) was randomly assigned to each participant (Fig. 1A). Moreover, to avoid any selection bias, the IT platform randomly assigned each subject to one of the three conditions in a balanced manner. This procedure also ensured that each condition was submitted to approximately the same number of participants (NM = 41; TM = 41; SM = 40). The order of the blocks was fixed regardless of the condition, while the presentation order of the stimuli within each block was randomized to avoid any primacy or recency effects (see Table 3). This design ensured the lowest possibility of seeing the same face twice in a row. Each block was balanced for gender and facial expressions. Lastly, through a chi-square test, we also verified that conditions did not differ in socio-demographic information (gender, age, residence area, and level of education; all ps = NS).
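The balanced random assignment described above can be illustrated with a minimal sketch. It is not the Qualtrics implementation: the function name `balanced_assignment`, the seed and the use of Python's `random` module are assumptions made only for illustration. The idea is to build a list of condition labels whose counts differ by at most one and then shuffle it.

```python
import random


def balanced_assignment(n_participants, conditions=("NM", "TM", "SM"), seed=None):
    """Return one condition label per participant, with group sizes
    differing by at most one (hypothetical helper, for illustration)."""
    rng = random.Random(seed)
    # Cycle through the conditions so the labels are as balanced as possible.
    slots = [conditions[i % len(conditions)] for i in range(n_participants)]
    # Shuffle so that the order of arrival does not determine the condition.
    rng.shuffle(slots)
    return slots


assignment = balanced_assignment(122, seed=0)
counts = {c: assignment.count(c) for c in ("NM", "TM", "SM")}
print(counts)  # group sizes: NM = 41, TM = 41, SM = 40
```

With 122 participants and three conditions, this scheme necessarily yields two groups of 41 and one of 40, which matches the group sizes reported above.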

Re-identification task

The second session was aimed at evaluating the capability to recognize an unmasked face previously presented in the NM, TM or SM condition. All faces in this session had neutral expressions (from the Chicago Face Database; see below), and were displayed without masks, regardless of the condition the participant was assigned to in the “emotion recognition and trust attribution” session. For each participant, 12 faces were shown, 4 of which had already been presented in the previous session, in the NM, SM or TM condition. Previously presented faces were alternated, in a random order, with 8 brand new faces. The unmasked face, either previously presented or a new one, was shown on the monitor screen and participants were requested to answer whether or not they had already seen the face in the first session. To minimize both primacy and recency effects, the items used in the re-identification task were always shown in the middle blocks (2 and 3) of the first session.

Table 3.

Experimental procedure: blocks order and randomization. K = KDEF, C = CFD; f = female, m = male; N = Neutral, H = Happy, S = Sad, F = Fear, T = Trustworthy face, U = Untrustworthy face. Numbers indicate the faces’ identity.

| | St. 1 | St. 2 | St. 3 | St. 4 | St. 5 | St. 6 | St. 7 | St. 8 | St. 9 | St. 10 | St. 11 | St. 12 | St. 13 | St. 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Block 1 | Kf1N | Kf2H | Kf3S | Kf4F | Kf5N | Km1N | Km2H | Km3S | Km4F | Km5H | | | | |
| Block 2 | Kf1H | Kf2S | Kf3F | Kf4N | Kf5H | Km1H | Km2S | Km3F | Km4N | Km5S | Cm1T | Cm2U | Cf1T | Cf2U |
| Block 3 | Kf1S | Kf2F | Kf3N | Kf4H | Kf5S | Km1S | Km2F | Km3N | Km4H | Km5F | Cm2T | Cf1U | Cm1U | Cf2T |
| Block 4 | Kf1F | Kf2N | Kf3H | Kf4S | Kf5F | Km1F | Km2N | Km3H | Km4S | Km5N | | | | |

Blocks 1, 2, 3, and 4 were presented in a fixed order, whereas the order of the stimuli within each block was fully randomized.
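As an illustration of the coding scheme and of the fixed-blocks, shuffled-within-block presentation summarized in Table 3, the following sketch decodes the stimulus labels and builds one presentation order. The helper names `decode` and `presentation_order`, the regular expression and the seed are assumptions introduced for this example only; the actual randomization was handled by the survey platform.

```python
import random
import re

# Code layout from the legend of Table 3: database, gender, identity, label.
CODE_PATTERN = re.compile(r"^([KC])([fm])(\d+)([NHSFTU])$")
LABELS = {"N": "neutral", "H": "happy", "S": "sad", "F": "fear",
          "T": "trustworthy", "U": "untrustworthy"}

BLOCKS = [
    ["Kf1N", "Kf2H", "Kf3S", "Kf4F", "Kf5N", "Km1N", "Km2H", "Km3S", "Km4F", "Km5H"],
    ["Kf1H", "Kf2S", "Kf3F", "Kf4N", "Kf5H", "Km1H", "Km2S", "Km3F", "Km4N", "Km5S",
     "Cm1T", "Cm2U", "Cf1T", "Cf2U"],
    ["Kf1S", "Kf2F", "Kf3N", "Kf4H", "Kf5S", "Km1S", "Km2F", "Km3N", "Km4H", "Km5F",
     "Cm2T", "Cf1U", "Cm1U", "Cf2T"],
    ["Kf1F", "Kf2N", "Kf3H", "Kf4S", "Kf5F", "Km1F", "Km2N", "Km3H", "Km4S", "Km5N"],
]


def decode(code):
    """Split a stimulus code such as 'Kf3S' into its components."""
    match = CODE_PATTERN.match(code)
    if match is None:
        raise ValueError(f"unrecognized stimulus code: {code}")
    database, gender, identity, label = match.groups()
    return {
        "database": "KDEF" if database == "K" else "CFD",
        "gender": "female" if gender == "f" else "male",
        "identity": int(identity),
        "label": LABELS[label],
    }


def presentation_order(blocks, seed=None):
    """Keep the block order fixed and shuffle the stimuli inside each block."""
    rng = random.Random(seed)
    order = []
    for block in blocks:
        shuffled = list(block)  # copy, so the template blocks stay untouched
        rng.shuffle(shuffled)
        order.extend(shuffled)
    return order


order = presentation_order(BLOCKS, seed=1)
print(len(order))        # 48 trials in total
print(decode("Cf1U"))
```

The four blocks contain 10, 14, 14 and 10 stimuli, which adds up to the 48 trials of the first session.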

Experimental stimuli

The original, unmasked versions of the stimuli (NM) were retrieved from two datasets: the Karolinska Directed Emotional Faces (KDEF)25,26 and the Chicago Face Database (CFD)27. More specifically:

Emotion recognition. To evaluate the effects of facemasks on the recognition of emotional expressions, we used 40 images of faces from the KDEF database. This database includes 70 (unmasked) faces depicting 7 emotional expressions from 5 different perspectives. For the current study, we selected the following four expressions (fear, sadness, joy and neutral expression) from the frontal perspective (Fig. 1A). For each of the four expressions, we selected 10 faces (5 males, 5 females) among those whose emotional recognition ratings were the highest. The selection of two negative emotions (fear and sadness) was aimed at obtaining results for distinct categories of emotion sharing the same (negative) valence. In our paradigm, each selected face (N = 10) was shown 4 times (once per facial expression) in a within-subjects fashion.

Trust attribution and re-identification task. Since one of the goals of our study was to investigate the effect of facemasks on perceived trustworthiness, we also included 16 images of faces from the CFD, for which a validation of the degree of trust assigned to each face is already available with an Italian sample67. The CFD consists of 158 high-resolution, standardized, frontal position photographs of (unmasked) males and females between 18 and 40 years old. For the current study, we selected 16 faces (8 males, 8 females) among the most trustworthy and untrustworthy ones in a balanced fashion. Images were cropped to match the size of those from the KDEF (964 × 678 pixels). As regards the trust attribution task, we selected 8 faces (4 males and 4 females) among those with the highest and lowest scores in trustworthiness. Each face showed a neutral expression and was shown only once during the first session.

In the re-identification task, we presented our participants with 4 CFD faces (2 males and 2 females) among those of the first session and 8 new (i.e., previously unseen) CFD faces (4 males and 4 females). In the whole design, trustworthy and untrustworthy faces were shown in a balanced manner.

All the original databases used in the current study are publicly available. To obtain visual stimuli for the masked TM and SM conditions, a professional graphic designer edited each unmasked stimulus, creating two versions of the same stimulus by superimposing two different masks: a standard medical mask and a transparent one, in which the mouth could be seen through the transparency of a plastic part (Fig. 1A).

Measurements and Statistical analysis

Emotion recognition

The first analysis was conducted on the stimuli from the KDEF, and was aimed at verifying a difference in emotion recognition across the three conditions (NM, SM, TM), regardless of the emotion type. For each participant, we assigned 1 point for each correctly recognized emotion and 0 points for each error. A composite score was computed by averaging the 40 scores obtained from the KDEF faces (confidence intervals of the median were computed via bias-corrected and accelerated bootstrap, BCa: 1000 samples). Given the violation of the normality assumption, we ran a (non-parametric) Kruskal–Wallis test with Monte Carlo exact tests (for which we report the significance level, pMC, based on 5000 sampled tables, as well as its 99% confidence interval), considering the average scores for the three Conditions (NM, SM, TM). In case of significant effects, Mann–Whitney tests (Bonferroni corrected) were applied as post-hoc tests. Effect sizes for Mann–Whitney tests were computed using the following equation: .
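To make the logic of a Kruskal–Wallis test with a Monte Carlo significance level concrete, here is a minimal, standard-library sketch. It is not the software used for the analyses reported here: the function names, the toy data, the seed and the `(hits + 1) / (n_samples + 1)` estimator are assumptions for illustration. The H statistic is computed without tie correction; since that correction is a constant factor across permutations of the same pooled data, it does not change the Monte Carlo p-value.

```python
import random


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_h(groups):
    """Kruskal-Wallis H statistic (no tie correction)."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    return 12.0 / (n * (n + 1)) * total - 3 * (n + 1)


def monte_carlo_p(groups, n_samples=5000, seed=0):
    """Estimate the p-value by randomly reassigning scores to groups."""
    rng = random.Random(seed)
    observed = kruskal_h(groups)
    pooled = [x for g in groups for x in g]
    sizes = [len(g) for g in groups]
    hits = 0
    for _ in range(n_samples):
        rng.shuffle(pooled)
        permuted, start = [], 0
        for size in sizes:
            permuted.append(pooled[start:start + size])
            start += size
        if kruskal_h(permuted) >= observed - 1e-9:
            hits += 1
    return observed, (hits + 1) / (n_samples + 1)


# Toy, fully separated groups (invented numbers, not data from the study).
toy_groups = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
h_stat, p_mc = monte_carlo_p(toy_groups, n_samples=2000, seed=1)
print(h_stat)  # 7.2 for these toy groups (up to floating-point rounding)
```

In practice each group would hold the per-participant composite scores of one Condition (NM, SM, TM).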

The second analysis was aimed at considering the effect of facemasks on the different emotions. We performed four Kruskal–Wallis tests considering the average scores for the three Conditions (NM, SM, TM), one for each emotion. Post-hoc tests were conducted as in the previous analysis.
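The Bonferroni-corrected Mann–Whitney post-hoc comparisons can be sketched as follows. This is a hedged illustration, not the procedure actually run: it uses the normal approximation without continuity correction, and the effect size r = |Z| / √N is one common convention that is assumed here, since the equation used for the reported effect sizes is not reproduced in the text. All function names and the toy data are hypothetical.

```python
import math
from itertools import combinations


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney(x, y):
    """Return (U, Z, two-sided p, r) using the tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    counts = {}
    for value in pooled:
        counts[value] = counts.get(value, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:  # every score identical: no evidence of a difference
        return u, 0.0, 1.0, 0.0
    z = (u - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))
    r = abs(z) / math.sqrt(n)  # assumed effect-size convention
    return u, z, p, r


def bonferroni_posthoc(groups):
    """Pairwise tests on a dict of named groups, p-values multiplied by the
    number of comparisons and capped at 1."""
    pairs = list(combinations(sorted(groups), 2))
    results = {}
    for a, b in pairs:
        u, z, p, r = mann_whitney(groups[a], groups[b])
        results[(a, b)] = {"U": u, "Z": z, "p_adj": min(1.0, p * len(pairs)), "r": r}
    return results


# Invented scores, for illustration only.
toy = {"NM": [1, 2, 3, 4, 5], "SM": [6, 7, 8, 9, 10], "TM": [2, 3, 4, 5, 6]}
posthoc = bonferroni_posthoc(toy)
print(posthoc[("NM", "SM")])
```

With three conditions there are three pairwise comparisons, so each uncorrected p-value is multiplied by three.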

The third analysis was aimed at considering which emotions were more affected by the presence/type of mask. For each of the three Conditions (NM, SM, TM), due to the repeated measure design, we performed a Friedman test considering the scores attributed to the four different Emotions (N, H, F, S) as dependent variables. In case of significant effects, a Wilcoxon signed-rank test was applied as post-hoc test to detect differences within different emotions.
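For the repeated-measures comparison across the four emotions, a Friedman test can be sketched with the standard library alone. The code below is an illustrative assumption rather than the analysis pipeline used here: it applies the usual tie-corrected chi-square statistic, and the p-value uses the closed-form chi-square survival function for 3 degrees of freedom, so it is valid only when exactly four related scores (N, H, F, S) are compared. Function names and data are hypothetical.

```python
import math


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def chi2_sf_df3(x):
    """Survival function of the chi-square distribution with 3 degrees of freedom."""
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)


def friedman_four(rows):
    """Friedman test for rows of four related scores (one row per participant).
    Returns (chi-square statistic, p-value)."""
    n, k = len(rows), 4
    if any(len(row) != k for row in rows):
        raise ValueError("each row must contain exactly four scores")
    rank_sums = [0.0] * k
    tie_term = 0
    for row in rows:
        for j, rank in enumerate(average_ranks(row)):
            rank_sums[j] += rank
        counts = {}
        for value in row:
            counts[value] = counts.get(value, 0) + 1
        tie_term += sum(t ** 3 - t for t in counts.values())
    correction = 1 - tie_term / (n * k * (k ** 2 - 1))
    if correction == 0:  # every row fully tied: nothing to compare
        return 0.0, 1.0
    stat = 12.0 / (n * k * (k + 1)) * sum(r ** 2 for r in rank_sums) - 3 * n * (k + 1)
    stat /= correction
    return stat, chi2_sf_df3(stat)


# Invented scores: five participants who all rank the four emotions identically.
toy_rows = [[1, 2, 3, 4] for _ in range(5)]
stat, p_value = friedman_four(toy_rows)
print(stat)  # 15.0, the maximum attainable with n = 5 and k = 4
```

Significant results would then be followed up with pairwise Wilcoxon signed-rank tests, which are not shown in this sketch.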

The direction of errors in the emotion categorization task was assessed by a chi-square test comparing the actual scores with the expected values for correct answers and for errors. Expected values for correct answers and for errors were calculated on the average value of all correct answers and errors, respectively.

Trust attribution

The first analysis was conducted on the stimuli from the CFD, and was aimed at investigating the trust attribution across the three conditions (NM, SM, TM), namely whether the presence/type of mask affects both the trust assigned to a specific face, and the consistency between (un)trustworthiness scores assigned to masked and unmasked faces. Analyses were performed on three distinct sets of data: (a) on all trust scores obtained from all stimuli of the CFD, regardless of their trust scores stored in the dataset, (b) on the trust scores obtained from the CFD stimuli validated as highly trustworthy in an Italian sample67 and (c) on the trust scores obtained from the CFD stimuli validated as highly untrustworthy in the same Italian sample. The difference between the scores obtained by trustworthy and untrustworthy faces was assessed by means of a Wilcoxon signed-rank test. In all the previous tests, we applied a (non-parametric) Kruskal–Wallis test considering the average scores for the three Conditions (NM, SM, TM). In case of significant effects, Mann–Whitney tests (Bonferroni corrected) were applied as post-hoc tests. Effect sizes for Mann–Whitney tests were computed as before. The same statistical procedure was conducted on the stimuli from the KDEF.

The second analysis, also conducted on the stimuli from the KDEF, was aimed at considering which emotions were more affected by the presence/type of mask in terms of trust attribution. For each of the three Conditions (NM, SM, TM), due to the repeated measure design, we performed a Friedman test considering the scores attributed to the four different Emotions (N, H, F, S) as dependent variables. In case of significant effects, a Wilcoxon signed-rank test was applied as post-hoc test to detect differences within different emotions.

Re-identification task

Analyses were performed to investigate an effect of Condition (NM, SM, TM) on the re-identification scores with the aim of assessing the impact of the mask on the ability to remember a previously displayed face. For each participant we evaluated the percentage of correct answers by scoring each trial on a binary scale (1 point for each correct response and 0 points for each incorrect one). We ran a Kruskal–Wallis test considering the average scores for the three Conditions (NM, SM, TM). In case of significant effects, Mann–Whitney tests (Bonferroni corrected) were applied as post-hoc tests.
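The binary scoring of the re-identification trials can be written down as a short sketch. The function name and the example responses are hypothetical; the only elements taken from the text are the 12 trials per participant (4 previously seen faces, 8 new ones) and the 1/0 scoring of correct and incorrect answers.

```python
def reidentification_accuracy(said_seen, was_seen):
    """Percentage of trials on which the 'already seen?' answer matches the truth."""
    if len(said_seen) != len(was_seen):
        raise ValueError("one response is required per trial")
    correct = sum(1 for answer, truth in zip(said_seen, was_seen) if answer == truth)
    return 100.0 * correct / len(was_seen)


# Ground truth for one participant: 4 old faces followed by 8 new ones
# (the real presentation order was randomized).
truth = [True] * 4 + [False] * 8
# Invented responses: one old face missed, one new face wrongly accepted.
answers = [True, True, True, False] + [True] + [False] * 7
accuracy = reidentification_accuracy(answers, truth)
print(round(accuracy, 1))  # 83.3, i.e. 10 correct answers out of 12
```

Note that this score treats missed old faces and false alarms on new faces alike, as in the binary scale described above.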

Lastly, to control for variability across subjects and trials in the whole experiment, and given the possible heterogeneity of preferences across participants and trials, we fitted three generalized linear mixed models (GLMMs; one each for emotion recognition, trust attribution and the re-identification task) incorporating (i) subjects, (ii) trials (i.e. stimuli) and (iii) an additional subjects*faces interaction as random effects. These models confirmed all previous significance levels (p < 0.05).

Acknowledgements

The authors wish to thank Roberto Gamboni for the photoediting. MV has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (Grant Agreement No. 819649 - FACETS, P.I. Massimo Leone), which also supported the photoediting and the publication.

Author contributions

A.A., M.M., F.C., F.P. and M.V. together designed the experiment and interpreted the results. A.A. and M.M. performed data acquisition and analyses and drafted the sections “Methods” and “Results”. M.V. drafted the sections “Introduction” and “Discussion”. F.P. provided comments on trustworthiness. F.C. reworked the entire article. All authors have contributed to, seen, reviewed and approved the manuscript.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Oosterhof NN, Todorov A. Shared perceptual basis of emotional expressions and trustworthiness impressions from faces. Emotion. 2009;9:128–133. doi: 10.1037/a0014520. [DOI] [PubMed] [Google Scholar]

- 2.Palagi E, Celeghin A, Tamietto M, Winkielman P, Norscia I. The neuroethology of spontaneous mimicry and emotional contagion in human and non-human animals. Neurosci. Biobehav. Rev. 2020;111:149–165. doi: 10.1016/j.neubiorev.2020.01.020. [DOI] [PubMed] [Google Scholar]

- 3.Hess U, Fischer A. Emotional mimicry as social regulation. Personal. Soc. Psychol. Rev. 2013;17:142–157. doi: 10.1177/1088868312472607. [DOI] [PubMed] [Google Scholar]

- 4.Hess, U. & Fischer, A. in Oxford Research Encyclopedia of Communication (2017). 10.1093/acrefore/9780190228613.013.433

- 5.Tramacere A, Ferrari PF. Faces in the mirror, from the neuroscience of mimicry to the emergence of mentalizing. J. Anthropol. Sci. 2016;94:113–126. doi: 10.4436/JASS.94037. [DOI] [PubMed] [Google Scholar]

- 6.Dimberg U, Andréasson P, Thunberg M. Emotional empathy and facial reactions to facial expressions. J. Psychophysiol. 2011;25:26–31. doi: 10.1027/0269-8803/a000029. [DOI] [Google Scholar]

- 7.Mancini G, Ferrari PF, Palagi E. In play we trust. Rapid facial mimicry predicts the duration of playful interactions in geladas. PLoS ONE. 2013;8:e66481. doi: 10.1371/journal.pone.0066481. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Bruce V, Young A. Understanding face recognition. Br. J. Psychol. 1986;77:305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x. [DOI] [PubMed] [Google Scholar]

- 9.Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn. Sci. 2000;4:223–233. doi: 10.1016/S1364-6613(00)01482-0. [DOI] [PubMed] [Google Scholar]

- 10.Adolphs R. Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav. Cogn. Neurosci. Rev. 2002;1:21–62. doi: 10.1177/1534582302001001003. [DOI] [PubMed] [Google Scholar]

- 11.Blais C, Roy C, Fiset D, Arguin M, Gosselin F. The eyes are not the window to basic emotions. Neuropsychologia. 2012;50:2830–2838. doi: 10.1016/j.neuropsychologia.2012.08.010. [DOI] [PubMed] [Google Scholar]

- 12.Roberson D, Kikutani M, Döge P, Whitaker L, Majid A. Shades of emotion: What the addition of sunglasses or masks to faces reveals about the development of facial expression processing. Cognition. 2012;125:195–206. doi: 10.1016/j.cognition.2012.06.018. [DOI] [PubMed] [Google Scholar]

- 13. Schurgin, M. W. et al. Eye movements during emotion recognition in faces. J. Vis. 14 (2014).

- 14. Wegrzyn, M., Vogt, M., Kireclioglu, B., Schneider, J. & Kissler, J. Mapping the emotional face. How individual face parts contribute to successful emotion recognition. PLoS ONE 12 (2017).

- 15. Robinson, K., Blais, C., Duncan, J., Forget, H. & Fiset, D. The dual nature of the human face: There is a little Jekyll and a little Hyde in all of us. Front. Psychol. 5 (2014).

- 16. Logan AJ, Gordon GE, Loffler G. Contributions of individual face features to face discrimination. Vis. Res. 2017;137:29–39. doi: 10.1016/j.visres.2017.05.011.

- 17. Abudarham N, Yovel G. Same critical features are used for identification of familiarized and unfamiliar faces. Vis. Res. 2019;157:105–111. doi: 10.1016/j.visres.2018.01.002.

- 18. Yin RK. Looking at upside-down faces. J. Exp. Psychol. 1969;81:141–145. doi: 10.1037/h0027474.

- 19. Young AW, Hellawell D, Hay DC. Configurational information in face perception. Perception. 2013;42:1166–1178. doi: 10.1068/p160747n.

- 20. Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 2005;6:641–651. doi: 10.1038/nrn1724.

- 21. Bernstein M, Yovel G. Two neural pathways of face processing: a critical evaluation of current models. Neurosci. Biobehav. Rev. 2015;55:536–546. doi: 10.1016/j.neubiorev.2015.06.010.

- 22. Carbon CC. Wearing face masks strongly confuses counterparts in reading emotions. Front. Psychol. 2020;11:566886. doi: 10.3389/fpsyg.2020.566886.

- 23. Olivera-La Rosa A, Chuquichambi EG, Ingram GPD. Keep your (social) distance: pathogen concerns and social perception in the time of COVID-19. Pers. Individ. Dif. 2020;166:110200. doi: 10.1016/j.paid.2020.110200.

- 24. Carragher DJ, Hancock P. Surgical face masks impair human face matching performance for familiar and unfamiliar faces. PsyArXiv Prepr. 2020. doi: 10.31234/osf.io/n9mt5.

- 25. Lundqvist D, Flykt A, Öhman A. The Karolinska directed emotional faces - KDEF. Dep. Clin. Neurosci. Psychol. Sect. Karolinska Institutet; 1998.

- 26. Goeleven E, De Raedt R, Leyman L, Verschuere B. The Karolinska directed emotional faces: a validation study. Cogn. Emot. 2008;22:1094–1118. doi: 10.1080/02699930701626582.

- 27. Ma DS, Correll J, Wittenbrink B. The Chicago face database: a free stimulus set of faces and norming data. Behav. Res. Methods. 2015;47:1122–1135. doi: 10.3758/s13428-014-0532-5.

- 28. Calvo MG, Nummenmaa L. Detection of emotional faces: salient physical features guide effective visual search. J. Exp. Psychol. Gen. 2008;137:471–494. doi: 10.1037/a0012771.

- 29. Fischer AH, Gillebaart M, Rotteveel M, Becker D, Vliek M. Veiled emotions: the effect of covered faces on emotion perception and attitudes. Soc. Psychol. Personal. Sci. 2012;3:266–273. doi: 10.1177/1948550611418534.

- 30. Nestor, M. S., Fischer, D. & Arnold, D. “Masking” our emotions: Botulinum toxin, facial expression, and well-being in the age of COVID-19. J. Cosmet. Dermatol. (2020).

- 31. Bombari D, et al. Emotion recognition: the role of featural and configural face information. Q. J. Exp. Psychol. 2013;66:2426–2442. doi: 10.1080/17470218.2013.789065.

- 32. Nummenmaa L, Calvo MG. Dissociation between recognition and detection advantage for facial expressions: a meta-analysis. Emotion. 2015;15:243–256. doi: 10.1037/emo0000042.

- 33. Elfenbein HA, Ambady N. On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol. Bull. 2002;128:203–235. doi: 10.1037/0033-2909.128.2.203.

- 34. Ekman P, Sorenson ER, Friesen WV. Pan-cultural elements in facial displays of emotion. Science. 1969;164:86–88. doi: 10.1126/science.164.3875.86.

- 35. Blais C, Fiset D, Roy C, Régimbald CS, Gosselin F. Eye fixation patterns for categorizing static and dynamic facial expressions. Emotion. 2017;17:1107–1119. doi: 10.1037/emo0000283.

- 36. Russell JA. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychol. Bull. 1994;115:102–141. doi: 10.1037/0033-2909.115.1.102.

- 37. Widen SC. Children’s interpretation of facial expressions: the long path from valence-based to specific discrete categories. Emot. Rev. 2013;5:72–77. doi: 10.1177/1754073912451492.

- 38. Gendron M, Crivelli C, Barrett LF. Universality reconsidered: diversity in making meaning of facial expressions. Curr. Dir. Psychol. Sci. 2018;27:211–219. doi: 10.1177/0963721417746794.

- 39. Etcoff NL, Magee JJ. Categorical perception of facial expressions. Cognition. 1992;44:227–240. doi: 10.1016/0010-0277(92)90002-Y.

- 40. Carrera-Levillain P, Fernandez-Dols JM. Neutral faces in context: their emotional meaning and their function. J. Nonverb. Behav. 1994;18:281–299. doi: 10.1007/BF02172290.

- 41. Smith ML, Cottrell GW, Gosselin F, Schyns PG. Transmitting and decoding facial expressions. Psychol. Sci. 2005;16:184–189. doi: 10.1111/j.0956-7976.2005.00801.x.

- 42. Duncan, J. et al. Orientations for the successful categorization of facial expressions and their link with facial features. J. Vis. 17 (2017).

- 43. Dunn JR, Schweitzer ME. Feeling and believing: the influence of emotion on trust. J. Pers. Soc. Psychol. 2005;88:736–748. doi: 10.1037/0022-3514.88.5.736.

- 44. Winston JS, Strange BA, O’Doherty J, Dolan RJ. Automatic and intentional brain responses during evaluation of trustworthiness of faces. Nat. Neurosci. 2002;5:277–283. doi: 10.1038/nn816.

- 45. Caruana F, et al. Mirroring other’s laughter. Cingulate, opercular and temporal contributions to laughter expression and observation. Cortex. 2020;128:35–48. doi: 10.1016/j.cortex.2020.02.023.

- 46. Wood A, Niedenthal P. Developing a social functional account of laughter. Soc. Personal. Psychol. Compass. 2018;12:e12383. doi: 10.1111/spc3.12383.

- 47. Martin J, Rychlowska M, Wood A, Niedenthal P. Smiles as multipurpose social signals. Trends Cogn. Sci. 2017;21:864–877. doi: 10.1016/j.tics.2017.08.007.

- 48. Dunbar, R. I. M. Bridging the bonding gap: the transition from primates to humans. Philos. Trans. R. Soc. London B Biol. Sci. 367 (2012).

- 49. Rymarczyk, K., Żurawski, Ł., Jankowiak-Siuda, K. & Szatkowska, I. Neural correlates of facial mimicry: simultaneous measurements of EMG and BOLD responses during perception of dynamic compared to static facial expressions. Front. Psychol. 9 (2018).

- 50. Dimberg U. Facial reactions to facial expressions. Psychophysiology. 1982;19:643–647. doi: 10.1111/j.1469-8986.1982.tb02516.x.

- 51. Caruana F, et al. A mirror mechanism for smiling in the anterior cingulate cortex. Emotion. 2017;17:187–190. doi: 10.1037/emo0000237.

- 52. Oosterhof NN, Todorov A. The functional basis of face evaluation. Proc. Natl. Acad. Sci. U. S. A. 2008;105:11087–11092. doi: 10.1073/pnas.0805664105.

- 53. Zebrowitz LA, Montepare JM. Social psychological face perception: why appearance matters. Soc. Personal. Psychol. Compass. 2008;2:1497–1517. doi: 10.1111/j.1751-9004.2008.00109.x.

- 54. Geniole SN, Denson TF, Dixson BJ, Carré JM, McCormick CM. Evidence from meta-analyses of the facial width-to-height ratio as an evolved cue of threat. PLoS ONE. 2015;10:e0132726. doi: 10.1371/journal.pone.0132726.

- 55. De Gelder B, Vroomen J, Pourtois G, Weiskrantz L. Non-conscious recognition of affect in the absence of striate cortex. NeuroReport. 1999;10:3759–3763. doi: 10.1097/00001756-199912160-00007.

- 56. Tamietto M, et al. Unseen facial and bodily expressions trigger fast emotional reactions. Proc. Natl. Acad. Sci. U. S. A. 2009;106:17661–17666. doi: 10.1073/pnas.0908994106.

- 57. Pitcher D, Ungerleider LG. Evidence for a third visual pathway specialized for social perception. Trends Cogn. Sci. 2021;25:100–110. doi: 10.1016/j.tics.2020.11.006.

- 58. Furl N, Hadj-Bouziane F, Liu N, Averbeck BB, Ungerleider LG. Dynamic and static facial expressions decoded from motion-sensitive areas in the macaque monkey. J. Neurosci. 2012;32:15952–15962. doi: 10.1523/JNEUROSCI.1992-12.2012.

- 59. Gerbella M, Caruana F, Rizzolatti G. Pathways for smiling, disgust and fear recognition in blindsight patients. Neuropsychologia. 2019;128:6–13. doi: 10.1016/j.neuropsychologia.2017.08.028.

- 60. Siu, J. Y. M. Qualitative study on the shifting sociocultural meanings of the facemask in Hong Kong since the severe acute respiratory syndrome (SARS) outbreak: Implications for infection control in the post-SARS era. Int. J. Equity Health 15 (2016).

- 61. Leone, M. The semiotics of the medical face mask: east and west. Signs and Media, forthcoming. http://www.facets-erc.eu/wp-content/uploads/2020/05/Massimo-LEONE-2020-The-Semiotics-of-the-Medical-Face-Mask-Final-Version.pdf (2020).

- 62. Wong, C. K. M. et al. Effect of facemasks on empathy and relational continuity: A randomised controlled trial in primary care. BMC Fam. Pract. 14 (2013).

- 63. Pfattheicher, S., Nockur, L., Böhm, R., Sassenrath, C. & Petersen, M. B. The emotional path to action: empathy promotes physical distancing and wearing of face masks during the COVID-19 pandemic. Psychol. Sci. 31 (2020).

- 64. Olivola CY, Funk F, Todorov A. Social attributions from faces bias human choices. Trends Cogn. Sci. 2014;18:566–570. doi: 10.1016/j.tics.2014.09.007.

- 65. Todorov A, Olivola CY, Dotsch R, Mende-Siedlecki P. Social attributions from faces: determinants, consequences, accuracy, and functional significance. Annu. Rev. Psychol. 2015;66:519–545. doi: 10.1146/annurev-psych-113011-143831.

- 66. Bagnis A, et al. Judging health care priority in emergency situations: patient facial appearance matters. Soc. Sci. Med. 2020;260:113180. doi: 10.1016/j.socscimed.2020.113180.

- 67. Felletti S, Paglieri F. Trust your peers! How trust among citizens can foster collective risk prevention. Int. J. Disaster Risk Reduct. 2019;36:101082. doi: 10.1016/j.ijdrr.2019.101082.