Abstract

Directed acyclic graphs or Bayesian networks that are popular in many AI-related sectors for probabilistic inference and causal reasoning can be mapped to probabilistic circuits built out of probabilistic bits (p-bits), analogous to binary stochastic neurons of stochastic artificial neural networks. In order to satisfy standard statistical results, individual p-bits not only need to be updated sequentially but also in order from the parent to the child nodes, necessitating the use of sequencers in software implementations. In this article, we first use SPICE simulations to show that an autonomous hardware Bayesian network can operate correctly without any clocks or sequencers, but only if the individual p-bits are appropriately designed. We then present a simple behavioral model of the autonomous hardware illustrating the essential characteristics needed for correct sequencer-free operation. This model is also benchmarked against SPICE simulations and can be used to simulate large-scale networks. Our results could be useful in the design of hardware accelerators that use energy-efficient building blocks suited for low-level implementations of Bayesian networks. The autonomous massively parallel operation of our proposed stochastic hardware has biological relevance since neural dynamics in the brain are also stochastic and autonomous by nature.

Keywords: Bayesian network, probabilistic spin logic, binary stochastic neuron, magnetic tunnel junction, inference

1. Introduction

Bayesian networks (BN) or belief nets are probabilistic directed acyclic graphs (DAG) popular for reasoning under uncertainty and probabilistic inference in real-world applications such as medical diagnosis (Nikovski, 2000), genomic data analysis (Friedman et al., 2000; Jansen et al., 2003; Zou and Conzen, 2004), forecasting (Sun et al., 2006; Ticknor, 2013), robotics (Premebida et al., 2017), image classification (Arias et al., 2016; Park, 2016), neuroscience (Bielza and Larrañaga, 2014), and so on. BNs are composed of probabilistic nodes and edges from parent to child nodes and are defined in terms of conditional probability tables (CPT) that describe how each child node is influenced by its parent nodes (Heckerman and Breese, 1996; Koller and Friedman, 2009; Pearl, 2014; Russell and Norvig, 2016). The CPTs can be obtained from expert knowledge and/or machine learned from data (Darwiche, 2009). Each node and edge in a BN have meaning representing specific probabilistic events and their conditional dependencies and they are easier to interpret (Correa et al., 2009) than neural networks where the hidden nodes do not necessarily have meaning. Unlike neural networks where useful information is extracted only at the output nodes for prediction purposes, BNs are useful for both prediction and inference by looking at not only the output nodes but also other nodes of interest. Computation of different probabilities from a BN becomes intractable when the network gets deeper and more complicated with child nodes having many parent nodes. This has inspired various hardware implementations of BNs for efficient inference (Rish et al., 2005; Chakrapani et al., 2007; Weijia et al., 2007; Jonas, 2014; Querlioz et al., 2015; Zermani et al., 2015; Behin-Aein et al., 2016; Friedman et al., 2016; Thakur et al., 2016; Tylman et al., 2016; Shim et al., 2017). 
In this article, we have elucidated the design criteria for an autonomous (clockless) hardware for BN unlike other implementations that typically use clocks.

Recently, a new type of hardware computing framework called probabilistic spin logic (PSL) has been proposed (Camsari et al., 2017a) based on a building block called the probabilistic bit (p-bit), which is analogous to the binary stochastic neuron (BSN) (Ackley et al., 1985; Neal, 1992) of the artificial neural network (ANN) literature. p-bits can be interconnected to solve a wide variety of problems such as optimization (Sutton et al., 2017; Borders et al., 2019), inference (Faria et al., 2018), an enhanced type of Boolean logic that is invertible (Camsari et al., 2017a; Faria et al., 2017; Pervaiz et al., 2017, 2018), quantum emulation (Camsari et al., 2019), and in situ learning from probability distributions (Kaiser et al., 2020).

Unlike conventional deterministic networks built out of deterministic, stable bits, stochastic or probabilistic networks composed of p-bits (Figure 1A) can be correlated by interconnecting them to construct p-circuits defined by two equations (Ackley et al., 1985; Neal, 1992; Camsari et al., 2017a): (1) a p-bit/BSN equation and (2) a weight logic/synapse equation. The output of a p-bit, mi, is related to its dimensionless input Ii by the equation:

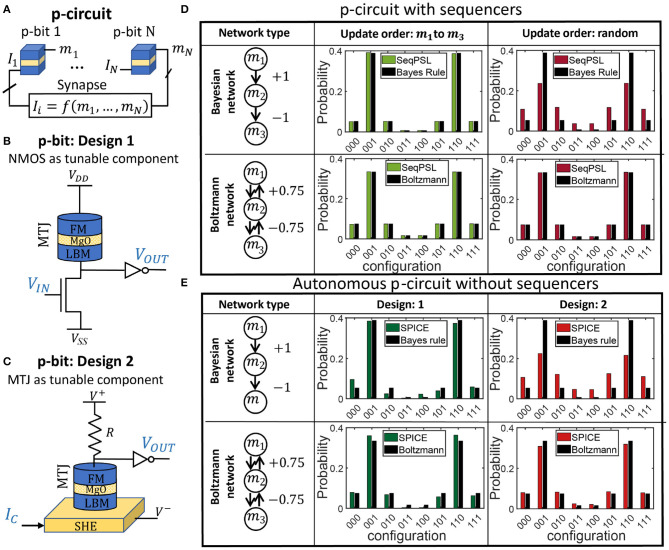

Figure 1.

Clocked vs. autonomous p-circuit: (A) a probabilistic (p-)circuit is composed of p-bits interconnected by a weight logic (synapse) that computes the input Ii to the ith p-bit as a function of the outputs from other p-bits. (B) p-bit design 1 based on stochastic Magnetic Tunnel Junction (s-MTJ) using low barrier nanomagnets (LBMs) and an NMOS transistor as tunable component. (C) p-bit design 2 based on s-MTJ as tunable component. Both designs have been used to build a p-circuit as shown in (A). (D) Two types of p-circuits are built: a directed or Bayesian network and a symmetrically connected Boltzmann network. The p-circuits are sequential (labeled as SeqPSL), meaning that p-bits are updated sequentially, one at a time, using a clock circuitry with a sequencer. It is shown that for Boltzmann networks update order does not matter and any random update order would produce the correct probability distribution. But for Bayesian networks, a specific, parent-to-child update order is necessary to converge to the correct probability distribution dictated by the Bayes rule. (E) The same Bayesian and Boltzmann p-circuits are implemented on an autonomous hardware built with p-bit design 1 and 2 without any clocks or sequencers. It is interesting to note that for Bayesian networks, design 2 fails to match the probabilities from applying Bayes rule, whereas design 1 works quite well as an autonomous Bayesian network. For every histogram in this figure, 10⁶ samples have been collected.

$$m_i(t+\tau_N) = \mathrm{sgn}\left[\tanh\big(I_i(t)\big) - \mathrm{rand}(-1,+1)\right] \tag{1a}$$

where rand(−1, +1) is a random number uniformly distributed between −1 and +1, and τN is the neuron evaluation time.

The synapse generates the input Ii from a weighted sum of the states of other p-bits. In general, the synapse can be a linear or non-linear function, although a common form is the linear synapse described according to the equation:

$$I_i(t+\tau_S) = I_0\left(h_i + \sum_j J_{ij}\, m_j(t)\right) \tag{1b}$$

where hi is the on-site bias and Jij is the weight of the coupling from jth p-bit to ith p-bit, I0 parameterizes the coupling strength between p-bits, and τS is the synapse evaluation time. Several hardware designs of p-bits based on low barrier nanomagnet (LBM) physics have been proposed and also experimentally demonstrated (Ostwal et al., 2018; Borders et al., 2019; Ostwal and Appenzeller, 2019; Camsari et al., 2020; Debashis, 2020). The thermal energy barrier of the LBM is of the order of a few kBT instead of the 40–60 kBT used in memory technology to retain stability. Because of thermal noise, the magnetization of the LBM keeps fluctuating as a function of time with an average retention time τ~τ0exp(EB/kBT) (Brown, 1979), where τ0 is a material-dependent parameter called attempt time that is experimentally found to be in the range of a nanosecond or less and EB is the thermal energy barrier (Lopez-Diaz et al., 2002; Pufall et al., 2004). The stochasticity of the LBMs makes them naturally suitable for p-bit implementation.
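The p-bit and synapse relations described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the p-bit output is the sign of tanh(Ii) minus a uniform random number in (−1, +1) and that the synapse is the linear weighted sum with bias; the function names `pbit` and `synapse` and all numerical values are hypothetical and chosen only for illustration.

```python
import numpy as np

def synapse(m, J, h, I0=1.0):
    """Linear synapse: I_i = I0 * (h_i + sum_j J_ij * m_j)."""
    return I0 * (h + J @ m)

def pbit(I, rng):
    """p-bit: m_i = sgn(tanh(I_i) - rand(-1, +1)), returned as +1/-1 values."""
    r = rng.uniform(-1.0, 1.0, size=np.shape(I))
    return np.where(np.tanh(I) > r, 1.0, -1.0)

rng = np.random.default_rng(0)

# Isolated p-bits at fixed inputs: the sample average should follow tanh(I).
I_values = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
samples = pbit(np.tile(I_values, (20000, 1)), rng)
mean_m = samples.mean(axis=0)

# One synapse evaluation for a two p-bit example where p-bit 0 drives p-bit 1.
J = np.array([[0.0, 0.0], [1.0, 0.0]])  # J[i, j]: weight from p-bit j to p-bit i
h = np.zeros(2)
I_example = synapse(np.array([1.0, -1.0]), J, h)

print(np.round(mean_m, 2), np.round(np.tanh(I_values), 2))
```

Because the random number is uniform on (−1, +1), the probability of a +1 output is (1 + tanh Ii)/2, which is why the averaged output traces a tanh sigmoid.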

Figure 1 shows two p-bit designs: Design 1 (Figure 1B) (Camsari et al., 2017b; Borders et al., 2019) and Design 2 (Figure 1C) (Camsari et al., 2017a; Ostwal and Appenzeller, 2019). Designs 1 and 2 are the fundamental building blocks of spin transfer torque (STT) and spin orbit torque (SOT) magnetoresistive random access memory (MRAM) technologies, respectively (Bhatti et al., 2017). Their technological relevance motivates us to explore their implementations as p-bits. Design 1 is very similar to the commercially available 1T/1MTJ (T: Transistor, MTJ: Magnetic Tunnel Junction) embedded MRAM device where the free layer of the MTJ is replaced by an in-plane magnetic anisotropy (IMA) or perpendicular magnetic anisotropy (PMA) LBM. Design 2 is similar to the basic building block of the SOT-MRAM device (Liu et al., 2012) where the thermal fluctuation of the free layer magnetization of the stochastic MTJ (s-MTJ) (Vodenicarevic et al., 2017, 2018; Mizrahi et al., 2018; Parks et al., 2018; Zink et al., 2018; Borders et al., 2019) is tuned by a spin current generated in a heavy metal layer underneath the LBM due to the SOT effect. The in-plane polarized spin current from the SOT effect in the spin Hall effect (SHE) material in design 2 requires an in-plane LBM to tune its magnetization, although a perpendicular LBM with a tilted anisotropy axis has also been shown experimentally to work (Debashis et al., 2020). While design 2 requires spin current manipulation, design 1 does not rely on it as long as circular in-plane LBMs with continuous-valued magnetization states that are hard to pin are used. In-plane LBMs also provide faster fluctuations than perpendicular ones, leading to faster sampling speed in the probabilistic hardware (Hassan et al., 2019; Kaiser et al., 2019).

The key distinguishing feature of the two p-bit designs (designs 1 and 2) is the time scales in implementing Equation (1a). From a hardware point of view, Equation (1a) has two components: a random number generator (RNG) (rand) and a tunable component (tanh). In design 1, the RNG is the s-MTJ utilizing an LBM and the tunable component is the NMOS transistor, thus having two different time scales in the equation. But in design 2, both the RNG and the tunable component are implemented by a single s-MTJ utilizing an LBM, thus having just one time scale in the equation. This difference in time scales in the two designs is shown in Figure 2. Note that although the two p-bit designs have the same RNG source, namely a fluctuating magnetization, it is the difference in their circuit configuration with or without the NMOS transistor in the MTJ branch that results in different time dynamics of the two designs.

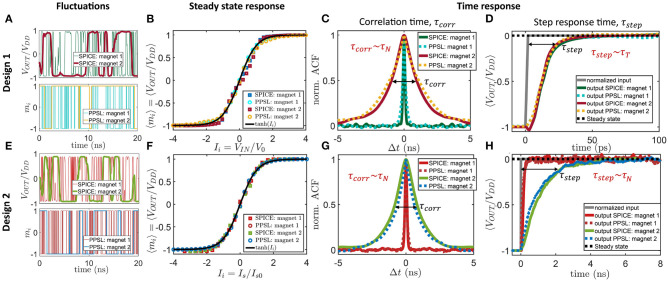

Figure 2.

Autonomous behavioral model for p-bit: (A–D) Behavioral model for the autonomous hardware with design 1 (Figure 1B) is benchmarked with SPICE simulations of the actual device involving experimentally benchmarked modules. The behavioral model (labeled as “PPSL”) shows good agreement with SPICE in terms of capturing fluctuation dynamics (A), steady-state sigmoidal response (B), and two different time responses: autocorrelation time of the fluctuating output under zero input condition labeled as τcorr (C), which is proportional to the LBM retention time τN in the nanosecond range, and the step response time τstep (D) that is proportional to transistor response time τT, which is few picoseconds and much smaller than τN. The magnet parameters used in the simulations are mentioned in section 2. (E–H) Similar benchmarking for p-bit design 2 (Figure 1C). In this case, τstep is proportional to τN. For (B,F), each point for the SPICE simulation was obtained by averaging mi over 1 μs. The step response time for (D,H) is obtained by averaging over 2,000 ensembles where Ii = −5 at t < 0 and Ii = 0 at t > 0.

In traditional software implementations, p-bits are updated sequentially for accurate operation such that after each τS+τN time interval, only one p-bit is updated (Hinton, 2007). This naturally implies the use of sequencers to ensure the sequential update of p-bits. The sequencer generates an Enable signal for each p-bit in the network and ensures that no two p-bits update simultaneously. The sequencer also makes sure that every p-bit is updated at least once in a time step where each time step corresponds to N·(τS+τN), N being the number of p-bits in the network (Roberts and Sahu, 1997; Pervaiz et al., 2018). For symmetrically connected networks (Jij = Jji) such as Boltzmann machines, the update order of p-bits does not matter and any random update order produces the standard probability distribution described by the equilibrium Boltzmann law as long as p-bits are updated sequentially. But for directed acyclic networks (Jij≠0, Jji = 0) or BNs to be consistent with the expected conditional probability distribution, p-bits need to be updated not only sequentially but also in a specific update order, which is from the parent to child nodes (Neal, 1992), similar to the concept of forward sampling in belief networks (Henrion, 1988; Guo and Hsu, 2002; Koller and Friedman, 2009). As long as this parent to child update order is maintained, the network converges to the correct probability distribution described by the probability chain rule or Bayes rule. This effect of update order in a sequential p-circuit is shown on a three p-bit network in Figure 1D. In the Supplementary Material, an example shows how the CPT of the BN can be mapped to a p-circuit following Faria et al. (2018).
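The role of the update order in a sequential p-circuit can be illustrated with a short simulation. The sketch below is not the network of Figure 1D: it assumes a hypothetical three p-bit chain 0 → 1 → 2 with equal weights of 1.5, zero biases, and I0 = 1, and records one sample after every full sweep. For this chain, the chain rule gives a parent-child correlation ⟨m0m1⟩ = tanh(1.5).

```python
import numpy as np

def sweep(m, J, h, order, rng):
    """Update p-bits one at a time in the given order (sequential p-circuit, I0 = 1)."""
    for i in order:
        I_i = h[i] + J[i] @ m  # synapse uses the latest states of the other p-bits
        m[i] = 1.0 if np.tanh(I_i) > rng.uniform(-1.0, 1.0) else -1.0
    return m

def pair_correlation(order, n_sweeps=20000, seed=0):
    """Average of m_0 * m_1, sampled once after every full sweep."""
    rng = np.random.default_rng(seed)
    # Hypothetical directed chain 0 -> 1 -> 2; J[i, j] is the weight from j to i.
    J = np.array([[0.0, 0.0, 0.0],
                  [1.5, 0.0, 0.0],
                  [0.0, 1.5, 0.0]])
    h = np.zeros(3)
    m = np.ones(3)
    acc = 0.0
    for _ in range(n_sweeps):
        m = sweep(m, J, h, order, rng)
        acc += m[0] * m[1]
    return acc / n_sweeps

c_parent_first = pair_correlation(order=(0, 1, 2))  # parent-to-child order
c_child_first = pair_correlation(order=(2, 1, 0))   # reversed order
print(c_parent_first, c_child_first, np.tanh(1.5))
```

With the parent-to-child order each recorded sample is a forward sample of the chain, so the correlation approaches tanh(1.5). In the reversed order, the child is updated from a parent state that is then overwritten before the sample is recorded, so the recorded pair is essentially uncorrelated.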

Unlike sequential p-circuits in the ANN literature, the distinguishing feature of our probabilistic hardware is that it is autonomous: each p-bit runs in parallel without any clocks or sequencers. This autonomous p-circuit (ApC) allows massive parallelism, potentially providing peta flips per second sampling speed (Sutton et al., 2020). The complete sequencer-free operation of our “autonomous” p-circuit is very different from the “asynchronous” operation of spiking neural networks (Merolla et al., 2014; Davies et al., 2018). Although p-bits are fluctuating in parallel in an ApC, it is very unlikely that two p-bits will update at the exact same time since random noise controls their dynamics. Therefore, persistent parallel updates are extremely unlikely and are not a concern. Note that even if p-bits update sequentially, each update has to be informed such that when one p-bit updates it has received the up-to-date input Ii based on the latest states of other p-bits mj that it is connected to. This informed update can be ensured as long as the synapse response time is much faster than the neuron time (τS ≪ τN) and this is a key design rule for an ApC. If the input of the p-bit is based on old states of neighboring p-bits or on time-integrated synaptic inputs, the ApC operation declines in functionality or fails completely. For τS ≪ τN, the ApC works properly for a Boltzmann network without any clock because no specific update order is required in this case. But it is not at all obvious whether an ApC would work for a BN because a particular parent to child informed update order is required in this case, as shown in Figure 1D. As such, it is not straightforward that a clockless autonomous circuit can naturally ensure this specific informed update order. In Figure 1E, we have shown that it is possible to design a hardware p-circuit that can naturally ensure a parent to child informed update order in a BN without any clocks. 
In Figure 1E, two p-bit designs are evaluated for implementing both Boltzmann network and BN. We have shown that design 1 is suitable for both Boltzmann network and BN. But design 2 is suitable for Boltzmann networks only and does not work for BNs in general. The synapse in both types of p-circuits is implemented using a resistive crossbar architecture (Alibart et al., 2013; Camsari et al., 2017b), although there are also other types of hardware synapse implementations based on memristors (Li et al., 2018; Mahmoodi et al., 2019; Mansueto et al., 2019), magnetic tunnel junctions (Ostwal et al., 2019), spin orbit torque driven domain wall motion devices (Zand et al., 2018), phase change memory devices (Ambrogio et al., 2018), and so on. In all the simulations, τS is assumed to be negligible compared to other time scales in the circuit dynamics.

Our proposed probabilistic hardware for BNs shows significant biological relevance for the following reasons: (1) The brain consists of neurons and synapses. The basic building block of our proposed hardware, the “p-bit,” mimics the neuron, and the interconnection among p-bits mimics the synapse function. (2) The components of the brain are stochastic or noisy by nature. The p-bits mimicking the neural dynamics in our proposed hardware are also stochastic. (3) The brain does not have a single clock for synchronous operation and can perform massively parallel processing (Strukov et al., 2019). Our autonomous hardware also does not have any global clock or sequencers and each p-bit fluctuates in parallel, allowing massively parallel operation.

Further, we have provided a behavioral model in section 2 for both designs 1 and 2, illustrating the essential characteristics needed for correct sequencer-free operation of BNs. Both models are benchmarked against state-of-the-art device/circuit models (SPICE) of the actual devices and can be used for the efficient simulation of large-scale autonomous networks.

2. Behavioral Model for Autonomous Hardware

In this section, we will develop an autonomous behavioral model that we will call parallel probabilistic spin logic (PPSL) for design 1 (Figure 1B) and revisit the behavioral model for design 2, which was proposed by Sutton et al. (2020). The term “Parallel” refers to all the p-bits fluctuating in parallel without any clocks or sequencers. These behavioral models are high-level representations of the p-circuit and p-bit behavior and connect Equations (1a) and (1b) to the hardware p-bit designs. Please note the parameters introduced in these models will represent certain parts of the p-bit and synapse behavior like MTJ resistances (rMTJ) and transistor resistances (rT) but are generally dimensionless apart from time variables (e.g., τT,τN). The advantage of these models is that they are computationally less expensive to use than full SPICE simulations while preserving the crucial device and system characteristics.

2.1. Autonomous Behavioral Model: Design 1

The autonomous circuit behavior of design 1 can be explained by slightly modifying the two equations (Equations 1a,b) stated in section 1. The fluctuating resistance of the low barrier nanomagnet-based MTJ is represented by a correlated random number rMTJ with values between −1 and +1 and an average dwell time of the fluctuation denoted by τN. The NMOS transistor tunable resistance is denoted by rT and the inverter is represented by a sgn function. Thus, the normalized output mi = VOUT, i/VDD of the ith p-bit can be expressed as:

$$m_i(t+\Delta t) = \mathrm{sgn}\left[r_{T,i}(t+\Delta t) - r_{MTJ,i}(t+\Delta t)\right] \tag{2}$$

where Δt is the simulation time step, rT, i represents the NMOS transistor resistance tunable by the normalized input Ii = VIN, i/V0 (compare Equation 1a) where V0 is a fitting parameter which is ≈50mV for the chosen parameters and transistor technology (compare Figure 2B) and rMTJ, i is a correlated random number generator with an average retention time of τN. For design 1, the transistor represents the tunable component that works in conjunction with the unbiased stochastic signal of the MTJ. rT, i as a function of input Ii is approximated by a tanh function with a response time denoted by τT modeled by the following equations:

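$$r_{T,i}(t+\Delta t) = r_{T,i}(t)\,\exp\left(-\frac{\Delta t}{\tau_T}\right) + \left[1-\exp\left(-\frac{\Delta t}{\tau_T}\right)\right]\tanh\big(I_i(t+\Delta t)\big) \tag{3}$$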

where it can be clearly seen that the dimensionless quantity rT, i representing the transistor resistance is bounded by −1 ≤ rT,i ≤ 1 for all synaptic inputs. Figure 2B shows by utilizing SPICE simulation how Ii influences the average output mi and shows that the average response of the circuit is in good agreement with the tanh-function used in Equation (3).

The synapse delay τS in computing the input Ii can be modeled by:

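$$I_i(t+\Delta t) = I_i(t)\,\exp\left(-\frac{\Delta t}{\tau_S}\right) + \left[1-\exp\left(-\frac{\Delta t}{\tau_S}\right)\right] I_0\left(h_i + \sum_j J_{ij}\, m_j(t)\right) \tag{4}$$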

For calculating rMTJ, i, at time t+Δt a new random number will be picked according to the following equations:

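$$r_{flip,i}(t+\Delta t) = \mathrm{sgn}\left[\exp\left(-\frac{\Delta t}{\tau_N}\right) - \mathrm{rand}[0,1]\right] \tag{5a}$$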

where rand[0, 1] is a uniformly distributed random number between 0 and 1 and τN represents the average retention time of the fluctuating MTJ resistance. If rflip is -1, a new random rMTJ will be chosen between −1 and +1. Otherwise, the previous rMTJ(t) will be kept in the next time step (t+Δt), which can be expressed as

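$$r_{MTJ,i}(t+\Delta t) = \frac{r_{flip,i}(t+\Delta t)+1}{2}\, r_{MTJ,i}(t) - \frac{r_{flip,i}(t+\Delta t)-1}{2}\, \mathrm{rand}[-1,+1] \tag{5b}$$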

where −1 ≤ rMTJ,i(t) ≤ 1.
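The design 1 update rules described above can be sketched for a single p-bit at a fixed input. This is an illustrative sketch under stated assumptions rather than the authors' implementation: the transistor term is assumed to relax exponentially toward tanh(Ii) with time constant τT, the MTJ term is redrawn uniformly in (−1, +1) with probability 1 − exp(−Δt/τN) per step, and the output is the sign of their difference. Synapse delay and pinning are ignored, the function name `simulate_design1` is hypothetical, and times are in picoseconds using the magnet 1 values quoted in the text.

```python
import numpy as np

def simulate_design1(I, n_steps=2000, burn_in=50, n_rep=2000,
                     dt=1.0, tau_N=150.0, tau_T=3.0, seed=0):
    """Average output of independent design 1 p-bits held at a fixed input I."""
    rng = np.random.default_rng(seed)
    decay_T = np.exp(-dt / tau_T)    # assumed first-order transistor response
    keep_prob = np.exp(-dt / tau_N)  # probability that r_MTJ is retained in one step
    r_T = np.zeros(n_rep)
    r_MTJ = rng.uniform(-1.0, 1.0, n_rep)
    total = 0.0
    for step in range(n_steps):
        # Transistor term relaxes toward tanh(I) on the fast time scale tau_T.
        r_T = decay_T * r_T + (1.0 - decay_T) * np.tanh(I)
        # MTJ term: keep the old value, or redraw it when a "flip" event occurs.
        flip = rng.uniform(0.0, 1.0, n_rep) > keep_prob
        r_MTJ = np.where(flip, rng.uniform(-1.0, 1.0, n_rep), r_MTJ)
        # The inverter acts as a sign function on the difference of the two terms.
        m = np.where(r_T > r_MTJ, 1.0, -1.0)
        if step >= burn_in:
            total += m.mean()
    return total / (n_steps - burn_in)

mean_out = simulate_design1(I=1.0)
print(mean_out, np.tanh(1.0))
```

Since the MTJ term is uniform on (−1, +1) at any instant, the probability of a +1 output equals (1 + rT)/2, so the long-time average output should approach tanh(Ii) regardless of τN.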

The charge current flowing through the MTJ branch of p-bit design 1 can get polarized by the fixed layer of the MTJ and generate a spin current Is that can tune/pin the MTJ dynamics by modifying τN. This effect is needed for tuning the output of design 2 but is not desired in design 1. However, the developed behavioral model can account for this pinning effect according to

$$\tau_N = \tau_N^0 \exp\left(r_{MTJ}\, I_{MTJ}\right) \tag{6}$$

where $\tau_N^0$ is the retention time of rMTJ when IMTJ = 0. The dimensionless pinning current IMTJ is defined as IMTJ = Is/Is, 0 where Is, 0 can be extracted by following the procedure of Figure 2F. This pinning effect by IMTJ is much smaller in in-plane magnets (IMA) than in perpendicular magnets (PMA) (Hassan et al., 2019) and is ignored for design 1 throughout this paper.

Figures 2A–D show the comparison of this behavioral model for p-bit design 1 with SPICE simulation of the actual hardware in terms of fluctuation dynamics, sigmoidal characteristic response, autocorrelation time (τcorr), and step response time (τstep). In all cases the behavioral model closely matches SPICE simulations. The SPICE simulation involves experimentally benchmarked modules for different parts of the device. The SPICE model for the s-MTJ solves the stochastic Landau–Lifshitz–Gilbert equation for the LBM physics. For the transistors, the 14 nm Predictive Technology Model is used. HSPICE is used as the simulator with the .trannoise function and a time step of 1 ps. The simulation framework was benchmarked experimentally and by using standard simulation tools in the field (Datta, 2012; Torunbalci et al., 2018). The autonomous behavioral model for design 1 is labeled as “PPSL: design 1.” The benchmarking is done for two different LBMs: (1) Faster fluctuating magnet 1 with saturation magnetization Ms = 1100 emu/cc, diameter D = 22 nm, thickness th = 2 nm, in-plane easy axis anisotropy Hk = 1 Oe, damping coefficient α = 0.01, demagnetization field Hd = 4πMs and (2) slower fluctuating magnet 2 with the same parameters as in magnet 1 except D = 150 nm. The supply voltage was set to VDD = −VSS = 0.4 V. The fast and slow fluctuations of the normalized output mi = VOUT, i/VDD are captured by changing the τN parameter in the PPSL model. In the steady-state sigmoidal response, V0 is a tanh fitting parameter that defines the width of the sigmoid and lies within the range of 40–60 mV, depending on which part of the sigmoid needs to be better matched. In Figure 2B, a V0 value of 50 mV is used to fit the sigmoid from SPICE simulation. The following parameters have been extracted from the calibration shown in Figure 2, where Δt = 1 ps was used: τN = 150 ps (magnet 1), τN = 1.5 ns (magnet 2), τT = 3 ps, Is, 0 = 120 μA (magnet 1), Is, 0 = 1 mA (magnet 2).

There are two types of time responses: (1) Autocorrelation time under zero input condition labeled as τcorr and (2) step response time τstep. The full width half maximum (FWHM) of the autocorrelation function of the fluctuating output under zero input is defined by τcorr, which is proportional to the retention time τN of the LBM. The step response time τstep is obtained by taking an average of the p-bit output over many ensembles when the input Ii is stepped from a large negative value to zero at time t = 0 and measuring the time it takes for the ensemble averaged output to reach its statistically correct value consistent with the new input. τstep defines how fast the first statistically correct sample can be obtained after the input is changed. For p-bit design 1, τstep is independent of LBM retention time τN and is defined by the NMOS transistor response time τT, which is much faster (few picoseconds) than LBM fluctuation time τN. The effect of these two very different time scales in design 1 (τstep ≪ τcorr) on an autonomous BN is described in section 3.

2.2. Autonomous Behavioral Model: Design 2

The autonomous behavioral model for design 2 is proposed in Sutton et al. (2020). In this article, we have benchmarked this model with the SPICE simulation of the single p-bit steady state and time responses shown in Figures 2E–H. According to this model, the normalized output mi = VOUT, i/VDD can be expressed as:

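$$p_{NOTflip,i}(t+\Delta t) = \exp\left(-\frac{\Delta t}{\tau_N \exp\big(I_i\, m_i(t)\big)}\right) \tag{7a}$$

$$m_i(t+\Delta t) = m_i(t)\;\mathrm{sgn}\left[p_{NOTflip,i}(t+\Delta t) - \mathrm{rand}[0,1]\right] \tag{7b}$$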

where pNOTflip, i(t+Δt) is the probability of retention of the ith p-bit (or “not flipping”) in the next time step that is a function of average neuron flip time τN, input Ii, and the current p-bit output mi(t). Figure 2 shows how this simple autonomous behavioral model for design 2 matches reasonably well with SPICE simulation of the device in terms of fluctuation dynamics (Figure 2E), sigmoidal characteristic response (Figure 2F), autocorrelation time (τcorr) (Figure 2G), and step response time (τstep) (Figure 2H). In design 2, τstep and τcorr are both proportional to LBM fluctuation time τN unlike design 1.
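A single design 2 p-bit can be sketched in the same spirit. The text only states that the retention probability depends on τN, Ii, and mi(t); the specific form used below, exp(−Δt/(τN·exp(Ii·mi))), is an assumption made for illustration, chosen so that the dwell time of a state grows when the state is aligned with the input. The function name `simulate_design2` and the parameter values are hypothetical.

```python
import numpy as np

def simulate_design2(I, n_steps=3000, burn_in=500, n_rep=1000,
                     dt=1.0, tau_N=150.0, seed=0):
    """Average output of independent design 2 p-bits held at a fixed input I."""
    rng = np.random.default_rng(seed)
    m = rng.choice([-1.0, 1.0], size=n_rep)
    total = 0.0
    for step in range(n_steps):
        # Assumed retention probability: states aligned with I are kept longer.
        p_not_flip = np.exp(-dt / (tau_N * np.exp(I * m)))
        # Keep the current state with probability p_not_flip, otherwise flip it.
        stay = rng.uniform(0.0, 1.0, n_rep) < p_not_flip
        m = np.where(stay, m, -m)
        if step >= burn_in:
            total += m.mean()
    return total / (n_steps - burn_in)

mean_out = simulate_design2(I=1.0)
print(mean_out, np.tanh(1.0))
```

Under this assumed form, the ratio of dwell times in the +1 and −1 states is exp(2Ii), which gives an average output of tanh(Ii). Note that here the response to a changed input is set by the same τN that governs the fluctuations, in contrast to design 1.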

Different time scales in p-bit designs 1 and 2 are also reported in Hassan et al. (2019) in an energy-delay analysis context. In this article, we explain the effect of these time scales in designing an autonomous BN (section 3).

3. Difference Between Designs 1 and 2 in Implementing Bayesian Networks

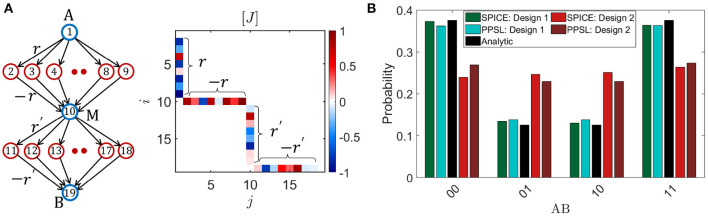

The behavioral models introduced in section 2 are applied to implement a multi-layer belief/BN with 19 p-bits and random interconnection strengths between +1 and −1 (Figure 3A). For illustrative purposes, the interconnections are designed in such a way that although there are no meaningful correlations between the blue and red colored nodes with random couplings, pairs of intermediate nodes (A, M1) and (M1, B) get negatively correlated because of a net −r² type coupling through each branch connecting the pairs. So it is expected that the start and end nodes (A, B) get positively correlated. Figure 3B shows histograms of four configurations (00, 01, 10, 11) of the pair of nodes A and B obtained from different approaches: Bayes rule (labeled as Analytic), SPICE simulation of design 1 (SPICE: Design 1) and design 2 (SPICE: Design 2), and autonomous behavioral model for design 1 (PPSL: Design 1) and design 2 (PPSL: design 2). The results from SPICE simulation and the behavioral model for design 1 match the standard analytical values reasonably well, showing the 00 and 11 states with the highest probability, whereas the design 2 autonomous hardware does not match the analytical results and shows approximately equal peaks. We have tested this basic conclusion for other networks with more complex topology as well, as shown in Supplementary Figure 1. The analytical values are obtained from applying the standard joint probability rule for BNs (Pearl, 2014; Russell and Norvig, 2016), which is:

Figure 3.

Difference between designs 1 and 2: (A) The behavioral models described in Figure 2 are applied to simulate a 19 p-bit BN with random Jij between +1 and −1. The indices i and j of Jij correspond to the numbers inside each circle. The interconnections are designed in such a way that pairs of intermediate nodes (A, M1) and (M1, B) get anti-correlated and (A, B) gets positively correlated. (B) The probability distribution of four configurations of AB is shown in a histogram from different approaches (SPICE, behavioral model and analytic). The behavioral models for the two designs (labeled as PPSL) match reasonably well with the corresponding results from SPICE simulation of the actual hardware. Note that while design 1 matches the standard analytical values quite well, design 2 does not work as an autonomous BN in general. For each histogram, 10⁶ samples have been collected.

$$P(x_1, x_2, \ldots, x_N) = \prod_{i=1}^{N} P\big(x_i \mid \mathrm{Parents}(x_i)\big) \tag{8}$$

Joint probability between two specific nodes xi and xj can be calculated from the above equation by summing over all configurations of the other nodes in the network, which becomes computationally expensive for larger networks. But one major advantage of our probabilistic hardware is that probabilities of specific nodes can be obtained by looking at the nodes of interest ignoring all other nodes in the system similar to what Feynman stated about a probabilistic computer imitating the probabilistic laws of nature (Feynman, 1982). Indeed, in the BN example in Figure 3, the probabilities of different configurations of nodes A and B were obtained by looking at the fluctuating outputs of the two nodes ignoring all other nodes. For the SPICE simulation of design 1 hardware, tanh fitting parameter V0 = 57 mV is used and the mapping principle from dimensionless coupling terms Jij to the coupling resistances in the hardware is described in Faria et al. (2018). An example of this mapping is given in the Supplementary Material.
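The analytic reference values can be computed for small networks by brute-force enumeration of the joint probability rule. The sketch below assumes each conditional is the p-bit probability P(mi | parents) = (1 + mi·tanh(Ii))/2 with I0 = 1, which follows from a uniform random number in (−1, +1); the three-node chain and its weights are hypothetical and stand in for the 19 p-bit network of Figure 3.

```python
import itertools
import numpy as np

def joint_probability(m, J, h):
    """Product of p-bit conditionals P(m_i | parents) = (1 + m_i * tanh(I_i)) / 2."""
    I = h + J @ m
    return float(np.prod(0.5 * (1.0 + m * np.tanh(I))))

def pair_marginal(J, h, a, b):
    """Marginal distribution of nodes (a, b), summing over all other nodes."""
    n = len(h)
    probs = {(0, 0): 0.0, (0, 1): 0.0, (1, 0): 0.0, (1, 1): 0.0}
    for bits in itertools.product([-1.0, 1.0], repeat=n):
        m = np.array(bits)
        key = (int(m[a] > 0), int(m[b] > 0))  # map -1/+1 to 0/1 labels
        probs[key] += joint_probability(m, J, h)
    return probs

# Hypothetical directed chain 0 -> 1 -> 2; J[i, j] is the weight from j to i.
J = np.array([[0.0, 0.0, 0.0],
              [1.5, 0.0, 0.0],
              [0.0, 1.5, 0.0]])
h = np.zeros(3)
p_ab = pair_marginal(J, h, a=0, b=2)
corr_ab = p_ab[(0, 0)] + p_ab[(1, 1)] - p_ab[(0, 1)] - p_ab[(1, 0)]
print(p_ab, corr_ab)
```

The loop visits 2^N configurations, which is the exponential cost mentioned above; for the chain used here the end-to-end correlation reduces to tanh(1.5)², which gives a quick sanity check of the enumeration.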

Design 1 works for a BN and design 2 does not because of the two very different time responses of the two designs shown in Figure 2: the tunable component is the transistor in design 1 (τstep∝τT) and the MTJ in design 2 (τstep∝τN). It is these two different time scales in design 1 (τstep ≪ τcorr) that naturally ensure a parent to child informed update order in a BN. The reason is that when τstep is small, each child node can respond almost immediately to any change of its parent nodes, which happens due to a random event with a much larger time scale ∝τcorr. Thus, due to the fast step response, information about changing p-bits at the parent nodes can propagate quickly through the network, and the outputs of the child nodes become conditionally consistent with the parent nodes very quickly. Otherwise, if τcorr gets comparable to τstep, the child nodes will not be able to keep up with the fast changing parent nodes since the information about the parent p-bit state has not yet propagated through the network. As a result, the child nodes will produce a substantial number of statistically incorrect samples over the entire time range, thus deviating from the correct probability distribution. This effect is especially strong for networks where the coupling strength between p-bits is large.

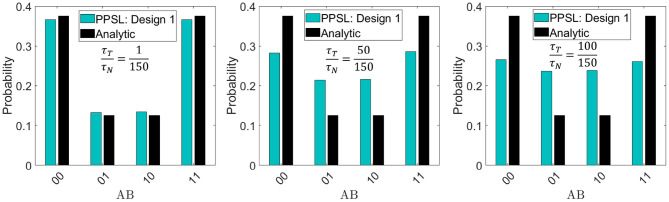

To illustrate this point, the effect of the τstep/τcorr ratio is shown in Figure 4 for the same BN presented in Figure 3 by plotting the histogram of AB configurations for different τT/τN ratios. When the τT/τN ratio is small, the histogram converges to the correct distribution. As τT gets comparable to τN, the histogram begins to diverge from the correct distribution. Thus, the very fast NMOS transistor response in design 1 makes it suitable for an autonomous BN hardware. Note that under certain conditions, results from design 2 can also match the analytical results if the spin current bias is large enough to drive down the step response time and ensure τstep ≪ τcorr.

Figure 4.

Effect of step response time in design 1: The reason for design 1 to work accurately as an autonomous Bayesian network as shown in Figure 3 is the two different time scales (τT and τN) in this design with the condition that τT ≪ τN. The same histogram shown in Figure 3 is plotted using the proposed behavioral model for different τT/τN ratios and compared with the analytical values. It can be seen that as τT gets comparable to τN, the probability distribution diverges from the standard statistical values. For each histogram, 10⁶ samples have been collected.
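The dependence on the τT/τN ratio can be reproduced qualitatively on a much smaller example than the 19 p-bit network. The sketch below assumes a hypothetical two-node BN (parent 0 → child 1 with weight J = 2), an instantaneous synapse, no pinning, and the same assumed design 1 update rules as before (exponential transistor relaxation, uniform redraw of the MTJ term). For this network the analytic parent-child correlation is tanh(J).

```python
import numpy as np

def parent_child_correlation(tau_T, tau_N=150.0, J=2.0, dt=1.0,
                             n_steps=3000, burn_in=500, n_rep=500, seed=0):
    """Time- and ensemble-averaged <m_parent * m_child> for an autonomous 2-node BN."""
    rng = np.random.default_rng(seed)
    decay_T = np.exp(-dt / tau_T)
    keep_prob = np.exp(-dt / tau_N)
    r_T = np.zeros((2, n_rep))                  # row 0: parent, row 1: child
    r_MTJ = rng.uniform(-1.0, 1.0, (2, n_rep))
    m = np.where(r_T > r_MTJ, 1.0, -1.0)
    total = 0.0
    for step in range(n_steps):
        # Instantaneous synapse: the parent has no input, the child sees J * m_parent.
        I = np.stack([np.zeros(n_rep), J * m[0]])
        r_T = decay_T * r_T + (1.0 - decay_T) * np.tanh(I)
        flip = rng.uniform(0.0, 1.0, (2, n_rep)) > keep_prob
        r_MTJ = np.where(flip, rng.uniform(-1.0, 1.0, (2, n_rep)), r_MTJ)
        m = np.where(r_T > r_MTJ, 1.0, -1.0)
        if step >= burn_in:
            total += np.mean(m[0] * m[1])
    return total / (n_steps - burn_in)

c_fast = parent_child_correlation(tau_T=3.0)     # tau_T << tau_N
c_slow = parent_child_correlation(tau_T=150.0)   # tau_T comparable to tau_N
print(c_fast, c_slow, np.tanh(2.0))
```

In this toy model the child's transistor term is a low-pass filtered copy of the parent state, so the correlation stays close to tanh(J) only while the filter is much faster than the parent's fluctuations, and it degrades noticeably once τT becomes comparable to τN.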

So apart from ensuring a fast synapse compared to the neuron fluctuation time (τS ≪ τN), which is the design rule for any autonomous probabilistic hardware, the autonomous BN demands an additional p-bit design rule: a much faster step response time of the p-bit compared to its fluctuation time (τstep ≪ τN), as ensured in design 1. In all the simulations, the LBM was a circular in-plane magnet whose magnetization spans all values between +1 and −1 with a negligible pinning effect. If the LBM is a PMA magnet with bipolar fluctuations having just two values +1 and −1, design 1 will not provide any sigmoidal response except with a substantial pinning effect (Borders et al., 2019). Under this condition, τstep of design 1 will again be comparable to τN and the system will not work as an autonomous BN in general. Therefore, an LBM with continuous-range fluctuations is expected to be needed for a design 1 p-bit to work properly in a BN.

4. Discussion

In this article, we have elucidated the design criteria for autonomous clockless hardware for BNs, which require a specific parent-to-child update order when implemented on a probabilistic circuit. By performing SPICE simulations of two autonomous probabilistic hardware designs built out of p-bits (designs 1 and 2 in Figure 1), we have shown that the autonomous hardware will naturally ensure a parent-to-child informed update order without any sequencers if the step response time (τstep) of the p-bit is much smaller than its autocorrelation time (τcorr). This criterion of having two different time scales is met in design 1, as τstep comes from the NMOS transistor response time τT in this design, which is a few picoseconds. We have also proposed an autonomous behavioral model for design 1 and benchmarked it against SPICE simulation of the actual hardware. All the simulations using the behavioral model for design 1 were performed ignoring some non-ideal effects, listed as follows:

- Pinning of the s-MTJ fluctuation due to the STT effect is ignored by assuming IMTJ = 0 in Equation (6). This is a reasonable assumption for circular in-plane magnets, which are very difficult to pin because of the large demagnetization field that is always present, irrespective of the energy barrier (Hassan et al., 2019). The pinning effect is more prominent in perpendicular magnetic anisotropy (PMA) magnets. It is important to include the pinning effect in p-bits with bipolar LBM fluctuations because, in this case, the p-bit does not provide a sigmoidal response without the pinning current. This effect has also been observed experimentally by Borders et al. (2019) for PMA magnets. Such a p-bit design with bipolar PMA and STT pinning might not work for BNs in general because, in this case, τstep will depend on the magnet fluctuation time τN.

- In the proposed behavioral model, the step response time of the NMOS transistor τT in design 1 is assumed to be independent of the input I. In real hardware, however, τT depends on I.

- The NMOS transistor resistance rT is approximated as a tanh function for simplicity. To capture the hardware behavior more accurately, the tanh can be replaced by a more complicated function, and the weight matrix [J] will then have to be learnt around that function.

All the non-ideal effects listed above are expected to have a minimal effect on the probability distributions shown in this article. Real LBMs may suffer from common fabrication defects, resulting in variations in the average magnet fluctuation time τN (Abeed and Bandyopadhyay, 2019). The autonomous BN is also quite tolerant to such variations in τN as long as τT ≪ min(τN).
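The tolerance condition τT ≪ min(τN) can be checked numerically for a given set of magnets. The sketch below is illustrative only: the function name, the factor of 10 used to stand in for "≪", and the sample τN values are assumptions, not values taken from the simulations in this article.

```python
def meets_timescale_rule(tau_t, tau_n_values, margin=10.0):
    """Check tau_T << min(tau_N), reading "<<" as "at least `margin` times smaller".

    The margin of 10 is an assumed threshold, not a value from the article.
    """
    return tau_t * margin <= min(tau_n_values)


# Hypothetical spread of magnet fluctuation times caused by fabrication defects (seconds).
tau_n_spread = [150e-12, 200e-12, 120e-12]
tau_t = 5e-12  # transistor response of a few picoseconds, chosen for illustration

print(meets_timescale_rule(tau_t, tau_n_spread))              # fastest magnet is still far slower than tau_T
print(meets_timescale_rule(tau_t, tau_n_spread + [20e-12]))   # one unusually fast magnet violates the rule
```

Because the rule depends only on the fastest magnet, a single outlier with a short τN is enough to break the condition even when the average τN is large.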

For design 1 (transistor-controlled) to function as a p-bit whose step response time (τstep) is much smaller than its average fluctuation time (τN), the LBM fluctuation needs to be continuous and not bipolar. While most experimental implementations of low barrier magnetic tunnel junctions or spin-valves exhibit telegraphic (binary) fluctuations (Pufall et al., 2004; Locatelli et al., 2014; Parks et al., 2018; Debashis et al., 2020), theoretical results (Abeed and Bandyopadhyay, 2019; Hassan et al., 2019; Kaiser et al., 2019) indicate that it should be possible to design low barrier magnets with continuous fluctuations. Preliminary experimental results for such circular disk nanomagnets have been presented in Debashis et al. (2016). We believe that the lack of experimental literature on such magnets is partly due to a lack of interest in randomly fluctuating magnets, which have long been discarded as impractical and irrelevant. The other experimentally demonstrated p-bits (Ostwal et al., 2018; Ostwal and Appenzeller, 2019; Debashis, 2020) fall under the design 2 category, with the LBM magnetization tuned by the SOT effect, and are not suitable for autonomous BN operation in general. It might also be possible to design p-bits using other phenomena such as voltage controlled magnetic anisotropy (Amiri and Wang, 2012), but this is beyond the scope of the present study. Here, we have specifically focused on two designs that can be implemented with existing MRAM technology based on STT and SOT.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author/s.

Author Contributions

RF and SD wrote the paper. RF performed the simulations. JK helped set up the simulations. KC developed the simulation modules for the BSN in SPICE. All authors discussed the results and helped refine the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank Professor Joerg Appenzeller from Purdue University for helpful discussions. This manuscript has been released as a preprint at arXiv (Faria et al., 2020).

Funding. This work was supported in part by ASCENT, one of six centers in JUMP, a Semiconductor Research Corporation (SRC) program sponsored by DARPA.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2021.584797/full#supplementary-material

References

- Abeed M. A., Bandyopadhyay S. (2019). Low energy barrier nanomagnet design for binary stochastic neurons: design challenges for real nanomagnets with fabrication defects. IEEE Magnet. Lett. 10, 1–5. 10.1109/LMAG.2019.2929484

- Ackley D. H., Hinton G. E., Sejnowski T. J. (1985). A learning algorithm for Boltzmann machines. Cogn. Sci. 9, 147–169. 10.1207/s15516709cog0901_7

- Alibart F., Zamanidoost E., Strukov D. B. (2013). Pattern classification by memristive crossbar circuits using ex situ and in situ training. Nat. Commun. 4, 1–7. 10.1038/ncomms3072

- Ambrogio S., Narayanan P., Tsai H., Shelby R. M., Boybat I., di Nolfo C., et al. (2018). Equivalent-accuracy accelerated neural-network training using analogue memory. Nature 558, 60–67. 10.1038/s41586-018-0180-5

- Amiri P. K., Wang K. L. (2012). “Voltage-controlled magnetic anisotropy in spintronic devices,” in Spin, Vol. 2 (World Scientific), 1240002. 10.1142/S2010324712400024

- Arias J., Martinez-Gomez J., Gamez J. A., de Herrera A. G. S., Müller H. (2016). Medical image modality classification using discrete bayesian networks. Comput. Vis. Image Understand. 151, 61–71. 10.1016/j.cviu.2016.04.002

- Behin-Aein B., Diep V., Datta S. (2016). A building block for hardware belief networks. Sci. Rep. 6:29893. 10.1038/srep29893

- Bhatti S., Sbiaa R., Hirohata A., Ohno H., Fukami S., Piramanayagam S. (2017). Spintronics based random access memory: a review. Mater. Today 20, 530–548. 10.1016/j.mattod.2017.07.007

- Bielza C., Larrañaga P. (2014). Bayesian networks in neuroscience: a survey. Front. Comput. Neurosci. 8:131. 10.3389/fncom.2014.00131

- Borders W. A., Pervaiz A. Z., Fukami S., Camsari K. Y., Ohno H., Datta S. (2019). Integer factorization using stochastic magnetic tunnel junctions. Nature 573, 390–393. 10.1038/s41586-019-1557-9

- Brown W. (1979). Thermal fluctuation of fine ferromagnetic particles. IEEE Trans. Magnet. 15, 1196–1208. 10.1109/TMAG.1979.1060329

- Camsari K. Y., Chowdhury S., Datta S. (2019). Scalable emulation of sign-problem-free hamiltonians with room-temperature p-bits. Phys. Rev. Appl. 12:034061. 10.1103/PhysRevApplied.12.034061

- Camsari K. Y., Debashis P., Ostwal V., Pervaiz A. Z., Shen T., Chen Z., et al. (2020). From charge to spin and spin to charge: Stochastic magnets for probabilistic switching. Proc. IEEE. 108:1322–1337. 10.1109/JPROC.2020.2966925

- Camsari K. Y., Faria R., Sutton B. M., Datta S. (2017a). Stochastic p-bits for invertible logic. Phys. Rev. X 7:031014. 10.1103/PhysRevX.7.031014

- Camsari K. Y., Salahuddin S., Datta S. (2017b). Implementing p-bits with embedded mtj. IEEE Electron Dev. Lett. 38, 1767–1770. 10.1109/LED.2017.2768321

- Chakrapani L. N., Korkmaz P., Akgul B. E., Palem K. V. (2007). Probabilistic system-on-a-chip architectures. ACM Trans. Design Automat. Electron. Syst. 12:29. 10.1145/1255456.1255466

- Correa M., Bielza C., Pamies-Teixeira J. (2009). Comparison of bayesian networks and artificial neural networks for quality detection in a machining process. Expert Syst. Appl. 36, 7270–7279. 10.1016/j.eswa.2008.09.024

- Darwiche A. (2009). Modeling and Reasoning With Bayesian Networks. Cambridge University Press. Available online at: https://www.cambridge.org/core/books/modeling-and-reasoning-with-bayesian-networks/8A3769B81540EA93B525C4C2700C9DE6 10.1017/CBO9780511811357

- Datta D. (2012). Modeling of spin transport in MTJ devices (Ph.D. thesis), Purdue University Graduate School; ProQuest. Available online at: https://docs.lib.purdue.edu/dissertations/AAI3556189/

- Davies M., Srinivasa N., Lin T.-H., Chinya G., Cao Y., Choday S. H., et al. (2018). Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99. 10.1109/MM.2018.112130359

- Debashis P. (2020). Spintronic devices as P-bits for probabilistic computing (Ph.D. thesis). Purdue University Graduate School. Available online at: https://hammer.figshare.com/articles/thesis/Spintronic_Devices_as_P-bits_for_Probabilistic_Computing/11950395

- Debashis P., Faria R., Camsari K. Y., Appenzeller J., Datta S., Chen Z. (2016). “Experimental demonstration of nanomagnet networks as hardware for ising computing,” in 2016 IEEE International Electron Devices Meeting (IEDM) (San Francisco, CA: IEEE), 34. 10.1109/IEDM.2016.7838539

- Debashis P., Faria R., Camsari K. Y., Datta S., Chen Z. (2020). Correlated fluctuations in spin orbit torque coupled perpendicular nanomagnets. Phys. Rev. B 101:094405. 10.1103/PhysRevB.101.094405

- Faria R., Camsari K. Y., Datta S. (2017). Low-barrier nanomagnets as p-bits for spin logic. IEEE Magnet. Lett. 8, 1–5. 10.1109/LMAG.2017.2685358

- Faria R., Camsari K. Y., Datta S. (2018). Implementing bayesian networks with embedded stochastic mram. AIP Adv. 8:045101. 10.1063/1.5021332

- Faria R., Kaiser J., Camsari K. Y., Datta S. (2020). Hardware design for autonomous bayesian networks. arXiv preprint arXiv:2003.01767.

- Feynman R. P. (1982). Simulating physics with computers. Int. J. Theor. Phys. 21:467–488. 10.1007/BF02650179

- Friedman J. S., Calvet L. E., Bessiére P., Droulez J., Querlioz D. (2016). Bayesian inference with muller c-elements. IEEE Trans. Circ. Syst. I Regular Pap. 63, 895–904. 10.1109/TCSI.2016.2546064

- Friedman N., Linial M., Nachman I., Pe'er D. (2000). Using bayesian networks to analyze expression data. J. Comput. Biol. 7, 601–620. 10.1089/106652700750050961

- Guo H., Hsu W. (2002). “A survey of algorithms for real-time bayesian network inference,” in Joint Workshop on Real Time Decision Support and Diagnosis Systems. American Association for Artificial Intelligence. Available online at: https://www.aaai.org/Papers/Workshops/2002/WS-02-15/WS02-15-001.pdf

- Hassan O., Faria R., Camsari K. Y., Sun J. Z., Datta S. (2019). Low-barrier magnet design for efficient hardware binary stochastic neurons. IEEE Magnet. Lett. 10, 1–5. 10.1109/LMAG.2019.2910787

- Heckerman D., Breese J. S. (1996). Causal independence for probability assessment and inference using bayesian networks. IEEE Trans. Syst. Man Cybernet A Syst. Hum. 26, 826–831. 10.1109/3468.541341

- Henrion M. (1988). “Propagating uncertainty in bayesian networks by probabilistic logic sampling,” in Machine Intelligence and Pattern Recognition, (Elsevier) 5:149–163. 10.1016/B978-0-444-70396-5.50019-4

- Hinton G. E. (2007). Boltzmann machine. Scholarpedia 2:1668. 10.4249/scholarpedia.1668

- Jansen R., Yu H., Greenbaum D., Kluger Y., Krogan N. J., Chung S., et al. (2003). A bayesian networks approach for predicting protein-protein interactions from genomic data. Science 302, 449–453. 10.1126/science.1087361

- Jonas E. M. (2014). Stochastic architectures for probabilistic computation (Ph.D. thesis). Massachusetts Institute of Technology, Cambridge, MA, United States.

- Kaiser J., Faria R., Camsari K. Y., Datta S. (2020). Probabilistic circuits for autonomous learning: a simulation study. Front. Comput. Neurosci. 14:14. 10.3389/fncom.2020.00014

- Kaiser J., Rustagi A., Camsari K., Sun J., Datta S., Upadhyaya P. (2019). Subnanosecond fluctuations in low-barrier nanomagnets. Phys. Rev. Appl. 12:054056. 10.1103/PhysRevApplied.12.054056

- Koller D., Friedman N. (2009). Probabilistic Graphical Models: Principles and Techniques. MIT Press. Available online at: https://mitpress.mit.edu/books/probabilistic-graphical-models

- Li C., Belkin D., Li Y., Yan P., Hu M., Ge N., et al. (2018). Efficient and self-adaptive in-situ learning in multilayer memristor neural networks. Nat. Commun. 9, 1–8. 10.1038/s41467-018-04484-2

- Liu L., Pai C.-F., Li Y., Tseng H., Ralph D., Buhrman R. (2012). Spin-torque switching with the giant spin hall effect of tantalum. Science 336, 555–558. 10.1126/science.1218197

- Locatelli N., Mizrahi A., Accioly A., Matsumoto R., Fukushima A., Kubota H., et al. (2014). Noise-enhanced synchronization of stochastic magnetic oscillators. Phys. Rev. Appl. 2:034009. 10.1103/PhysRevApplied.2.034009

- Lopez-Diaz L., Torres L., Moro E. (2002). Transition from ferromagnetism to superparamagnetism on the nanosecond time scale. Phys. Rev. B 65:224406. 10.1103/PhysRevB.65.224406

- Mahmoodi M., Prezioso M., Strukov D. (2019). Versatile stochastic dot product circuits based on nonvolatile memories for high performance neurocomputing and neurooptimization. Nat. Commun. 10, 1–10. 10.1038/s41467-019-13103-7

- Mansueto M., Chavent A., Auffret S., Joumard I., Nath J., Miron I. M., et al. (2019). Realizing an isotropically coercive magnetic layer for memristive applications by analogy to dry friction. Phys. Rev. Appl. 12:044029. 10.1103/PhysRevApplied.12.044029

- Merolla P. A., Arthur J. V., Alvarez-Icaza R., Cassidy A. S., Sawada J., Akopyan F., et al. (2014). A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673. 10.1126/science.1254642

- Mizrahi A., Hirtzlin T., Fukushima A., Kubota H., Yuasa S., Grollier J., et al. (2018). Neural-like computing with populations of superparamagnetic basis functions. Nat. Commun. 9, 1–11. 10.1038/s41467-018-03963-w

- Neal R. M. (1992). Connectionist learning of belief networks. Artif. Intell. 56, 71–113. 10.1016/0004-3702(92)90065-6

- Nikovski D. (2000). Constructing bayesian networks for medical diagnosis from incomplete and partially correct statistics. IEEE Trans. Knowl. Data Eng. 4, 509–516. 10.1109/69.868904

- Ostwal V., Appenzeller J. (2019). Spin-orbit torque-controlled magnetic tunnel junction with low thermal stability for tunable random number generation. IEEE Magnet. Lett. 10, 1–5. 10.1109/LMAG.2019.2912971

- Ostwal V., Debashis P., Faria R., Chen Z., Appenzeller J. (2018). Spin-torque devices with hard axis initialization as stochastic binary neurons. Sci. Rep. 8, 1–8. 10.1038/s41598-018-34996-2

- Ostwal V., Zand R., DeMara R., Appenzeller J. (2019). A novel compound synapse using probabilistic spin-orbit-torque switching for mtj-based deep neural networks. IEEE J. Explorat. Solid State Comput. Dev. Circ. 5, 182–187. 10.1109/JXCDC.2019.2956468

- Park D.-C. (2016). Image classification using naive bayes classifier. Int. J. Comp. Sci. Electron. Eng. 4, 135–139. Available online at: http://www.isaet.org/images/extraimages/P1216004.pdf

- Parks B., Bapna M., Igbokwe J., Almasi H., Wang W., Majetich S. A. (2018). Superparamagnetic perpendicular magnetic tunnel junctions for true random number generators. AIP Adv. 8:055903. 10.1063/1.5006422

- Pearl J. (2014). Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Elsevier. Available online at: https://www.elsevier.com/books/probabilistic-reasoning-in-intelligent-systems/pearl/978-0-08-051489-5

- Pervaiz A. Z., Ghantasala L. A., Camsari K. Y., Datta S. (2017). Hardware emulation of stochastic p-bits for invertible logic. Sci. Rep. 7:10994. 10.1038/s41598-017-11011-8

- Pervaiz A. Z., Sutton B. M., Ghantasala L. A., Camsari K. Y. (2018). Weighted p-bits for fpga implementation of probabilistic circuits. IEEE Trans. Neural Netw. Learn. Syst. 30, 1920–1926. 10.1109/TNNLS.2018.2874565

- Premebida C., Faria D. R., Nunes U. (2017). Dynamic bayesian network for semantic place classification in mobile robotics. Auton. Robots 41, 1161–1172. 10.1007/s10514-016-9600-2

- Pufall M. R., Rippard W. H., Kaka S., Russek S. E., Silva T. J., Katine J., et al. (2004). Large-angle, gigahertz-rate random telegraph switching induced by spin-momentum transfer. Phys. Rev. B 69:214409. 10.1103/PhysRevB.69.214409

- Querlioz D., Bichler O., Vincent A. F., Gamrat C. (2015). Bioinspired programming of memory devices for implementing an inference engine. Proc. IEEE 103, 1398–1416. 10.1109/JPROC.2015.2437616

- Rish I., Brodie M., Ma S., Odintsova N., Beygelzimer A., Grabarnik G., et al. (2005). Adaptive diagnosis in distributed systems. IEEE Trans. Neural Netw. 16, 1088–1109. 10.1109/TNN.2005.853423

- Roberts G. O., Sahu S. K. (1997). Updating schemes, correlation structure, blocking and parameterization for the gibbs sampler. J. R. Stat. Soc. Ser. B 59, 291–317. 10.1111/1467-9868.00070

- Russell S. J., Norvig P. (2016). Artificial Intelligence: A Modern Approach. Pearson Education Limited. Available online at: https://www.pearson.com/us/higher-education/product/Norvig-Artificial-Intelligence-A-Modern-Approach-Subscription-3rd-Edition/9780133001983.html

- Shim Y., Chen S., Sengupta A., Roy K. (2017). Stochastic spin-orbit torque devices as elements for bayesian inference. Sci. Rep. 7:14101. 10.1038/s41598-017-14240-z

- Strukov D., Indiveri G., Grollier J., Fusi S. (2019). Building brain-inspired computing. Nat. Commun. 10. 10.1038/s41467-019-12521-x

- Sun S., Zhang C., Yu G. (2006). A bayesian network approach to traffic flow forecasting. IEEE Trans. Intell. Transport. Syst. 7, 124–132. 10.1109/TITS.2006.869623

- Sutton B., Camsari K. Y., Behin-Aein B., Datta S. (2017). Intrinsic optimization using stochastic nanomagnets. Sci. Rep. 7:44370. 10.1038/srep44370

- Sutton B., Faria R., Ghantasala L. A., Jaiswal R., Camsari K. Y., Datta S. (2020). Autonomous probabilistic coprocessing with petaflips per second. IEEE Access. 157238–157252. 10.1109/ACCESS.2020.3018682

- Thakur C. S., Afshar S., Wang R. M., Hamilton T. J., Tapson J., Van Schaik A. (2016). Bayesian estimation and inference using stochastic electronics. Front. Neurosci. 10:104. 10.3389/fnins.2016.00104

- Ticknor J. L. (2013). A bayesian regularized artificial neural network for stock market forecasting. Expert Syst. Appl. 40, 5501–5506. 10.1016/j.eswa.2013.04.013

- Torunbalci M. M., Upadhyaya P., Bhave S. A., Camsari K. Y. (2018). Modular compact modeling of MTJ devices. IEEE Trans. Electron Dev. 65, 4628–4634. 10.1109/TED.2018.2863538

- Tylman W., Waszyrowski T., Napieralski A., Kamiński M., Trafidło T., Kulesza Z., et al. (2016). Real-time prediction of acute cardiovascular events using hardware-implemented bayesian networks. Comput. Biol. Med. 69, 245–253. 10.1016/j.compbiomed.2015.08.015

- Vodenicarevic D., Locatelli N., Mizrahi A., Friedman J. S., Vincent A. F., Romera M., et al. (2017). Low-energy truly random number generation with superparamagnetic tunnel junctions for unconventional computing. Phys. Rev. Appl. 8:054045. 10.1103/PhysRevApplied.8.054045

- Vodenicarevic D., Locatelli N., Mizrahi A., Hirtzlin T., Friedman J. S., Grollier J., et al. (2018). “Circuit-level evaluation of the generation of truly random bits with superparamagnetic tunnel junctions,” in 2018 IEEE International Symposium on Circuits and Systems (ISCAS) (Florence: IEEE), 1–4. 10.1109/ISCAS.2018.8351771

- Weijia Z., Ling G. W., Seng Y. K. (2007). “PCMOs-based hardware implementation of bayesian network,” in 2007 IEEE Conference on Electron Devices and Solid-State Circuits (Tainan: IEEE), 337–340. 10.1109/EDSSC.2007.4450131

- Zand R., Camsari K. Y., Pyle S. D., Ahmed I., Kim C. H., DeMara R. F. (2018). “Low-energy deep belief networks using intrinsic sigmoidal spintronic-based probabilistic neurons,” in Proceedings of the 2018 on Great Lakes Symposium on VLSI Chicago IL, 15–20. 10.1145/3194554.3194558

- Zermani S., Dezan C., Chenini H., Diguet J.-P., Euler R. (2015). “FPGA implementation of bayesian network inference for an embedded diagnosis,” in 2015 IEEE Conference on Prognostics and Health Management (PHM) (Austin, TX: IEEE), 1–10. 10.1109/ICPHM.2015.7245057

- Zink B. R., Lv Y., Wang J.-P. (2018). Telegraphic switching signals by magnet tunnel junctions for neural spiking signals with high information capacity. J. Appl. Phys. 124:152121. 10.1063/1.5042444

- Zou M., Conzen S. D. (2004). A new dynamic bayesian network (DBN) approach for identifying gene regulatory networks from time course microarray data. Bioinformatics 21, 71–79. 10.1093/bioinformatics/bth463
